XGBoost vs. Random Forest for Caco-2 Permeability Prediction: A Comprehensive Comparison for Drug Discovery


Abstract

Accurately predicting Caco-2 permeability is crucial for assessing intestinal absorption and oral bioavailability of drug candidates. This article provides a detailed comparison of two prominent machine learning algorithms, XGBoost and Random Forest, for this task. Drawing on the latest research, including 2025 studies, we explore the foundational principles, methodological applications, and optimization strategies for both models. We delve into performance validation on industrial datasets, address common challenges like data variability and class imbalance, and synthesize evidence on their comparative predictive power and real-world applicability. Aimed at researchers and drug development professionals, this review offers actionable insights for selecting and implementing the right model to enhance efficiency in early-stage drug discovery.

Caco-2 Permeability and Machine Learning: Establishing the Foundation for Oral Drug Development

The Critical Role of Caco-2 Assays as the 'Gold Standard' for Intestinal Permeability

For decades, the Caco-2 cell model has maintained its status as the gold standard in vitro tool for predicting intestinal drug permeability and absorption. This human colon adenocarcinoma cell line, when cultured on permeable Transwell inserts, spontaneously differentiates into enterocyte-like cells that form polarized monolayers with tight junctions and brush border enzymes, functionally resembling human intestinal epithelium [1] [2]. The model's widespread adoption in pharmaceutical research stems from its well-documented ability to provide reliable permeability data with good correlation to human fraction absorbed values, making it indispensable for Biopharmaceutics Classification System (BCS) categorization and regulatory submissions [2] [3]. Despite the emergence of innovative technologies, Caco-2 assays continue to serve as the benchmark against which new permeability models are validated.

However, this gold standard status exists alongside recognized limitations. Caco-2 cells require extended differentiation periods (7-21 days), exhibit inter-laboratory variability, and lack the full cellular diversity and metabolic competence of the human intestine [2] [3]. These shortcomings have driven both methodological enhancements to the traditional model and the development of complementary approaches, including advanced machine learning algorithms like XGBoost and Random Forest that can predict Caco-2 permeability from chemical structure alone [3] [4].

Caco-2 Model Fundamentals: Strengths and Recognized Limitations

Core Strengths Supporting Gold Standard Status

The Caco-2 model's enduring value lies in several key attributes that make it particularly suitable for drug permeability assessment:

- Predictive Power for Passive Permeability: The model demonstrates exceptional correlation with human intestinal absorption for passively diffused compounds, with compounds having Papp values >1 × 10⁻⁶ cm/s typically showing complete absorption in humans, while those <1 × 10⁻⁷ cm/s are poorly absorbed [2].

- Functional Biological Relevance: Differentiated Caco-2 cells express tight junctions, microvilli, and various transport systems (influx and efflux transporters) that allow investigation of multiple absorption pathways, including passive transcellular/paracellular diffusion and carrier-mediated transport [2].

- Regulatory Acceptance: The model is recognized by regulatory agencies including the FDA as a validated tool for permeability assessment supporting BCS classification and biowaiver requests [2] [3].

- Reproducibility and Standardization: Despite inter-laboratory variability, standardized protocols enable consistent and reproducible results crucial for comparative studies [1].

Established Limitations and Challenges

Despite these strengths, researchers must contend with several well-characterized limitations:

- Limited Metabolic Capability: Caco-2 cells have restricted expression of Phase 1 and Phase 2 metabolic enzymes, particularly cytochrome P450 (CYP) enzymes like CYP3A4, and non-physiological expression of carboxylesterases (CES1/2), leading to incomplete understanding of a drug's metabolic profile [1].

- Lack of Cellular Diversity: The model lacks the diversity of cell types found in native intestine (goblet cells, M-cells, enteroendocrine cells), and does not secrete mucus, which can impact drug absorption predictions [1] [2].

- Extended Culture Time: The required 7-21 day differentiation period creates practical challenges for high-throughput screening and increases contamination risks [5] [3].

- Variable Expression of Transporters: Caco-2 cells may overexpress or underexpress certain transporters compared to human intestine, potentially leading to misprediction of transporter-mediated drug absorption [1] [2].

Table 1: Key Limitations of Conventional Caco-2 Models and Their Implications

| Limitation | Impact on Drug Permeability Assessment | Experimental Consequences |
|---|---|---|
| Limited metabolic enzyme expression | Incomplete understanding of drug metabolism | Underestimation of first-pass metabolism; prodrug activation issues |
| Lack of cellular diversity | Non-physiological barrier environment | Altered absorption for mucus-interacting compounds |
| Extended culture time (7-21 days) | Reduced throughput and increased costs | Limitations in early discovery screening |
| Variable transporter expression | Misclassification of transporter substrates | Potential false positives/negatives in efflux assays |
| Inter-laboratory variability | Challenges in data comparison | Need for internal standards and controls |

Experimental Approaches: From Traditional Assays to Machine Learning

Standard Caco-2 Permeability Assay Protocol

The conventional Caco-2 permeability assay follows a well-established methodology that has been refined over decades of use:

Cell Culture and Seeding: Caco-2 cells are seeded onto porous Transwell inserts at high density (typically 50,000-100,000 cells/cm²) and allowed to differentiate for 7-21 days [2] [3].

Quality Control Checks: Barrier integrity is verified by measuring Transepithelial Electrical Resistance (TEER) values (>300 Ω·cm²) and using paracellular markers like mannitol to ensure monolayer integrity before experiments [5].

Permeability Experimentation: Test compounds are applied to either the apical (for A-B transport) or basolateral (for B-A transport) compartment, with samples taken from the opposite compartment at predetermined time points [2].

Analytical Quantification: Compound concentration in samples is determined using analytical methods (HPLC, LC-MS/MS), and apparent permeability (Papp) is calculated using the standard formula: Papp = (dQ/dt)/(A × C₀), where dQ/dt is the transport rate, A is the membrane surface area, and C₀ is the initial donor concentration [2].

Data Interpretation: Compounds are classified based on permeability thresholds, with Papp >10 × 10⁻⁶ cm/s typically indicating high permeability, and Papp <1 × 10⁻⁶ cm/s indicating low permeability [2].
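The Papp formula and the thresholds above can be sketched in a few lines of Python. This is a minimal illustration, not part of any cited protocol: the function names, the insert area (1.12 cm²), the donor concentration, the transport rate, and the "moderate" label for the in-between range are all assumptions chosen for the example.

```python
def apparent_permeability(dq_dt_nmol_per_s, area_cm2, c0_nmol_per_ml):
    """Papp = (dQ/dt) / (A * C0), returned in cm/s.

    1 mL equals 1 cm^3, so nmol/s divided by (cm^2 * nmol/cm^3) gives cm/s.
    """
    if area_cm2 <= 0 or c0_nmol_per_ml <= 0:
        raise ValueError("area and initial donor concentration must be positive")
    return dq_dt_nmol_per_s / (area_cm2 * c0_nmol_per_ml)


def classify_papp(papp_cm_per_s):
    """Apply the thresholds quoted in the text (high: >10e-6, low: <1e-6 cm/s)."""
    if papp_cm_per_s > 10e-6:
        return "high"
    if papp_cm_per_s < 1e-6:
        return "low"
    return "moderate"  # label for the in-between range is an assumption


# Hypothetical A-B experiment: 1.12 cm^2 insert, 10 uM donor (10 nmol/mL)
papp = apparent_permeability(dq_dt_nmol_per_s=2.24e-4, area_cm2=1.12, c0_nmol_per_ml=10.0)
print(f"Papp = {papp:.2e} cm/s -> {classify_papp(papp)}")
```

Keeping the units explicit in the argument names is a deliberate choice here, since unit mismatches between amount, volume, and area are an easy source of order-of-magnitude errors in Papp.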

Enhanced Caco-2 Models and Modifications

To address limitations of the conventional model, several enhanced approaches have been developed:

- Co-culture Models: Incorporating goblet cells (HT29-MTX) or other intestinal cell types to create more physiologically relevant barriers with mucus production [1] [2].

- Microfluidic Gut-on-Chip Systems: Culturing Caco-2 cells under flow conditions that improve differentiation and barrier function, with some studies showing better correlation to native tissue than traditional Transwells [6].

- Multi-Organ Systems: Fluidically linking Caco-2 models with hepatocyte systems to simulate first-pass metabolism, providing more accurate bioavailability predictions [1].

A 2024 validation study of a Caco-2 microfluidic chip model demonstrated comparable predictive performance to traditional Transwell systems (r² = 0.41-0.79 for chip vs. r² = 0.59-0.83 for Transwell) while offering advantages in physiological relevance [6].

Machine Learning in Caco-2 Permeability Prediction: XGBoost vs. Random Forest

The Shift Toward In Silico Prediction

The pharmaceutical industry increasingly complements experimental approaches with computational models to accelerate early-stage drug discovery. Machine learning algorithms can predict Caco-2 permeability from chemical structure alone, bypassing the time and resource constraints of biological assays [3] [4]. This capability is particularly valuable for virtual screening of large compound libraries before synthesis and experimental testing.

The critical challenge lies in selecting the optimal algorithm and molecular representations for these predictions. Recent research has systematically evaluated various approaches, with XGBoost and Random Forest emerging as two of the most effective algorithms for this task [3] [4].

Performance Comparison: Experimental Data

Multiple recent studies have directly compared XGBoost and Random Forest for Caco-2 permeability prediction:

Table 2: Performance Comparison of XGBoost vs. Random Forest for Caco-2 Permeability Prediction

| Study Context | Dataset Size | XGBoost Performance | Random Forest Performance | Key Findings |
|---|---|---|---|---|
| Industrial validation study [3] | 5,654 compounds | Generally provided better predictions than comparable models | Competitive performance but slightly inferior to XGBoost | XGBoost showed superior predictive power on test sets |
| AutoML benchmarking [4] | 906 compounds (TDC); 9,402 compounds (OCHEM) | Best MAE performance with AutoML framework | Not specified in detail | AutoML-based models outperformed standard implementations |
| Feature representation study [4] | Multiple datasets | Effective with PaDEL, Mordred, and RDKit descriptors | Comparable performance with selected feature sets | 3D descriptors reduced MAE by 15.73% vs. 2D alone |

A comprehensive 2025 study evaluating multiple machine learning algorithms on a large dataset of 5,654 Caco-2 permeability measurements found that XGBoost generally provided better predictions than Random Forest and other comparable models for test sets [3]. The study employed diverse molecular representations including Morgan fingerprints, RDKit 2D descriptors, and molecular graphs, with XGBoost demonstrating consistent advantage across representations.

Another 2025 systematic investigation of molecular representations found that ensemble methods including both XGBoost and Random Forest consistently outperformed deep learning approaches on Caco-2 permeability prediction tasks, particularly with small to medium-sized datasets [4]. This study highlighted the importance of feature selection, with 3D molecular descriptors providing significant performance improvements over 2D representations alone.

Machine Learning Experimental Protocol

For researchers implementing these algorithms, the standard workflow involves:

Data Collection and Curation: Compiling experimental Caco-2 Papp values from public databases (e.g., TDC benchmark with 906 compounds) or proprietary sources [3] [4].

Molecular Featurization: Converting chemical structures into machine-readable features using:

- Fingerprints: Morgan (ECFP), Avalon, ErG, MACCS keys

- Molecular Descriptors: RDKit 2D, PaDEL, Mordred (including 3D descriptors)

- Deep Learning Embeddings: CDDD and other learned representations [4]

Model Training and Validation: Implementing algorithms with appropriate validation strategies (scaffold splitting, cross-validation) to assess generalizability to novel chemical structures [3] [4].

Performance Evaluation: Using metrics including Mean Absolute Error (MAE), Root Mean Square Error (RMSE), R², and Pearson correlation to quantify predictive accuracy [3] [4].
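The evaluation metrics named in the last step can be computed with the standard library alone. The sketch below assumes predictions and measurements are on the same scale (for example log Papp); the function name and the four example values are illustrative, not data from the cited studies.

```python
import math


def regression_metrics(y_true, y_pred):
    """Return MAE, RMSE, R^2 and Pearson r for paired values.

    Assumes at least two pairs and non-constant y_true and y_pred.
    """
    n = len(y_true)
    if n != len(y_pred) or n < 2:
        raise ValueError("need two equally long sequences with at least 2 values")
    errors = [p - t for t, p in zip(y_true, y_pred)]
    mean_t = sum(y_true) / n
    mean_p = sum(y_pred) / n
    ss_res = sum(e * e for e in errors)                 # residual sum of squares
    ss_tot = sum((t - mean_t) ** 2 for t in y_true)     # total sum of squares
    ss_pred = sum((p - mean_p) ** 2 for p in y_pred)
    cov = sum((t - mean_t) * (p - mean_p) for t, p in zip(y_true, y_pred))
    return {
        "mae": sum(abs(e) for e in errors) / n,
        "rmse": math.sqrt(ss_res / n),
        "r2": 1.0 - ss_res / ss_tot,
        "pearson": cov / math.sqrt(ss_tot * ss_pred),
    }


# Illustrative log Papp values (not experimental data)
measured = [-5.0, -5.5, -6.0, -4.5]
predicted = [-5.2, -5.4, -5.7, -4.6]
metrics = regression_metrics(measured, predicted)
print(metrics)
```

Reporting several of these together is useful because R² and Pearson r can look favorable on a wide-ranging test set even when the absolute errors (MAE, RMSE) are too large for compound ranking.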

Research Toolkit: Essential Materials and Methods

Table 3: Essential Research Reagents and Tools for Caco-2 and Computational Permeability Studies

| Tool Category | Specific Examples | Function and Application |
|---|---|---|
| Cell Culture Systems | Caco-2 cell line (ATCC HTB-37), Transwell inserts, TEER measurement equipment | Creating biological barrier models for experimental permeability assessment |
| Analytical Instruments | HPLC, LC-MS/MS systems | Quantifying drug concentrations in permeability samples |
| Molecular Featurization Tools | RDKit, PaDEL, Mordred software | Generating molecular descriptors and fingerprints from chemical structures |
| Machine Learning Frameworks | XGBoost, Scikit-learn (Random Forest), AutoGluon | Implementing predictive algorithms for permeability estimation |
| Benchmark Datasets | TDC Caco2_Wang (906 compounds), OCHEM dataset (9,402 compounds) | Training and validating computational models with experimental data |
| Validation Tools | SHAP analysis, applicability domain assessment, y-randomization | Evaluating model robustness and interpretability |


The Caco-2 permeability assay maintains its gold standard status through decades of validation and regulatory acceptance, despite recognized limitations. While traditional experimental approaches continue to evolve with enhanced models and protocols, machine learning methods—particularly XGBoost and Random Forest—offer powerful complementary approaches for high-throughput prediction.

Current evidence suggests that XGBoost holds a slight performance advantage for Caco-2 permeability prediction tasks, particularly when combined with comprehensive molecular descriptors including 3D features [3] [4]. However, both algorithms significantly outperform deep learning approaches on small to medium-sized datasets typical in pharmaceutical research.

The most effective strategy for modern drug development involves integrating experimental and computational approaches—using machine learning for rapid screening of virtual compound libraries, followed by experimental validation using enhanced Caco-2 models that address specific physiological limitations of the traditional assay. This integrated approach maximizes efficiency while maintaining the physiological relevance necessary for accurate permeability assessment.

The Caco-2 cell model stands as the "gold standard" in vitro tool for predicting the intestinal permeability and absorption of orally administered drug candidates. This human colon adenocarcinoma cell line is favored because, upon differentiation, it exhibits morphological and functional similarities to human enterocytes, forming a monolayer with tight junctions and a brush border that mimics the intestinal epithelial barrier. [7] [8] Its use is recommended by regulatory bodies like the FDA and EMA for classifying compounds under the Biopharmaceutics Classification System (BCS). [3] [9]

Despite its widespread adoption and regulatory endorsement, the traditional Caco-2 permeability assay is fraught with significant challenges that can impede the rapid pace of modern drug discovery. Three core limitations are its prolonged experimental timeline, substantial resource costs, and inherent experimental variability. This guide objectively compares the performance of traditional experimental protocols against a modern computational alternative: machine learning models, with a specific focus on the comparative strengths of XGBoost and Random Forest algorithms.

Quantifying the Traditional Workflow and Its Limitations

The standard Caco-2 assay is a multi-stage, labor-intensive process. A detailed breakdown of its protocol and associated challenges is provided below.

Detailed Experimental Protocol

The following workflow outlines the key steps in a standard Caco-2 permeability assay, highlighting the points that contribute to its time-consuming nature and variability. [8]

Key Challenges in the Wet-Lab Protocol

- Extended Timelines: The most pronounced bottleneck is the 21-day cultivation period required for Caco-2 cells to fully differentiate into an enterocyte-like phenotype. This extended timeline drastically reduces throughput and slows down decision-making in early drug discovery. [3] [7]

- High Costs and Resource Use: The assay requires specialized cell culture facilities, consumables like Transwell inserts, and sophisticated analytical equipment (e.g., LC-MS/MS). Furthermore, it consumes significant quantities of test compounds, which may be scarce in the early stages of development. [8]

- Experimental Variability: The heterogeneity of the Caco-2 cell line itself and differences in laboratory-specific protocols (e.g., passage number, culture conditions) can lead to high variability in permeability measurements. This lack of standardization can compromise the reproducibility and reliability of data across different studies. [10] Ensuring monolayer integrity is critical, and failure to maintain tight junctions (with TEER values typically requiring 300-500 Ω·cm²) can yield unreliable data. [8]

Machine Learning as a Strategic Alternative

In silico methods, particularly machine learning (ML) models, have emerged as powerful tools to overcome the limitations of the biological assay. By leveraging existing chemical data, these models can predict Caco-2 permeability directly from molecular structure, offering a rapid and cost-effective solution for initial compound prioritization.

The In Silico Workflow for Permeability Prediction

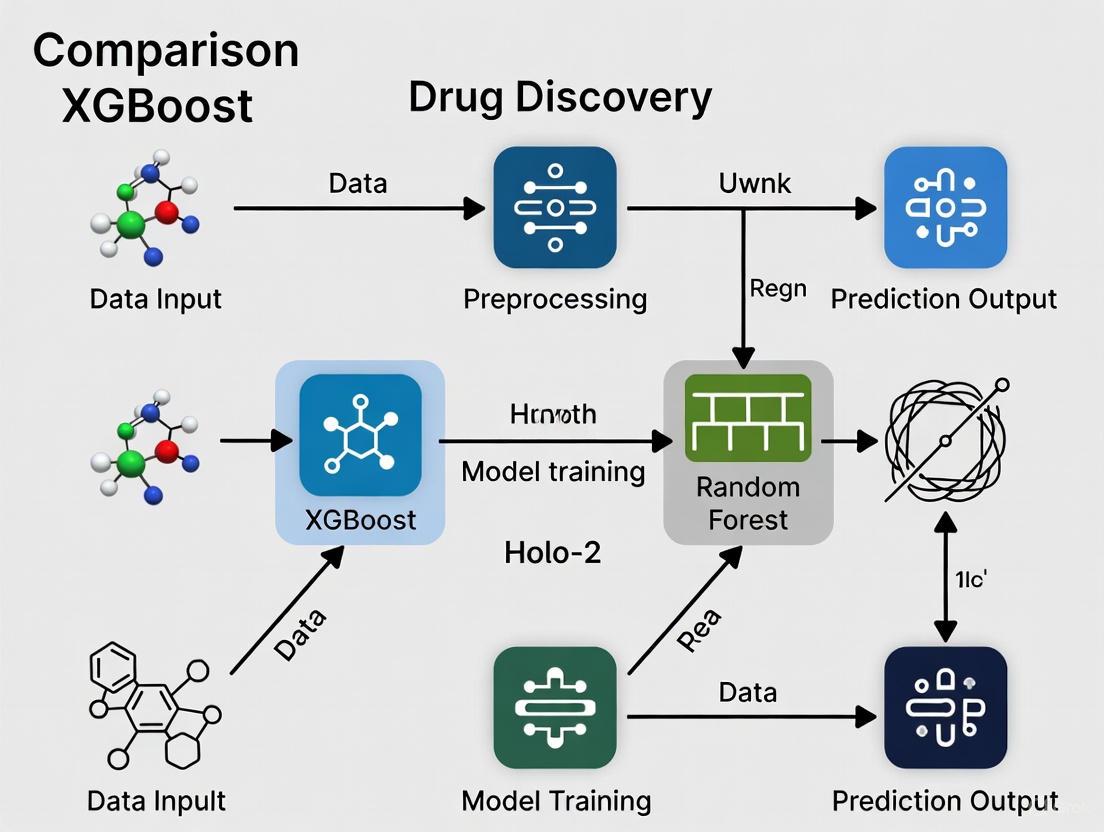

The process of building and applying ML models for Caco-2 prediction involves a structured pipeline from data collection to model deployment, as visualized below.

Performance Comparison: XGBoost vs. Random Forest

Extensive research has been conducted to evaluate the performance of various ML algorithms for Caco-2 permeability prediction. Below, we summarize quantitative data and methodological details that allow for a direct comparison between two of the most prominent ensemble methods: XGBoost and Random Forest (RF).

Table 1: Comparative Performance Metrics of XGBoost and Random Forest Models

| Study Context | Algorithm | Dataset | Key Performance Metrics | Experimental Notes |
|---|---|---|---|---|
| Industrial Validation [3] | XGBoost | Large public dataset (5,654 compounds) & internal validation | Generally provided better predictions than comparable models (RF, GBM, SVM) on test sets. | Combined Morgan fingerprints + RDKit 2D descriptors. Models retained predictive efficacy on internal pharmaceutical industry data. |
| Multiclass Classification [11] | XGBoost | Imbalanced permeability dataset | Best model: Accuracy 0.717; MCC 0.512 | Used ADASYN oversampling to handle class imbalance. SHAP analysis provided model interpretability. |
| Systematic Benchmarking [4] | XGBoost (CaliciBoost) | TDC (906 compounds) & OCHEM (9,402 compounds) | Achieved the best MAE performance among tested models. | AutoML framework (AutoGluon). PaDEL, Mordred, and RDKit descriptors were most effective. Incorporation of 3D descriptors reduced MAE by ~15.7%. |
| Systematic Benchmarking [4] | RF / Other Ensembles | TDC benchmark | Consistently outperformed deep learning models (CNN, GNN) on this medium-sized dataset. | Classical ensemble methods like RF and XGBoost are noted to generalize better than deep learning on small-to-medium-sized Caco-2 datasets. |
| Supervised Recursive Model [10] | Random Forest | Structurally diverse dataset (>4,900 molecules) | Conditional Consensus RF Model: RMSE 0.43-0.51 for all validation sets. | Used supervised recursive algorithms for feature selection. Model was validated for BCS/BDDCS class estimation on 32 ICH drugs. |

- Predictive Accuracy: Across multiple studies and dataset sizes, XGBoost consistently demonstrates a slight edge in predictive accuracy, as evidenced by superior metrics (MAE, Accuracy, MCC) in head-to-head comparisons. [3] [11] [4]

- Handling Data Complexity: XGBoost's built-in regularization techniques make it particularly adept at handling complex, non-linear relationships in permeability data and mitigating overfitting, especially when using high-dimensional molecular descriptors. [4]

- Robustness and Interpretability: Random Forest remains a highly robust and interpretable algorithm. Its ability to provide feature importance and its strong performance, as shown in the development of validated consensus models, make it a reliable and trustworthy choice for many applications. [10]

- Performance on Smaller Datasets: Both algorithms, as classical ensemble methods, have been shown to generalize more effectively than complex deep learning models (e.g., Graph Neural Networks) on the small-to-medium-sized datasets typical of Caco-2 permeability studies. [4]
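The regularization referred to in the list above can be made concrete with the general form of the objective that XGBoost minimizes. This is background on the algorithm as described in the original XGBoost publication, not a detail taken from the cited Caco-2 studies. Here $l$ is the training loss, $f_k$ is the $k$-th tree, $T$ is the number of leaves in a tree, $w_j$ are its leaf weights, and $\gamma$ and $\lambda$ are user-set penalties.

```latex
\mathcal{L} = \sum_{i=1}^{n} l\left(y_i, \hat{y}_i\right) + \sum_{k=1}^{K} \Omega\left(f_k\right),
\qquad
\Omega(f) = \gamma T + \tfrac{1}{2}\,\lambda \sum_{j=1}^{T} w_j^{2}
```

Larger values of $\gamma$ discourage additional leaves and larger values of $\lambda$ shrink leaf weights, which is why the penalty matters most when the number of descriptors is large relative to the number of compounds.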

The Scientist's Toolkit: Essential Research Reagents and Solutions

Table 2: Key Materials and Tools for Caco-2 and In Silico Research

| Item / Solution | Function / Application | Relevance |
|---|---|---|
| Caco-2 Cell Line | Differentiates into enterocyte-like cells to form the intestinal barrier model for permeability testing. | Essential for all in vitro Caco-2 assays. [7] |
| Transwell Inserts | Semi-permeable supports for growing cell monolayers, creating apical and basolateral compartments. | Critical for the experimental setup of the assay. [8] |
| TEER Measurement System | Measures Transepithelial Electrical Resistance to quantitatively assess monolayer integrity. | Quality control for ensuring valid assay results. [8] |
| P-gp Inhibitors (e.g., Verapamil) | Inhibits P-glycoprotein efflux transporter to investigate active transport mechanisms. | For mechanistic studies of transporter-mediated permeability. [8] |
| RDKit | Open-source cheminformatics toolkit for calculating molecular descriptors and fingerprints. | Core component for featurizing molecules in ML workflows. [3] [4] |
| PaDEL/Mordred Descriptors | Software for calculating a comprehensive set of 2D and 3D molecular descriptors. | Provides critical input features for high-performing models. [4] [9] |
| KNIME Analytics Platform | Open-platform for creating automated data science workflows, including QSPR models. | Enables building and deploying reproducible in silico prediction pipelines. [10] |
| SHAP Analysis | Method for interpreting ML model output and understanding feature importance. | Provides critical interpretability for black-box models like XGBoost and RF. [11] [4] |


The challenges of time, cost, and variability associated with the traditional Caco-2 assay are substantial. Machine learning models, particularly advanced ensemble methods like XGBoost and Random Forest, offer a validated and strategic alternative for high-throughput permeability prediction in early drug discovery.

While XGBoost often holds a slight performance advantage in direct comparisons, Random Forest remains a highly robust and interpretable choice. The decision between them may depend on specific project needs: prioritizing maximum predictive power (favoring XGBoost) or valuing extreme robustness and straightforward interpretability (favoring Random Forest).

A synergistic approach is recommended for optimal efficiency: using in silico models as a rapid filter for virtual compound screening and prioritization, followed by targeted in vitro Caco-2 assays for final validation and mechanistic studies of lead compounds. This integrated strategy maximizes the strengths of both worlds, accelerating the drug development pipeline while maintaining scientific rigor.

In drug discovery, predicting intestinal absorption is crucial for assessing oral bioavailability. The Caco-2 cell model, derived from human colon adenocarcinoma cells, has emerged as the gold standard for evaluating drug permeability in vitro due to its morphological and functional similarity to human intestinal enterocytes [3]. This assay measures the apparent permeability (Papp) of compounds across a cell monolayer, providing critical data for the Biopharmaceutics Classification System (BCS) [3]. However, the traditional Caco-2 assay is time-consuming and resource-intensive, requiring 7-21 days for cell differentiation before experimentation can even begin [3]. These practical limitations have driven the development of computational models that can accurately predict Caco-2 permeability, enabling rapid screening of compound libraries during early drug discovery stages.

Quantitative Structure-Property Relationship (QSPR) modeling represents the foundational approach for predicting Caco-2 permeability, establishing mathematical relationships between molecular descriptors and experimental permeability values [12]. With advances in computational power and algorithms, machine learning has dramatically enhanced QSPR capabilities, with XGBoost and Random Forest emerging as particularly effective algorithms for handling the complex, non-linear relationships inherent in permeability data [3] [12] [4]. These models have demonstrated robust predictive performance across diverse chemical spaces, including natural products and novel therapeutic modalities like targeted protein degraders [12] [13].

Key Machine Learning Algorithms

Machine learning algorithms for Caco-2 permeability prediction span traditional methods to advanced deep learning architectures. The selection of an appropriate algorithm depends on dataset size, molecular representation, and specific project requirements. Below is a comprehensive comparison of the primary algorithms used in the field.

Table 1: Key Machine Learning Algorithms for Caco-2 Permeability Prediction

| Algorithm | Model Type | Key Advantages | Performance Highlights | Best Applications |
|---|---|---|---|---|
| XGBoost | Ensemble (Gradient Boosting) | Handles class imbalance well; high predictive accuracy | Accuracy: 0.717; MCC: 0.512 (multiclass) [11]; Superior for industrial validation [3] | Multiclass permeability; Imbalanced datasets |
| Random Forest | Ensemble (Bagging) | Robust to overfitting; provides feature importance | R²: 0.73-0.74; RMSE: 0.39-0.40 [12]; Accuracy: 81-91% for PAMPA [14] | General permeability prediction; Feature selection |
| Support Vector Machines | Kernel-based | Effective in high-dimensional spaces | R²: 0.73-0.74; RMSE: 0.39-0.40 [12] | Small to medium datasets |
| Message Passing Neural Networks | Deep Learning (Graph-based) | Learns directly from molecular structure | Benefits from multi-task learning [15] [16] | Large datasets; Transfer learning |
| Molecular Attention Transformer | Deep Learning (Attention-based) | Interpretable; captures long-range dependencies | R²: 0.62-0.75 for cyclic peptides [17] | Complex molecules; Interpretability needs |

XGBoost versus Random Forest: A Detailed Comparison

The comparison between XGBoost and Random Forest represents a central consideration in modern Caco-2 permeability prediction. Both are ensemble methods but employ fundamentally different approaches. Random Forest utilizes bagging (bootstrap aggregating) to create multiple decision trees from random subsets of the training data and features, then averages their predictions [12]. This approach reduces variance and minimizes overfitting. In contrast, XGBoost employs gradient boosting, which builds trees sequentially where each new tree corrects errors made by previous trees [11] [3]. This often results in higher accuracy but requires more careful parameter tuning.
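The difference between the two ensemble strategies can be illustrated with a toy, standard-library sketch. It uses one-feature decision stumps, so the bagging part omits the random feature subsetting of a real Random Forest, and the boosting part is plain gradient boosting on squared error rather than XGBoost's regularized second-order procedure. All function names, the learning rate, and the sine-curve data are assumptions made for the example.

```python
import math
import random


def fit_stump(xs, ys):
    """Least-squares decision stump (single split) on one feature."""
    pairs = sorted(zip(xs, ys))
    best = None
    for i in range(1, len(pairs)):
        if pairs[i][0] == pairs[i - 1][0]:
            continue  # cannot split between identical x values
        left = [y for _, y in pairs[:i]]
        right = [y for _, y in pairs[i:]]
        left_mean, right_mean = sum(left) / len(left), sum(right) / len(right)
        sse = sum((y - left_mean) ** 2 for y in left) + sum((y - right_mean) ** 2 for y in right)
        if best is None or sse < best[0]:
            best = (sse, (pairs[i - 1][0] + pairs[i][0]) / 2, left_mean, right_mean)
    if best is None:  # all x identical: fall back to the mean
        overall = sum(ys) / len(ys)
        return lambda x: overall
    _, threshold, left_mean, right_mean = best
    return lambda x: left_mean if x <= threshold else right_mean


def bagging(xs, ys, n_trees, rng):
    """Fit independent stumps on bootstrap samples and average their predictions."""
    n = len(xs)
    stumps = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]  # sample with replacement
        stumps.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return lambda x: sum(s(x) for s in stumps) / len(stumps)


def boosting(xs, ys, n_rounds, learning_rate=0.3):
    """Fit stumps sequentially, each one to the residuals left by the previous ones."""
    base = sum(ys) / len(ys)
    preds = [base] * len(xs)
    stumps = []
    for _ in range(n_rounds):
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        preds = [p + learning_rate * stump(x) for p, x in zip(preds, xs)]
    return lambda x: base + learning_rate * sum(s(x) for s in stumps)


def mse(model, xs, ys):
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


xs = [i / 10 for i in range(30)]
ys = [math.sin(x) for x in xs]
bagged = bagging(xs, ys, n_trees=25, rng=random.Random(0))
boost_5 = boosting(xs, ys, n_rounds=5)
boost_50 = boosting(xs, ys, n_rounds=50)
print(mse(bagged, xs, ys), mse(boost_5, xs, ys), mse(boost_50, xs, ys))
```

The training error of the boosted model keeps falling as rounds are added, which is the source of both its accuracy and its need for tuning: without a learning rate, a round limit, or regularization it will eventually fit noise, whereas adding more bagged trees only stabilizes the average.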

Experimental evidence demonstrates that XGBoost frequently achieves slightly superior predictive performance for Caco-2 permeability tasks. In a comprehensive industrial validation study, XGBoost generally provided better predictions than comparable models for test sets [3]. Similarly, for challenging multiclass classification of Caco-2 permeability, XGBoost combined with ADASYN oversampling achieved the best performance with an accuracy of 0.717 and Matthews Correlation Coefficient (MCC) of 0.512 [11]. Regularization guards against overfitting rather than against imbalance itself; the imbalance is addressed by resampling such as ADASYN and, where needed, by instance weighting or customized loss functions, both of which XGBoost supports. This combination makes the algorithm particularly valuable for permeability prediction, where extreme permeability classes are naturally underrepresented.

Random Forest remains highly competitive, especially in scenarios with limited data or when model interpretability is prioritized. Studies have shown Random Forest achieving R² values of 0.73-0.74 and RMSE of 0.39-0.40 for Caco-2 permeability prediction [12]. Because its trees are built independently, training parallelizes easily, which is an advantage for rapid prototyping. For permeability prediction tasks beyond Caco-2, such as PAMPA, Random Forest reached accuracies of 86-91% on external test sets [14], highlighting its general robustness for permeability prediction applications.

Experimental Data and Performance Comparison

Quantitative Performance Metrics

Rigorous evaluation of machine learning models requires multiple performance metrics to assess different aspects of predictive accuracy. The following table summarizes key performance indicators for Caco-2 permeability prediction models across notable studies.

Table 2: Comparative Performance Metrics for Caco-2 Permeability Prediction Models

| Study | Algorithm | Dataset Size | Key Metrics | Evaluation Method |
|---|---|---|---|---|
| Dasgupta et al. 2025 [11] | XGBoost (ADASYN) | Multiclass dataset | Accuracy: 0.717; MCC: 0.512 | Test set validation |
| San Marcos University 2024 [12] | SVM-RF-GBM Ensemble | 1,817 compounds | R²: 0.76; RMSE: 0.38 | Test set validation |
| San Marcos University 2024 [12] | Random Forest | 1,817 compounds | R²: 0.73-0.74; RMSE: 0.39-0.40 | Test set validation |
| CaliciBoost 2025 [4] | AutoML (PaDEL + Mordred 3D) | 9,402 compounds (OCHEM) | MAE: 0.291 (15.73% reduction vs 2D) | Scaffold split |
| CaliciBoost 2025 [4] | AutoML (PaDEL 2D) | 9,402 compounds (OCHEM) | MAE: 0.345 | Scaffold split |
| CPMP Model 2025 [17] | Molecular Attention Transformer | 1,310 compounds | R²: 0.62-0.75 | Test set validation |

Beyond these standard metrics, the Matthews Correlation Coefficient (MCC) is particularly valuable for imbalanced datasets as it provides a more reliable measure of predictive quality than accuracy alone [11]. For regression tasks, the Mean Absolute Error (MAE) and Root Mean Square Error (RMSE) offer complementary insights, with MAE being more robust to outliers while RMSE penalizes larger errors more heavily [12] [4].
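Why MCC is preferred over accuracy on imbalanced data can be seen in a small example. The sketch below implements the multiclass generalization of MCC from class counts (the same definition used by common ML libraries) with the standard library only. The labels and the "always predict the majority class" model are made up for illustration.

```python
import math
from collections import Counter


def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def multiclass_mcc(y_true, y_pred):
    """Multiclass Matthews correlation coefficient computed from class counts."""
    s = len(y_true)                                      # total samples
    c = sum(t == p for t, p in zip(y_true, y_pred))      # correctly predicted
    t_counts = Counter(y_true)                           # true occurrences per class
    p_counts = Counter(y_pred)                           # predicted occurrences per class
    labels = set(t_counts) | set(p_counts)
    cov_tp = c * s - sum(t_counts[k] * p_counts[k] for k in labels)
    cov_tt = s * s - sum(t_counts[k] ** 2 for k in labels)
    cov_pp = s * s - sum(p_counts[k] ** 2 for k in labels)
    denom = math.sqrt(cov_tt * cov_pp)
    return cov_tp / denom if denom else 0.0  # 0.0 when MCC is undefined


# Hypothetical imbalanced labels: 0 = moderate, 1 = low, 2 = high permeability
y_true = [0] * 8 + [1] + [2]
always_majority = [0] * 10

print(accuracy(y_true, always_majority), multiclass_mcc(y_true, always_majority))
print(accuracy(y_true, y_true), multiclass_mcc(y_true, y_true))
```

A model that never predicts the minority classes still reaches 80% accuracy on these labels, while its MCC is zero, which is the behavior that makes MCC the more informative headline metric for skewed permeability classes.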

Impact of Dataset Characteristics on Model Performance

Dataset size and diversity significantly influence model performance and generalizability. Larger datasets like the OCHEM collection (9,402 compounds) enable more robust model training and validation through proper scaffold splitting [4]. The Therapeutics Data Commons (TDC) benchmark, while smaller (906 compounds), provides a standardized evaluation framework with scaffold splits that test model generalization to novel chemical structures [4]. For specialized applications like cyclic peptide permeability prediction, targeted datasets (1,310 compounds for Caco-2) coupled with transfer learning approaches have proven effective [17].

Industrial validation studies reveal that models trained on public data can maintain predictive performance when applied to proprietary pharmaceutical company datasets, though some performance degradation is expected due to domain shift [3]. The incorporation of multi-task learning, where models are trained simultaneously on multiple permeability endpoints (Caco-2, PAMPA, MDCK), has demonstrated improved accuracy by leveraging shared information across related tasks [15] [13]. This approach is particularly valuable for targeted protein degraders and other novel therapeutic modalities where data scarcity presents modeling challenges [13].

Experimental Protocols and Methodologies

Standardized Model Development Workflow

The development of robust machine learning models for Caco-2 permeability prediction follows a systematic workflow encompassing data collection, preprocessing, feature engineering, model training, and validation.

Diagram 1: Experimental workflow for ML model development

Data Collection and Curation: Experimental Caco-2 permeability values are gathered from public databases like OCHEM, TDC, or literature compilations [3] [4]. Permeability measurements are typically converted to logarithmic scale (logPapp) and standardized using tools like RDKit's MolStandardize to achieve consistent tautomer states and neutral forms [3]. Critical curation steps include removing duplicates, handling out-of-bound measurements, and ensuring measurement consistency across different experimental conditions [3] [15].

Feature Engineering and Selection: Molecular representations include (1) 2D/3D descriptors (RDKit, PaDEL, Mordred) capturing physicochemical properties, (2) structural fingerprints (Morgan, MACCS, Avalon) encoding molecular substructures, and (3) learned representations from deep learning models [3] [4]. Feature selection techniques like Recursive Feature Elimination (RFE) and Genetic Algorithms (GA) identify compact descriptor subsets, in one reported case reducing the feature set from 523 to 41 descriptors while preserving predictive performance [12].

Model Training and Validation: Data splitting employs scaffold-based approaches to evaluate generalization to novel chemotypes [4]. Hyperparameter optimization uses cross-validation, with Bayesian optimization emerging as an efficient strategy [4]. Validation includes both internal (cross-validation, Y-randomization) and external testing (temporal validation, independent test sets) [3] [17].

Advanced Modeling Techniques

Handling Class Imbalance: For multiclass permeability classification, addressing class imbalance is crucial. Strategies include oversampling (ADASYN), undersampling, and hybrid approaches, with ADASYN oversampling combined with XGBoost demonstrating superior performance for imbalanced multiclass datasets [11].
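The idea behind ADASYN oversampling can be sketched in a few lines. This is a simplified binary-class illustration written for this guide, not the implementation used in [11]: it assumes at least two minority samples, draws interpolation partners from each point's nearest minority neighbours, and uses hypothetical 2D feature vectors.

```python
import math
import random

def adasyn_binary(X, y, minority=1, k=3, beta=1.0, seed=0):
    """Return synthetic minority samples (ADASYN-style sketch, binary case)."""
    rng = random.Random(seed)
    minority_idx = [i for i, label in enumerate(y) if label == minority]
    n_majority = len(y) - len(minority_idx)
    n_to_generate = (n_majority - len(minority_idx)) * beta

    def nearest(i, candidates):
        others = [j for j in candidates if j != i]
        return sorted(others, key=lambda j: math.dist(X[i], X[j]))[:k]

    # r_i: share of majority-class points among the k nearest neighbours.
    ratios = [sum(y[j] != minority for j in nearest(i, range(len(X)))) / k
              for i in minority_idx]
    total = sum(ratios)
    if total == 0:
        return []  # no minority point sits near the majority class
    synthetic = []
    for i, ratio in zip(minority_idx, ratios):
        partners = nearest(i, minority_idx)
        # Harder-to-learn points (higher r_i) receive more synthetic samples.
        for _ in range(round(ratio / total * n_to_generate)):
            j = rng.choice(partners)
            step = rng.random()
            synthetic.append([a + step * (b - a) for a, b in zip(X[i], X[j])])
    return synthetic

# Hypothetical 2D descriptors: 8 majority and 4 minority compounds.
majority = [[0, 0], [0, 1], [1, 0], [0.5, 0.5], [1.5, 1], [1, 1.5], [0, 0.5], [0.5, 0]]
minority = [[3, 3], [3.2, 3], [3, 3.3], [1, 1]]
X = majority + minority
y = [0] * len(majority) + [1] * len(minority)
new_points = adasyn_binary(X, y)
print(len(new_points), "synthetic minority samples generated")
```

The adaptive part is the weighting by r_i: the minority compound at (1, 1), surrounded by majority compounds, receives more synthetic neighbours than the three that sit in their own cluster.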

Multi-Task Learning: Training single models on multiple permeability endpoints (Caco-2, PAMPA, MDCK) and efflux ratios leverages shared information, improving accuracy compared to single-task models [15] [13]. This approach is particularly effective when augmented with predicted physicochemical properties like pKa and LogD [15].

Transfer Learning and Self-Supervised Learning: For data-scarce scenarios like cyclic peptide permeability, pre-training on large molecular datasets followed by fine-tuning on target tasks improves performance [16] [17]. Contrastive learning with graph neural networks using atom masking augmentation creates robust molecular representations that enhance prediction accuracy [16].

Visualization and Interpretability

Model Interpretation Using SHAP Analysis

Interpretability is crucial for building trust in machine learning predictions and gaining mechanistic insights. SHAP (SHapley Additive exPlanations) analysis has emerged as the standard approach for explaining permeability predictions.

Diagram 2: Model interpretation workflow using SHAP

SHAP analysis quantifies the contribution of each molecular feature to individual predictions, enabling both local and global interpretation [11] [14]. Studies have consistently identified lipophilicity (LogP/LogD), topological polar surface area (TPSA), molecular weight, and hydrogen bonding capacity as key determinants of Caco-2 permeability [11] [4]. For complex models like graph neural networks, atom-attention mechanisms highlight specific molecular substructures influencing permeability, providing structural insights for medicinal chemistry optimization [16].
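To make the attribution idea concrete, the sketch below computes exact Shapley values by enumerating feature coalitions for a toy three-feature model. It is not the `shap` library and not a model from any cited study: the model coefficients, the feature values, and the baseline compound are all hypothetical, and exhaustive enumeration is only practical for a handful of features.

```python
from itertools import combinations
from math import factorial

def shapley_values(model, x, baseline):
    """Exact Shapley values of model(x) relative to model(baseline)."""
    n = len(x)

    def value(subset):
        # Features outside the coalition are set to their baseline value.
        z = [x[i] if i in subset else baseline[i] for i in range(n)]
        return model(z)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for coalition in combinations(others, size):
                members = set(coalition)
                total += weight * (value(members | {i}) - value(members))
        phi.append(total)
    return phi

def toy_model(z):
    """Hypothetical logPapp model with a LogP x HBD interaction term."""
    logp, tpsa, hbd = z
    return -5.0 + 0.4 * logp - 0.01 * tpsa - 0.05 * logp * hbd

feature_names = ["LogP", "TPSA", "HBD"]
baseline = [2.0, 80.0, 2.0]   # hypothetical reference compound
compound = [3.5, 120.0, 1.0]  # hypothetical query compound
phi = shapley_values(toy_model, compound, baseline)
for name, contribution in zip(feature_names, phi):
    print(f"{name}: {contribution:+.3f}")
```

The contributions sum to the difference between the prediction for the query compound and the prediction for the baseline, which is the additivity property that makes local SHAP explanations easy to read.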

Applicability Domain Analysis

Defining the applicability domain is essential for establishing model reliability and identifying when predictions may be unreliable. Methods like Local Outlier Factor (LOF) analysis assess whether new compounds fall within the chemical space represented in the training data [14]. This is particularly important for novel therapeutic modalities like targeted protein degraders, which often occupy distinct regions of chemical space compared to traditional small molecules [13]. Model performance typically degrades for compounds with high molecular weight (>900 Da) and complex structural features that are underrepresented in training data [13].
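A minimal pure-Python sketch of an LOF-based domain check follows. The 2D "descriptor" vectors, the neighbourhood size k, and the cut-off of 1.5 are assumptions chosen for illustration, and the sketch assumes the training set contains no exact duplicate points; the cited study [14] may configure LOF differently.

```python
import math

def knn(point, data, k, skip=None):
    """k nearest neighbours of point in data as (distance, index) pairs."""
    dists = sorted((math.dist(point, other), j)
                   for j, other in enumerate(data) if j != skip)
    return dists[:k]

def lof_scores(train, queries, k=3):
    """Local Outlier Factor of each query relative to the training set."""
    neighbours = [knn(p, train, k, skip=i) for i, p in enumerate(train)]
    k_dist = [nb[-1][0] for nb in neighbours]

    def local_reachability_density(nb):
        reach = [max(k_dist[j], d) for d, j in nb]
        return len(reach) / sum(reach)

    train_lrd = [local_reachability_density(nb) for nb in neighbours]
    scores = []
    for q in queries:
        nb = knn(q, train, k)
        q_lrd = local_reachability_density(nb)
        scores.append(sum(train_lrd[j] for _, j in nb) / (len(nb) * q_lrd))
    return scores

# Hypothetical training compounds on a 3 x 3 grid in a 2D descriptor space.
train = [[x, y] for x in range(3) for y in range(3)]
queries = [[1.2, 0.9], [8.0, 8.0]]  # one inside the grid, one far outside
THRESHOLD = 1.5                     # assumed cut-off, tune per dataset
for q, score in zip(queries, lof_scores(train, queries)):
    status = "inside" if score < THRESHOLD else "outside"
    print(q, f"LOF={score:.2f}", status, "applicability domain")
```

Scores near 1 indicate a compound whose local density matches that of its training neighbours; scores well above 1 flag compounds in sparsely covered regions, where predictions deserve less trust.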

Table 3: Essential Research Resources for Caco-2 Permeability Prediction

| Resource Category | Specific Tools/Services | Key Applications | Performance Considerations |

|---|---|---|---|

| Molecular Descriptors | RDKit, PaDEL, Mordred | Feature calculation for traditional ML | PaDEL & Mordred with 3D descriptors reduce MAE by 15.73% vs 2D [4] |

| Structural Fingerprints | Morgan (ECFP), MACCS, Avalon | Substructure-based representation | Morgan fingerprints widely used with tree-based models [3] [4] |

| Deep Learning Frameworks | ChemProp, D-MPNN, MAT | Graph-based molecular representation | Effective for large datasets; benefit from transfer learning [3] [17] |

| Benchmark Datasets | TDC, OCHEM | Model training and evaluation | OCHEM (9,402 compounds) provides greater statistical power [4] |

| AutoML Platforms | AutoGluon, CaliciBoost | Automated model development | Effective for high-dimensional tabular data [4] |

| Interpretability Tools | SHAP, Atom-Attention Mechanisms | Model explanation and insight generation | Identify dominant molecular features [11] [14] |

The comparison between XGBoost and Random Forest for Caco-2 permeability prediction reveals a nuanced landscape where both algorithms offer distinct advantages. XGBoost demonstrates superior performance for complex modeling scenarios including multiclass classification and imbalanced datasets [11] [3], while Random Forest provides robust performance with less extensive hyperparameter tuning [14] [12]. The optimal algorithm selection depends on specific project requirements, dataset characteristics, and computational resources.

Future directions in Caco-2 permeability prediction include increased integration of multi-task learning across related ADMET endpoints [15] [13], advancement in explainable AI for model interpretation [11] [16], and development of specialized approaches for challenging molecular classes like cyclic peptides and targeted protein degraders [17] [13]. As these methodologies continue to evolve, machine learning models will play an increasingly central role in accelerating drug discovery by providing rapid, accurate predictions of intestinal permeability.

In the field of cheminformatics, the accurate prediction of molecular properties is a critical component of drug discovery and development. Among the various properties assessed, Caco-2 permeability serves as a vital in vitro indicator for estimating the intestinal absorption potential of drug candidates, directly influencing their oral bioavailability [3] [4]. The development of robust computational models to predict this property can significantly enhance the efficiency of the early-stage drug discovery pipeline. For such predictive tasks, ensemble learning algorithms have demonstrated remarkable performance. Two of the most prominent and powerful ensemble methods are Random Forest and XGBoost. This guide provides an objective comparison of these two algorithms, detailing their core principles, relative strengths, and experimental performance specifically within the context of Caco-2 permeability prediction, empowering researchers to make informed methodological choices.

Core Algorithmic Principles

Random Forest: The Power of Bagging

Random Forest is an ensemble learning method that operates on the principle of "bagging" (Bootstrap Aggregating). It constructs a multitude of decision trees during training, introducing randomness in two key ways to ensure the trees are de-correlated and robust [18] [19].

- Bootstrap Sampling: Each tree in the forest is trained on a different random subset of the original training data, sampled with replacement. This means some data points may be repeated, while others may be omitted in any given sample [19] [20].

- Feature Randomness: When splitting a node during the construction of a tree, the algorithm considers only a random subset of the available features (e.g., √p features for classification, where p is the total number of features) [19]. This prevents any single dominant feature from being used in every tree.

For a classification task, the final prediction is determined by a majority vote from all the individual trees. For regression, the final output is the average prediction of all the trees [18] [19] [20]. This aggregation process reduces variance and mitigates the risk of overfitting, which is common with a single decision tree.
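The two sources of randomness and the aggregation step described above can be sketched as follows. This is only an illustration of the bagging mechanics, not a full Random Forest; the sample and feature counts are hypothetical.

```python
import math
import random
from collections import Counter

def bootstrap_indices(n_samples, rng):
    """Draw n_samples row indices with replacement."""
    return [rng.randrange(n_samples) for _ in range(n_samples)]

def random_feature_subset(n_features, rng):
    """Pick floor(sqrt(p)) distinct candidate features for one split."""
    k = max(1, math.isqrt(n_features))
    return rng.sample(range(n_features), k)

def majority_vote(votes):
    """Classification: the most common class label across trees."""
    return Counter(votes).most_common(1)[0][0]

def average(predictions):
    """Regression: the mean prediction across trees."""
    return sum(predictions) / len(predictions)

rng = random.Random(42)
n_samples, n_features = 1000, 100  # hypothetical dataset shape
idx = bootstrap_indices(n_samples, rng)
# Rows never drawn are "out-of-bag"; the expected share approaches 1/e.
oob_fraction = 1 - len(set(idx)) / n_samples
features = random_feature_subset(n_features, rng)
print(f"out-of-bag fraction: {oob_fraction:.3f}")
print("features considered at this split:", sorted(features))
print("vote:", majority_vote(["high", "low", "high"]))
print("mean logPapp:", average([-5.0, -5.4, -5.2]))
```

Because each tree sees a different bootstrap sample and a different feature subset at every split, the trees make partly independent errors, and averaging or voting over them reduces variance.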

XGBoost: The Precision of Boosting

XGBoost (eXtreme Gradient Boosting) is an advanced implementation of the gradient boosting framework. Unlike the bagging approach of Random Forest, boosting is a sequential process where each new model is trained to correct the errors made by the previous ones [21] [22].

- Sequential Correction: The algorithm starts with a simple initial prediction (e.g., the mean of the target variable for regression). It then iteratively adds new decision trees, where each new tree is trained on the residual errors—the differences between the current predictions and the actual target values—of the existing ensemble [21] [22].

- Regularized Objective: A key innovation of XGBoost is its use of a regularized objective function [22] [23]. This function combines a differentiable loss function (e.g., mean squared error) with a regularization term that penalizes the complexity of the trees. The regularization term, defined as $\Omega(f_t) = \gamma T + \frac{1}{2}\lambda \sum_{j=1}^{T} w_j^2$, controls the number of leaves ($T$) and the magnitude of the leaf weights ($w_j$), thus preventing overfitting and promoting simpler models [22].

- Efficiency Optimizations: XGBoost incorporates several computational optimizations, including support for parallel processing, a sparsity-aware algorithm for handling missing data, and a cache-aware access pattern to speed up tree construction [21] [22].
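The sequential-correction and regularization ideas can be illustrated with a toy booster built from one-split trees on a single feature. This is a sketch under stated assumptions, not XGBoost's implementation: it assumes the loss ½(y − ŷ)², for which the regularized leaf weight reduces to the sum of residuals divided by (n + λ), and it uses hypothetical data and hyperparameter values.

```python
def omega(leaf_weights, gamma, lam):
    """Regularization term: gamma * T + 0.5 * lam * sum of squared leaf weights."""
    return gamma * len(leaf_weights) + 0.5 * lam * sum(w * w for w in leaf_weights)

def fit_stump(x, residuals, lam, gamma):
    """Best single split on one feature; returns (threshold, w_left, w_right)."""
    n, total = len(x), sum(residuals)
    root_score = total ** 2 / (n + lam)
    # Fall back to a single leaf unless a split has positive regularized gain.
    best_gain, best = 0.0, (None, total / (n + lam), total / (n + lam))
    for t in sorted(set(x))[:-1]:
        left = [r for xi, r in zip(x, residuals) if xi <= t]
        right = [r for xi, r in zip(x, residuals) if xi > t]
        g_l, g_r = sum(left), sum(right)
        gain = 0.5 * (g_l ** 2 / (len(left) + lam)
                      + g_r ** 2 / (len(right) + lam) - root_score) - gamma
        if gain > best_gain:
            best_gain = gain
            best = (t, g_l / (len(left) + lam), g_r / (len(right) + lam))
    return best

def predict_stump(stump, xi):
    threshold, w_left, w_right = stump
    if threshold is None:
        return w_left
    return w_left if xi <= threshold else w_right

def boost(x, y, n_rounds=50, eta=0.3, lam=1.0, gamma=0.0):
    """Start from the mean, then add stumps fitted to the current residuals."""
    base = sum(y) / len(y)
    pred = [base] * len(y)
    stumps = []
    for _ in range(n_rounds):
        residuals = [yi - pi for yi, pi in zip(y, pred)]
        stump = fit_stump(x, residuals, lam, gamma)
        stumps.append(stump)
        pred = [pi + eta * predict_stump(stump, xi) for pi, xi in zip(pred, x)]
    return base, stumps, pred

def mse(y, pred):
    return sum((yi - pi) ** 2 for yi, pi in zip(y, pred)) / len(y)

# Hypothetical descriptor values and logPapp targets with a step near x = 4.
x = [1, 2, 3, 4, 5, 6, 7, 8]
y = [-6.1, -5.9, -6.0, -6.0, -5.0, -5.1, -4.9, -5.0]
base, stumps, pred = boost(x, y)
print("MSE of initial mean prediction:", mse(y, [base] * len(y)))
print("MSE after boosting:", mse(y, pred))
print("penalty for leaf weights [0.5, -0.5]:", omega([0.5, -0.5], gamma=1.0, lam=2.0))
```

Raising λ shrinks every leaf weight toward zero, and raising γ makes the booster refuse splits whose gain does not pay for the extra leaf, which is how the penalty term translates into simpler trees.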

The following diagram illustrates the fundamental difference in how the two algorithms build their ensembles.

Head-to-Head Algorithmic Comparison

The table below summarizes the fundamental characteristics of Random Forest and XGBoost.

Table 1: Core Algorithmic Comparison

| Feature | Random Forest | XGBoost |

|---|---|---|

| Ensemble Method | Bagging (Bootstrap Aggregating) | Boosting (Gradient Boosting) |

| Tree Relationship | Trees built independently & in parallel | Trees built sequentially, correcting previous errors |

| Training Speed | Generally faster to train (parallelization) | Can be slower due to sequential nature, but optimized |

| Key Strength | Robust against overfitting, handles noisy data | High predictive accuracy, model precision |

| Hyperparameters | Number of trees, features per split, tree depth | Learning rate, number of trees, regularization terms (γ, λ) |

| Handling Missing Data | Implementation-dependent; many implementations require imputation before training | Uses sparsity-aware split finding algorithm [22] |

Performance in Caco-2 Permeability Prediction

Caco-2 permeability prediction is a classic quantitative structure-activity relationship (QSAR) problem in cheminformatics. Researchers typically represent molecules using various feature sets, such as molecular descriptors (e.g., from RDKit, PaDEL, Mordred) or structural fingerprints (e.g., Morgan, MACCS), and then train machine learning models to predict the experimental permeability values [3] [4].

Key Experimental Protocols

To ensure a fair and rigorous comparison, benchmarking studies in the literature generally adhere to the following protocol:

- Data Collection and Curation: A large dataset of compounds with experimentally measured Caco-2 permeability (e.g., apparent permeability, Papp) is collected from public sources like the Therapeutics Data Commons (TDC) or OCHEM [3] [4]. The data is standardized, and duplicates are removed.

- Data Splitting: The dataset is split into training, validation, and test sets. A common robust approach is to use a scaffold split, which ensures that molecules with different core structures are in different splits, thus testing the model's ability to generalize to novel chemotypes [4].

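- Molecular Featurization: Multiple molecular representation methods are employed, including molecular descriptors (e.g., from RDKit, PaDEL, Mordred) and structural fingerprints (e.g., Morgan, MACCS) [3] [4].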

- Model Training and Hyperparameter Optimization: Both Random Forest and XGBoost models are trained. Their hyperparameters are extensively optimized using techniques like grid search or Bayesian optimization to ensure a fair performance comparison [23].

- Evaluation: Model performance is evaluated on the held-out test set using standard regression metrics, including Mean Absolute Error (MAE), Root Mean Square Error (RMSE), and the coefficient of determination (R²).
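As a small worked example of the coefficient of determination used in the evaluation step, the sketch below computes R² as one minus the ratio of residual to total sum of squares. The logPapp values are hypothetical.

```python
def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1 - ss_res / ss_tot

# Hypothetical held-out logPapp values and three sets of predictions.
y_test = [-6.0, -5.5, -5.0, -4.5]
perfect = list(y_test)
mean_only = [sum(y_test) / len(y_test)] * len(y_test)
model_pred = [-5.8, -5.6, -5.1, -4.6]

print("perfect model:", r_squared(y_test, perfect))
print("always predicting the mean:", r_squared(y_test, mean_only))
print("hypothetical model:", r_squared(y_test, model_pred))
```

An R² of 0 means the model does no better than predicting the test-set mean, which is a useful reference point when comparing scaffold-split results that can look modest in absolute terms.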

Comparative Performance Data

Recent, rigorous studies provide quantitative evidence for the performance of both algorithms in this domain.

Table 2: Experimental Performance in Caco-2 Permeability Modeling

| Study Context | Algorithm | Reported Performance | Key Finding |

|---|---|---|---|

| Industrial Validation (2025) [3] | XGBoost | Generally provided better predictions for the test sets. | Boosting models (including XGBoost) retained predictive efficacy when applied to internal pharmaceutical industry data. |

| Industrial Validation (2025) [3] | Random Forest | Competitive but generally lower than XGBoost. | |

| Large-Scale QSAR Benchmark (2023) [23] | XGBoost | Generally the best predictive performance across 16 datasets and 94 endpoints. | XGBoost's regularized objective function provides superior generalization. |

| Large-Scale QSAR Benchmark (2023) [23] | Random Forest | Strong and robust performance. | |

| ADMET Prediction (2022) [24] | XGBoost | Prediction accuracy for Caco-2: 94.0% (Highest among tested methods). | Outperformed SVM, RF, KNN, LDA, and NB in predicting ADMET properties. |

| ADMET Prediction (2022) [24] | Random Forest | Lower accuracy than XGBoost. | |

The experimental data indicate that XGBoost often holds a slight edge in predictive accuracy for Caco-2 permeability tasks. This is attributed to its sequential error correction and built-in regularization, which allow it to capture complex, non-linear relationships in the data without overfitting. However, Random Forest remains a highly robust and competitive algorithm, and its performance is often very close to that of XGBoost.

The Scientist's Toolkit: Essential Research Reagents

Building a predictive model for Caco-2 permeability requires a suite of computational "reagents." The table below lists key resources and their functions.

Table 3: Essential Tools and Resources for Caco-2 Model Development

| Tool / Resource | Type | Primary Function | Relevance to Caco-2 Research |

|---|---|---|---|

| RDKit [3] [4] | Cheminformatics Library | Calculates 2D molecular descriptors and fingerprints. | Extracts features like molecular weight, TPSA, and LogP, critical for permeability prediction. |

| PaDEL & Mordred [4] | Molecular Descriptor Software | Generates comprehensive sets of 2D and 3D molecular descriptors. | Provides a large feature space for models to learn from; 3D descriptors can improve performance. |

| Therapeutics Data Commons (TDC) [4] | Data Repository | Provides curated datasets for drug discovery, including Caco-2 permeability. | Offers a standardized benchmark dataset for model training and comparison. |

| AutoGluon [4] | AutoML Framework | Automates model selection, hyperparameter tuning, and ensemble creation. | Streamlines the development of high-performance models by combining algorithms like XGBoost, RF, etc. |

| XGBoost / scikit-learn RF | Algorithm Library | Provides optimized implementations of the ML algorithms. | Core libraries for building and training the predictive models. |

Both Random Forest and XGBoost are powerful, ensemble-based algorithms that are well-suited for the challenges of cheminformatics, including Caco-2 permeability prediction. Random Forest, with its bagging approach, is a remarkably robust and straightforward method that is less prone to overfitting and faster to train. XGBoost, through its sequential boosting and sophisticated regularization, often achieves marginally higher predictive accuracy at the cost of increased computational complexity and the need for more careful hyperparameter tuning.

The choice between them is not always straightforward. For a quick, robust baseline model, Random Forest is an excellent choice. When the goal is maximal predictive performance, for example in a benchmarking study, the evidence suggests that investing the time to properly tune an XGBoost model is often rewarded. Ultimately, the specific nature of the dataset and the strategic goals of the research project should guide the selection.

Building Predictive Models: A Practical Guide to Implementing XGBoost and Random Forest

In the field of drug discovery, predicting the intestinal permeability of potential drug candidates is a critical step in assessing their oral bioavailability. The Caco-2 cell line, derived from human colon adenocarcinoma, has emerged as the "gold standard" in vitro model for this purpose due to its morphological and functional similarity to human intestinal epithelial cells [3]. However, experimental assessment of Caco-2 permeability is time-consuming, requiring extended culturing periods of 7-21 days for full differentiation, which increases costs and risks of contamination [3]. These challenges have accelerated the adoption of in silico approaches, particularly machine learning (ML) models, for reliable permeability prediction in the early stages of drug development.

Among various ML algorithms, XGBoost and Random Forest (RF) have demonstrated particularly promising results in cheminformatics and drug discovery applications. These ensemble methods offer robust performance in handling complex, high-dimensional chemical data and can effectively model non-linear relationships between molecular structures and permeability properties. The performance of these algorithms, however, is highly dependent on the quality and composition of the training data, making proper data curation and preparation essential components of successful model development [3] [11] [4].

This guide provides a comprehensive comparison of XGBoost and Random Forest for Caco-2 permeability prediction, with particular emphasis on data curation strategies and preparation methodologies for handling large, augmented datasets from public sources. We summarize quantitative performance metrics across multiple studies, detail experimental protocols for dataset construction, and provide practical resources for researchers developing predictive models in this domain.

Performance Comparison: XGBoost vs. Random Forest

Evaluation across multiple independent studies reveals a consistent performance trend between XGBoost and Random Forest algorithms for Caco-2 permeability prediction. The table below summarizes key quantitative metrics from recent investigations:

Table 1: Performance comparison of XGBoost and Random Forest across recent Caco-2 permeability studies

| Study & Context | Best Algorithm | Key Performance Metrics | Dataset Size & Type | Data Curation Approach |

|---|---|---|---|---|

| Dasgupta et al. (2025) Multiclass Classification [11] | XGBoost | Accuracy: 0.717, MCC: 0.512 | Not specified; Multiclass | ADASYN oversampling for class imbalance |

| CaliciBoost (2025) Systematic Benchmarking [4] | XGBoost (via AutoML) | Best MAE performance | TDC: 906 compounds; OCHEM: 9,402 compounds | Standardization, scaffold splitting |

| Jiang et al. (2025) Cyclic Peptides [17] | MAT Deep Learning Model (XGBoost not tested) | R²: 0.62-0.75 for cell permeability | Caco-2: 1,310 cyclic peptides | Train/validation/test split (8:1:1) |

| Permeability Prediction with Molecular Representations [3] | XGBoost | Superior predictions on test sets | Combined dataset: 5,654 compounds | Duplicate removal (SD ≤ 0.3), train/validation/test split (8:1:1) |

| Interpretable PAMPA Prediction [14] | Random Forest | Accuracy: 0.91 on external test set | 5,447 compounds | Random splitting, applicability domain analysis |

Across the Caco-2 studies in which it was evaluated, XGBoost was the best-performing algorithm, which suggests a particular strength for this task. In one comprehensive validation study, XGBoost generally provided better test-set predictions than comparable models, including Random Forest [3]. Similarly, in multiclass classification tasks addressing class imbalance, XGBoost achieved the best performance when combined with appropriate balancing strategies like ADASYN oversampling [11].

Random Forest, while consistently demonstrating strong performance, typically ranked slightly below XGBoost in head-to-head comparisons. However, it remains a highly competitive algorithm, particularly valued for its robustness and interpretability. In one study focused on PAMPA permeability (a related assay), Random Forest achieved the highest accuracy (91%) on an external test set among all compared models [14].

Data Curation and Preparation Protocols

Data Collection and Augmentation Strategies

The foundation of any reliable predictive model is a comprehensive, high-quality dataset. Researchers have employed various strategies for assembling Caco-2 permeability datasets:

Multi-source Data Integration: One prominent approach combines data from multiple publicly available sources to create large, augmented datasets. One study integrated data from three public datasets, resulting in an initial collection of 7,861 compounds [3]. After rigorous curation, this was refined to 5,654 non-redundant Caco-2 permeability records.

Standardized Permeability Values: To ensure consistency across different sources, researchers convert permeability measurements to standardized units (cm/s × 10^(-6)) and apply logarithmic transformation (base 10) for modeling [3].

Industrial Dataset Validation: To assess real-world applicability, some studies incorporate proprietary industrial datasets as external validation sets. For example, one study used Shanghai Qilu's in-house collection of 67 compounds to test model transferability from public to proprietary data [3].
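The unit standardization and log transformation described above amount to a short calculation. In the sketch below, the unit labels and the example values are hypothetical; the conversion factors follow directly from 1 nm/s = 10^(-7) cm/s.

```python
import math

# Multipliers that express a Papp value in units of 1e-6 cm/s.
# The unit labels are hypothetical; adapt them to the source databases.
TO_1E6_CM_PER_S = {
    "cm/s": 1e6,        # 1 cm/s = 1e6 x (1e-6 cm/s)
    "1e-6 cm/s": 1.0,
    "nm/s": 0.1,        # 1 nm/s = 1e-7 cm/s
}

def log_papp(value, unit):
    """log10 of apparent permeability expressed in 1e-6 cm/s."""
    return math.log10(value * TO_1E6_CM_PER_S[unit])

# The same hypothetical measurement reported in three different units.
for value, unit in [(2.5e-5, "cm/s"), (25.0, "1e-6 cm/s"), (250.0, "nm/s")]:
    print(f"{value} {unit} -> logPapp = {log_papp(value, unit):.3f}")
```

Converting before taking the logarithm ensures that records merged from different sources land on the same scale, so that differences between duplicate entries reflect experimental variability rather than units.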

Data Cleaning and Standardization

Robust data cleaning protocols are essential for handling aggregated datasets:

Duplicate Handling: For duplicate entries, researchers typically calculate mean values and standard deviations, retaining only entries with standard deviation ≤ 0.3 and using mean values as standards for model training [3].

Molecular Standardization: The RDKit module MolStandardize is commonly employed for molecular standardization to achieve consistent tautomer canonical states and final neutral forms while preserving stereochemistry [3].

Outlier Removal: In some workflows, out-of-bound measurements (e.g., values exceeding the quantifiable range indicated as "lower than X" or "greater than X") are excluded after removing the qualifiers [15].
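The duplicate-handling rule above (average replicate values, keep only compounds whose standard deviation is at most 0.3) can be sketched as follows. The records are hypothetical, the keys are assumed to be already-standardized SMILES, and the use of the sample standard deviation with single measurements kept as-is is an assumption, since the source does not specify these details.

```python
from collections import defaultdict
from statistics import mean, stdev

def aggregate_duplicates(records, max_sd=0.3):
    """Collapse replicate logPapp values per structure, dropping noisy ones."""
    grouped = defaultdict(list)
    for smiles, value in records:
        grouped[smiles].append(value)
    curated = {}
    for smiles, values in grouped.items():
        # Assumption: a single measurement has no spread and is kept.
        sd = stdev(values) if len(values) > 1 else 0.0
        if sd <= max_sd:
            curated[smiles] = mean(values)
    return curated

# Hypothetical (standardized SMILES, logPapp) records from merged sources.
records = [
    ("CCO", 1.20), ("CCO", 1.30),            # consistent replicates
    ("c1ccccc1", 0.40), ("c1ccccc1", 1.60),  # conflicting replicates
    ("CC(=O)O", 0.85),                       # single measurement
]
curated = aggregate_duplicates(records)
for smiles, value in curated.items():
    print(smiles, round(value, 3))
```

Grouping only makes sense after molecular standardization, because the same compound drawn from two sources may otherwise appear under different SMILES strings and escape the duplicate check.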

Dataset Splitting Strategies

The method used to split data into training, validation, and test sets significantly impacts model performance evaluation:

Random Splitting: Simple random splitting is commonly used, with one study employing an 8:1:1 ratio for training, validation, and test sets respectively [3]. To enhance robustness against partitioning variability, the dataset may undergo multiple splits using different random seeds, with model assessment based on average performance across independent runs [3].

Scaffold Splitting: This approach groups compounds by molecular scaffold and assigns each group to a single split, so that compounds sharing a core structure never appear in both training and test sets. This provides a more challenging and realistic assessment of a model's ability to generalize to novel chemotypes [4].

Temporal Splitting: Some studies implement time-based splits where earlier data is used for training and later data for testing, simulating real-world deployment scenarios where models predict properties for newly synthesized compounds [15].
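The random and scaffold-based strategies above can be sketched in plain Python. The scaffold keys here are hypothetical labels assumed to be precomputed (for instance from Murcko scaffolds), and the greedy largest-group-first assignment is one common convention rather than the procedure of any specific cited study.

```python
import random
from collections import defaultdict

def random_split(items, seed, fractions=(0.8, 0.1, 0.1)):
    """Shuffle with a fixed seed, then cut into train/validation/test."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = int(fractions[0] * len(shuffled))
    n_valid = int(fractions[1] * len(shuffled))
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_valid],
            shuffled[n_train + n_valid:])

def scaffold_split(scaffolds, fractions=(0.8, 0.1, 0.1)):
    """Assign whole scaffold groups to splits; returns lists of indices."""
    groups = defaultdict(list)
    for idx, key in enumerate(scaffolds):
        groups[key].append(idx)
    # Largest groups first, so rare scaffolds tend to land in validation/test.
    ordered = sorted(groups.values(), key=len, reverse=True)
    n = len(scaffolds)
    train, valid, test = [], [], []
    for group in ordered:
        if len(train) + len(group) <= fractions[0] * n:
            train.extend(group)
        elif len(valid) + len(group) <= fractions[1] * n:
            valid.extend(group)
        else:
            test.extend(group)
    return train, valid, test

# Repeating the random split with several seeds supports averaged assessment.
compound_ids = list(range(10))
for seed in (0, 1, 2):
    tr, va, te = random_split(compound_ids, seed)
    print(f"seed {seed}: {len(tr)}/{len(va)}/{len(te)} compounds")

# Hypothetical scaffold labels for 20 compounds.
scaffolds = ["A"] * 10 + ["B"] * 6 + ["C"] * 2 + ["D"] + ["E"]
train_idx, valid_idx, test_idx = scaffold_split(scaffolds)
print("test scaffolds:", sorted({scaffolds[i] for i in test_idx}))
```

With a scaffold split the exact 8:1:1 ratio is only approximate, because groups are never broken up; that trade-off is what guarantees the test scaffolds are unseen during training.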

Table 2: Essential data preparation tools and their applications in Caco-2 permeability prediction

| Tool/Resource | Primary Function | Application in Caco-2 Research | Key Features |

|---|---|---|---|

| RDKit | Cheminformatics toolkit | Molecular standardization, fingerprint generation, descriptor calculation | Open-source, MolStandardize module, Morgan fingerprints |

| PaDEL | Molecular descriptor calculation | Generation of 2D and 3D molecular descriptors | Extensible, includes fingerprint patterns, command-line interface |

| Mordred | Molecular descriptor calculator | Comprehensive descriptor calculation (1D, 2D, 3D) | 1,826 descriptors, parallel computation, Python API |

| AutoGluon | Automated Machine Learning | Model selection and hyperparameter optimization | Automatic feature preprocessing, ensemble construction, neural architecture search |

| ChEMBL Structure Pipeline | Molecular standardization | Standardizing SMILES representations | Standardized representation, salt stripping, canonicalization |

Experimental Workflows

The typical workflow for developing Caco-2 permeability prediction models involves multiple interconnected stages, as illustrated in the following diagram:

Molecular Representation Strategies

The choice of molecular representation significantly impacts model performance, with different approaches offering complementary advantages:

Fingerprint-Based Representations: Extended Connectivity Fingerprints (ECFP) like Morgan fingerprints with a radius of 2 and 1024 bits are widely used for capturing molecular substructures [3]. Additional fingerprint types include Avalon, ErG, and MACCS keys, each providing different representations of molecular features [4].

Molecular Descriptors: RDKit 2D descriptors, PaDEL, and Mordred descriptors capture physicochemical properties such as molecular weight, topological polar surface area (TPSA), logP, and hydrogen bond donors/acceptors [3] [4]. Recent studies indicate that incorporating 3D descriptors can reduce MAE by up to 15.73% compared to using 2D features alone [4].

Graph-Based Representations: For deep learning approaches, molecular graphs G=(V,E) serve as foundational representations, where V represents atoms (nodes) and E represents bonds (edges) [3]. The Directed Message Passing Neural Network (D-MPNN) employs a mixed representation combining molecular convolution encoding with traditional descriptors [16].

Advanced Modeling Techniques

Multitask Learning: Recent approaches have explored multitask learning (MTL) to leverage shared information across related permeability endpoints. MTL models trained on combined Caco-2 and MDCK cell line data have demonstrated higher accuracy than single-task approaches by leveraging correlations between different permeability measurements [15].

Handling Class Imbalance: For classification tasks, addressing class imbalance is crucial. Studies have successfully employed balancing strategies including oversampling, undersampling, and hybrid approaches, with ADASYN oversampling combined with XGBoost achieving the best performance in multiclass classification [11].

Automated Machine Learning: AutoML approaches like AutoGluon have shown promising results by automatically selecting optimal algorithms, preprocessing steps, and hyperparameters. These methods are particularly valuable in data-limited prediction tasks and have demonstrated superior performance in systematic benchmarking studies [4].

The Scientist's Toolkit

Table 3: Essential research reagents and computational tools for Caco-2 permeability prediction

| Tool/Resource | Type | Primary Function | Application Example |

|---|---|---|---|

| RDKit | Software Library | Cheminformatics & ML | Molecular standardization, fingerprint generation [3] |

| PaDEL Descriptors | Molecular Descriptors | Feature Extraction | 2D/3D descriptor calculation for QSAR [4] |

| Mordred Descriptors | Molecular Descriptors | Feature Extraction | Comprehensive 1D/2D/3D descriptor calculation [4] |

| AutoGluon | AutoML Framework | Model Selection & Optimization | Automated pipeline creation for Caco-2 prediction [4] |

| Chemprop | Deep Learning Library | Message Passing Neural Networks | Graph-based property prediction [15] |

| SHAP | Interpretation Tool | Model Explainability | Feature importance analysis [11] [4] |

| OCHEM Database | Data Repository | Experimental Permeability Data | Source of curated Caco-2 measurements [4] |

| TDC Benchmark | Benchmarking Suite | Dataset & Evaluation | Standardized performance assessment [4] |

The comprehensive comparison of XGBoost and Random Forest for Caco-2 permeability prediction reveals XGBoost's consistent performance advantage across multiple studies and dataset configurations. However, both algorithms demonstrate robust predictive capabilities when paired with appropriate data curation protocols and molecular representations. The effectiveness of both algorithms is fundamentally dependent on rigorous data preparation, including multi-source dataset integration, careful handling of duplicates, molecular standardization, and appropriate dataset splitting strategies.

Future directions in the field point toward increased use of multitask learning to leverage information across related permeability assays, enhanced model interpretability through SHAP analysis and similar methods, and the development of more sophisticated deep learning architectures that effectively integrate diverse molecular representations. As these computational approaches continue to evolve, they offer the promise of significantly accelerating early-stage drug discovery by providing rapid, reliable predictions of intestinal permeability, ultimately contributing to more efficient development of orally bioavailable therapeutics.

The accurate prediction of Caco-2 permeability represents a critical challenge in modern drug discovery, serving as a key indicator for estimating oral absorption and bioavailability of potential drug candidates. Within this field, the selection of molecular representation (how chemical structures are converted into computationally readable data) has emerged as a factor as important as the choice of machine learning algorithm itself. Molecular representations form the fundamental input that machine learning models use to learn the complex relationships between chemical structure and permeability behavior. Despite the emergence of sophisticated deep learning approaches, traditional expert-based representations continue to demonstrate remarkable effectiveness, with recent comprehensive studies showing that several molecular feature representations work similarly well across benchmark datasets, though each carries distinct advantages and limitations [25].

This comparative analysis examines the predominant molecular representation strategies used in Caco-2 permeability prediction, with a specific focus on their performance when employed with two of the most prevalent algorithms in cheminformatics: XGBoost and Random Forest. The representations evaluated span structural fingerprints, traditional molecular descriptors (1D, 2D, and 3D), and molecular graphs used with graph neural networks. By synthesizing evidence from recent benchmarking studies, this guide provides researchers with evidence-based recommendations for selecting optimal molecular representations for permeability modeling tasks, with particular attention to the interplay between representation choice and algorithm performance.

Comparative Performance Analysis of Molecular Representations

Quantitative Performance Metrics Across Representations

Table 1: Performance comparison of molecular representations with different machine learning algorithms for Caco-2 permeability prediction

| Molecular Representation | Best-Performing Algorithm | Reported Performance Metrics | Key Advantages | Limitations |
|---|---|---|---|---|
| Morgan Fingerprints (ECFP) | XGBoost | AUROC: 0.828 [26]; Competitive MAE in Caco-2 prediction [27] | Captures topological patterns; Excellent with tree-based models; Computational efficiency | Limited 3D structural information; May miss physicochemical properties |
| 2D Molecular Descriptors | XGBoost | Superior to fingerprints for ADME-Tox targets [28]; Well-suited for physical properties [25] | Direct encoding of physicochemical properties; Interpretability; Comprehensive molecular characterization | Feature selection often required; May not capture complex structural patterns |
| 3D Molecular Descriptors | Neural Networks/XGBoost | 15.73% MAE reduction vs. 2D alone [27]; Superior generalizability across scaffolds [29] | Captures stereochemistry and conformation; Meaningful feature extraction for permeability [29] | Conformational dependence; Computational intensity; Sensitivity to alignment |
| Molecular Graphs (MPNN) | Graph Neural Networks | Competitive with literature models [29] [16]; Enhanced by multitask learning [15] | No predefined features needed; Direct structure learning; Captures atomic interactions | Data hunger; Computational intensity; Limited interpretability |
| MACCS Fingerprints | Random Forest/XGBoost | Strong overall performance [25]; Good baseline representation | Simplicity; Interpretability; Computational efficiency | Limited resolution; Less discriminative power |

Algorithm-Specific Performance Patterns

The interaction between molecular representation and algorithm choice reveals consistent patterns across studies. For tree-based methods like XGBoost and Random Forest, traditional 2D descriptors and structural fingerprints typically deliver superior performance. In comprehensive comparisons of descriptor- and fingerprint-sets for ADME-Tox targets, traditional 1D, 2D, and 3D descriptors showed clear superiority when used with XGBoost, with 2D descriptors producing better models for almost every dataset than the combination of all examined descriptor sets [28]. This advantage extends to Caco-2 prediction, where XGBoost generally provided better predictions than comparable models across different molecular representations [30].

For neural network architectures, molecular graphs and learned representations demonstrate increasing competitiveness. The atom-attention Message Passing Neural Network (AA-MPNN) combined with contrastive learning has shown significant improvements in predicting BBB and Caco-2 permeability by focusing on critical substructures within the molecular graph [16]. Similarly, 3D neural networks (3D-NN) can independently extract more meaningful features for permeability tasks, achieving superior generalizability across scaffolds and performing competitively with task-specific literature models [29].

Representation Combinations and Complementarity

A notable finding across multiple studies is that combining different molecular feature representations typically does not yield significant improvements over individual representations, which suggests that the information they carry is largely redundant rather than complementary [25]. However, strategic combinations can be beneficial in specific contexts. Adding 3D descriptors to 2D features reduced the Mean Absolute Error (MAE) for Caco-2 prediction by 15.73% compared with using 2D features alone [27]. Similarly, augmenting graph neural networks with physicochemical features such as pKa and LogD has been shown to improve the accuracy of both permeability and efflux endpoints [15].

Experimental Protocols and Methodologies

Standardized Workflow for Representation Comparison

Table 2: Key research reagents and computational tools for molecular representation

| Research Reagent/Tool | Function | Application Context |
|---|---|---|
| RDKit | Open-source cheminformatics; Fingerprint and descriptor generation | Standardized molecular representation; Feature calculation [28] [30] [26] |
| PaDEL Descriptors | Molecular descriptor calculation | Comprehensive 1D-3D descriptor generation [27] [25] |
| Mordred Descriptors | Molecular descriptor calculation | High-dimensional descriptor generation [27] |
| ChemProp | Message Passing Neural Networks | Molecular graph implementation [30] [15] |
| Schrödinger Suite | Molecular modeling and geometry optimization | 3D structure preparation [28] |
| OpenBabel | Format conversion and charge assignment | Molecular file preparation [31] |

Diagram 1: Experimental workflow for comparing molecular representations in Caco-2 permeability prediction

Dataset Curation and Preparation Standards

High-quality dataset curation forms the foundation of reliable permeability prediction models. The standard protocol involves collecting Caco-2 permeability measurements from public databases such as DrugBank, followed by rigorous standardization. This process includes: (1) converting permeability measurements to consistent units (10⁻⁶ cm/s) and applying a base-10 logarithmic transformation; (2) removing entries with missing permeability values; (3) calculating the mean and standard deviation for duplicate entries and retaining only those with a standard deviation ≤ 0.3; and (4) applying RDKit's MolStandardize to obtain consistent canonical tautomers and neutral forms while preserving stereochemistry [30]. After curation, datasets are typically divided at random into training, validation, and test sets in an 8:1:1 ratio so that the three subsets follow similar distributions. To reduce sensitivity to any single partition, the dataset is often split several times with different random seeds, and models are assessed on their average performance across the independent runs [30].
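The curation steps above can be sketched in a few lines of standard-library Python. This is a minimal illustration under stated assumptions, not the code used in the cited study: the function names `curate` and `split_8_1_1` are hypothetical, the input is assumed to be Papp in cm/s keyed by an already standardized SMILES string (the RDKit standardization of step 4 is not shown), and the standard deviation is assumed to be the sample SD of the log-transformed values.

```python
import math
import random
import statistics
from collections import defaultdict


def curate(records, max_sd=0.3):
    """Return {smiles: mean log10(Papp in 1e-6 cm/s)} for entries passing the filters.

    `records` is an iterable of (smiles, papp_cm_per_s) pairs; papp may be None.
    Assumption: SD is the sample SD computed on the log-transformed values.
    """
    grouped = defaultdict(list)
    for smiles, papp in records:
        # Step 2: drop missing values (non-positive values cannot be log-transformed).
        if papp is None or papp <= 0:
            continue
        # Step 1: express in units of 1e-6 cm/s, then take log10.
        grouped[smiles].append(math.log10(papp / 1e-6))

    curated = {}
    for smiles, values in grouped.items():
        # Step 3: average duplicates and keep only consistent measurements.
        sd = statistics.stdev(values) if len(values) > 1 else 0.0
        if sd <= max_sd:
            curated[smiles] = statistics.mean(values)
    return curated


def split_8_1_1(keys, seed):
    """Randomly split keys into train/validation/test lists in an 8:1:1 ratio."""
    keys = sorted(keys)                 # fixed starting order for reproducibility
    random.Random(seed).shuffle(keys)   # the seed controls the partition
    n_train = int(0.8 * len(keys))
    n_valid = int(0.1 * len(keys))
    return (keys[:n_train],
            keys[n_train:n_train + n_valid],
            keys[n_train + n_valid:])
```

Calling `split_8_1_1` with several different `seed` values and averaging the resulting test metrics mirrors the repeated-split assessment described above.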

Model Training and Evaluation Framework

The evaluation of molecular representations follows a standardized benchmarking approach. Studies typically employ stratified five-fold cross-validation, which gives an 80:20 train-test split in each fold while maintaining the positive-negative ratio [26]. Within each fold, models are fitted on four subsets and evaluated on the held-out subset, and metrics are averaged across the five folds. Common performance metrics include Accuracy, Area Under the Receiver Operating Characteristic Curve (AUROC), Area Under the Precision-Recall Curve (AUPRC), Specificity, Precision, and Recall for classification tasks, and Mean Absolute Error (MAE) and Root Mean Square Error (RMSE) for regression tasks [30] [26]. For Caco-2 permeability prediction, which is often framed as a regression problem, R² values are also frequently reported [30]. To assess model robustness, Y-randomization tests and applicability domain analysis are commonly employed, while Matched Molecular Pair Analysis (MMPA) may be used to extract chemical transformation rules that provide insights for optimizing Caco-2 permeability [30].
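The fold construction and regression metrics described above can be illustrated with a short, dependency-free sketch. The helper names `stratified_folds` and `regression_metrics` are hypothetical, and the round-robin assignment is one simple way to keep class ratios roughly equal across folds; library implementations such as scikit-learn's `StratifiedKFold` may assign samples differently.

```python
import math
import random
from collections import defaultdict


def stratified_folds(labels, k=5, seed=0):
    """Assign sample indices to k folds while keeping class ratios roughly equal."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    rng = random.Random(seed)
    folds = [[] for _ in range(k)]
    for indices in by_class.values():
        rng.shuffle(indices)
        # Deal each class round-robin so every fold gets a near-equal share.
        for position, idx in enumerate(indices):
            folds[position % k].append(idx)
    return folds


def regression_metrics(y_true, y_pred):
    """Return MAE, RMSE and R² for paired lists of true and predicted values."""
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]
    ss_res = sum(e * e for e in errors)
    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return {
        "MAE": sum(abs(e) for e in errors) / n,
        "RMSE": math.sqrt(ss_res / n),
        "R2": 1.0 - ss_res / ss_tot,
    }
```

In a cross-validation loop, each fold in turn serves as the held-out subset while the other four are used for fitting, and the per-fold metric dictionaries are averaged.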

Specialist Discussion and Research Applications

Representation Selection Guidance for Specific Research Scenarios

The optimal choice of molecular representation depends significantly on specific research goals and constraints. For high-throughput virtual screening scenarios requiring rapid predictions, Morgan fingerprints with XGBoost provide an excellent balance of computational efficiency and predictive performance, with demonstrated AUROC values of 0.828 in multi-label prediction tasks [26]. For mechanistic studies requiring interpretability, 2D molecular descriptors offer superior insights into structure-property relationships, with PaDEL and RDKit descriptors being particularly effective for predicting physical properties [27] [25]. When investigating complex transport phenomena involving stereochemistry or transporter interactions, 3D representations become increasingly valuable, with 3D-NN demonstrating superior generalizability across molecular scaffolds [29]. For data-rich environments with large, diverse compound libraries, molecular graphs with MPNNs show promising performance, particularly when augmented with contrastive learning techniques [16].

Emerging Trends and Future Directions

The field of molecular representation for permeability prediction is evolving toward hybrid approaches that leverage the strengths of multiple representation strategies. The integration of atom-attention mechanisms with message-passing neural networks represents a significant advancement, allowing models to focus on critical substructures within molecular graphs [16]. Similarly, the combination of 3D molecular representations with neural networks (3D-NN) has shown promise in extracting more meaningful features for permeability tasks, achieving competitive performance with task-specific literature models [29]. Multitask learning approaches that jointly predict multiple permeability-related endpoints (e.g., Caco-2 Papp, MDCK-MDR1 efflux ratios) demonstrate that shared information across endpoints can enhance model accuracy [15]. Furthermore, the incorporation of predicted physicochemical properties such as pKa and LogD as additional descriptors has been shown to improve the accuracy of both permeability and efflux predictions in graph neural networks [15].

Practical Implementation Considerations

When implementing molecular representations for permeability prediction, several practical considerations emerge. For low-data scenarios, traditional descriptors and fingerprints generally outperform more complex representations, with MACCS fingerprints providing surprisingly competitive performance despite their simplicity [25]. For industrial applications requiring transferability across diverse chemical spaces, models trained on public data may retain predictive efficacy when applied to proprietary datasets, though performance should be validated on internal compounds [30]. For multi-class permeability classification tasks, addressing class imbalance through techniques like ADASYN oversampling can significantly improve predictive performance, with XGBoost classifiers achieving accuracy of 0.717 and MCC of 0.512 on test sets [32]. Finally, for applications requiring model interpretability, SHAP analysis applied to descriptor-based models can elucidate feature importance and provide explainability for permeability predictions [32].
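Because accuracy alone can look favorable on imbalanced classes, the Matthews correlation coefficient (MCC) quoted above is a useful companion metric. The sketch below computes the multi-class form of MCC from label lists using only the standard library; the function name `multiclass_mcc` is hypothetical, and the code is an illustration rather than the implementation used in the cited study.

```python
import math
from collections import Counter


def multiclass_mcc(y_true, y_pred):
    """Multi-class Matthews correlation coefficient from two label sequences."""
    s = len(y_true)                                       # total samples
    c = sum(1 for t, p in zip(y_true, y_pred) if t == p)  # correctly predicted
    true_counts = Counter(y_true)                         # occurrences of each true class
    pred_counts = Counter(y_pred)                         # times each class was predicted
    classes = set(true_counts) | set(pred_counts)

    numerator = c * s - sum(true_counts[k] * pred_counts[k] for k in classes)
    spread_true = s * s - sum(true_counts[k] ** 2 for k in classes)
    spread_pred = s * s - sum(pred_counts[k] ** 2 for k in classes)
    denominator = math.sqrt(spread_true * spread_pred)
    # Return 0 when one side has a single class and the ratio is undefined.
    return numerator / denominator if denominator else 0.0
```

For two classes this expression reduces to the familiar binary MCC, so the same helper can be used for binary and multi-class permeability labels.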

This guide provides an objective comparison of XGBoost and Random Forest, focusing on their application in predicting Caco-2 permeability—a critical task in drug development for assessing intestinal absorption. We present performance data, detailed tuning methodologies, and practical protocols to inform model selection and implementation.

Performance Comparison: XGBoost vs. Random Forest in Caco-2 Permeability Prediction

A 2025 study evaluated several machine learning algorithms for Caco-2 permeability prediction and compared them directly on a large, curated dataset. The results, summarized in the table below, indicate that XGBoost generally provided better predictions than comparable models on the test sets [3].

Table 1: Comparative Performance of Machine Learning Models on Caco-2 Permeability Data

| Model | Reported Performance (Test Set) | Key Strengths |
|---|---|---|
| XGBoost | Generally provided better predictions [3] | High predictive accuracy, handles complex, non-linear relationships effectively. |
| Random Forest (RF) | Slightly lower accuracy than XGBoost [3] | Robust, less prone to overfitting, provides feature importance. |
| Support Vector Machine (SVM) | Performance not explicitly ranked above RF or XGBoost [3] | Effective in high-dimensional spaces. |
| Deep Learning Models (DMPNN, CombinedNet) | Performance not explicitly ranked above RF or XGBoost [3] | Can capture complex patterns from raw molecular graphs. |

The study also highlighted the practical value of tree-based models in an industrial setting, finding that boosting models retained a degree of predictive efficacy when applied to an internal pharmaceutical industry dataset [3]. This suggests that such models can transfer, at least in part, beyond publicly available benchmark data.
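A comparison of this kind is usually run over several random splits so that a difference between models is not an artifact of one partition. The sketch below shows the pattern with scikit-learn on synthetic data. Several things here are assumptions for illustration only: the data come from `make_regression` and are not permeability measurements, the helper `mean_test_mae` is hypothetical, the hyperparameters are arbitrary, and scikit-learn's `GradientBoostingRegressor` is used as a stand-in for XGBoost so that only one library is needed (`xgboost.XGBRegressor` follows the same fit/predict interface and could be swapped in).

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.ensemble import GradientBoostingRegressor, RandomForestRegressor
from sklearn.metrics import mean_absolute_error
from sklearn.model_selection import train_test_split

# Synthetic stand-in for a descriptor matrix and log Papp targets (NOT real data).
X, y = make_regression(n_samples=300, n_features=20, n_informative=8,
                       noise=10.0, random_state=0)


def mean_test_mae(make_model, seeds=(0, 1, 2)):
    """Train on repeated 80:20 splits; return mean and SD of the test-set MAE."""
    scores = []
    for seed in seeds:
        X_tr, X_te, y_tr, y_te = train_test_split(
            X, y, test_size=0.2, random_state=seed)
        model = make_model(seed).fit(X_tr, y_tr)
        scores.append(mean_absolute_error(y_te, model.predict(X_te)))
    return float(np.mean(scores)), float(np.std(scores))


results = {
    "random_forest": mean_test_mae(
        lambda s: RandomForestRegressor(n_estimators=200, random_state=s)),
    "gradient_boosting": mean_test_mae(
        lambda s: GradientBoostingRegressor(random_state=s)),
}

for name, (mae_mean, mae_sd) in results.items():
    print(f"{name}: MAE = {mae_mean:.2f} ± {mae_sd:.2f}")
```

Which model comes out ahead on synthetic data says nothing about Caco-2 performance; the point is the protocol of averaging test metrics over seeds before ranking models.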

Detailed Hyperparameter Tuning & Training Protocols

The performance of any machine learning model is heavily dependent on its hyperparameter configuration. Below are detailed tuning strategies for both Random Forest and XGBoost.

Random Forest Tuning Protocol

Random Forest is considered "robust" but can yield significantly better accuracy and stability with proper tuning [33]. The key is a compact, sensible grid search.

Table 2: Key Random Forest Hyperparameters and Tuning Ranges

| Hyperparameter | Description | Typical Tuning Range / Values [33] [34] |

|---|---|---|

n_estimators |