Validating AI in Drug Discovery: A Framework for Robustness, Regulatory Compliance, and Clinical Success in 2025

Abstract

This article provides a comprehensive guide for researchers and drug development professionals on validating artificial intelligence (AI) models in drug discovery. It covers the foundational principles of AI model validation, explores methodological approaches and real-world applications from leading companies, addresses key challenges and optimization strategies, and establishes a framework for rigorous performance and comparative analysis. With the FDA expected to release new guidance and the first AI-discovered drugs advancing in clinical trials, this resource synthesizes current best practices to ensure AI tools are trustworthy, ethical, and effective in accelerating the delivery of new therapies.

The Pillars of Trust: Foundational Principles for Validating AI in Drug Discovery

The integration of artificial intelligence (AI) into drug discovery represents a paradigm shift, moving the industry from labor-intensive, human-driven workflows toward AI-powered engines capable of dramatically compressing development timelines [1]. However, this acceleration demands a rigorous and evolving framework for validation. In the context of AI-based drug discovery, validation extends beyond simple model accuracy; it is a multi-tiered process that ensures AI-generated insights are biologically relevant, clinically translatable, and ultimately, able to yield safe and effective medicines. The fundamental question facing the industry is whether AI is producing genuinely better drugs or merely facilitating faster failures [1]. Answering this requires a critical analysis of performance metrics, experimental protocols, and the entire pathway from algorithmic prediction to approved therapeutic.

A core challenge is that traditional machine learning metrics often fall short in the biological context. Standard measures like accuracy can be misleading when dealing with highly imbalanced datasets, such as those containing far more inactive compounds than active ones [2]. Consequently, a new set of domain-specific validation metrics has emerged, prioritizing biological relevance and the ability to detect rare but critical events over raw computational performance [2]. This guide provides a structured comparison of validation approaches, detailing the key performance indicators, experimental methodologies, and essential tools required to robustly evaluate AI-driven drug discovery platforms.

Comparative Performance of AI Drug Discovery Platforms

A critical component of validation is benchmarking the performance and output of leading AI drug discovery companies. The table below synthesizes the clinical progress and key performance claims of major players in the field, offering a comparative view of their real-world impact.

Table 1: Clinical-Stage AI Drug Discovery Companies and Key Performance Metrics (as of 2025)

| Company | AI Platform & Specialization | Key Clinical Candidates & Indications | Reported Performance & Validation Metrics |
|---|---|---|---|
| Exscientia [1] [3] | End-to-end platform; generative AI for small-molecule design; "Centaur Chemist" approach. | DSP-1181 (OCD, Phase I), EXS-21546 (Immuno-oncology, halted), GTAEXS-617 (CDK7 inhibitor for solid tumors, Phase I/II) [1]. | Achieved clinical candidate with only 136 synthesized compounds (vs. industry standard of thousands); design cycles ~70% faster and requiring 10x fewer compounds than industry norms [1]. |
| Insilico Medicine [4] [1] [5] | End-to-end Pharma.AI platform; generative biology and chemistry for aging-related diseases. | Idiopathic pulmonary fibrosis drug candidate. | Progressed from target discovery to Phase I trials in approximately 18 months, a fraction of the typical 3-5 year timeline [1]. |
| Recursion Pharmaceuticals [4] [1] [3] | AI-powered high-throughput phenotypic screening with cellular imaging. | Focus on rare genetic diseases, oncology, and fibrosis. | AI-driven screening led to identification of potential therapeutics for rare genetic diseases; merged with Exscientia to integrate generative chemistry [4] [1]. |
| BenevolentAI [4] [1] [3] | AI-powered knowledge graph for target discovery and validation. | Programs in COVID-19 and neurodegenerative diseases. | Knowledge Graph connects genes, diseases, and compounds to uncover novel therapeutic opportunities; robust biological modeling for target validation [1] [3]. |
| Atomwise [3] [5] | Structure-based deep learning (AtomNet platform) for small-molecule discovery. | Orally bioavailable TYK2 inhibitor (preclinical) for autoimmune diseases. | In a 318-target study, identified novel hits for 235 targets; presented as a viable alternative to high-throughput screening [5]. |
| Schrödinger [4] [1] [3] | Physics-based computational chemistry combined with machine learning. | Internal pipeline in oncology and neurology. | Platform used for molecular modeling and drug design by major pharma partners; offers robust physics-based and biological modeling [4] [1]. |

The progression of AI-designed molecules into clinical trials is the ultimate form of validation. By the end of 2024, the cumulative number of AI-derived molecules reaching clinical stages had grown rapidly, with over 75 candidates having entered human trials [1]. However, as of 2025, no AI-discovered drug has yet received market approval, and most programs remain in early-stage trials [1]. This underscores the importance of rigorous validation at every stage to improve the probability of clinical success.

Domain-Specific Validation Metrics for AI Models

Validating AI models in drug discovery requires moving beyond generic machine learning metrics. The highly specialized nature of biomedical data, often characterized by imbalance, multi-modality, and rare critical events, necessitates a tailored set of performance indicators [2]. The following table compares generic metrics against their domain-specific adaptations, which are becoming the standard for rigorous model evaluation in biopharma.

Table 2: Comparison of Generic vs. Domain-Specific ML Metrics for Drug Discovery

| Generic ML Metric | Limitations in Drug Discovery | Domain-Specific Alternative | Application & Rationale |
|---|---|---|---|
| Accuracy [2] | Misleading with imbalanced datasets (e.g., excess of inactive compounds); a model can achieve high accuracy by always predicting the majority class. | Rare Event Sensitivity [2] | Measures the model's ability to detect low-frequency events (e.g., toxicological signals, active compounds), which are critical for actionable outcomes. |
| F1 Score [2] | Offers a balanced view but may dilute focus on the top-ranking predictions that are most critical for resource allocation. | Precision-at-K [2] | Evaluates the model's precision when considering only the top K ranked candidates, ensuring focus on the most promising leads for experimental validation. |
| ROC-AUC [2] | Evaluates class separation but lacks biological interpretability and does not assess the mechanistic relevance of predictions. | Pathway Impact Metrics [2] | Assesses how well a model's predictions align with known or novel biological pathways, ensuring findings are statistically valid and biologically meaningful. |

The implementation of these specialized metrics was demonstrated effectively by Elucidata in an omics-based drug discovery project. The challenge was to improve the detection of rare toxicological signals in transcriptomics datasets, where traditional metrics failed. By implementing a customized ML pipeline optimized with Rare Event Sensitivity and Precision-Weighted Scoring, the model achieved a 4x increase in detection speed for subtle toxicological signals, enabling faster and more confident decision-making [2]. This case study highlights how domain-specific validation directly translates to improved R&D efficiency.
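
To make the contrast in Table 2 concrete, the following minimal Python sketch compares plain accuracy with two of the domain-specific metrics on a toy imbalanced screen. It assumes that "Rare Event Sensitivity" can be read as recall on the rare (active) class, which the source does not define formally; the function names and the toy data are illustrative only.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def rare_event_sensitivity(y_true, y_pred, positive=1):
    """Recall on the rare class (assumed reading of 'Rare Event Sensitivity')."""
    preds_for_positives = [p for t, p in zip(y_true, y_pred) if t == positive]
    if not preds_for_positives:
        raise ValueError("no positive examples in y_true")
    return sum(p == positive for p in preds_for_positives) / len(preds_for_positives)


def precision_at_k(y_true, scores, k):
    """Fraction of true actives among the k highest-scoring candidates."""
    ranked = sorted(zip(scores, y_true), key=lambda pair: pair[0], reverse=True)
    return sum(label for _, label in ranked[:k]) / k


# Toy screen: 5 actives among 100 compounds (hypothetical data).
y_true = [1] * 5 + [0] * 95

# A trivial model that always predicts "inactive".
always_inactive = [0] * 100

# Hypothetical ranking scores: three actives score highly, two do not.
scores = [0.9, 0.8, 0.7, 0.2, 0.1] + [0.6, 0.6] + [0.3] * 93

print("accuracy:", accuracy(y_true, always_inactive))
print("rare event sensitivity:", rare_event_sensitivity(y_true, always_inactive))
print("precision@5:", precision_at_k(y_true, scores, k=5))
```

The always-inactive model looks strong on accuracy while detecting none of the actives, which is the failure mode the table describes. Precision-at-K instead asks how many of the compounds that would actually be sent for synthesis are real hits.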

Experimental Protocols for Validating AI-Generated Candidates

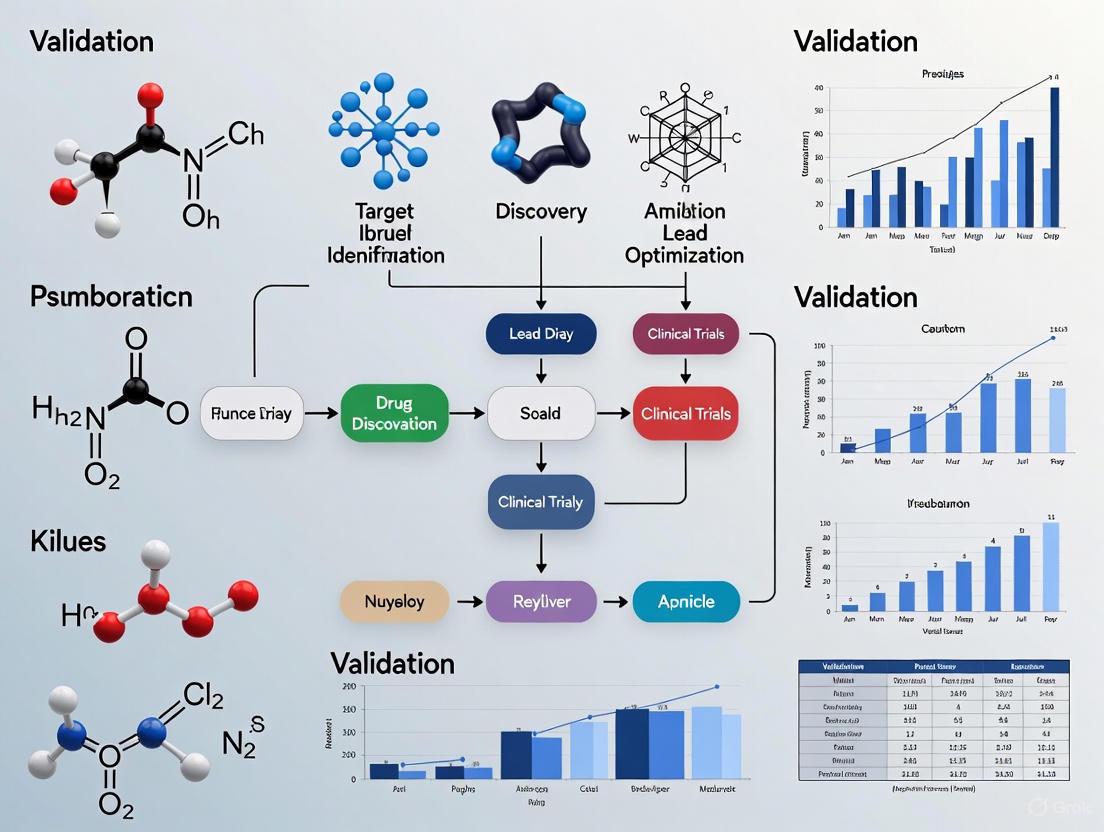

A robust validation strategy requires standardized experimental workflows to confirm the properties and potential of AI-generated drug candidates. The "Design-Make-Test-Analyze" (DMTA) cycle is the core iterative process in modern drug discovery, and AI is being integrated into every stage [6]. The accompanying diagram illustrates a validated, AI-augmented DMTA cycle for small molecule discovery.

The validation of AI-discovered compounds relies on a multi-stage protocol combining in silico predictions with rigorous experimental testing. The following is a detailed breakdown of the key stages for a typical small-molecule candidate, drawing from reported industry practices.

Protocol 1: In Silico Target Validation and Compound Generation

- Objective: To identify a novel, druggable disease target and generate a series of lead compounds with high predicted affinity and specificity.

- Detailed Methodology:

  - Target Identification: Use AI platforms (e.g., Insilico's PandaOmics, BenevolentAI's Knowledge Graph) to analyze multi-omics datasets (genomics, proteomics, transcriptomics) from diseased versus healthy tissues. The goal is to identify and prioritize potential protein targets based on their causal link to the disease and "druggability" [1] [3] [5].

  - Generative Molecular Design: Employ generative AI models (e.g., Exscientia's DesignStudio, Insilico's Chemistry42) to design novel small-molecule structures de novo that fit a specific target product profile. This profile includes desired potency, selectivity, and predicted ADMET (Absorption, Distribution, Metabolism, Excretion, Toxicity) properties [1] [7].

  - Virtual Screening & Prioritization: Screen the generated virtual library against the target structure (if available) using deep learning networks (e.g., Atomwise's AtomNet) to predict binding affinity [3] [5]. Subsequently, apply domain-specific metrics like Precision-at-K to rank-order the top candidate molecules for synthesis [2].

Protocol 2: Experimental & Preclinical Validation

- Objective: To empirically confirm the predicted activity, selectivity, and safety of the synthesized AI-generated lead compounds in biological systems.

- Detailed Methodology:

  - Compound Synthesis & Characterization: Synthesize the top-priority virtual candidates. Companies like Exscientia and Iktos are increasingly integrating AI with robotic synthesis automation (e.g., Iktos's Spaya and Robotics platforms) to accelerate this step [1] [5]. Confirm the chemical structure and purity of synthesized compounds using standard analytical techniques (NMR, LC-MS).

  - In Vitro Biochemical/Cellular Assays: Test the synthesized compounds in a series of in vitro experiments. This begins with binding assays (e.g., SPR) and functional cell-based assays to determine potency (IC50/EC50) and efficacy. For a more translational view, platforms like Exscientia use patient-derived primary cells or tissue samples (e.g., from its Allcyte acquisition) to assess compound efficacy in a more disease-relevant context [1].

  - ADMET Profiling: Conduct in vitro ADMET studies to assess key parameters such as metabolic stability in liver microsomes, membrane permeability (Caco-2 assays), and cardiac safety risk (hERG inhibition). AI-based ADMET prediction models, like the one benchmarked by Receptor.AI, are used earlier in the workflow to filter out molecules with poor predicted properties, minimizing costly experimental testing on likely failures [8].

  - In Vivo Efficacy and Toxicity: Advance the most promising lead candidate to animal models of the disease to demonstrate proof-of-concept efficacy and preliminary pharmacokinetics and toxicology. The data generated here is fed back into the AI models (see DMTA cycle diagram) to refine the design of the next generation of compounds, closing the learning loop [1] [6].
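
The potency readout (IC50) mentioned in the in vitro step can be illustrated with a small sketch. It assumes the standard four-parameter Hill (logistic) dose-response model and estimates the IC50 by log-linear interpolation between the two measured concentrations that bracket the half-maximal response. In practice a nonlinear curve fit would normally be used; the concentrations and the "true" IC50 below are hypothetical.

```python
import math


def hill_response(conc, ic50, hill=1.0, top=100.0, bottom=0.0):
    """Four-parameter Hill model: % activity remaining at an inhibitor concentration."""
    return bottom + (top - bottom) / (1.0 + (conc / ic50) ** hill)


def estimate_ic50(concs, responses, top=100.0, bottom=0.0):
    """Interpolate (on a log10 concentration axis) where the response crosses the midpoint."""
    half = (top + bottom) / 2.0
    points = sorted(zip(concs, responses))  # ascending concentration
    for (c_lo, r_lo), (c_hi, r_hi) in zip(points, points[1:]):
        if r_lo >= half >= r_hi:
            if r_lo == r_hi:
                return c_lo
            frac = (r_lo - half) / (r_lo - r_hi)
            log_c = math.log10(c_lo) + frac * (math.log10(c_hi) - math.log10(c_lo))
            return 10.0 ** log_c
    raise ValueError("response never crosses the half-maximal level")


# Hypothetical 10-fold dilution series (nM) for a compound with a true IC50 of 50 nM.
concs = [1.0, 10.0, 100.0, 1000.0, 10000.0]
responses = [hill_response(c, ic50=50.0) for c in concs]

ic50_estimate = estimate_ic50(concs, responses)
print(f"estimated IC50 ~ {ic50_estimate:.1f} nM (true value 50 nM)")
```

With a coarse dilution series the interpolated value only approximates the true IC50, which is one reason measured potencies carry uncertainty when they are fed back into model training.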

Essential Research Reagents and Solutions for Validation

The experimental validation of AI-generated discoveries relies on a suite of core research reagents and technological solutions. The following table details these key tools and their functions in the validation workflow.

Table 3: Key Research Reagent Solutions for Experimental Validation

| Research Reagent / Solution | Function in Validation Workflow |
|---|---|
| Patient-Derived Primary Cells & Organoids [1] | Provide a physiologically relevant ex vivo system for testing compound efficacy and toxicity, improving the translational predictiveness of in vitro data. |
| High-Content Cellular Imaging Systems [1] [3] [5] | Enable high-throughput, automated phenotypic screening of compounds on cells, generating rich datasets for AI models to analyze complex morphological changes. |
| Automated Synthesis & Screening Robotics [1] [5] | Automate the "Make" and "Test" phases of the DMTA cycle, increasing throughput, reproducibility, and the speed of data generation for AI feedback loops. |
| Multi-Omics Datasets (Genomic, Proteomic) [2] [3] | Serve as the foundational data for AI-driven target discovery and biomarker identification; quality and diversity of data are critical for model performance. |
| Retrieval-Augmented Generation (RAG) Systems [6] | AI software tool that grounds Large Language Models (LLMs) in proprietary internal research data, enabling scientists to query and find information across data silos to inform validation. |
| On-Premise LLM Deployment [6] | An infrastructure solution that allows companies to deploy AI models internally, enforcing data privacy and security guardrails while leveraging AI for research assistance. |

Implementation of a Robust AI Validation Framework

For researchers and drug development professionals, transitioning to an AI-augmented workflow requires more than just adopting new software; it demands a fundamental shift in validation culture. Success hinges on implementing a comprehensive framework that addresses data, metrics, and organizational practices.

First, data quality is the foundation of AI validation. The principle of "garbage in, garbage out" is paramount. Initiatives like DataPerf, which provide benchmarks for data-centric AI development, are gaining traction [9]. This involves shifting focus from solely refining model architectures to systematically curating, cleaning, and labeling training datasets. In practice, this means investing in standardized data curation protocols to handle diverse sources like ChEMBL, ToxCast, and proprietary in-house data [2] [8].

Second, organizations must enforce centralized guardrails and ensure model transparency. As AI adoption spreads, practices such as creating risk profiles that dictate the permitted level of AI involvement in a decision and validating specific models for high-risk tasks are becoming essential [6]. Furthermore, the "black-box" nature of some complex models erodes trust among scientists. To counter this, validation reports must include explainability features, such as links to corroborating internal data or displays of the most similar training set compounds, to create traceability and justify experimental follow-up [6].
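
One of the explainability features mentioned above, displaying the most similar training set compounds next to a prediction, can be sketched in a few lines. The example assumes each compound is represented by the set of "on" bit indices of a structural fingerprint and uses Tanimoto similarity, a common choice in cheminformatics. The compound names and bit sets are hypothetical; in practice a cheminformatics toolkit would generate the fingerprints.

```python
def tanimoto(a, b):
    """Tanimoto (Jaccard) similarity between two fingerprint bit sets."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def most_similar_training_compounds(query_bits, training, top_n=3):
    """Return the top_n training compounds ranked by similarity to the query."""
    scored = [(tanimoto(query_bits, bits), name) for name, bits in training.items()]
    scored.sort(key=lambda item: (-item[0], item[1]))  # highest similarity first
    return [(name, round(sim, 3)) for sim, name in scored[:top_n]]


# Hypothetical training set: compound name -> fingerprint on-bits.
training_set = {
    "cmpd_A": frozenset({1, 4, 7, 9}),
    "cmpd_B": frozenset({1, 4, 8}),
    "cmpd_C": frozenset({2, 3, 5}),
}
query = frozenset({1, 4, 7})

neighbours = most_similar_training_compounds(query, training_set, top_n=2)
print(neighbours)
```

Attaching such a neighbour list to a validation report lets a chemist judge whether a prediction is an interpolation between well-characterised compounds or an extrapolation into chemical space the model has barely seen.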

Finally, the most critical element is fostering collaboration between data scientists and domain experts. Biologically meaningful validation cannot be performed in a computational silo. Cross-functional teams are needed to design and interpret experiments, ensuring that evaluation metrics and model outputs are not just statistically sound but also biologically and clinically relevant [2] [1]. This collaborative spirit is what ultimately bridges the gap between a promising algorithm and an approved drug that meets the stringent requirements of regulators and patients.

The application of Artificial Intelligence (AI) in drug discovery represents a paradigm shift in pharmaceutical research, offering unprecedented capabilities to analyze vast biological datasets, identify potential drug targets, and predict therapeutic effectiveness [10]. As AI technologies become increasingly integrated into the drug development pipeline, establishing robust validation frameworks has become imperative to ensure these systems deliver reliable, trustworthy, and clinically relevant outcomes. The RICE Framework emerges as a critical structured approach for validating AI-based drug discovery models, encompassing four core objectives: Robustness, Interpretability, Controllability, and Ethicality.

This framework addresses the unique challenges presented by AI/ML technologies in the highly regulated pharmaceutical environment, where regulatory bodies like the U.S. Food and Drug Administration (FDA) and the European Medicines Agency (EMA) have established stringent guidelines emphasizing reliability, transparency, and patient safety [11]. The RICE Framework provides a comprehensive methodology for researchers and drug development professionals to evaluate AI models beyond mere predictive accuracy, ensuring they meet the rigorous standards required for therapeutic development and regulatory approval.

Core Objectives of the RICE Framework

Robustness

In the context of AI-based drug discovery, Robustness refers to a model's ability to maintain stable, reliable performance across diverse datasets, experimental conditions, and potential adversarial inputs. Robust AI models demonstrate minimal performance degradation when confronted with noisy data, distribution shifts, or slightly perturbed inputs, which is particularly crucial in biological systems where experimental variability is inherent.

Robustness validation ensures that AI predictions for drug-target interactions, toxicity profiles, or molecular properties remain consistent and dependable when applied to real-world patient populations or different laboratory settings. Regulatory guidelines emphasize the importance of rigorous testing under diverse conditions to confirm model accuracy and robustness before deployment in critical decision-making processes [11]. Techniques for enhancing robustness include data augmentation, adversarial training, and stress testing under edge cases that simulate challenging real-world scenarios.

Interpretability

Interpretability addresses the fundamental need to understand and trust the decision-making processes of AI models, moving beyond "black box" predictions to transparent, explainable insights. In drug discovery, where decisions have significant implications for patient safety and therapeutic efficacy, understanding how an AI model arrives at its predictions is essential for scientific validation and regulatory acceptance [11].

The interpretability requirement is particularly critical for complex models like deep neural networks, which might otherwise function as inscrutable black boxes. Regulatory frameworks increasingly demand transparency in how algorithms are trained, validated, and how they make decisions, requiring researchers to document training data, decision logic, and algorithm versions [11]. Explainable AI (XAI) techniques such as attention mechanisms, feature importance analysis, and surrogate models help researchers understand which molecular features, structural properties, or biological pathways most significantly influence model predictions, fostering trust and facilitating scientific discovery.

Controllability

Controllability encompasses the methodologies and mechanisms that allow researchers to direct, constrain, and fine-tune AI model behavior to align with scientific objectives, safety constraints, and experimental parameters. In drug discovery, controllability ensures that AI-generated molecular designs adhere to chemical synthesizability constraints, toxicity thresholds, and therapeutic targeting requirements.

The emergence of generative AI models for molecular design has heightened the importance of controllability, as researchers must steer molecular generation toward synthetically feasible compounds with desired properties. Frameworks like SynFormer exemplify this principle by generating synthetic pathways alongside molecular structures, ensuring proposed compounds are not only theoretically promising but also practically synthesizable [12]. Controllability also encompasses the ability to adjust model behavior based on emerging experimental data, creating iterative feedback loops that refine AI predictions through continuous learning while maintaining alignment with research goals.

Ethicality

Ethicality in the RICE Framework addresses the profound responsibility inherent in developing therapeutics for human patients, encompassing data privacy, algorithmic fairness, patient safety, and social impact. Ethical AI deployment in drug discovery requires vigilant attention to potential biases in training data, particularly the underrepresentation of specific patient populations that could skew predictions and diminish clinical generalizability [11].

The World Health Organization has emphasized the need for ethical governance structures to prevent AI from dehumanizing care, undermining patient autonomy, or posing significant risks to patient privacy [13]. Ethicality also encompasses broader concerns including appropriate data protection with rights-based approaches, informed consent for data usage, and safeguards against malicious application of AI technologies for bioterrorism [13]. Implementing ethical AI requires multidisciplinary collaboration between data scientists, clinicians, ethicists, and regulatory experts to ensure technologies develop within a framework that prioritizes patient welfare and social benefit.

Comparative Analysis of AI Models Using the RICE Framework

Quantitative Comparison of AI Drug Discovery Models

Table 1: Performance Metrics of AI Models in Drug Discovery Applications

| AI Model | Application Domain | Robustness Score | Interpretability Level | Controllability Features | Ethicality Safeguards |
|---|---|---|---|---|---|
| Metabolite Translator | Metabolite Prediction | 92% accuracy on diverse compound libraries | Medium: Attention mechanisms show relevant chemical features | High: Controllable output for specific metabolic pathways | Medium: Anonymized training data, bias monitoring |
| SynFormer | Synthesizable Molecular Design | 88% synthesizability rate in validation | Medium: Pathway visualization illustrates synthetic routes | High: Explicit synthetic pathway generation | Medium: Focus on synthetic accessibility reduces resource waste |
| AlphaFold | Protein Structure Prediction | >90% GDT accuracy on CASP targets | Low: Limited explanation for structural confidence | Low: Limited steering of folding process | High: Open access promotes equitable research benefits |
| Deep Learning QSAR | Toxicity Prediction | 85% cross-validation consistency | Medium: Feature importance identifies structural alerts | Medium: Threshold control for safety margins | High: Rigorous bias testing across demographic groups |

Table 2: Regulatory Compliance Assessment of AI Models Against FDA Guidelines

| Compliance Dimension | Metabolite Translator | SynFormer | Traditional QSAR Models | Generative Molecular AI |
|---|---|---|---|---|
| Data Integrity (ALCOA+) | Partial compliance with electronic records | Full compliance with version control | Full compliance with established protocols | Variable compliance based on implementation |
| Model Explainability | Medium: Input-output relationships documented | Medium: Pathway rationale provided | High: Transparent parameters | Low: Black-box architecture concerns |
| Reproducibility Documentation | High: Full training data and parameters archived | High: Reaction templates and building blocks cataloged | High: Established protocols with minimal variance | Medium: Stochastic elements complicate reproduction |
| Bias Mitigation | Medium: Diverse chemical space representation | High: Focus on synthesizability reduces resource bias | Medium: Dependent on training data curation | Low: Potential for unrealistic molecular generation |

Case Study: Metabolite Translator for Drug Metabolism Prediction

The Metabolite Translator model, developed at Rice University, provides an illustrative case study for applying the RICE Framework [14]. This deep learning-based technique predicts metabolites resulting from interactions between small molecules like drugs and enzymes, giving pharmaceutical developers a comprehensive picture of potential drug behavior and toxicity profiles.

Robustness was validated through extensive testing across diverse compound libraries, achieving 92% accuracy in predicting known metabolic pathways. The model maintains stable performance when applied to novel chemical structures. It is particularly strong at identifying metabolites formed by enzymes not commonly involved in drug metabolism, which rule-based methods typically miss [14].

Interpretability is facilitated through the model's translation-based architecture, which uses SMILES (Simplified Molecular-Input Line-Entry System) notation to represent chemical transformations in human-readable format. While the underlying deep learning model has inherent complexity, attention mechanisms help researchers identify which molecular substructures most significantly influence metabolic predictions.

Controllability is evidenced by the model's ability to focus predictions on specific enzymatic pathways or tissue types, allowing researchers to explore metabolic fate in particular biological contexts. This enables targeted investigation of hepatic versus extra-hepatic metabolism, supporting comprehensive toxicity profiling.

Ethicality considerations are addressed through the model's potential to reduce animal testing by providing accurate computational predictions of human metabolism. The training approach using transfer learning on known chemical reactions helps mitigate bias that might arise from limited experimental data.

Diagram 1: Metabolite Translator Workflow. This illustrates the sequence from molecular input to metabolite prediction, highlighting key computational stages.

Case Study: SynFormer for Synthesizable Molecular Design

SynFormer represents a significant advancement in generative AI for drug discovery by explicitly addressing synthesizability throughout the molecular design process [12]. This framework integrates a scalable transformer architecture with a diffusion module for building block selection, specifically focusing on generating synthetic pathways rather than just molecular structures.

Robustness in SynFormer is demonstrated through its consistent performance in both local chemical space exploration (generating synthesizable analogs of reference molecules) and global exploration (identifying optimal molecules according to black-box property prediction). The model maintains structural integrity while ensuring synthetic feasibility, with analogs maintaining favorable objective scores close to original designs [12].

Interpretability is enhanced through the model's pathway-centric approach, which provides researchers with explicit synthetic routes rather than just final molecular structures. This transparency in proposed synthesis helps medicinal chemists evaluate and trust the AI's proposals, understanding the stepwise chemical transformations suggested.

Controllability is a foundational strength of SynFormer, which allows researchers to constrain molecular generation based on available starting materials, preferred reaction types, or complexity parameters. This fine-grained control ensures that AI-generated molecules align with practical laboratory constraints and resource availability.

Ethicality considerations are addressed through SynFormer's focus on synthetic accessibility, which helps prevent wasted resources on pursuing theoretically interesting but practically inaccessible compounds. This promotes more efficient drug discovery with reduced material waste.

Diagram 2: SynFormer Molecular Design Process. This workflow shows the iterative pathway for generating synthesizable molecules, with feasibility checks ensuring practical outcomes.

Experimental Protocols for RICE Framework Validation

Robustness Testing Protocol

Objective: Systematically evaluate AI model performance stability under varied data conditions and potential adversarial inputs.

Materials:

- Primary validation dataset (curated, high-quality reference data)

- Noise-injected datasets (varied levels of Gaussian noise)

- Domain-shifted datasets (different biological contexts or experimental conditions)

- Adversarial examples (strategically modified inputs)

Methodology:

- Baseline Performance Establishment: Evaluate model accuracy, precision, and recall on primary validation dataset under standardized conditions.

- Noise Tolerance Assessment: Introduce progressively increasing Gaussian noise (5%, 10%, 15%) to input features and measure performance degradation.

- Domain Shift Evaluation: Test model on data from different sources (e.g., alternative cell lines, animal models, or experimental protocols).

- Adversarial Robustness Testing: Expose model to strategically modified inputs designed to provoke incorrect predictions while maintaining semantic validity.

- Cross-validation: Implement k-fold cross-validation (typically k=5 or k=10) to assess performance consistency across data subsets.
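
The noise tolerance step can be sketched as follows. This is a minimal illustration, not a prescribed implementation: it assumes that "5% noise" means Gaussian noise whose standard deviation is 5% of each feature's standard deviation, and it uses a hypothetical two-feature `toy_model` in place of a real predictor. The degradation slope is taken as the least-squares slope of accuracy against noise level.

```python
import random
import statistics


def add_gaussian_noise(rows, level, rng):
    """Add zero-mean noise with sigma = level * (per-feature standard deviation)."""
    columns = list(zip(*rows))
    sigmas = [statistics.pstdev(col) * level for col in columns]
    return [[x + rng.gauss(0.0, s) for x, s in zip(row, sigmas)] for row in rows]


def accuracy(model, rows, labels):
    return sum(model(row) == label for row, label in zip(rows, labels)) / len(labels)


def degradation_slope(levels, scores):
    """Least-squares slope of score versus noise level (more negative = more fragile)."""
    mean_x, mean_y = statistics.fmean(levels), statistics.fmean(scores)
    num = sum((x - mean_x) * (y - mean_y) for x, y in zip(levels, scores))
    den = sum((x - mean_x) ** 2 for x in levels)
    return num / den


def toy_model(row):
    """Hypothetical stand-in for a trained classifier."""
    return int(row[0] + row[1] > 1.0)


rng = random.Random(0)  # fixed seed for reproducibility
rows = [[rng.random(), rng.random()] for _ in range(500)]
labels = [toy_model(row) for row in rows]  # clean labels, so baseline accuracy is 1.0

noise_levels = [0.0, 0.05, 0.10, 0.15]
scores = [accuracy(toy_model, add_gaussian_noise(rows, lvl, rng), labels)
          for lvl in noise_levels]
slope = degradation_slope(noise_levels, scores)

for lvl, score in zip(noise_levels, scores):
    print(f"noise {lvl:.0%}: accuracy {score:.3f}")
print(f"degradation slope: {slope:.3f} accuracy units per unit noise level")
```

Reporting the slope rather than a single noisy-data score makes it easier to compare models that start from different baselines.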

Validation Metrics:

- Performance degradation slope under noise introduction

- Domain adaptation gap (performance difference between primary and shifted domains)

- Adversarial success rate (proportion of adversarial examples that cause prediction failures)

- Variance in cross-validation performance across folds
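
The last metric, variance across cross-validation folds, can be computed with a short standard-library sketch. The one-feature threshold classifier and the synthetic two-class data are hypothetical placeholders for a real model and dataset; only the fold bookkeeping is the point here.

```python
import random
import statistics


def k_fold_indices(n, k, seed=0):
    """Shuffle indices 0..n-1 and deal them into k roughly equal folds."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]


def fit_threshold(xs, ys):
    """Toy learner: threshold halfway between the two class means."""
    pos = [x for x, y in zip(xs, ys) if y == 1]
    neg = [x for x, y in zip(xs, ys) if y == 0]
    return (statistics.fmean(pos) + statistics.fmean(neg)) / 2.0


def cross_validate(xs, ys, k=5, seed=0):
    """Return the held-out accuracy for each of the k folds."""
    fold_scores = []
    for held_out in k_fold_indices(len(xs), k, seed):
        held = set(held_out)
        train = [i for i in range(len(xs)) if i not in held]
        thr = fit_threshold([xs[i] for i in train], [ys[i] for i in train])
        correct = sum(int(xs[i] > thr) == ys[i] for i in held_out)
        fold_scores.append(correct / len(held_out))
    return fold_scores


# Synthetic data: inactive class centred at 0, active class centred at 2.
rng = random.Random(1)
xs = [rng.gauss(0.0, 1.0) for _ in range(100)] + [rng.gauss(2.0, 1.0) for _ in range(100)]
ys = [0] * 100 + [1] * 100

fold_scores = cross_validate(xs, ys, k=5)
print("fold accuracies:", [round(s, 3) for s in fold_scores])
print("mean:", round(statistics.fmean(fold_scores), 3))
print("std across folds:", round(statistics.stdev(fold_scores), 3))
```

A large standard deviation relative to the mean suggests that performance depends heavily on which compounds land in the training split. For chemical data, splitting by scaffold or by time is often a stricter test than the random split used in this sketch.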

Interpretability Assessment Protocol

Objective: Quantitatively and qualitatively evaluate the explainability of model predictions and decision logic.

Materials:

- Model with accessible intermediate layers or attention mechanisms

- Reference dataset with ground truth explanations (where available)

- Feature importance evaluation framework

- Domain expert panel for qualitative assessment

Methodology:

- Feature Importance Analysis: Implement perturbation-based or gradient-based techniques to identify input features most influential to predictions.

- Attention Visualization: For attention-based models, visualize and quantify attention patterns across input sequences or structures.

- Counterfactual Explanation Generation: Systematically modify inputs to identify minimal changes that alter model predictions.

- Domain Expert Evaluation: Engage medicinal chemists, biologists, and pharmacologists in structured evaluation of model explanations for plausibility and utility.

- Faithfulness Measurement: Assess whether explanatory features truly drive model decisions through ablation studies.
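
A perturbation-based feature importance analysis of the kind listed above can be sketched with permutation importance: shuffle one feature column at a time and record the drop in accuracy. The `toy_model` below is a hypothetical stand-in that deliberately ignores its third feature, so the sketch also doubles as a simple faithfulness check (an ignored feature should receive zero importance).

```python
import random


def accuracy(model, rows, labels):
    return sum(model(row) == label for row, label in zip(rows, labels)) / len(labels)


def permutation_importance(model, rows, labels, n_repeats=10, seed=0):
    """Mean accuracy drop when each feature column is shuffled independently."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(len(rows[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in rows]
            rng.shuffle(column)
            permuted = [row[:j] + [value] + row[j + 1:] for row, value in zip(rows, column)]
            drops.append(baseline - accuracy(model, permuted, labels))
        importances.append(sum(drops) / n_repeats)
    return importances


def toy_model(row):
    """Hypothetical classifier: feature 0 matters most, feature 2 is ignored."""
    return int(2.0 * row[0] + row[1] > 1.5)


rng = random.Random(42)
rows = [[rng.random(), rng.random(), rng.random()] for _ in range(300)]
labels = [toy_model(row) for row in rows]

importances = permutation_importance(toy_model, rows, labels)
for name, value in zip(["feature_0", "feature_1", "feature_2"], importances):
    print(f"{name}: mean accuracy drop {value:.3f}")
```

If an explanation method highlights a feature whose permutation barely changes the predictions, the explanation is probably not faithful to what the model actually uses.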

Validation Metrics:

- Explanation fidelity (correlation between explanatory importance and prediction impact)

- Expert agreement score (proportion of model explanations deemed plausible by domain experts)

- Explanation stability (consistency of explanations for similar inputs)

- Completeness score (proportion of prediction variance explained by identified features)
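
Two of these metrics lend themselves to a compact illustration. The sketch below assumes that explanation stability can be operationalised as the Jaccard overlap between the top-k attributed features of two similar inputs, and that the expert agreement score is the fraction of explanations a panel marks as plausible. Both readings are assumptions, since the metrics are described above only in words; the attribution values and votes are hypothetical.

```python
def top_k_features(attributions, k):
    """Names of the k features with the largest absolute attribution."""
    ranked = sorted(attributions, key=lambda name: (-abs(attributions[name]), name))
    return set(ranked[:k])


def explanation_stability(attr_a, attr_b, k=3):
    """Jaccard overlap of the top-k features for two similar inputs (1.0 = identical)."""
    a, b = top_k_features(attr_a, k), top_k_features(attr_b, k)
    return len(a & b) / len(a | b)


def expert_agreement_score(votes):
    """Fraction of explanations judged plausible by domain experts."""
    return sum(votes) / len(votes)


# Hypothetical attributions for a parent compound and a close structural analog.
attr_parent = {"nitro_group": 0.62, "logP": 0.21, "aromatic_rings": -0.15, "mol_weight": 0.05}
attr_analog = {"nitro_group": 0.58, "logP": 0.09, "aromatic_rings": -0.19, "mol_weight": 0.12}

stability = explanation_stability(attr_parent, attr_analog, k=3)
agreement = expert_agreement_score([True, True, False, True])

print("explanation stability (top-3 Jaccard):", stability)
print("expert agreement score:", agreement)
```

Averaging the stability score over many pairs of near-identical compounds gives a dataset-level figure that can be tracked between model versions.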

Controllability Verification Protocol

Objective: Validate the effectiveness of mechanisms for steering and constraining model behavior to align with research objectives.

Materials:

- Model with controllability interfaces (constraint specification, objective weighting)

- Benchmark tasks with defined constraints and optimization targets

- Molecular property prediction services or assays

- Synthetic chemistry feasibility assessment tools

Methodology:

- Constraint Adherence Testing: Evaluate model performance under progressively stricter constraints (e.g., synthesizability, toxicity thresholds, property ranges).

- Multi-objective Optimization Assessment: Test model ability to balance competing objectives (e.g., potency versus solubility, selectivity versus synthesizability).

- Directional Control Verification: Assess how effectively model outputs respond to explicit guidance signals and parameter adjustments.

- Constraint Violation Analysis: Quantify the frequency and magnitude of constraint violations in generated outputs.

- Feedback Integration Testing: Evaluate how effectively models incorporate experimental feedback to refine future predictions.

Validation Metrics:

- Constraint satisfaction rate (proportion of outputs meeting all specified constraints)

- Multi-objective optimization efficiency (Pareto front quality and diversity)

- Control responsiveness (output change magnitude per unit control signal)

- Iterative improvement rate (performance enhancement through feedback loops)
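Two of these metrics are simple enough to sketch directly. The example below computes a constraint satisfaction rate and extracts a Pareto front over two objectives that are both maximised. The molecule records, property names (`potency`, `log_s`, `sa_score`, `tox_prob`), and thresholds are hypothetical values chosen for illustration only.

```python
def constraint_satisfaction_rate(candidates, constraints):
    """Fraction of candidates that pass every constraint predicate."""
    passed = sum(1 for c in candidates if all(check(c) for check in constraints))
    return passed / len(candidates)


def pareto_front(candidates, objectives):
    """Candidates not dominated by any other (all objectives maximised)."""
    def scores(c):
        return [c[name] for name in objectives]

    def dominates(p, q):
        # p dominates q if it is at least as good everywhere and better somewhere.
        return all(a >= b for a, b in zip(p, q)) and any(a > b for a, b in zip(p, q))

    return [c for c in candidates
            if not any(dominates(scores(o), scores(c)) for o in candidates)]


# Hypothetical generated molecules with made-up property predictions.
molecules = [
    {"id": "A", "potency": 8.1, "log_s": -3.0, "sa_score": 2.5, "tox_prob": 0.10},
    {"id": "B", "potency": 7.0, "log_s": -2.0, "sa_score": 3.0, "tox_prob": 0.20},
    {"id": "C", "potency": 6.5, "log_s": -3.5, "sa_score": 5.0, "tox_prob": 0.10},
    {"id": "D", "potency": 8.5, "log_s": -4.5, "sa_score": 3.5, "tox_prob": 0.40},
]

# Illustrative constraints: synthesizability ceiling and toxicity threshold.
constraints = [
    lambda m: m["sa_score"] <= 4.0,
    lambda m: m["tox_prob"] < 0.30,
]

rate = constraint_satisfaction_rate(molecules, constraints)
front_ids = [m["id"] for m in pareto_front(molecules, ["potency", "log_s"])]
print(rate)       # 0.5
print(front_ids)  # ['A', 'B', 'D']
```

Tightening the thresholds step by step and recording `rate` at each step gives a simple curve for the progressive constraint adherence test described in the methodology.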

Ethicality Audit Protocol

Objective: Systematically identify and mitigate potential ethical risks in AI model development and deployment.

Materials:

- Diverse demographic and biomedical datasets for bias assessment

- Data protection and privacy assessment frameworks

- Ethical guidelines from regulatory bodies (WHO, FDA, EMA)

- Stakeholder engagement protocols

Methodology:

- Bias Audit: Evaluate model performance disparities across demographic groups, disease subtypes, and molecular classes.

- Privacy Impact Assessment: Analyze data handling practices against GDPR, HIPAA, and other relevant privacy regulations.

- Dual-Use Risk Evaluation: Assess potential for malicious application and implement appropriate safeguards.

- Transparency Documentation: Complete comprehensive documentation of model capabilities, limitations, and appropriate use cases.

- Stakeholder Impact Analysis: Identify and evaluate potential effects on patients, researchers, healthcare systems, and society.

Validation Metrics:

- Fairness disparity scores (performance variation across protected groups)

- Privacy preservation metrics (re-identification risk, data leakage potential)

- Transparency index (completeness of documentation and limitation disclosure)

- Stakeholder impact score (breadth and equity of benefit distribution)
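A fairness disparity score can be sketched as the gap between the best and worst per-group performance. The example below uses accuracy as the performance measure; the labels, predictions, and group names are hypothetical, and dedicated libraries such as Fairlearn or AI Fairness 360 offer richer, better-tested metrics than this minimal version.

```python
from collections import defaultdict


def group_accuracy(y_true, y_pred, groups):
    """Accuracy computed separately for each group label."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        totals[group] += 1
        hits[group] += int(truth == pred)
    return {g: hits[g] / totals[g] for g in totals}


def disparity(per_group):
    """Largest gap in performance between any two groups."""
    values = list(per_group.values())
    return max(values) - min(values)


# Hypothetical binary labels and predictions for two subpopulations.
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 0, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

per_group = group_accuracy(y_true, y_pred, groups)
gap = disparity(per_group)
print(per_group)  # {'A': 0.75, 'B': 0.5}
print(gap)        # 0.25
```

The same pattern applies to molecular classes or disease subtypes by changing what `groups` encodes.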

Essential Research Reagents and Computational Tools

Table 3: Key Research Reagent Solutions for AI Drug Discovery Validation

| Reagent/Tool Category | Specific Examples | Primary Function in RICE Validation | Implementation Considerations |

|---|---|---|---|

| Chemical Structure Encoders | SMILES, SELFIES, Graph Neural Networks | Convert molecular structures into machine-readable formats for model training and prediction | SMILES is simple, but generative models can emit syntactically invalid strings; every SELFIES string decodes to a valid molecule |

| Reaction Databases | USPTO, Reaxys, Pistachio | Provide curated chemical transformations for training metabolic prediction and synthesizability models | Data quality varies significantly; require careful preprocessing and standardization |

| Protein Structure Predictors | AlphaFold, RoseTTAFold | Generate 3D protein structures for target-based drug discovery and binding affinity prediction | Accuracy varies across protein families; confidence metrics crucial for reliability assessment |

| Toxicity Prediction Services | ProTox, DeepTox, ADMET Predictor | Provide benchmark toxicity predictions for model validation and comparative analysis | Different tools cover varying endpoint types; ensemble approaches often improve reliability |

| Synthesizability Assessment | SYBA, SCScore, RAscore | Evaluate synthetic accessibility of AI-generated molecules prior to experimental validation | Scores are relative rather than absolute; require calibration with specific synthetic capabilities |

| Feature Importance Tools | SHAP, LIME, Integrated Gradients | Interpret model predictions by quantifying contribution of input features to output decisions | Different methods may yield varying explanations; multiple approaches recommended for validation |

| Bias Detection Frameworks | AI Fairness 360, Fairlearn | Identify performance disparities across demographic groups or molecular classes | Require careful definition of protected attributes and disparity metrics relevant to context |

| Adversarial Attack Libraries | Advertorch, CleverHans, Foolbox | Generate adversarial examples to test model robustness and identify potential failure modes | Should simulate realistic perturbations rather than purely mathematical constructs |

The RICE Framework provides a comprehensive, structured approach for validating AI-based drug discovery models, addressing critical dimensions of Robustness, Interpretability, Controllability, and Ethicality that collectively determine real-world utility and regulatory acceptability. As AI technologies continue to evolve and integrate more deeply into pharmaceutical research, systematic application of this framework will be essential for ensuring that AI-driven discoveries translate reliably into safe, effective therapeutics.

The comparative analysis presented demonstrates that while current AI models show promising capabilities across the RICE dimensions, significant variation exists in how different approaches address these critical requirements. Models like Metabolite Translator and SynFormer exemplify the principled integration of domain knowledge and practical constraints that characterizes effective AI drug discovery tools [14] [12]. The experimental protocols and research reagents cataloged provide practical resources for implementing rigorous validation practices that align with emerging regulatory guidelines from the FDA, EMA, and WHO [11] [13].

Future advancements in AI for drug discovery will need to continue balancing predictive power with the fundamental requirements encapsulated in the RICE Framework. As noted by regulatory experts, successful AI regulatory compliance requires proactive engagement with regulatory agencies, cross-disciplinary collaboration, and lifecycle management that extends beyond initial model development [11]. By adopting structured validation approaches like the RICE Framework, researchers and drug development professionals can accelerate the translation of AI innovations into transformative therapies while maintaining the rigorous standards required for patient safety and therapeutic efficacy.

The integration of Artificial Intelligence (AI) into drug development represents a paradigm shift, offering unprecedented opportunities to enhance efficiency, accuracy, and speed across the pharmaceutical lifecycle [15]. From identifying novel drug candidates to optimizing clinical trials and monitoring post-market safety, AI technologies are poised to address long-standing inefficiencies in one of the most resource-intensive sectors in healthcare [16]. However, this transformative potential is accompanied by significant regulatory challenges, including concerns about algorithmic transparency, data integrity, model robustness, and clinical validity [17].

Recognizing these challenges, regulatory agencies worldwide are developing frameworks to ensure that AI tools used in critical decision-making processes meet rigorous standards for safety and effectiveness. The U.S. Food and Drug Administration (FDA) and the European Medicines Agency (EMA) have emerged as pivotal actors in shaping the global regulatory landscape for AI in pharmaceuticals [16]. Their evolving guidance documents reflect a concerted effort to balance innovation with patient safety, establishing clear expectations for the validation of AI models throughout the drug development pipeline.

This comparative guide examines the current regulatory expectations from the FDA and EMA regarding AI validation, providing researchers, scientists, and drug development professionals with a structured framework for navigating these complex requirements. By synthesizing the most recent guidance documents, discussion papers, and policy statements, this analysis aims to support the development of robust, compliant AI applications that accelerate the delivery of new therapies to patients.

Comparative Analysis of FDA and EMA Regulatory Frameworks

Foundational Principles and Regulatory Philosophy

The FDA and EMA share common objectives in regulating AI for drug development, notably ensuring patient safety, product quality, and the reliability of evidence submitted to support marketing authorization. However, their regulatory philosophies and implementation approaches reflect distinct institutional traditions and risk-management strategies [16].

The U.S. FDA has adopted a pragmatic, risk-based approach that emphasizes the specific "context of use" (COU) of an AI model [18] [19] [20]. This framework is designed to be adaptable to the rapidly evolving AI landscape, focusing on establishing "model credibility" through a structured assessment process tailored to the model's influence on regulatory decisions and the potential consequences of incorrect outputs [18]. The FDA's guidance is primarily non-binding and recommends early engagement with sponsors to set expectations for AI model validation [19].

The EMA takes a more structured and cautious approach, prioritizing rigorous upfront validation and comprehensive documentation before AI systems are integrated into drug development [16]. The EMA's framework, outlined in its "AI in Medicinal Product Lifecycle Reflection Paper," emphasizes a risk-based approach while maintaining stronger alignment with traditional pharmaceutical regulations and quality-by-design principles [16]. The EMA also reached a significant milestone in March 2025 with its first qualification opinion on an AI methodology, accepting clinical trial evidence generated by an AI tool for diagnosing inflammatory liver disease [16].

Table 1: Core Regulatory Principles and Philosophies

| Aspect | U.S. FDA | European EMA |

|---|---|---|

| Primary Approach | Risk-based, context-specific credibility assessment | Structured, upfront validation with qualified AI methodologies |

| Guidance Status | Draft guidance (January 2025) [18] [19] | Reflection paper (October 2024) with specific qualification opinions [16] |

| Foundation | Risk-based credibility framework centered on "Context of Use" (COU) [18] | Risk-based approach integrated into medicinal product lifecycle [16] |

| Key Emphasis | Establishing model credibility for specific decision-making tasks [20] | Rigorous validation, documentation, and integration with existing GxP systems [16] |

Scope and Applicability

The scope of AI applications covered by FDA and EMA guidance reveals important distinctions in regulatory priorities and focus areas. Both agencies concentrate on AI models that impact patient safety, drug quality, or the reliability of study results, but they differ in their specific exclusions and areas of emphasis [18] [16].

The FDA's draft guidance explicitly excludes AI models used solely for drug discovery or those employed to streamline operational efficiencies that do not impact patient safety, drug quality, or study reliability [18] [20]. This exclusion reflects the FDA's current focus on AI applications that directly support regulatory decision-making for products already in the development pipeline. The guidance applies broadly to AI use in clinical trial design and management, patient evaluation, endpoint adjudication, clinical data analysis, digital health technologies for drug development, pharmacovigilance, pharmaceutical manufacturing, and real-world evidence generation [18].

The EMA's framework takes a broader lifecycle perspective, encompassing AI applications from discovery through post-market surveillance without explicit exclusions for discovery phase applications [16]. This comprehensive scope aligns with the EMA's integrated approach to medicinal product regulation, recognizing that AI tools may have implications across the entire product lifecycle. The agency emphasizes that AI systems used in the context of clinical trials must comply with Good Clinical Practice (GCP) guidelines, with high-impact systems subject to comprehensive assessment during authorization procedures [16].

Risk Classification and Credibility Assessment

Both agencies employ risk-based frameworks to determine the level of scrutiny required for AI validation, but they differ in their specific risk classification methodologies and assessment criteria.

The FDA employs a detailed seven-step, risk-based credibility assessment framework that forms the core of its regulatory approach [18] [19] [20]. This process begins with defining the specific "question of interest" that the AI model will address and precisely delineating its "context of use" [18]. Risk assessment considers two primary factors: "model influence risk" (how much the AI output influences decision-making) and "decision consequence risk" (the potential impact of an incorrect decision on patient safety or product quality) [18]. Models with higher influence and consequence risks require more extensive validation and documentation.
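To make the two-factor idea concrete, the sketch below combines model influence and decision consequence into a single risk tier. The three-level scale, the additive scoring, and the tier cut-offs are assumptions made for illustration; the draft guidance as described here does not prescribe a numeric mapping, and any real classification should be agreed with the agency.

```python
# Hypothetical ordinal scale shared by both risk factors.
LEVELS = {"low": 0, "medium": 1, "high": 2}


def model_risk(influence, consequence):
    """Combine model influence and decision consequence into one tier.

    Illustrative rule only: the tier rises with the sum of the two levels.
    """
    score = LEVELS[influence] + LEVELS[consequence]
    if score <= 1:
        return "low"
    if score == 2:
        return "moderate"
    return "high"


# Example: AI output is the sole basis for a decision that affects patient safety.
print(model_risk("high", "high"))   # high
# Example: AI output is one of several inputs to a low-stakes operational decision.
print(model_risk("low", "low"))     # low
# Example: human-confirmed output feeding a consequential decision.
print(model_risk("low", "high"))    # moderate
```

Writing the rule down explicitly, whatever form it takes, makes it easier to justify why a given model received a given level of validation effort.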

The EMA's risk classification system, while similarly risk-based, places greater emphasis on the intended purpose of the AI system and its impact on critical decision points within the medicinal product lifecycle [16]. High-risk applications include those where AI outputs directly influence patient eligibility for treatments, clinical endpoint adjudication, or safety determinations [16]. The EMA expects comprehensive validation evidence for these high-risk applications, including analytical validation (establishing technical performance), clinical validation (demonstrating correlation with clinical outcomes), and organizational validation (ensuring appropriate governance and workflow integration) [16].

Table 2: Risk Classification and Validation Requirements

| Risk Level | FDA Examples & Requirements [18] [19] | EMA Expectations [16] |

|---|---|---|

| High Risk | AI determines patient risk classification for life-threatening events; fully automated decisions impacting patient safety; comprehensive details on architecture, data, training, validation | AI directly influences patient eligibility or treatment decisions; requires analytical, clinical, and organizational validation; comprehensive documentation and rigorous assessment |

| Moderate Risk | AI identifies manufacturing batches out-of-specification but requires human confirmation; intermediate level of disclosure | AI supports clinical trial site selection or data collection; substantial evidence of performance and robustness |

| Low Risk | AI assists with operational workflows not impacting safety or quality; minimal information may be requested | AI used for literature screening or administrative task automation; focus on data integrity and basic performance metrics |

Documentation and Submission Requirements

Documentation requirements represent a critical component of AI validation, providing regulatory agencies with the evidence needed to assess model credibility and appropriateness for the intended context of use.

The FDA expects sponsors to develop and execute a "credibility assessment plan" that documents how the AI model was developed, trained, evaluated, and monitored [18] [19]. This plan should include a detailed description of the model architecture, data sources and characteristics, training methodologies, validation processes, performance metrics, and approaches to addressing potential biases [18]. For higher-risk models, the FDA may request extensive information covering all aspects of model development and deployment. The guidance recommends that sponsors discuss with the FDA "whether, when, and where" to submit the credibility assessment report, which could be included in a regulatory submission, meeting package, or made available upon request during inspections [19].

The EMA emphasizes comprehensive documentation integrated within the overall marketing authorization application [16]. This includes detailed information about the AI model's development process, training data representativeness, validation results against appropriate benchmarks, and plans for lifecycle management [16]. The EMA places particular importance on the explainability of AI outputs and the clinical relevance of the model's predictions, requiring clear documentation of how the model's outputs relate to clinically meaningful endpoints [16].

Lifecycle Management and Post-Market Monitoring

Both agencies recognize that AI models may evolve over time and require ongoing monitoring and maintenance to ensure continued performance and suitability for their intended use.

The FDA's draft guidance specifically addresses "lifecycle maintenance" for AI models, noting that changes in input data or deployment environments may affect model performance [18] [19]. Sponsors are expected to maintain detailed lifecycle maintenance plans as part of their pharmaceutical quality systems, with summaries included in marketing applications [19]. These plans should describe activities for monitoring model performance, detecting "model drift" or performance degradation, and implementing appropriate retraining or revalidation procedures when needed [18]. Certain changes impacting model performance may need to be reported to the FDA in accordance with existing regulatory requirements for post-approval changes [19].

The EMA similarly emphasizes continuous monitoring and quality management throughout the AI system's lifecycle [16]. The agency expects robust processes for tracking model performance in real-world settings, detecting data drift or concept drift, and implementing version control and change management procedures [16]. The EMA's framework aligns with existing pharmacovigilance requirements, treating significant changes to AI models as potential modifications to the medicinal product's evidence base that may require regulatory notification or approval [16].
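One common way to monitor for data drift is the Population Stability Index (PSI), which compares the binned distribution of a feature in production against a reference sample. The sketch below is a minimal pure-Python version; the equal-width binning, the `eps` floor for empty bins, and the synthetic data are assumptions, and neither agency is described here as requiring this particular statistic.

```python
import math


def psi(reference, current, n_bins=10, eps=1e-6):
    """Population Stability Index of `current` against `reference`.

    Bins are equal-width over the reference range; values outside that
    range are counted in the first or last bin.
    """
    lo, hi = min(reference), max(reference)
    if hi == lo:
        raise ValueError("reference sample has zero range")
    width = (hi - lo) / n_bins

    def fractions(values):
        counts = [0] * n_bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), n_bins - 1)] += 1
        # Floor empty bins at eps so the logarithm stays finite.
        return [max(c / len(values), eps) for c in counts]

    ref, cur = fractions(reference), fractions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


# Synthetic feature values: a reference sample and a shifted production sample.
reference = [i / 100 for i in range(1000)]
shifted = [v + 2.0 for v in reference]

print(psi(reference, reference))  # 0.0
print(psi(reference, shifted))
```

A lifecycle maintenance plan would typically fix the alert threshold in advance and state what action (investigation, retraining, revalidation) a breach triggers.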

Technical Requirements for AI Validation

Data Management and Quality Standards

High-quality data forms the foundation of credible AI models, and both agencies establish rigorous expectations for data management practices throughout the model lifecycle.

The FDA emphasizes comprehensive data characterization, including detailed descriptions of data sources, collection methods, cleaning procedures, and annotation protocols [18]. The guidance highlights the importance of data quality, diversity, and relevance to the intended patient population, with particular attention to identifying and mitigating potential biases in training datasets [18]. Sponsors should provide evidence of appropriate segregation between training, tuning, and validation datasets so that information does not leak between them, overfitting can be detected, and performance is assessed independently [18]. For models using real-world data, the FDA expects thorough documentation of data provenance and processing transformations [19].
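Segregation between datasets is easiest to defend when the split is made at the level of a grouping key (for example a patient or compound identifier) so that related records never straddle two sets. The sketch below assigns each group to a split by hashing its identifier, then checks that no group appears in more than one split. The function names, the 80/10/10 fractions, and the `patient` key are hypothetical.

```python
import hashlib
from collections import defaultdict


def assign_split(group_id, fractions=(0.8, 0.1, 0.1)):
    """Deterministically map a group identifier to train, tune, or validation."""
    digest = hashlib.sha256(group_id.encode("utf-8")).hexdigest()
    u = int(digest[:8], 16) / 0x100000000  # uniform value in [0, 1)
    if u < fractions[0]:
        return "train"
    if u < fractions[0] + fractions[1]:
        return "tune"
    return "validation"


def split_records(records, key):
    """Place every record in the split chosen for its group."""
    splits = defaultdict(list)
    for record in records:
        splits[assign_split(record[key])].append(record)
    return dict(splits)


def no_leakage(splits, key):
    """True if no group identifier appears in more than one split."""
    seen = set()
    for rows in splits.values():
        groups = {row[key] for row in rows}
        if groups & seen:
            return False
        seen |= groups
    return True


# Hypothetical records: several measurements per patient.
records = [{"patient": f"P{i % 7}", "value": i} for i in range(50)]
splits = split_records(records, "patient")
print({name: len(rows) for name, rows in splits.items()})
print("no leakage:", no_leakage(splits, "patient"))
```

Because the assignment depends only on the identifier, rerunning the split after new data arrive keeps existing groups where they were, which simplifies documenting data provenance.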

The EMA's requirements align closely with the established data integrity principles known as ALCOA+: data should be Attributable, Legible, Contemporaneous, Original, and Accurate, with the "+" adding Complete, Consistent, Enduring, and Available [16]. The agency emphasizes the importance of dataset representativeness, requiring that training and validation data adequately reflect the target population and use environments [16]. Metadata capture is particularly emphasized, including information about data collection conditions, preprocessing steps, and annotation criteria, to enable proper interpretation and reuse of data assets [16] [17].

Data Management Workflow for AI Validation: This diagram illustrates the sequential process for managing data throughout the AI model lifecycle, from initial collection through comprehensive documentation.

Model Development and Performance Evaluation

Robust model development and rigorous performance evaluation are essential components of AI validation, with both agencies establishing detailed expectations for these processes.

The FDA recommends comprehensive model description including architecture details, feature selection processes, optimization methods, and tuning procedures [18]. Model evaluation should include appropriate performance metrics tailored to the context of use, with testing against independent datasets to demonstrate generalizability [18]. The guidance emphasizes the importance of identifying and documenting model limitations, potential failure modes, and approaches to quantifying uncertainty in predictions [18]. For models with customizable features or adaptive components, sponsors should provide detailed descriptions of the technical elements that enable and control these capabilities [21].

The EMA places strong emphasis on clinical validity and relevance, requiring demonstration that model outputs correlate with clinically meaningful endpoints [16]. Performance evaluation should include appropriate benchmarking against established methods or clinical standards, with particular attention to robustness testing across relevant subpopulations and clinical scenarios [16]. The agency also emphasizes the importance of model explainability, especially for high-risk applications, requiring that developers provide sufficient information to enable healthcare professionals to understand and appropriately interpret model outputs [16].

Table 3: Essential Research Reagent Solutions for AI Validation

| Reagent Category | Specific Examples | Function in AI Validation |

|---|---|---|

| Reference Standards | Ground truth datasets, Benchmarking corpora, Qualified medical image archives | Provide validated reference points for training and evaluating AI model performance [17] |

| Data Annotation Tools | Specialized labeling software, Clinical terminology standards, Structured annotation frameworks | Enable consistent, accurate labeling of training data with proper metadata capture [16] |

| Model Architecture Libraries | TensorFlow, PyTorch, Scikit-learn, MONAI | Provide standardized implementations of algorithms and neural network architectures [17] |

| Bias Detection Frameworks | AI Fairness 360, Fairlearn, Aequitas | Identify and quantify potential biases in training data and model outputs [18] |

| Performance Validation Suites | Model cards, Benchmarking datasets (e.g., MoleculeNet), Evaluation metrics | Standardize assessment of model performance, robustness, and generalizability [17] |

Transparency and Explainability Requirements

Transparency and explainability represent critical considerations for AI validation, particularly for models supporting high-stakes regulatory decisions.

The FDA emphasizes methodological transparency rather than mandating specific technical approaches to explainability [18]. The guidance acknowledges the challenges in interpreting complex AI models but stresses the importance of providing sufficient information to enable regulatory assessment of model reliability [18] [19]. For higher-risk applications, the FDA may expect more detailed information about how models reach their conclusions, potentially including approaches such as feature importance analyses or example-based explanations [21]. The agency also encourages the use of "model cards" or similar frameworks to communicate key model characteristics, performance metrics, and limitations in a standardized format [21].
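A model card can be kept as a small structured record that is versioned alongside the model. The sketch below is one possible shape, not a schema defined by either agency: the field names, the example values, and the `missing_fields` completeness check are all assumptions for illustration.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ModelCard:
    """Hypothetical minimal model card; field names are illustrative."""
    name: str
    version: str
    context_of_use: str
    training_data: str
    metrics: dict = field(default_factory=dict)
    limitations: list = field(default_factory=list)

    def to_json(self):
        """Serialise the card for inclusion in a documentation package."""
        return json.dumps(asdict(self), indent=2, sort_keys=True)

    def missing_fields(self):
        """Names of fields left empty, as a simple completeness check."""
        return [k for k, v in asdict(self).items() if not v]


# Placeholder values only; none of these describe a real model.
card = ModelCard(
    name="example-tox-classifier",
    version="0.1.0",
    context_of_use="Prioritising compounds for in vitro toxicity assays",
    training_data="Internal assay results (placeholder description)",
    metrics={"roc_auc": 0.0},
    limitations=["Not evaluated outside the training chemical space"],
)
print(card.to_json())
print(card.missing_fields())  # []
```

The fraction of populated fields is one simple way to approximate a transparency index of the kind listed among the ethicality metrics.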

The EMA places stronger explicit emphasis on explainability, particularly for models that directly influence clinical decisions [16]. The agency expects that AI systems should be "transparent and testable," with outputs that can be interpreted and understood by relevant experts [16]. This includes requirements for appropriate visualization of model outputs, clear documentation of limitations and appropriate use cases, and provision of information that helps users understand the basis for model predictions [16]. The EMA's reflection paper suggests that for certain high-risk applications, black-box models may be unacceptable without additional validation approaches to ensure interpretability [16].

Compliance Strategies and Implementation Frameworks

Pre-Submission Engagement and Regulatory Interaction

Early and strategic engagement with regulatory agencies represents a critical success factor for AI-based drug development programs.

The FDA strongly encourages early engagement through various mechanisms including Q-Submission meetings, INTERACT meetings, and model-informed drug development (MIDD) discussions [19] [20]. These interactions provide opportunities to align on the appropriateness of proposed credibility assessment activities, identify potential challenges, and establish expectations for the level of evidence needed to support the proposed context of use [19]. The FDA recommends discussing "whether, when, and where" to submit credibility assessment reports, recognizing that submission requirements may vary based on model risk and application type [19].

The EMA offers similar opportunities for early dialogue through its innovation task forces and scientific advice procedures [16]. These interactions are particularly valuable for novel AI methodologies without established regulatory precedents, allowing sponsors to obtain agency feedback on validation strategies and evidence requirements [16]. The EMA has also established specific procedures for qualifying novel drug development tools, including AI methodologies, which can provide regulatory certainty before significant investment in implementation [16].

Quality Management and Governance Structures

Robust quality management and governance structures provide the foundation for sustainable AI compliance throughout the product lifecycle.

The FDA's expectations align with existing quality system regulations, emphasizing design controls, documentation practices, and change management procedures [21]. The guidance suggests that AI model development should incorporate principles of Good Machine Learning Practice (GMLP), including representative data collection, human-centered design practices, and comprehensive performance evaluation [16]. Manufacturers should maintain detailed design history files documenting model development decisions, with particular attention to risk management activities addressing AI-specific hazards such as data drift, overfitting, and performance degradation in real-world settings [21].

The EMA emphasizes pharmaceutical quality systems that encompass AI tools used in manufacturing, quality control, and clinical development [16]. This includes established change management procedures, version control, and comprehensive documentation practices integrated with existing quality management systems [16]. The agency expects clear accountability structures and governance frameworks defining roles and responsibilities for AI system monitoring, maintenance, and decision-making throughout the product lifecycle [16].

AI Governance and Quality Management Framework: This diagram outlines the key components of a comprehensive governance structure for AI systems in drug development.

Lifecycle Management and Change Control

Effective lifecycle management ensures that AI models remain credible and fit-for-purpose as they evolve in response to new data and changing environments.

The FDA recommends detailed "lifecycle maintenance plans" that describe activities for monitoring model performance, detecting data drift or concept drift, and implementing appropriate retraining or recalibration procedures [18] [19]. These plans should be commensurate with the model's risk profile and complexity, with higher-risk applications warranting more rigorous monitoring and control mechanisms [19]. The FDA acknowledges the similarity between lifecycle maintenance plans and Predetermined Change Control Plans (PCCPs) established for AI-enabled medical devices, suggesting that sponsors may benefit from considering similar approaches for drug-related AI applications [19].

The EMA's approach to lifecycle management aligns with established procedures for post-authorization changes to medicinal products [16]. Significant modifications to AI models that impact their output or use in critical decision-making may require regulatory notification or approval depending on the potential impact on product quality, safety, or efficacy [16]. The agency expects robust version control, comprehensive documentation of model changes, and clear criteria for determining when model updates warrant additional validation or regulatory review [16].

The regulatory landscape for AI validation in drug development is rapidly evolving, with both the FDA and EMA establishing structured frameworks to ensure the credibility and reliability of AI tools supporting critical decisions. While differences exist in their specific approaches and emphasis, both agencies share common foundational principles centered on risk-based assessment, comprehensive validation, and lifecycle management.

For researchers, scientists, and drug development professionals, successful navigation of this landscape requires a proactive, strategic approach that integrates regulatory considerations throughout the AI development process. Key success factors include:

- Early and Continuous Engagement: Regular dialogue with regulatory agencies to align on validation strategies and evidence requirements [19] [20]

- Risk-Proportionate Validation: Tailoring validation activities to the model's potential impact on patient safety and product quality [18] [16]

- Comprehensive Documentation: Maintaining detailed records of model development, validation, and performance monitoring [18] [16]

- Robust Governance: Implementing clear accountability structures and quality management systems for AI lifecycle management [16] [21]

- Strategic Intellectual Property Management: Balancing patent protection with regulatory transparency requirements, particularly for innovative AI methodologies [18]

As both agencies continue to refine their approaches based on accumulating experience with AI applications, drug development professionals should anticipate increasing regulatory specificity and potentially greater convergence between FDA and EMA expectations. By establishing strong foundations in current requirements while maintaining flexibility for future evolution, organizations can position themselves to leverage AI technologies effectively while ensuring compliance and maintaining patient safety as their highest priority.

The Critical Role of High-Quality, Diverse, and Unbiased Training Data

The adoption of Artificial Intelligence (AI) represents a paradigm shift in pharmaceutical research, offering the potential to dramatically accelerate timelines and reduce the immense costs traditionally associated with bringing a new drug to market. Traditional drug discovery can span over a decade and cost more than $2 billion, with nearly 90% of drug candidates failing due to insufficient efficacy or safety concerns [22]. However, the performance and reliability of AI models are fundamentally constrained by the quality of their training data. Models trained on biased, sparse, or noisy data can produce unrealistic molecular outputs or inaccurate target predictions, ultimately undermining the drug discovery process and wasting valuable resources [23] [24]. This guide objectively compares the performance of AI models built on different data foundations and details the experimental protocols necessary for their rigorous validation, framing this examination within the broader thesis that data quality is the most critical determinant of success in AI-based drug discovery.

The Centrality of Data Quality in AI Model Performance

Defining Data Quality in a Biological Context

In AI-driven drug discovery, "data quality" encompasses several interdependent characteristics: completeness, diversity, standardization, and accuracy. High-quality data must be generated under controlled, reproducible conditions to minimize experimental noise and technical artifacts that can mislead AI models [25]. Furthermore, the data must be representative of the broad biological and chemical space to which the model will be applied; this includes diversity in cell types, protein families, disease mechanisms, and patient populations to ensure model generalizability and mitigate bias [24].

Performance Comparison: High-Quality vs. Conventional Datasets

The table below summarizes a comparative analysis of AI model performance when trained on high-quality, fit-for-purpose datasets versus conventional public data sources.

Table 1: Performance Comparison of AI Models on Different Data Types

| Performance Metric | Models Trained on High-Quality, Standardized Data | Models Trained on Conventional Public Datasets |
|---|---|---|
| Target Identification Accuracy | Improved identification of novel, druggable targets with stronger genetic evidence [24] [22]. | Higher risk of false positives and focus on well-established protein families (e.g., kinases, GPCRs) [24]. |
| Molecular Generation Success | Generation of novel molecules with optimized, balanced profiles for efficacy, safety, and synthesizability [23]. | Generation of molecules that may be invalid, difficult to synthesize, or have unfavorable ADMET properties [23]. |
| Generalizability | Higher likelihood of performance across diverse biological contexts and patient populations [25]. | Performance may be brittle and limited to specific biological contexts represented in the training data [24]. |
| Clinical Translation (reported for AI-discovered vs. traditionally discovered drugs) | AI-discovered drugs reported to have an 80-90% success rate in Phase I trials [22]. | Traditionally discovered drugs have a 40-65% success rate in Phase I trials [22]. |
| Representative Dataset | Recursion's RxRx3-core (standardized HUVEC cell microscopy) [25]. | Public datasets like GenBank, ChEMBL, PubMed [25]. |

Experimental Benchmarking for Data and Model Validation

Core Principles of Experimental Benchmarking

Experimental benchmarking is a critical methodology for validating AI models, wherein the predictions of a non-experimental (in silico) model are compared against results from controlled laboratory experiments (the gold standard) [26]. This process allows researchers to calibrate the bias and quantify the accuracy of their AI-driven approaches. The most instructive benchmarking studies are conducted on a large scale and compare in silico and experimental work that investigates the same outcome in the same biological context [26].

Protocol for Benchmarking an AI Target Identification Model

This protocol provides a framework for validating an AI model designed to discover novel disease-associated protein targets.

Step 1: Model Training and Initial Prediction. Train the AI model on a curated dataset integrating multiomics data (e.g., genomics, proteomics), biomedical literature, and protein structure information. Use the trained model to generate a ranked list of high-confidence, novel protein targets predicted to be involved in a specific disease pathway [24] [22].

Step 2: In Silico Cross-Validation. Perform internal validation using computational methods. This includes:

- Genetic Evidence Check: Use resources like genome-wide association studies (GWAS) to assess if the predicted targets have prior genetic support. The presence of genetic evidence can increase the odds of a target succeeding in clinical trials by 80% [24].

- Druggability Prediction: Employ structure-based models (e.g., docking simulations) to predict whether the protein has a viable binding pocket for a small molecule [24].

- Pathway Analysis: Ensure the target is placed in a biologically plausible disease pathway [24].

Step 3: Experimental Validation in the Wet Lab. The top-ranked predictions from the in silico phase must be tested empirically. A key approach is target deconvolution using CRISPR-Cas9 gene editing [24] [25].

- Cell Culture: Use a relevant human cell line (e.g., HUVEC cells as used in the RxRx3 dataset) for the disease context [25].

- Genetic Perturbation: Perform CRISPR-Cas9 knockouts of the AI-predicted target genes.

- Phenotypic Screening: Use high-content microscopy and automated assays to capture the cellular phenotypes resulting from the gene knockouts.

- Outcome Measurement: Compare the observed phenotypes against known disease-associated phenotypes. A successful prediction is one where the knockout produces a phenotypic change that ameliorates the disease model phenotype [25].
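One way to make the outcome measurement quantitative is to ask how far a knockout moves the cellular profile from the disease state back toward the healthy state. The sketch below is an illustrative assumption rather than the scoring used in the cited work: the function name `rescue_score`, the three-feature phenotype vectors, and the gene labels are all hypothetical, and real high-content screens use far higher-dimensional embeddings.

```python
def rescue_score(healthy, disease, knockout):
    """Project the knockout-induced shift onto the disease-to-healthy axis.

    ~1.0: the knockout restores the healthy profile.
    ~0.0: the knockout has no effect along the disease axis.
    < 0 : the knockout moves cells further away from healthy.
    """
    axis = [h - d for h, d in zip(healthy, disease)]
    shift = [k - d for k, d in zip(knockout, disease)]
    norm_sq = sum(a * a for a in axis)
    if norm_sq == 0:
        raise ValueError("healthy and disease profiles are identical")
    return sum(a * s for a, s in zip(axis, shift)) / norm_sq


# Hypothetical, already-normalized phenotype features per condition.
healthy = [1.0, 0.2, 0.5]
disease = [0.4, 0.8, 0.5]
ko_gene_a = [0.85, 0.35, 0.5]  # moves most of the way back to healthy
ko_gene_b = [0.4, 0.8, 0.9]    # changes a feature unrelated to the disease axis

score_a = rescue_score(healthy, disease, ko_gene_a)
score_b = rescue_score(healthy, disease, ko_gene_b)
print(round(score_a, 2), round(score_b, 2))
```

A projection score of this kind ignores off-axis changes, so a knockout that rescues the disease phenotype while inducing an unrelated toxic phenotype would still score well; in practice it would be paired with a check on the residual shift.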

Step 4: Bias and Performance Calibration. Compare the experimental results with the AI model's original predictions. Calculate metrics such as the false discovery rate (FDR) and precision to quantify the model's performance and calibrate its bias for future iterations [26]. This step closes the loop, informing refinements to both the AI model and the training data strategy.
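The calibration metrics in Step 4 reduce to simple counts once each tested prediction has been labeled as experimentally confirmed or not. The sketch below assumes that every predicted target was actually tested in the wet lab; the gene identifiers are placeholders, and the FDR computed here is the empirical proportion of false discoveries among tested predictions, FP / (TP + FP).

```python
def benchmark(predicted, validated):
    """Compare AI-predicted targets with experimentally confirmed ones."""
    predicted, validated = set(predicted), set(validated)
    tp = len(predicted & validated)   # predicted and confirmed
    fp = len(predicted - validated)   # predicted but not confirmed
    if tp + fp == 0:
        raise ValueError("no predictions to evaluate")
    return {
        "true_positives": tp,
        "false_positives": fp,
        "precision": tp / (tp + fp),
        "fdr": fp / (tp + fp),
    }


# Placeholder identifiers standing in for AI-prioritized targets.
predicted_targets = ["GENE_A", "GENE_B", "GENE_C", "GENE_D", "GENE_E"]
# Targets whose knockout produced the expected phenotype in the lab.
confirmed_targets = ["GENE_A", "GENE_C", "GENE_E"]

result = benchmark(predicted_targets, confirmed_targets)
print(result)
```

Tracking these two numbers across model versions gives a direct view of whether changes to the training data are reducing the share of predictions that fail experimental validation.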

Figure: Workflow of the complete benchmarking process, from data integration to model refinement.

Successful experimental benchmarking relies on a suite of specific research reagents and computational tools. The table below details key solutions for the validation workflow described above.

Table 2: Key Research Reagent Solutions for Experimental Validation

| Reagent / Resource | Function in Validation | Application Example |
|---|---|---|
| CRISPR-Cas9 Gene Editing Systems | Precisely knocks out AI-predicted target genes in cell lines to study functional loss [24] [25]. | Validating the essentiality of a novel protein target by observing the phenotypic consequence of its knockout [25]. |
| High-Content Screening (HCS) Microscopy | Automatically captures high-resolution images of perturbed cells, generating rich, quantitative phenotypic data [25]. | Generating datasets like RxRx3-core to train and benchmark AI models on cellular morphology changes [25]. |
| Curated Public Datasets (e.g., RxRx3-core) | Provides standardized, high-quality public benchmarks for training and testing microscopy-based AI models [25]. | Serving as a compact, accessible benchmark (18 GB) for evaluating zero-shot drug-target interaction prediction [25]. |
| Protein Structure Prediction Models (e.g., AlphaFold) | Provides high-quality 3D protein structures for targets where lab-resolved structures are unavailable, enabling structure-based drug design [24] [22]. | Predicting binding pockets and performing molecular docking simulations on novel AI-prioritized targets [22]. |
| Pharmacogenomic Databases (e.g., UK Biobank, TCGA) | Provides large-scale genetic and clinical data to uncover correlations between targets and disease, strengthening genetic evidence [24] [25]. | Assessing if a novel AI-predicted target has links to disease in human population data, bolstering validation confidence [24]. |

The transformative potential of AI in drug discovery is inextricably linked to the quality, diversity, and lack of bias in its underlying training data. As demonstrated through performance comparisons and experimental benchmarking protocols, models built on fit-for-purpose, standardized data consistently outperform those reliant on noisy or limited public datasets. The transition from a model-centric to a data-centric AI approach is therefore critical. This entails investing in the generation of high-quality, multimodal data, rigorously validating model outputs against biological experiments, and actively addressing data biases. By prioritizing the integrity of the data foundation, researchers can fully leverage AI to illuminate novel biological mechanisms, design safer and more effective therapeutics, and ultimately accelerate the delivery of new medicines to patients.

The integration of Artificial Intelligence (AI) into drug discovery has ushered in a new era of potential, promising to accelerate target identification, compound screening, and optimization of therapeutic candidates. However, the inherent opacity of many sophisticated AI models, particularly deep learning systems, poses a significant "black box" problem that limits their interpretability and acceptance within the pharmaceutical research community [27]. In high-stakes, regulated environments like drug development, a perfect prediction means little if the reasoning behind it remains unclear [28]. Explainable AI (XAI) has therefore emerged as a critical field, aiming to bridge the gap between powerful AI predictions and the human-understandable rationale needed for scientific validation, trust, and regulatory acceptance [27] [29].

The challenge extends beyond mere technical performance. In highly regulated environments such as submissions to the FDA or EMA, explainability is not a "nice to have" but a prerequisite for acceptance [28]. Regulatory agencies expect AI-driven decisions to be transparent, auditable, and scientifically justified. When a model flags a compound as high-risk, reviewers must understand the reasoning in terms they recognize (such as mechanism of action, toxicity pathways, or target interactions), not just a probability score [28]. This review will objectively compare the performance and methodologies of various XAI approaches, framing the discussion within the broader thesis of validating AI-based drug discovery models.

Core Concepts: From Black Boxes to Glass Box Models

In AI-driven drug discovery, not all models are created equal when it comes to transparency. The fundamental distinction lies between "black box" and "glass box" (Explainable AI) models.

Traditional "Black Box" Models: These models, which can include complex deep neural networks and ensemble methods, can achieve outstanding predictive accuracy. However, their internal decision-making process is hidden from the user [28]. They deliver outputs without showing the reasoning behind them, much like receiving a lab result with no explanation of the methodology used to obtain it. This lack of transparency creates significant barriers to their adoption in scientific and regulated environments.

Explainable AI (XAI) Models: These are built with methods that make their inner workings more transparent and can explain why a specific prediction or recommendation was made [28]. XAI helps scientists validate results, detect potential biases, and build trust in the system. The overarching goal of XAI is aligned with the RICE principles—Robustness, Interpretability, Controllability, and Ethicality—which are increasingly seen as foundational for responsible AI in healthcare [30].

Table 1: Core Objectives of AI Alignment (RICE) in Drug Discovery

| Objective | Description | Significance in Drug Discovery |
|---|---|---|
| Robustness | The capacity of an AI system to maintain stability and dependability amid uncertainties or adversarial attacks [30]. | Ensures model reliability across diverse chemical spaces and biological contexts. |
| Interpretability | The ability to provide clear explanations or reasoning for decisions, facilitating user comprehension [30]. | Enables scientists to validate predictions against domain knowledge and generate testable hypotheses. |
| Controllability | The ability to guide and constrain model behavior to align with human intentions. | Prevents the generation of unsafe or non-synthesizable compounds. |
| Ethicality | Ensuring model decisions are fair, unbiased, and respect human values and well-being. | Mitigates biases in data or algorithms that could lead to unfair treatment outcomes or skewed research [30]. |

Comparative Analysis of XAI Techniques and Model Performance

A variety of XAI techniques have been developed to address the black box problem, each with distinct methodologies, applications, and performance characteristics. The following table summarizes prominent approaches and their experimental performance in benchmark drug discovery tasks.

Table 2: Performance Comparison of Explainable AI Techniques on Molecular Property Prediction

| XAI Technique | Model Category | Key Methodology | Reported Performance (AUC/Accuracy) | Primary Application in Drug Discovery |
|---|---|---|---|---|
| Concept Whitening (CW) on GNNs [31] | Self-Interpretable | Aligns latent space axes with human-defined concepts (e.g., molecular descriptors) to identify relevant structural parts. | Classification Performance Improvement on MoleculeNet datasets [31]. | Molecular property prediction, QSAR models. |
| SHapley Additive exPlanations (SHAP) [28] [27] | Post-hoc Model-Agnostic | Uses cooperative game theory to quantify each feature's marginal contribution to a prediction. | N/A (Feature importance quantification) | Biomarker prioritization, patient stratification, ADMET prediction. |
| Local Interpretable Model-agnostic Explanations (LIME) [27] | Post-hoc Model-Agnostic | Approximates a black-box model locally with an interpretable model (e.g., linear classifier) to explain individual predictions. | N/A (Local explanation fidelity) | Explaining individual compound predictions for chemists. |
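The game-theoretic idea behind SHAP can be illustrated by computing exact Shapley values for a very small model. The sketch below is a brute-force illustration and does not use the SHAP library or its approximation algorithms: it averages each feature's marginal contribution over every feature ordering, which is feasible only for a handful of features. The scoring function `toy_toxicity_score`, its coefficients, and the feature names are hypothetical.

```python
from itertools import permutations


def shapley_values(predict, instance, baseline):
    """Exact Shapley values via all feature orderings.

    Features not yet added to the coalition keep their baseline value.
    The cost grows factorially with the number of features.
    """
    names = list(instance)
    totals = {name: 0.0 for name in names}
    orderings = list(permutations(names))
    for order in orderings:
        current = dict(baseline)
        previous = predict(current)
        for name in order:
            current[name] = instance[name]
            updated = predict(current)
            totals[name] += updated - previous  # marginal contribution
            previous = updated
    return {name: total / len(orderings) for name, total in totals.items()}


def toy_toxicity_score(x):
    # Hypothetical model with an interaction between logP and a nitro group.
    return (0.5 * x["logp"]
            + 0.25 * x["aromatic_rings"]
            + 0.5 * x["logp"] * x["nitro_group"])


instance = {"logp": 4.0, "aromatic_rings": 2.0, "nitro_group": 1.0}
baseline = {"logp": 0.0, "aromatic_rings": 0.0, "nitro_group": 0.0}

phi = shapley_values(toy_toxicity_score, instance, baseline)
print(phi)
```

The attributions sum to the difference between the prediction for the compound and the prediction for the baseline, and the interaction term is split evenly between the two features that produce it. This additivity is what makes Shapley-based explanations convenient to audit.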

Experimental Protocol for Evaluating Self-Interpretable GNNs with Concept Whitening

The adaptation of Concept Whitening (CW) for Graph Neural Networks (GNNs) represents a move towards inherently interpretable models, rather than applying explanations post-hoc. The detailed experimental methodology, as outlined in research, is as follows [31]:

- Dataset and Benchmarking: Models are trained and evaluated on several public benchmark datasets from MoleculeNet (e.g., for toxicity or hydrophobicity prediction). This provides a standardized ground for comparison.

- Model Architecture and Training:

  - Base GNNs: Popular spatial convolutional GNN architectures are used as the backbone, including Graph Convolutional Networks (GCNs), Graph Attention Networks (GATs), and Graph Isomorphism Networks (GINs).

  - Integration of CW: The CW module is added to the network. This module is designed to align the axes of the network's latent space with pre-defined molecular concepts (e.g., molecular weight, polarity, or presence of specific functional groups).

  - Training Objective: The model is trained not only to correctly predict the molecular property but also to organize its internal representations according to the supplied concepts.

- Interpretation and Evaluation: