Navigating the Lead Optimization Pipeline: Key Challenges and Advanced Solutions for Drug Discovery


Abstract

This article provides a comprehensive overview of the critical challenges and state-of-the-art solutions in the lead optimization pipeline for drug discovery professionals. It explores the foundational goals of balancing efficacy and safety, details methodological advances such as AI and structure-based design, addresses common troubleshooting scenarios for ADMET properties, and outlines rigorous validation frameworks for candidate selection. By bringing these four themes together, the article serves as a strategic guide for researchers and scientists aiming to progress lead compounds to viable clinical candidates more efficiently and with a higher success rate.

Defining Lead Optimization: Core Objectives and Critical Hurdles in Drug Discovery

Troubleshooting Guide: Common Lead Optimization Challenges

#1 Poor Solubility and Permeability

Problem: Lead compound shows excellent target binding in biochemical assays but poor cellular activity due to low solubility or membrane permeability.

Troubleshooting Steps:

- Measure key physicochemical properties: Calculate cLogP, polar surface area, and hydrogen bond donors/acceptors

- Perform in vitro assays: Use Caco-2 cell monolayers for permeability assessment; shake-flask method for solubility

- Structural modifications:

- Introduce ionizable groups or reduce lipophilicity if cLogP > 5

- Reduce polar surface area if it exceeds 140 Ų, and trim rotatable bonds where possible

- Consider prodrug strategies for problematic scaffolds
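The property checks above can be sketched as a small triage function. This is a minimal illustration, not a validated filter: it assumes the descriptors have already been computed elsewhere, the `PhysChem` and `flag_liabilities` names are hypothetical, the cLogP and PSA cut-offs come from the steps above, the donor/acceptor limits follow Lipinski's Rule of Five, and the rotatable-bond limit of 10 is an assumed default.

```python
from dataclasses import dataclass


@dataclass
class PhysChem:
    """Pre-computed descriptors for one compound (hypothetical container)."""
    clogp: float
    tpsa: float            # polar surface area in square angstroms
    hbd: int               # hydrogen bond donors
    hba: int               # hydrogen bond acceptors
    rotatable_bonds: int


def flag_liabilities(p: PhysChem, max_rotatable_bonds: int = 10) -> list:
    """Return human-readable flags for properties outside the usual ranges."""
    flags = []
    if p.clogp > 5:
        flags.append("cLogP > 5: reduce lipophilicity or add an ionizable group")
    if p.tpsa > 140:
        flags.append("PSA > 140 Å²: reduce polar surface area")
    if p.hbd > 5:
        flags.append("more than 5 hydrogen bond donors")
    if p.hba > 10:
        flags.append("more than 10 hydrogen bond acceptors")
    # The rotatable-bond limit is an assumed default, not a value from the text.
    if p.rotatable_bonds > max_rotatable_bonds:
        flags.append(f"more than {max_rotatable_bonds} rotatable bonds")
    return flags


# Invented example values for a lipophilic, flexible lead.
lead = PhysChem(clogp=5.8, tpsa=152.0, hbd=3, hba=9, rotatable_bonds=12)
lead_flags = flag_liabilities(lead)
for flag in lead_flags:
    print(flag)
```

A compound that returns an empty list is not guaranteed to be soluble or permeable; the flags only indicate where structural changes are most likely to help.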

Validation Experiment:

- Measure solubility in biologically relevant buffers (PBS, FaSSIF)

- Confirm cellular activity restoration in cell-based assays with equivalent target engagement

#2 Metabolic Instability

Problem: Compound shows promising potency but rapid clearance in microsomal stability assays.

Troubleshooting Steps:

- Identify metabolic soft spots: Use liver microsomal incubation with LC-MS/MS analysis

- Employ metabolic stabilization strategies:

- Block or substitute labile functional groups (e.g., N-dealkylation sites)

- Introduce deuterium at metabolic hot spots

- Modify steric environment around susceptible sites

- Validate improvements: Compare intrinsic clearance in human liver microsomes

Key Parameters to Monitor:

- Half-life (t₁/₂) in liver microsomes and hepatocytes

- Intrinsic clearance (CLint) values

- Metabolite identification
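As a worked sketch of how half-life and intrinsic clearance are commonly derived from a microsomal depletion time course: fit ln(% remaining) against time, take the negative slope as the first-order rate constant k, then compute t₁/₂ = ln 2 / k and CLint = k / (protein concentration). The function names are hypothetical and the data are synthetic and noise-free; a protein concentration of 0.5 mg/mL is assumed.

```python
import math


def depletion_rate_constant(times_min, pct_remaining):
    """Least-squares slope of ln(% remaining) vs time, returned as positive k (1/min)."""
    ys = [math.log(p) for p in pct_remaining]
    n = len(times_min)
    mean_t = sum(times_min) / n
    mean_y = sum(ys) / n
    sxy = sum((t - mean_t) * (y - mean_y) for t, y in zip(times_min, ys))
    sxx = sum((t - mean_t) ** 2 for t in times_min)
    return -sxy / sxx


def clint_ul_min_mg(k_per_min, protein_mg_per_ml):
    """CLint = k / protein concentration, converted from mL to µL."""
    return k_per_min * 1000.0 / protein_mg_per_ml


# Synthetic data generated from k = 0.0231 1/min (about a 30-minute half-life).
times = [0, 5, 15, 30, 60]
pct_remaining = [100.0 * math.exp(-0.0231 * t) for t in times]

k = depletion_rate_constant(times, pct_remaining)
t_half = math.log(2) / k
clint = clint_ul_min_mg(k, protein_mg_per_ml=0.5)
print(f"k = {k:.4f} 1/min, t1/2 = {t_half:.1f} min, CLint = {clint:.1f} uL/min/mg")
```

With real data, points below the assay's quantification limit or outside the log-linear phase are usually excluded before fitting.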

#3 Off-Target Toxicity

Problem: Lead compound shows unexpected cytotoxicity or activity against pharmacologically related off-targets.

Troubleshooting Steps:

- Profile against common antitargets: Screen against hERG, CYP enzymes, and related gene family members

- Apply structural insights:

- Analyze binding mode differences between primary target and off-targets

- Introduce selectivity-enhancing modifications guided by structural biology

- Implement early safety pharmacology:

- hERG binding assay (patch clamp for confirmed binders)

- Phospholipidosis potential assessment

- Genotoxicity screening (Ames test)

Acceptance Criteria:

- >30-fold selectivity over related targets

- IC₅₀ > 30 μM for hERG binding

- Negative in Ames test

#4 In Vivo Efficacy-Potency Disconnect

Problem: Compound demonstrates excellent cellular potency but fails to show efficacy in animal models.

Troubleshooting Steps:

- Evaluate pharmacokinetic parameters:

- Measure plasma protein binding

- Determine volume of distribution and half-life

- Assess bioavailability through IV and PO dosing

- Analyze tissue distribution: Use cassette dosing or quantitative whole-body autoradiography

- Consider pharmacological factors:

- Verify target engagement in vivo (PD biomarkers)

- Assess receptor occupancy requirements

Critical PK Parameters for Efficacy:

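- Free (unbound) drug exposure sufficient to keep unbound concentrations above the cellular IC₅₀ for the required portion of the dosing interval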

- Appropriate half-life for dosing regimen

- Adequate tissue penetration where relevant
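One way to make these criteria concrete is to compute oral bioavailability from dose-normalized IV and PO exposure, then compare the average unbound plasma concentration with the cellular IC₅₀. The sketch below assumes AUC is expressed in nM·h and IC₅₀ in nM; all function names and numbers are hypothetical.

```python
def oral_bioavailability(auc_po, dose_po, auc_iv, dose_iv):
    """Dose-normalized AUC ratio; units must match between the two routes."""
    return (auc_po / dose_po) / (auc_iv / dose_iv)


def unbound_average_conc(auc_tau_nm_h, tau_h, fraction_unbound):
    """Average unbound plasma concentration over one dosing interval (nM)."""
    return fraction_unbound * auc_tau_nm_h / tau_h


def coverage_ratio(unbound_conc_nm, cellular_ic50_nm):
    """Values below 1 mean unbound exposure sits under the cellular IC50."""
    return unbound_conc_nm / cellular_ic50_nm


# Invented study: 10 mg/kg PO and 2 mg/kg IV, once-daily dosing.
f_oral = oral_bioavailability(auc_po=1200.0, dose_po=10.0, auc_iv=800.0, dose_iv=2.0)
c_avg_u = unbound_average_conc(auc_tau_nm_h=1200.0, tau_h=24.0, fraction_unbound=0.05)
coverage = coverage_ratio(c_avg_u, cellular_ic50_nm=10.0)

print(f"F = {f_oral:.0%}, unbound Cavg = {c_avg_u:.1f} nM, coverage = {coverage:.2f}x IC50")
```

In this invented case the compound is reasonably bioavailable, yet high protein binding leaves unbound exposure at a quarter of the IC₅₀, which would be consistent with a lack of efficacy despite good cellular potency.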

Experimental Protocols for Key Lead Optimization Assays

Protocol 1: Comprehensive ADME Profiling

Purpose: Systematically evaluate absorption, distribution, metabolism, and excretion properties of lead compounds.

Materials:

- Test compounds (10 mM DMSO stocks)

- Human liver microsomes (pooled)

- Caco-2 cell monolayers (21-day differentiated)

- MDCK-MDR1 cells

- Plasma from relevant species

- LC-MS/MS system for analysis

Procedure:

- Metabolic Stability:

- Incubate 1 μM compound with 0.5 mg/mL liver microsomes + NADPH

- Sample at 0, 5, 15, 30, 60 minutes

- Calculate half-life and intrinsic clearance

- Permeability Assessment:

- Apply 10 μM compound to apical chamber of Caco-2 inserts

- Sample both chambers at 30, 60, 90, 120 minutes

- Calculate apparent permeability (Papp) and efflux ratio

- Plasma Protein Binding:

- Use rapid equilibrium dialysis device

- Incubate 1 μM compound with plasma for 4 hours at 37°C

- Calculate free fraction
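For the permeability step, apparent permeability is conventionally calculated as Papp = (dQ/dt) / (A × C₀), and the efflux ratio as Papp(B→A) / Papp(A→B). The sketch below estimates dQ/dt from the receiver-chamber amounts at the four sampling times. The insert area of 1.12 cm² and the receiver amounts are assumptions for illustration, and the function names are hypothetical.

```python
def slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    return sxy / sxx


def papp_cm_per_s(times_min, receiver_nmol, area_cm2, c0_um):
    """Papp = (dQ/dt) / (A * C0); 1 µM equals 1 nmol/cm³, so the result is in cm/s."""
    dq_dt_nmol_per_s = slope(times_min, receiver_nmol) / 60.0
    return dq_dt_nmol_per_s / (area_cm2 * c0_um)


times = [30, 60, 90, 120]                 # sampling times in minutes
a_to_b_nmol = [0.10, 0.20, 0.30, 0.40]    # invented cumulative receiver amounts
b_to_a_nmol = [0.30, 0.60, 0.90, 1.20]

papp_ab = papp_cm_per_s(times, a_to_b_nmol, area_cm2=1.12, c0_um=10.0)
papp_ba = papp_cm_per_s(times, b_to_a_nmol, area_cm2=1.12, c0_um=10.0)
efflux = papp_ba / papp_ab

print(f"Papp A->B = {papp_ab:.2e} cm/s, Papp B->A = {papp_ba:.2e} cm/s, efflux ratio = {efflux:.1f}")
```

An efflux ratio well above 2 in such data would typically prompt a follow-up in a transporter-expressing line such as MDCK-MDR1.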

Data Analysis:

- Classify compounds using established criteria (e.g., Lipinski's Rule of Five, CNS MPO)

- Prioritize compounds with CLint < 15 μL/min/mg, Papp > 5 × 10⁻⁶ cm/s, efflux ratio < 2.5
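The prioritization criteria above translate directly into a filter. In this sketch the thresholds are the ones stated in the data-analysis step; the `AdmeResult` class, the compound names, and the choice to rank passing compounds by CLint are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class AdmeResult:
    name: str
    clint_ul_min_mg: float
    papp_cm_s: float
    efflux_ratio: float


def passes_adme_criteria(r: AdmeResult) -> bool:
    """CLint < 15 µL/min/mg, Papp > 5e-6 cm/s, efflux ratio < 2.5."""
    return r.clint_ul_min_mg < 15 and r.papp_cm_s > 5e-6 and r.efflux_ratio < 2.5


def prioritize(results):
    """Keep passing compounds, most metabolically stable first (assumed ranking)."""
    passing = [r for r in results if passes_adme_criteria(r)]
    return sorted(passing, key=lambda r: r.clint_ul_min_mg)


# Invented assay results for four analogs.
panel = [
    AdmeResult("analog-1", clint_ul_min_mg=46.2, papp_cm_s=8.0e-6, efflux_ratio=1.2),
    AdmeResult("analog-2", clint_ul_min_mg=12.0, papp_cm_s=6.5e-6, efflux_ratio=1.8),
    AdmeResult("analog-3", clint_ul_min_mg=8.0, papp_cm_s=9.1e-6, efflux_ratio=3.0),
    AdmeResult("analog-4", clint_ul_min_mg=5.5, papp_cm_s=1.2e-5, efflux_ratio=1.1),
]

shortlist = [r.name for r in prioritize(panel)]
print(shortlist)
```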

Protocol 2: Binding Kinetics and Target Engagement

Purpose: Characterize binding kinetics and cellular target engagement for lead compounds.

Materials:

- Purified target protein

- TR-FRET or SPR instrumentation

- Relevant cell lines expressing target

- Radioligands or fluorescent probes as appropriate

Procedure:

- Binding Kinetics:

- Use surface plasmon resonance (SPR) to measure association (kon) and dissociation (koff) rates

- Fit data to 1:1 binding model to calculate kinetic parameters

- Determine residence time (1/koff)

- Cellular Target Engagement:

- Use cellular thermal shift assay (CETSA) or target engagement assays

- Treat cells with compound, lyse, and measure target stability

- Calculate EC₅₀ for cellular target engagement

Interpretation:

- Prioritize compounds with longer residence time when sustained target coverage is needed

- Correlate cellular engagement with functional activity
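The kinetic quantities in this protocol are related by simple formulas for a 1:1 binding model: KD = koff / kon, residence time = 1 / koff, and complex half-life = ln 2 / koff. The following sketch uses invented rate constants and a hypothetical `binding_summary` helper to show why two compounds with the same affinity can differ tenfold in residence time.

```python
import math


def binding_summary(kon_per_m_per_s, koff_per_s):
    """Derive equilibrium and kinetic descriptors from 1:1 binding rate constants."""
    return {
        "kd_nm": koff_per_s / kon_per_m_per_s * 1e9,
        "residence_time_s": 1.0 / koff_per_s,
        "complex_half_life_s": math.log(2) / koff_per_s,
    }


# Two invented compounds with identical KD but different kinetics.
fast_off = binding_summary(kon_per_m_per_s=1e6, koff_per_s=1e-2)
slow_off = binding_summary(kon_per_m_per_s=1e5, koff_per_s=1e-3)

for label, s in (("fast-off", fast_off), ("slow-off", slow_off)):
    print(f"{label}: KD = {s['kd_nm']:.1f} nM, residence time = {s['residence_time_s']:.0f} s")
```

Ranking by KD alone would not separate these two compounds, which is why the interpretation step treats residence time as its own criterion.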

Essential Research Reagent Solutions

Table: Key Research Reagents for Lead Optimization

| Reagent/Category | Function in Lead Optimization | Example Applications |

|---|---|---|

| Liver Microsomes | Predict metabolic clearance | Metabolic stability assays, metabolite identification |

| Transporter-Expressing Cells (e.g., MDCK-MDR1, BCRP-expressing lines) | Assess permeability and efflux | P-gp efflux ratio determination, bioavailability prediction |

| hERG Channel Assays | Evaluate cardiac safety risk | Patch clamp, binding assays for early cardiac safety |

| Plasma Protein Binding Kits | Determine free drug fraction | Equilibrium dialysis, ultrafiltration for PK/PD modeling |

| Target-Specific Binding Assays | Measure potency and selectivity | TR-FRET, SPR for Ki determination and binding kinetics |

| Cellular Phenotypic Assay Kits | Assess functional activity in disease-relevant models | High-content imaging, pathway reporter assays |

AI-Enhanced Lead Optimization Workflows

Current AI Applications in Lead Optimization

Table: AI-Driven Solutions for Efficacy-Safety Optimization

| AI Technology | Application in Lead Optimization | Reported Impact |

|---|---|---|

| Free Energy Perturbation (FEP+) | Binding affinity prediction for structural analogs | ~70% reduction in synthesized compounds needed [1] |

| Generative Chemical AI | De novo design of compounds with optimal properties | 10x fewer compounds synthesized to reach candidate [1] |

| Deep Learning QSAR | ADMET property prediction from chemical structure | 25-50% reduction in preclinical timelines [2] |

| Multi-Parameter Optimization | Balancing potency, selectivity, and ADMET | Improved candidate quality and reduced attrition [3] |

Troubleshooting AI Implementation

Problem: FEP+ fails to provide reliable rank ordering in lead optimization campaigns.

Solutions:

- Validate force fields for specific chemical series

- Implement ensemble docking to account for protein flexibility

- Combine with experimental data for hybrid modeling

- Use alternative scoring functions for challenging targets [4]

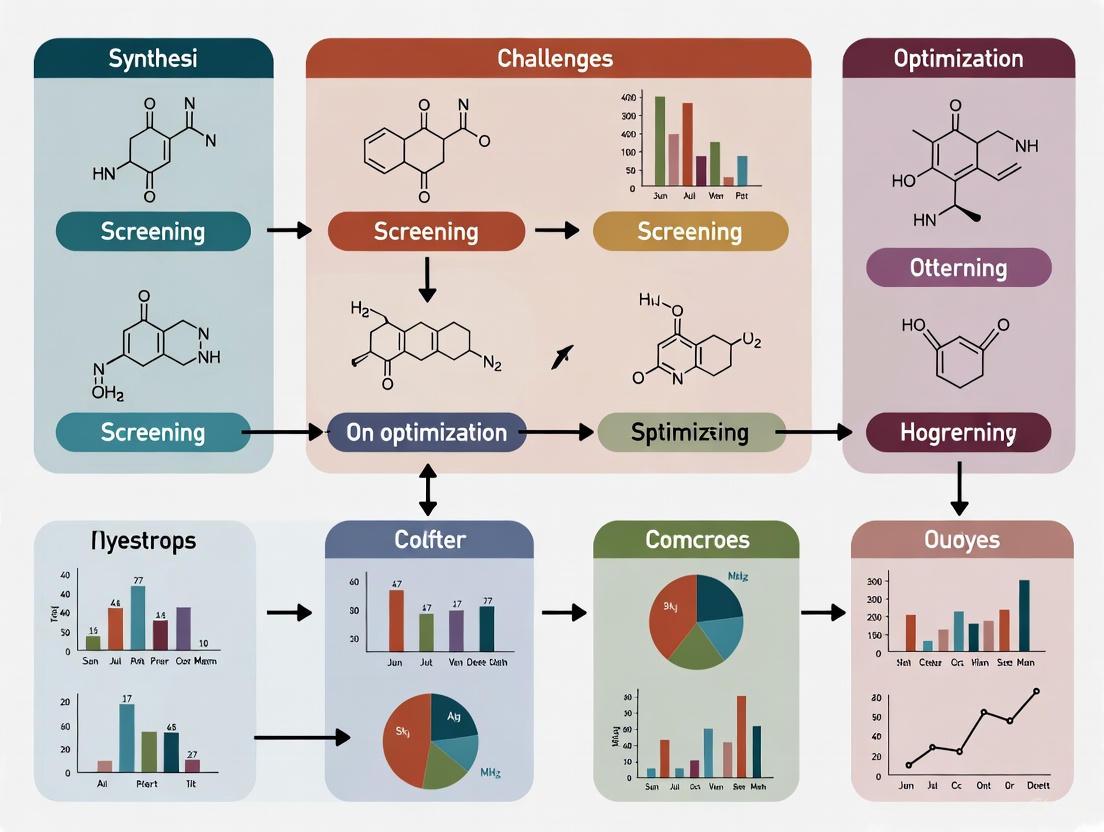

Workflow Visualization

Lead Optimization Decision Workflow

AI-Enhanced Lead Optimization Cycle

Frequently Asked Questions (FAQs)

#1 How many compounds should we expect to synthesize during lead optimization?

Answer: The number varies by program, but AI-enhanced platforms report reaching clinical candidates with 70-90% fewer synthesized compounds. Traditional programs often require 500-5,000 compounds, while AI-driven approaches have achieved candidates with only 136 compounds in some cases [1].

#2 When should we incorporate in vivo studies into lead optimization?

Answer: Begin in vivo PK studies once you have compounds with:

- Cellular potency < 100 nM

- Acceptable in vitro ADME properties (CLint < 15 μL/min/mg, Papp > 5 × 10⁻⁶ cm/s)

- Clean initial safety profile (hERG IC₅₀ > 30 μM, >30-fold selectivity)

Progress to efficacy studies only after demonstrating adequate exposure and target engagement.

#3 How do we balance multiple optimization parameters when they conflict?

Answer: Implement multi-parameter optimization strategies:

- Use quantitative structure-activity relationship (QSAR) models to identify optimal property ranges

- Establish minimal acceptable criteria for each parameter

- Prioritize parameters based on clinical relevance (safety > PK > potency)

- Leverage AI platforms that can simultaneously optimize multiple parameters [3]
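One simple, non-AI way to combine conflicting parameters is a desirability score: map each measurement onto a 0 to 1 scale between an unacceptable and an ideal value, then take a weighted geometric mean so that failing any minimal criterion zeroes the whole score. This is a sketch of that general idea, not a method prescribed by the cited sources; the ranges and the 3:2:1 weights (reflecting safety > PK > potency) are assumptions.

```python
import math


def ramp(value, worst, best):
    """Linear desirability: 0 at `worst`, 1 at `best`; works in either direction."""
    frac = (value - worst) / (best - worst)
    return max(0.0, min(1.0, frac))


def mpo_score(desirabilities, weights):
    """Weighted geometric mean; any zero desirability gives an overall zero."""
    if any(d == 0.0 for d in desirabilities.values()):
        return 0.0
    total_weight = sum(weights[name] for name in desirabilities)
    log_sum = sum(weights[name] * math.log(d) for name, d in desirabilities.items())
    return math.exp(log_sum / total_weight)


# Assumed weights expressing safety > PK > potency.
weights = {"herg": 3.0, "clint": 2.0, "potency": 1.0}

# Invented compound: hERG IC50 30 µM, CLint 30 µL/min/mg, cellular IC50 100 nM.
compound = {
    "herg": ramp(30.0, worst=10.0, best=30.0),        # higher is better
    "clint": ramp(30.0, worst=45.0, best=15.0),       # lower is better
    "potency": ramp(100.0, worst=1000.0, best=100.0), # lower is better
}
score = mpo_score(compound, weights)

# The same compound with a hERG IC50 at the unacceptable limit.
hERG_fail = dict(compound, herg=ramp(10.0, worst=10.0, best=30.0))
score_fail = mpo_score(hERG_fail, weights)

print(f"score = {score:.3f}, score with hERG failure = {score_fail:.3f}")
```

The geometric mean is chosen here because an arithmetic mean would let strong potency mask a safety failure.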

#4 What are the most common reasons for failure in lead optimization?

Answer: Primary failure modes include:

- Inability to achieve adequate bioavailability or exposure (~40%)

- Unexpected toxicity findings (~25%)

- Insufficient efficacy in vivo despite good potency in vitro (~20%)

- Pharmaceutical development challenges (~15%) [5]

#5 How has AI changed lead optimization timelines and success rates?

Answer: Companies using integrated AI platforms report:

- 25-50% reduction in preclinical timelines [2]

- 70% faster design cycles [1]

- Advancement to clinical trials in 18 months vs. traditional 3-6 years [6]

However, success rates in later stages remain to be fully demonstrated as most AI-derived drugs are still in early clinical trials [1].

Frequently Asked Questions (FAQs)

FAQ 1: What are the most critical ADMET-related challenges causing drug candidate failure? A significant challenge is the high attrition rate of drug candidates due to unforeseen toxicity and poor pharmacokinetic properties. Specifically, approximately 56% of drug candidates fail due to safety problems, which often manifest during costly preclinical animal studies [7]. This creates a "whack-a-mole" problem where improving one property (e.g., potency) negatively impacts another (e.g., metabolic stability) [8]. The primary issue is that comprehensive toxicity profiling is often deferred until late stages due to a misalignment of incentives, where early-stage research prioritizes demonstrating efficacy to secure funding [7].

FAQ 2: Which toxicity endpoints should we prioritize for early-stage screening? Early screening should focus on endpoints frequently linked to clinical failure and post-market withdrawal. Key organ-specific toxicities include:

- Hepatotoxicity (Drug-Induced Liver Injury) [9] [10]

- Cardiotoxicity (particularly hERG channel blockade) [9]

- Carcinogenicity [10]

- Acute toxicity [10]

- Genotoxicity/Mutagenicity [9]

AI models are now capable of predicting these endpoints based on diverse molecular representations, helping to flag risks earlier in the pipeline [9].

FAQ 3: What are the main limitations of traditional toxicity testing methods? Traditional methods face several limitations [10] [7]:

- High costs and low throughput of in vitro assays and animal studies

- Long experimental cycles

- Uncertainty in cross-species extrapolation due to species differences

- Incomplete screening panels (e.g., testing only 10 off-targets cannot capture all potential toxicity manifestations)

- Diagnostic limitations: Animal study readouts (e.g., raised liver enzymes) confirm problems but often provide no guidance on molecular causes or solutions [7]

FAQ 4: How can we effectively integrate AI-based toxicity prediction into our lead optimization workflow? Implement a systematic workflow with these key stages [9]:

- Data Collection: Gather toxicity data from public databases (ChEMBL, Tox21, DrugBank) and proprietary sources

- Data Preprocessing: Handle missing values, standardize molecular representations (SMILES, molecular graphs), perform feature engineering

- Model Development: Apply appropriate algorithms (Random Forest, XGBoost, Graph Neural Networks) based on data structure and task complexity

- Evaluation & Validation: Use performance metrics (accuracy, precision, recall, AUROC for classification; MSE, RMSE, MAE for regression) and interpretability techniques (SHAP)

Integrate these models into virtual screening pipelines to filter potentially toxic compounds before in vitro assays [9].

FAQ 5: Which databases provide reliable toxicity data for model training? Several publicly available databases provide high-quality toxicity data suitable for training AI/ML models, as summarized in the table below.

Table 1: Key Databases for Toxicity Data and ADMET Prediction

| Database Name | Data Scope & Size | Key Features & Applications |

|---|---|---|

| Tox21 [9] | 8,249 compounds across 12 targets | Qualitative toxicity data focused on nuclear receptor and stress response pathways; benchmark for classification models |

| ToxCast [9] | ~4,746 chemicals across hundreds of endpoints | High-throughput screening data for in vitro toxicity profiling; broad mechanistic coverage |

| ChEMBL [10] | Manually curated bioactive molecules | Compound structures, bioactivity data, drug target information, and ADMET data; supports activity clustering and similarity searches |

| DrugBank [10] | Comprehensive drug information | Detailed drug data, targets, pharmacological information, clinical trials, adverse reactions, and drug interactions |

| hERG Central [9] | >300,000 experimental records | Extensive data on hERG channel inhibition; supports classification and regression tasks for cardiotoxicity |

| DILIrank [9] | 475 compounds | Annotated hepatotoxic potential; crucial for predicting drug-induced liver injury |

| PubChem [10] | Massive chemical substance data | Chemical structures, activity, and toxicity information from scientific literature and experimental reports |

Experimental Protocols & Workflows

Protocol 1: Developing an AI-Based Toxicity Prediction Model

This protocol outlines the methodology for creating a robust toxicity prediction model using machine learning, adapted from recent literature [9].

1. Data Collection and Curation

- Source Data: Extract compound structures and corresponding toxicity labels from reliable databases (see Table 1). For general toxicity, Tox21 and ToxCast are recommended starting points. For specific organ toxicity, use dedicated datasets like DILIrank (liver) or hERG Central (cardiac) [9].

- Label Encoding: For classification tasks (e.g., toxic/non-toxic), encode experimental results as binary labels. For regression tasks (e.g., predicting IC₅₀ or LD₅₀), use continuous values from experimental measurements [9].

2. Data Preprocessing and Feature Engineering

- Molecular Representation:

- SMILES Strings: Use Simplified Molecular Input Line Entry System strings as direct input for sequence-based models (e.g., Transformers) [9].

- Molecular Descriptors: Calculate traditional chemical descriptors (molecular weight, clogP, number of rotatable bonds) using tools like RDKit [9].

- Graph Representations: Represent molecules as graphs where atoms are nodes and bonds are edges for Graph Neural Networks (GNNs) [9].

- Data Cleaning: Handle missing values through imputation or removal. Standardize features by scaling to normalize numerical ranges [9].

- Data Splitting: Split dataset into training, validation, and test sets using scaffold-based splitting to evaluate model generalizability to novel chemical structures and prevent data leakage [9].
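The scaffold-based split can be sketched as follows, assuming each compound already has a scaffold identifier (for example, a Bemis-Murcko scaffold SMILES computed with a cheminformatics toolkit). Whole scaffold groups are assigned to a single partition so that no scaffold appears on both sides of the split. The function name, the greedy largest-first assignment, and the 80/10/10 fractions are assumptions; the scaffold labels are placeholders.

```python
from collections import defaultdict


def scaffold_split(scaffold_by_compound, frac_train=0.8, frac_valid=0.1):
    """Assign whole scaffold groups to train, validation, or test partitions."""
    groups = defaultdict(list)
    for compound_id, scaffold in scaffold_by_compound.items():
        groups[scaffold].append(compound_id)

    # Largest scaffold families first, so rarer scaffolds fall into validation/test.
    ordered = sorted(groups.values(), key=lambda g: (-len(g), g[0]))

    n = len(scaffold_by_compound)
    train, valid, test = [], [], []
    for group in ordered:
        if len(train) + len(group) <= frac_train * n:
            train.extend(group)
        elif len(valid) + len(group) <= frac_valid * n:
            valid.extend(group)
        else:
            test.extend(group)
    return train, valid, test


# Placeholder scaffold labels for ten hypothetical compounds.
compounds = {
    "cpd01": "scaffold_A", "cpd02": "scaffold_A", "cpd03": "scaffold_A",
    "cpd04": "scaffold_A", "cpd05": "scaffold_A",
    "cpd06": "scaffold_B", "cpd07": "scaffold_B", "cpd08": "scaffold_B",
    "cpd09": "scaffold_C",
    "cpd10": "scaffold_D",
}

train_ids, valid_ids, test_ids = scaffold_split(compounds)
print(len(train_ids), len(valid_ids), len(test_ids))
```

Because the held-out partitions contain only scaffolds the model never saw, performance on them is a more conservative estimate than a random split would give.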

3. Model Selection and Training

- Algorithm Choice: Select algorithms based on data type and task complexity [9]:

- Structured Data (Descriptors): Random Forest, XGBoost, Support Vector Machines (SVMs)

- Graph Data (Molecular Structures): Graph Neural Networks (GNNs)

- Sequence Data (SMILES): Transformer-based models

- Training Procedure: Implement cross-validation on the training set to optimize hyperparameters and prevent overfitting. For imbalanced datasets, employ techniques like oversampling, undersampling, or custom loss functions [9].

4. Model Evaluation and Interpretation

- Performance Metrics [9]:

- Classification: Accuracy, Precision, Recall, F1-score, Area Under ROC Curve (AUROC)

- Regression: Mean Squared Error (MSE), Root MSE (RMSE), Mean Absolute Error (MAE), R²

- Model Interpretability: Use techniques like SHAP (SHapley Additive exPlanations) or attention visualization to identify structural features or substructures associated with toxicity, enhancing trust and providing actionable insights [9].
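To make the classification metrics concrete, the sketch below computes precision, recall, F1, and AUROC by hand on a small invented set of labels and predicted toxicity scores. AUROC is calculated from its pairwise definition: the probability that a randomly chosen toxic compound scores higher than a randomly chosen non-toxic one, with ties counted as half. In practice a library such as scikit-learn would normally be used; the helper names here are hypothetical.

```python
def precision_recall_f1(y_true, y_pred):
    """Compute precision, recall, and F1 for binary labels (1 = toxic)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def auroc(y_true, scores):
    """Fraction of (positive, negative) pairs ranked correctly; ties count half."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Invented labels and model scores for eight compounds.
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.7, 0.3, 0.2, 0.1, 0.05]
y_pred = [1 if s >= 0.5 else 0 for s in scores]   # 0.5 threshold is an assumption

precision, recall, f1 = precision_recall_f1(y_true, y_pred)
auc = auroc(y_true, scores)
print(f"precision = {precision:.2f}, recall = {recall:.2f}, F1 = {f1:.2f}, AUROC = {auc:.2f}")
```

Precision, recall, and F1 depend on the chosen threshold, whereas AUROC summarizes ranking quality across all thresholds, which is why both kinds of metric are usually reported for imbalanced toxicity datasets.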

Diagram: AI-Based Toxicity Prediction Model Workflow

Protocol 2: Integrated Lead Optimization with ADMET Screening

This protocol describes how to incorporate computational ADMET prediction into a lead optimization pipeline to reduce late-stage failures, based on successful industry implementations [8].

1. Early-Stage Virtual Screening

- Platform Integration: Implement AI-based ADMET prediction tools (e.g., Inductive Bio's Compass platform) that provide real-time toxicity and pharmacokinetic predictions during compound design [8].

- Multi-Parameter Optimization: Simultaneously evaluate potency, selectivity, and key ADMET parameters (e.g., solubility, metabolic stability, hERG inhibition) rather than sequential optimization [8].

- Design-Predict-Test Cycle: Before synthesis, use AI models to screen virtual compound libraries and prioritize designs with optimal drug-like properties [8].

2. Experimental Validation

- In Vitro Testing: For AI-predicted top candidates, conduct focused in vitro assays to validate key ADMET properties [10]:

- Cytotoxicity: MTT or CCK-8 assays [10]

- Metabolic Stability: Microsomal or hepatocyte stability assays

- Permeability: Caco-2 or PAMPA assays

- hERG Inhibition: Patch clamp or binding assays

- Iterative Refinement: Use experimental results to refine AI models through continuous learning loops. Weekly experimental feedback from partners drives ongoing model improvements [8].

3. Advanced Profiling

- Mechanistic Investigation: For compounds showing toxicity signals, employ transcriptomics or metabolomics to elucidate potential mechanisms and molecular initiating events [11].

- Structural Alert Identification: Use model interpretability methods (e.g., SHAP, attention maps) to identify toxicophores and guide structural modifications [9].

Diagram: Integrated Lead Optimization with ADMET Screening

The Scientist's Toolkit: Essential Research Reagents & Databases

Table 2: Key Research Reagents and Computational Tools for ADMET and Toxicity Studies

| Tool Category | Specific Tool/Database | Function & Application |

|---|---|---|

| Public Toxicity Databases | Tox21, ToxCast, DILIrank, hERG Central | Provide curated toxicity data for model training and validation; benchmark compounds against known toxic profiles [9] [10] |

| Chemical & Bioactivity Databases | ChEMBL, DrugBank, PubChem | Source for chemical structures, bioactivity data, and ADMET properties; support similarity searches and clustering [10] |

| In Vitro Assay Kits | MTT Assay, CCK-8 Assay | Measure compound cytotoxicity in cell cultures; validate AI-predicted toxicity signals [10] |

| Molecular Descriptor Tools | RDKit, PaDEL-Descriptor | Calculate chemical features and molecular descriptors from structures for machine learning input [9] |

| AI/ML Modeling Frameworks | scikit-learn, PyTorch, TensorFlow, Deep Graph Library (DGL) | Implement machine learning (Random Forest, XGBoost) and deep learning (GNNs, Transformers) models [9] |

| Model Interpretability Tools | SHAP, LIME, Attention Visualization | Explain model predictions; identify structural features associated with toxicity [9] |

Table 3: Key Quantitative Data on Drug Attrition and Toxicity Prediction

| Metric Category | Specific Metric | Value or Range | Context & Implications |

|---|---|---|---|

| Drug Attrition Rates | Failure due to safety/toxicity | ~56% of drug candidates [7] | Primary reason for failure beyond pharmacodynamic factors; highlights need for early prediction |

| Toxicity Dataset Sizes | Tox21 Dataset | 8,249 compounds across 12 targets [9] | Benchmark dataset for nuclear receptor and stress response pathway toxicity |

| Toxicity Dataset Sizes | ToxCast Dataset | ~4,746 chemicals across hundreds of endpoints [9] | High-throughput screening data for in vitro toxicity profiling |

| Toxicity Dataset Sizes | hERG Central | >300,000 experimental records [9] | Extensive data for cardiotoxicity prediction (classification & regression) |

| Model Performance Metrics | AUROC (Area Under ROC Curve) | Varies by endpoint and model | Key metric for classification performance; higher values indicate better true positive vs. false positive tradeoff [9] |

| Model Performance Metrics | RMSE (Root Mean Square Error) | Varies by endpoint and model | Key metric for regression performance; lower values indicate higher prediction accuracy [9] |

FAQs on Risk Tolerance in Drug Development

What is the difference between risk appetite and risk tolerance in pharmaceutical development?

Risk Appetite is the high-level, strategic amount and type of risk an organization is willing to accept to achieve its objectives and pursue value. It is a broad "speed limit" set by leadership [12] [13]. For example, a company's leadership might declare a "low risk appetite for patient safety violations."

Risk Tolerance is the more tactical, acceptable level of variation in achieving specific objectives. It is the measurable "leeway" or specific thresholds applied to daily operations and experiments [12] [13]. An example of risk tolerance is setting a limit of "≤1 minor data integrity deviation per research site per quarter" [12].

How is patient risk tolerance quantitatively measured to inform trial design?

Patient risk tolerance is often measured using Discrete Choice Experiments (DCEs). This method quantifies the trade-offs patients are willing to make between treatment benefits and risks [14].

- Methodology: Patients are presented with a series of binary choices between different hypothetical treatment profiles. Each profile is defined by attributes such as efficacy and potential side effects, each with different levels [14].

- Example: In a study for a rheumatoid arthritis treatment, attributes included the chance of stopping disease progression (50%, 70%, 90%), increased chance of death in the first year (3%, 9%, 15%), and chance of chronic graft-versus-host disease (3%, 9%, 15%) [14].

- Data Analysis: The choice data is analyzed using statistical models (e.g., a logit model) to determine the relative importance of each attribute and calculate how much of a risk patients are willing to accept for a given increase in benefit [14]. The rheumatoid arthritis study found patients were willing to accept a 3% increase in the risk of death for a 10% increase in the chance of stopping disease progression [14].

What factors influence a company's risk appetite for a new therapeutic program?

Several strategic factors shape an organization's willingness to take on risk [12]:

- Company Size and Market Presence: Larger companies with diverse portfolios may have a higher risk tolerance for new market entry but remain extremely conservative regarding GMP compliance and patient safety [12].

- R&D Intensity and Pipeline Stage: Companies heavily invested in early-stage research may have a higher tolerance for technical and scientific uncertainty to achieve breakthrough innovation [12].

- Therapeutic Area and Competitive Landscape: In highly competitive areas (e.g., oncology), companies may "front-load" development, pursuing multiple indications in parallel despite higher costs and risks to establish leadership [15]. The regulatory landscape, including potential price controls, can also incentivize accelerated, higher-risk development strategies [16] [15].

- Global Operations: Companies operating internationally must adjust their risk appetite to account for different regulatory environments, such as the EU's strict AI Act for medical products [12].

How is risk tolerance implemented and monitored in a Quality Management System (QMS)?

Risk tolerance is operationalized by integrating it into the fabric of the QMS through specific tools and techniques [12]:

- Key Risk Indicators (KRIs) and Dashboards: Establish measurable metrics linked to risk tolerance limits (e.g., batch failure rate, out-of-specification rate). Real-time dashboards track these KRIs and trigger alerts when tolerances are approached or exceeded [12].

- Risk Registers: Update risk registers to include columns for risk appetite and tolerance statements, ensuring a clear and auditable logic chain from risk identification to control [12].

- Embedding in Procedures: Incorporate risk tolerance thresholds directly into Standard Operating Procedures (SOPs), change control processes, and supplier quality agreements to guide daily decision-making [12].
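The KRI monitoring logic described above can be sketched as a status check against each tolerance limit. Everything here is illustrative: the `kri_status` helper, the 80% early-warning fraction, and the batch-failure and out-of-specification limits are assumptions; only the limit of one minor data integrity deviation per site per quarter comes from the example given earlier.

```python
def kri_status(value, tolerance_limit, warn_fraction=0.8):
    """Classify a KRI reading relative to its tolerance limit."""
    if value > tolerance_limit:
        return "exceeded"
    if value >= warn_fraction * tolerance_limit:
        return "approaching"
    return "within"


# (current value, tolerance limit); values are invented for illustration.
kris = {
    "batch_failure_rate_pct": (1.2, 2.0),
    "out_of_specification_rate_pct": (0.9, 1.0),
    "minor_data_integrity_deviations_per_site_quarter": (2, 1),
}

statuses = {name: kri_status(value, limit) for name, (value, limit) in kris.items()}
alerts = [name for name, status in statuses.items() if status != "within"]

for name, status in statuses.items():
    print(f"{name}: {status}")
```

A dashboard would run a check like this on each data refresh and route anything in `alerts` to the responsible owner.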

Experimental Protocols for Assessing Risk Tolerance

Protocol 1: Discrete Choice Experiment for Patient Risk-Benefit Preference

This protocol outlines the steps to quantify patient risk tolerance for a therapeutic candidate [14].

- Define Attributes and Levels: Identify key efficacy, safety, and administration attributes of the therapy (e.g., progression-free survival, severe adverse event rate, mode of administration). Assign realistic levels to each attribute (e.g., 5%, 10%, 15% for severe adverse event rate).

- Experimental Design: Use statistical software to generate a set of binary choice tasks using an orthogonal main effects design. This design ensures attributes are varied independently to efficiently estimate their impact.

- Survey Presentation: Program the choice tasks into a survey. Each task should present two hypothetical treatment profiles and ask the participant to choose their preferred option. It is recommended to include a "neither" option. The survey should also collect demographic and clinical data from participants.

- Participant Recruitment: Recruit participants from the target patient population, ideally from disease registries or clinical sites. Ensure informed consent is obtained.

- Data Collection: Administer the survey to participants.

- Statistical Analysis: Analyze the choice data using a multinomial or mixed logit model. The model estimates the utility (preference) weight for each level of each attribute.

- Calculation of Trade-Offs: Use the estimated utility weights to calculate the trade-offs patients are willing to make. For example, compute the willingness-to-accept an increase in a specific risk for a unit increase in efficacy.
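The trade-off calculation in the final step can be illustrated with a maximum acceptable risk (MAR) formula. Assuming utility is linear in each attribute, the risk increase that exactly offsets a benefit gain is MAR = (β_benefit × Δbenefit) / |β_risk|. The coefficients below are hypothetical, chosen so that the results match the 3% (death) and 6% (chronic GVHD) trade-offs reported for the study described in this article; they are not the study's estimated values.

```python
def max_acceptable_risk(beta_benefit, beta_risk, benefit_gain_pts):
    """Risk increase (percentage points) whose disutility offsets the benefit gain."""
    if beta_risk >= 0:
        raise ValueError("A risk attribute should have a negative utility weight.")
    return beta_benefit * benefit_gain_pts / -beta_risk


# Hypothetical per-percentage-point utility weights from a fitted logit model.
beta_stop_progression = 0.03
beta_death = -0.10
beta_chronic_gvhd = -0.05

mar_death = max_acceptable_risk(beta_stop_progression, beta_death, benefit_gain_pts=10)
mar_gvhd = max_acceptable_risk(beta_stop_progression, beta_chronic_gvhd, benefit_gain_pts=10)

print(f"MAR for death: {mar_death:.1f} points; MAR for chronic GVHD: {mar_gvhd:.1f} points")
```

If attributes are modeled with categorical levels rather than linear terms, the same idea applies, but the MAR is obtained by interpolating between the estimated level weights.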

Protocol 2: Establishing Internal Risk Tolerance Thresholds for Development Decisions

This protocol provides a framework for R&D teams to define their own risk tolerance for key go/no-go decisions.

- Identify Critical Decision Points: Map the lead optimization and development pipeline to identify critical decision points (e.g., candidate nomination, IND submission, Phase III initiation).

- Define Key Value Drivers: For each decision point, list the key value drivers (e.g., potency, selectivity, predicted human efficacious dose, manufacturability cost).

- Set Quantitative Thresholds: For each value driver, establish quantitative thresholds for risk tolerance based on available data, competitor benchmarks, and target product profile requirements. Categorize thresholds as "Go" (acceptable), "Mitigate" (requires risk reduction), or "No-Go" (unacceptable).

- Create a Risk Tolerance Matrix: Develop a matrix that visually maps the decision points against the value drivers and their respective tolerance thresholds.

- Integrate with Governance: Present the risk tolerance matrix to leadership for formal approval. Integrate the matrix into stage-gate review processes to ensure consistent and objective decision-making.
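A risk tolerance matrix of this kind can be encoded as a table of "Go" and "No-Go" limits per value driver, with anything in between classified as "Mitigate". The sketch below is one possible encoding; the drivers, limits, and the rule that the overall decision equals the worst individual outcome are all assumptions for illustration.

```python
def classify(value, go_limit, no_go_limit):
    """Return 'Go', 'Mitigate', or 'No-Go'; direction is inferred from the limits."""
    higher_is_better = go_limit > no_go_limit
    if higher_is_better:
        if value >= go_limit:
            return "Go"
        if value <= no_go_limit:
            return "No-Go"
    else:
        if value <= go_limit:
            return "Go"
        if value >= no_go_limit:
            return "No-Go"
    return "Mitigate"


# Hypothetical candidate-nomination thresholds: (go_limit, no_go_limit).
matrix = {
    "selectivity_fold": (30.0, 10.0),               # higher is better
    "herg_ic50_um": (30.0, 10.0),                   # higher is better
    "predicted_human_dose_mg": (100.0, 500.0),      # lower is better
}

# Invented data for one candidate.
candidate = {"selectivity_fold": 45.0, "herg_ic50_um": 18.0, "predicted_human_dose_mg": 80.0}

outcomes = {name: classify(candidate[name], *limits) for name, limits in matrix.items()}

# Overall decision: the worst individual outcome wins (assumed governance rule).
severity = {"Go": 0, "Mitigate": 1, "No-Go": 2}
overall = max(outcomes.values(), key=lambda o: severity[o])

print(outcomes, "->", overall)
```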

Data Presentation: Quantitative Risk Tolerance Metrics

Table 1: Willingness-to-Accept Risk Trade-offs from a Rheumatoid Arthritis Study [14]

This table summarizes the quantitative trade-offs patients with severe rheumatoid arthritis were willing to make for a potential curative therapy.

| Benefit Increase | Risk Increase Patients Were Willing to Accept | Contextual Note |

|---|---|---|

| 10% increase in chance of stopping disease progression | 3% increase in risk of death | For patients who had failed multiple prior therapies |

| 10% increase in chance of stopping disease progression | 6% increase in chance of chronic GVHD | For patients who had failed multiple prior therapies |

Table 2: Examples of Risk Appetite and Tolerance Statements for Different Functions [12]

This table provides illustrative examples of how high-level risk appetite is translated into measurable risk tolerance across R&D functions.

| Functional Area | Risk Appetite Statement (Strategic) | Risk Tolerance Statement (Measurable) |

|---|---|---|

| Patient Safety & GCP | Zero tolerance for non-compliance that could cause patient harm. | ≤1 critical finding in GCP audit per year; 100% verification of CAPA effectiveness within 30 days. |

| Data Integrity | Low appetite for ALCOA+ deviations. | ≤1 minor data integrity deviation per site per quarter. |

| Supply Chain & CMC | Moderate appetite for accelerated supplier onboarding to meet development timelines. | ≤5% waivers for required PPAP/technical files, with mandatory post-approval audits within 60 days. |

Visualization: Risk Tolerance Framework

The Scientist's Toolkit: Key Research Reagent Solutions

Table 3: Essential Materials for Risk Tolerance and Preference Research

| Research Item | Function/Brief Explanation |

|---|---|

| Discrete Choice Experiment (DCE) Software | Software platforms (e.g., Sawtooth Software, Ngene) used to design statistically efficient choice tasks and analyze the resulting preference data. |

| Patient Registry/Cohort Access | Pre-established groups of patients (e.g., from clinical sites or disease-specific registries) essential for recruiting participants for preference studies that represent the target population. |

| Validated Risk Tolerance Scales | Standardized psychometric surveys (e.g., Risk-Taking Scale, Need for Cognitive Closure Scale) used to quantitatively measure risk attitudes of internal stakeholders or clinicians [17]. |

| Key Risk Indicator (KRI) Dashboard | A visual management tool (often part of a Quality Management System) that displays real-time metrics against pre-defined risk tolerance limits, enabling proactive risk management [12]. |

| Regulatory Guidance Database | A curated repository of health authority documents (FDA, EMA guidances, ICH Q9(R1)) that provides the framework for defining acceptable risk in a regulated environment [12] [18]. |

FAQs: Navigating Toxicity in Lead Optimization

Why is toxicity a major cause of clinical attrition, and how can early discovery address this?

Toxicity remains a primary reason for drug candidate failure because issues often remain undetected until clinical phases. Discovery toxicology aims to identify and remove the most toxic compounds from the portfolio before entry into humans to reduce clinical attrition due to toxicity [19]. This is achieved by integrating safety assessments early into the lead optimization phase, balancing potency improvements with parallel evaluation of toxicity, metabolic stability, and selectivity [20].

What are the most common shortcomings in project proposals regarding toxicity assessment?

Analysis of rejected funding applications reveals frequent critical shortcomings [21]:

- Insufficient in vitro activity testing: Leads often lack sufficient potency or are not tested against a broad enough panel of isolates to properly evaluate the spectrum and potential issues.

- Little awareness of toxicological issues: This includes programs with historical liabilities and inadequate preliminary toxicology studies.

- Insufficient characterization: Projects frequently lack sufficient data on the structure-activity relationship (SAR) to support a solid medicinal chemistry strategy.

Which tools and models are most effective for predicting human toxicity early on?

A holistic framework relying on integrated use of qualified in silico, in vitro, and in vivo models provides the most robust risk assessment [19]. Effective tools include [20] [22]:

- In silico predictive tools: AI/ML and computational models to predict properties and prioritize compounds.

- High-throughput in vitro screening: For early safety markers and ADME-Tox profiling.

- Advanced in vivo models: Such as zebrafish, which offer a vertebrate system for highly predictive evaluation of developmental toxicity, cardiotoxicity, and hepatotoxicity at a lower cost and higher throughput than traditional models.

Troubleshooting Guides

Issue: Lead compound shows promising potency but unacceptable toxicity in preliminary assays

Diagnosis: The chemical structure likely causes off-target effects or has inherent properties (e.g., high lipophilicity) leading to toxicity [20].

Recommended Actions:

- Explore Structure-Activity Relationship (SAR): Systematically synthesize and test analogs to identify which structural motifs correlate with both activity and toxicity [20].

- Profile against off-target panels: Use secondary pharmacological screens to identify specific off-target interactions (e.g., with hERG, kinases) that may be responsible [19].

- Optimize physicochemical properties: Reduce lipophilicity and fine-tune solubility to improve the overall safety profile. Because fixing one parameter often creates new obstacles, treat these changes as part of a balanced, multi-property approach [20].

- Utilize predictive models: Employ AI/ML tools and FEP+ simulations to virtually screen analogs and suggest chemical transformations with a higher likelihood of reducing toxicity [20] [4].

Issue: In vivo model fails to predict human toxicity, leading to late-stage attrition

Diagnosis: The chosen preclinical model may have metabolic or physiological differences that limit its translatability to humans [22] [21].

Recommended Actions:

- Select a more predictive model: Consider adopting zebrafish models that bridge the gap between in vitro assays and mammalian models, allowing for multi-organ toxicity assessment in a vertebrate system [22].

- Incorporate pharmacometabonomics: Use NMR-based pharmacometabonomics to select the most appropriate animal model whose metabolic profile is most similar to humans for the specific target or pathway [22].

- Strengthen in vitro profiling: Expand in vitro ADME-Tox profiling to include assays with higher human relevance, such as those using human primary cells or 3D organoids [19] [22].

- Conduct mechanistic studies: Perform detailed mechanism of action and target validation studies to better understand the biological basis of any observed toxicity and its potential relevance to humans [21].

Issue: Difficulty balancing multiple compound properties during optimization

Diagnosis: Lead optimization requires simultaneously improving potency, selectivity, solubility, metabolic stability, and safety. Enhancing one property can adversely affect another [20].

Recommended Actions:

- Implement a structured decision framework: Define clear criteria for the progression of a compound (go/no-go decisions) based on the Target Candidate Profile for your therapeutic area [19] [21].

- Use multi-parameter optimization tools: Leverage software platforms that support simultaneous analysis of multiple parameters to help prioritize compounds with the best overall balance of properties [20].

- Adopt iterative design-make-test-analyze cycles: Use rapid synthesis and high-throughput testing to quickly generate data and inform the next round of chemical design, embracing the iterative nature of the process [20].
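
As one illustration of multi-parameter optimization, the sketch below combines per-property desirability scores into a single ranking value using a geometric mean. The property names, thresholds, and compound values are hypothetical placeholders chosen for the example, not criteria from the cited sources; a real Target Candidate Profile would supply its own.

```python
import math

def lower_is_better(value, ideal, worst):
    """Desirability in [0, 1]: 1 at or below `ideal`, 0 at or above `worst`."""
    if value <= ideal:
        return 1.0
    if value >= worst:
        return 0.0
    return (worst - value) / (worst - ideal)

def higher_is_better(value, worst, ideal):
    """Desirability in [0, 1]: 1 at or above `ideal`, 0 at or below `worst`."""
    if value >= ideal:
        return 1.0
    if value <= worst:
        return 0.0
    return (value - worst) / (ideal - worst)

def mpo_score(compound):
    """Geometric mean of desirabilities; all thresholds are hypothetical."""
    scores = [
        higher_is_better(compound["pIC50"], worst=5.0, ideal=8.0),
        lower_is_better(compound["cLogP"], ideal=3.0, worst=5.0),
        higher_is_better(compound["solubility_uM"], worst=10.0, ideal=100.0),
        lower_is_better(compound["clint_uL_min_mg"], ideal=10.0, worst=100.0),
    ]
    # A single failed property zeroes the score, so potency cannot mask a liability.
    if min(scores) == 0.0:
        return 0.0
    return math.exp(sum(math.log(s) for s in scores) / len(scores))

# Made-up example data: A is potent but lipophilic, B is balanced.
compounds = {
    "cpd_A": {"pIC50": 8.5, "cLogP": 5.5, "solubility_uM": 5.0, "clint_uL_min_mg": 120.0},
    "cpd_B": {"pIC50": 7.0, "cLogP": 3.5, "solubility_uM": 80.0, "clint_uL_min_mg": 30.0},
}
ranked = sorted(compounds, key=lambda name: mpo_score(compounds[name]), reverse=True)
```

The geometric mean is used here because it penalizes a compound that is excellent on one axis and poor on another more strongly than an arithmetic mean would, which matches the balancing problem described above.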

Data Presentation: Safety Monitoring & Compound Profiling

Statistical Rules for Safety Monitoring in Clinical Trials

The table below compares operating characteristics of different statistical methods for constructing safety stopping rules in a clinical trial scenario with a maximum of 60 patients, an acceptable toxicity rate (p0) of 20%, and an unacceptable rate (p1) of 40% [23].

| Monitoring Method | Overall Type I Error Rate | Expected Toxicities under p0 | Power to detect p1 | Key Characteristic |

|---|---|---|---|---|

| Pocock Test | 0.05 | 10.2 | 0.75 | Aggressive early stopping, permissive late stopping |

| O'Brien-Fleming Test | 0.05 | 11.5 | 0.82 | Conservative early stopping, powerful late monitoring |

| Beta-Binomial (Weak Prior) | 0.05 | 10.1 | 0.74 | Similar to Pocock; good for minimizing expected toxicities |

| Beta-Binomial (Strong Prior) | 0.05 | 11.4 | 0.81 | Similar to O'Brien-Fleming; higher power |
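
To make the Beta-Binomial idea concrete, the sketch below computes a posterior-probability stopping boundary for the scenario above (p0 = 20%, up to 60 patients). The Beta(1, 4) prior and the 0.95 posterior threshold are assumptions chosen for illustration; they are not the calibrated settings behind the table, so the resulting boundary will not reproduce the tabulated operating characteristics.

```python
from math import comb

def beta_cdf_integer(x, a, b):
    """Regularized incomplete beta I_x(a, b) for positive integer a and b,
    using its identity with a binomial tail sum."""
    n = a + b - 1
    return sum(comb(n, j) * x**j * (1 - x) ** (n - j) for j in range(a, n + 1))

def prob_toxicity_exceeds(p0, toxicities, patients, prior_a=1, prior_b=4):
    """Posterior P(toxicity rate > p0) under a Beta(prior_a, prior_b) prior."""
    a = prior_a + toxicities
    b = prior_b + (patients - toxicities)
    return 1.0 - beta_cdf_integer(p0, a, b)

def stopping_boundary(p0=0.20, max_patients=60, threshold=0.95,
                      prior_a=1, prior_b=4):
    """For each enrolled-patient count n, the smallest number of toxicities
    that pushes the posterior probability above `threshold` (None if no
    count up to n does)."""
    boundary = {}
    for n in range(1, max_patients + 1):
        boundary[n] = next(
            (t for t in range(n + 1)
             if prob_toxicity_exceeds(p0, t, n, prior_a, prior_b) > threshold),
            None,
        )
    return boundary

boundary = stopping_boundary()
```

In practice the threshold and prior would be tuned by simulation until the overall type I error rate matches the target (0.05 in the table).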

In Vitro ADME-Tox Profiling Assays

Early in vitro profiling is critical for "failing early and failing cheap" [22]. The following table outlines key assays for lead characterization.

| Assay Category | Specific Test | Primary Function | Key Outcome |

|---|---|---|---|

| Physicochemical Profiling | Lipophilicity (LogP), Solubility, pKa | Measures fundamental compound properties | Guides SAR to optimize solubility and reduce toxicity risk |

| In Vitro ADME/PK | Metabolic Stability (Microsomes), Caco-2 Permeability, Plasma Protein Binding | Predicts compound behavior in a biological system | Identifies compounds with poor metabolic stability or absorption |

| Toxicological Assessment | hERG Inhibition, Cytotoxicity (e.g., HepG2), Genotoxicity (Ames) | Screens for specific organ toxicities and genetic damage | Flags compounds with cardiac, hepatic, or mutagenic risk |

Experimental Protocols

Protocol 1: In Vitro ADME-Tox Profiling for Lead Prioritization

Objective: To generate an early ADME-Tox profile for lead compounds to prioritize them for further optimization [22].

Methodology:

- Physicochemical Profiling:

- Solubility: Shake-flask method. Dissolve compound in PBS (pH 7.4) and quantify concentration in supernatant after equilibrium via HPLC-UV.

- Lipophilicity: Determine the partition coefficient (LogP) between octanol and water using HPLC or shake-flask method.

- In Vitro Metabolic Stability:

- Incubate compound (1 µM) with human liver microsomes (0.5 mg/mL) in the presence of NADPH.

- Take time-points (0, 5, 15, 30, 60 min) and stop the reaction with cold acetonitrile.

- Analyze by LC-MS/MS to determine the percentage of parent compound remaining. Calculate in vitro half-life (T1/2) and intrinsic clearance (CLint).

- Cytotoxicity Screening:

- Treat human hepatoma cell line (e.g., HepG2) with a range of compound concentrations for 72 hours.

- Measure cell viability using a standard MTT or CellTiter-Glo assay.

- Calculate the half-maximal cytotoxic concentration (CC50).
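
The half-life and intrinsic clearance calculation in the metabolic stability step can be sketched as follows, assuming first-order depletion of the parent compound. The percent-remaining values are made-up example data, and the scaling used here (CLint in µL/min/mg = k × 1000 / protein concentration in mg/mL) is one common convention rather than a formula given in the source.

```python
import math

def fit_elimination_rate(times_min, percent_remaining):
    """Least-squares slope of ln(% remaining) versus time; returns k in 1/min."""
    ys = [math.log(p) for p in percent_remaining]
    n = len(times_min)
    mean_t = sum(times_min) / n
    mean_y = sum(ys) / n
    sxy = sum((t - mean_t) * (y - mean_y) for t, y in zip(times_min, ys))
    sxx = sum((t - mean_t) ** 2 for t in times_min)
    return -sxy / sxx

def half_life_min(k):
    """In vitro half-life (min) for a first-order rate constant k (1/min)."""
    return math.log(2) / k

def intrinsic_clearance(k, protein_mg_per_ml):
    """CLint in µL/min/mg protein: k * (1000 µL/mL) / (mg protein per mL)."""
    return k * 1000.0 / protein_mg_per_ml

# Time points from the protocol; percent remaining is hypothetical.
times = [0, 5, 15, 30, 60]
remaining = [100.0, 89.1, 70.7, 50.0, 25.0]

k = fit_elimination_rate(times, remaining)
t_half = half_life_min(k)
clint = intrinsic_clearance(k, protein_mg_per_ml=0.5)
```

With these example values the fit gives a half-life of about 30 minutes; real data should be checked for log-linearity before the slope is trusted.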

Protocol 2: Zebrafish Toxicity and Efficacy Screening

Objective: To evaluate the in vivo toxicity and efficacy of a lead compound in a zebrafish model, bridging in vitro and mammalian in vivo data [22].

Methodology:

- Acute Toxicity Assay:

- Animal Model: Wild-type zebrafish embryos.

- Dosing: At 24 hours post-fertilization (hpf), dechorionate embryos and array into 24-well plates (n=10/group). Expose to a logarithmic dilution series of the test compound (e.g., 0.1, 1, 10, 100 µM) or vehicle control.

- Endpoint Monitoring: Record mortality, hatch rate, and gross morphological malformations (e.g., pericardial edema, yolk sac edema, tail curvature) daily for up to 96 hpf.

- Analysis: Determine the LC50 (the concentration lethal to 50% of embryos) and the TD50 (here, the concentration producing teratogenic malformations in 50% of embryos).

- Cardiotoxicity Assay:

- Animal Model: Transgenic zebrafish lines with fluorescently tagged cardiomyocytes (e.g., cmlc2:GFP).

- Dosing: Expose embryos to sub-lethal concentrations of the compound.

- Endpoint Monitoring: At 48-72 hpf, use high-speed video microscopy to capture heartbeats. Quantify heart rate, arrhythmia, and atrial-to-ventricular ratios.

- Efficacy Testing in Disease Models:

- Model Generation: Utilize relevant zebrafish disease models (e.g., angiogenesis inhibition models, infection models).

- Dosing: Treat larvae with the compound at non-toxic concentrations.

- Endpoint Analysis: Assess efficacy using phenotype-specific readouts (e.g., vessel length in angiogenesis assays, bacterial load in infection models).
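
For the acute toxicity analysis, a rough LC50 estimate can be obtained by interpolating on a log-concentration scale between the two doses that bracket 50% mortality. This is a minimal sketch with made-up mortality fractions; a probit or logistic dose-response fit is the more usual choice when enough dose levels are available.

```python
import math

def lc50_log_interpolation(concentrations_uM, fraction_dead):
    """Interpolate on log10(concentration) between the doses bracketing 50%.

    Returns None when no adjacent pair of doses brackets 50% mortality.
    """
    points = list(zip(concentrations_uM, fraction_dead))
    for (c_lo, f_lo), (c_hi, f_hi) in zip(points, points[1:]):
        if f_lo <= 0.5 <= f_hi and f_hi > f_lo:
            frac = (0.5 - f_lo) / (f_hi - f_lo)
            log_c = math.log10(c_lo) + frac * (math.log10(c_hi) - math.log10(c_lo))
            return 10 ** log_c
    return None

# Dilution series from the protocol; mortality fractions are hypothetical (n=10/group).
doses = [0.1, 1.0, 10.0, 100.0]
mortality = [0.0, 0.1, 0.3, 0.9]
lc50 = lc50_log_interpolation(doses, mortality)
```

The same function can be applied to malformation fractions to estimate the teratogenic concentration.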

The Scientist's Toolkit: Research Reagent Solutions

| Tool / Reagent | Supplier Examples | Function in Experiment |

|---|---|---|

| Human Liver Microsomes | XenoTech, Corning | In vitro model of human Phase I metabolism to assess metabolic stability [22]. |

| Caco-2 Cell Line | ATCC, Sigma-Aldrich | Human colorectal adenocarcinoma cell line used as an in vitro model of intestinal permeability [22]. |

| Zebrafish Embryos | Zebrafish International Resource Center (ZIRC) | Vertebrate model for high-throughput, cost-effective in vivo toxicity and efficacy screening [22]. |

| hERG-Expressed Cell Line | ChanTest (Eurofins), Thermo Fisher | Cell line engineered to express the hERG potassium channel for predicting cardiotoxicity risk (QT prolongation) [22]. |

| Stable Target Protein | Creative Biostructure, internal expression | Purified, functional protein for biophysical binding assays and crystallography to guide SAR [19]. |

| NMR-based Pharmacometabonomics Platform | Creative Biostructure, Bruker | Technology to select optimal preclinical animal models based on metabolic similarity to humans [22]. |

Advanced Tools and Techniques: From AI-Driven Design to Experimental Assays

Frequently Asked Questions (FAQs)

Q1: What are the most common file format errors in molecular docking, and how can I avoid them? A common error is using the incorrect file format for ligands. Docking tools like AutoDock Vina require specific formats such as PDBQT. If you start with an SDF file from a database like ZINC, you must convert it to PDBQT using a tool like Open Babel. Attempting to use an SDF file directly in the docking step will result in a failure [24] [25].
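
A minimal sketch of the SDF-to-PDBQT conversion, wrapping the Open Babel command-line tool from Python. The file and directory names are hypothetical, and the option set (`-m` to write one file per molecule, `-p` to add hydrogens for a given pH) is an assumption about what a typical workflow needs; check the flags against your installed Open Babel version before relying on them.

```python
import shutil
import subprocess
from pathlib import Path

def build_obabel_command(sdf_path, out_dir, ph=7.4):
    """Assemble an `obabel` call that splits a multi-molecule SDF into
    individual PDBQT files (ligand_1.pdbqt, ligand_2.pdbqt, ...)."""
    out_pattern = str(Path(out_dir) / "ligand_.pdbqt")
    return [
        "obabel", "-isdf", str(sdf_path),
        "-opdbqt", "-O", out_pattern,
        "-m",            # one output file per input molecule
        "-p", str(ph),   # add hydrogens appropriate for this pH
    ]

def convert(sdf_path, out_dir, ph=7.4):
    """Run the conversion if Open Babel is installed; otherwise raise."""
    if shutil.which("obabel") is None:
        raise RuntimeError("Open Babel ('obabel') was not found on PATH")
    Path(out_dir).mkdir(parents=True, exist_ok=True)
    subprocess.run(build_obabel_command(sdf_path, out_dir, ph), check=True)

# Hypothetical paths, shown only to illustrate the assembled command.
example_cmd = build_obabel_command("zinc_hits.sdf", "ligands_pdbqt")
```

Keeping command construction separate from execution makes it easy to log or inspect the exact call before launching a large batch conversion.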

Q2: Why does my virtual screening yield molecules with good binding affinity but poor drug-like properties? This is a classic challenge in lead optimization. A comprehensive drug design protocol should integrate multiple filters. After an initial docking screen for binding affinity, you should employ:

- Machine Learning (ML) Classifiers: Train models to distinguish between active and inactive compounds based on chemical descriptors [24].

- ADME-T Prediction: Evaluate Absorption, Distribution, Metabolism, Excretion, and Toxicity properties early on [24].

- PASS Prediction: Assess the potential biological activity spectra of the hit compounds [24].

Q3: My docking results are inconsistent with experimental data. What could be wrong? This can stem from several challenges in the Structure-Based Drug Design (SBDD) pipeline:

- Protein Flexibility: The static protein structure used in docking may not represent the dynamic conformations it adopts in solution. Molecular Dynamics (MD) simulations can help account for this flexibility and assess the stability of the docked complex [24] [26].

- Ligand Preparation: Errors in generating the correct 3D structure, stereochemistry, tautomers, or protonation states of the ligand at physiological pH can lead to inaccurate results. Always double-check the preparation steps [26].

- Scoring Function Limitations: Scoring functions are imperfect and may not accurately estimate binding affinity for all ligand classes. It is often advisable to use multiple scoring functions or more advanced methods like Free Energy Perturbation (FEP) calculations for critical compounds [26].

Q4: How can I generate novel drug candidates for a target with no known inhibitors? Generative AI models, such as Deep Hierarchical Variational Autoencoders (e.g., DrugHIVE), are designed for this task. These models learn the joint probability distribution of ligands bound to their receptors from structural data and can generate novel molecules conditioned on the 3D structure of your target's binding site, even for proteins with only AlphaFold-predicted structures [27].

Troubleshooting Guides

Docking Tool Installation and Setup Errors

| Symptom | Possible Cause | Solution |

|---|---|---|

| Installation of a drug design package (e.g., DrugEx) fails with dependency errors. | Incompatible versions of Python libraries (e.g., scikit-learn). | Ensure you are using the latest pip version. After installation, try pip install --upgrade scikit-learn to resolve conflicts [28]. |

| GPU-accelerated tool runs slowly or fails to detect GPU. | Lack of GPU compatibility or incorrect CUDA version. | Verify that your GPU is compatible and that you have the required version of CUDA (e.g., CUDA 9.2 for some tools) installed [28]. |

| Tutorial data works, but personal data fails in a Galaxy server workflow. | The tool parameters may not be suitable for your specific data format or size. | Check the "info" field of your input dataset for warnings. Compare your parameter settings against those used in the tutorial. Consider using the provided Docker image for a controlled environment [25]. |

Molecular Docking and Scoring Inconsistencies

| Symptom | Possible Cause | Solution |

|---|---|---|

| The docked ligand pose has unrealistic bond geometries or clashes. | An invalid ligand 3D structure was used as input. | Re-prepare the ligand structure, ensuring correct stereochemistry, tautomeric form, and protonation states at pH 7.4 [26]. |

| A known active compound scores poorly (high binding energy) in docking. | 1. Inaccurate protein structure: The binding site may be in a non-receptive conformation. 2. Limitations of the scoring function. | 1. Use a different protein structure (e.g., from a different crystal form) or employ an ensemble docking approach. 2. Validate the docking protocol by re-docking a known native ligand and confirming it reproduces the experimental pose. |

| Difficulty in rationalizing structure-activity relationships (SAR) based on docking poses. | The single, static pose obtained may not represent the binding mode across the congeneric series. | Use Molecular Dynamics (MD) simulations to generate an ensemble of receptor conformations for docking or to assess the stability of the docked pose over time [24] [26]. |

Virtual Screening and Hit Optimization Challenges

| Symptom | Possible Cause | Solution |

|---|---|---|

| High hit rate in virtual screening, but compounds are inactive in assays. | The screening identified "promiscuous binders" or compounds with undesirable motifs (e.g., PAINS - Pan-Assay Interference Compounds). | Apply PAINS filters during the compound filtering stage. Use tools like the Directory of Useful Decoys (DUD-E) to generate benchmark datasets and test the selectivity of your screening protocol [24] [29]. |

| Optimizing a lead compound for binding affinity inadvertently makes it synthetically intractable or toxic. | The optimization strategy focused on a single objective (binding affinity). | Adopt a multi-objective optimization strategy that simultaneously optimizes binding energy, synthetic accessibility, and ADMET properties [30]. |

| The chemical space of available commercial libraries is limiting for finding novel scaffolds. | You have exhausted the "easily accessible" chemical space. | Utilize generative AI models (e.g., DrugHIVE, DrugEx) for de novo drug design. These can perform "scaffold hopping" to generate novel molecular structures with desired properties [28] [27]. |

Experimental Protocols & Workflows

Protocol: Structure-Based Virtual Screening for Lead Identification

This protocol is adapted from a study identifying natural inhibitors of βIII-tubulin [24].

1. Homology Modeling and Target Preparation:

- Retrieve the target protein sequence from a database like UniProt (ID: Q13509 for βIII-tubulin).

- Identify a suitable template structure (e.g., PDB ID: 1JFF) with high sequence identity.

- Use software like Modeller to generate a 3D homology model. Select the final model based on a low DOPE score and validate its stereo-chemical quality using a Ramachandran plot (e.g., with PROCHECK) [24].

2. Compound Library Preparation:

- Obtain a library of compounds (e.g., 89,399 natural compounds from the ZINC database) in SDF format.

- Convert the SDF files into PDBQT format using Open Babel, which adds the partial charges and AutoDock atom types that the docking step requires [24].

3. High-Throughput Virtual Screening:

- Define the binding site coordinates (e.g., the 'Taxol site').

- Use a docking tool like AutoDock Vina to screen the entire library.

- Select the top hits (e.g., 1,000 compounds) based on the best (lowest) binding energy [24].

4. Machine Learning-Based Hit Refinement:

- Training Data: Prepare a dataset of known active (Taxol-site targeting) and inactive (non-Taxol targeting) compounds. Generate decoys with similar physicochemical properties but different topologies using the DUD-E server.

- Descriptor Calculation: Calculate molecular descriptors for both training and test (your top hits) datasets using software like PaDEL-Descriptor.

- Model Training & Prediction: Train a supervised ML classifier (e.g., with 5-fold cross-validation) to distinguish active from inactive molecules. Use this model to predict the activity of your top hits, narrowing the list to the most promising active candidates (e.g., 20 compounds) [24].

5. ADME-T and Biological Property Evaluation:

- Subject the ML-refined hits to in silico ADME-T (Absorption, Distribution, Metabolism, Excretion, and Toxicity) and PASS (Prediction of Activity Spectra for Substances) analysis to filter for compounds with desirable drug-like and pharmacokinetic properties [24].

6. Molecular Dynamics Validation:

- Perform MD simulations (e.g., for 100 ns or more) on the apo protein and the protein-ligand complexes.

- Analyze trajectories using RMSD (root mean square deviation), RMSF (root mean square fluctuation), Rg (radius of gyration), and SASA (solvent accessible surface area) to confirm the complex's stability and the ligand's impact on protein dynamics [24].
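
Two of the trajectory metrics named in step 6 can be written out directly. The sketch below computes RMSD between two frames and an unweighted radius of gyration from plain coordinate lists; it assumes the frames have already been superimposed on the reference, and the three-atom coordinates are toy values. MD packages such as GROMACS provide their own analysis tools for real trajectories.

```python
import math

def rmsd(coords_a, coords_b):
    """Root mean square deviation between two equally sized coordinate sets.
    Assumes the frames are already aligned (no fitting is done here)."""
    squared = sum(
        (a - b) ** 2
        for point_a, point_b in zip(coords_a, coords_b)
        for a, b in zip(point_a, point_b)
    )
    return math.sqrt(squared / len(coords_a))

def radius_of_gyration(coords):
    """Unweighted radius of gyration (all atoms treated as equal mass)."""
    n = len(coords)
    centroid = [sum(p[i] for p in coords) / n for i in range(3)]
    mean_sq = sum(
        sum((p[i] - centroid[i]) ** 2 for i in range(3)) for p in coords
    ) / n
    return math.sqrt(mean_sq)

# Toy frames: the second frame is the reference shifted by 0.1 along x.
reference = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
frame = [(x + 0.1, y, z) for x, y, z in reference]

frame_rmsd = rmsd(reference, frame)
reference_rg = radius_of_gyration(reference)
```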

Workflow for Structure-Based Virtual Screening

Protocol: Multi-Objective Optimization for Molecular Docking

This protocol addresses the challenge of single-objective scoring by considering multiple, sometimes competing, energy terms [30].

1. Problem Formulation:

- Define the molecular docking problem as a Multi-Objective Problem (MOP). A common approach is to minimize two objectives simultaneously:

- Intermolecular Energy (Einter): The energy of interaction between the ligand and the receptor.

- Intramolecular Energy (Eintra): The internal energy of the ligand itself [30].

2. Algorithm Selection:

- Choose a Multi-Objective Optimization Algorithm. Studies have compared several, including:

- NSGA-II (Non-dominated Sorting Genetic Algorithm II)

- SMPSO (Speed-constrained Multi-objective PSO)

- GDE3 (Generalized Differential Evolution 3)

- MOEA/D (Multi-objective Evolutionary Algorithm based on Decomposition)

- SMS-EMOA (S-metric Selection EMOA) [30].

3. Integration with Docking Software:

- Integrate the chosen algorithm with a docking energy function (e.g., from AutoDock 4.2). The optimization algorithm will generate ligand conformations and orientations, which are evaluated by the docking software's energy function.

4. Result Analysis:

- The output is not a single solution but a Pareto front: a set of non-dominated solutions. A solution is "Pareto optimal" if no other solution is at least as good in every objective and strictly better in at least one. Analysts can then select a solution from this front that offers the best trade-off for their specific needs [30].
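
The non-dominated filtering behind a Pareto front can be sketched in a few lines. Each docking pose is represented here as a hypothetical (Einter, Eintra) pair with both objectives minimized; the energy values are made up, and a real multi-objective optimizer such as NSGA-II performs this sorting internally alongside its search.

```python
def dominates(a, b):
    """True if `a` is no worse than `b` in every objective and strictly
    better in at least one (all objectives are minimized)."""
    return (all(x <= y for x, y in zip(a, b))
            and any(x < y for x, y in zip(a, b)))

def pareto_front(solutions):
    """Return the solutions that no other solution dominates."""
    return [s for s in solutions
            if not any(dominates(other, s) for other in solutions)]

# Hypothetical poses as (intermolecular energy, intramolecular energy).
poses = [(-9.0, 2.0), (-8.0, 0.5), (-7.0, 0.4), (-8.5, 2.5), (-6.0, 1.0)]
front = pareto_front(poses)
```

In this example the pose (-8.5, 2.5) is dropped because (-9.0, 2.0) is better on both energies, while the three remaining poses each represent a different trade-off.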

Multi-Objective Docking Workflow

| Category | Item/Software/Database | Primary Function |

|---|---|---|

| Target & Structure Databases | RCSB Protein Data Bank (PDB) | Repository for experimentally-determined 3D structures of proteins and nucleic acids [24]. |

| | UniProt | Comprehensive resource for protein sequence and functional information [24]. |

| | AlphaFold Database | Repository of highly accurate predicted protein structures from AlphaFold [27]. |

| Compound Libraries | ZINC Database | Curated collection of commercially available chemical compounds for virtual screening, provided in ready-to-dock 3D formats [24] [29]. |

| | ChEMBL | Manually curated database of bioactive molecules with drug-like properties, containing binding and functional assay data [29]. |

| Software & Tools | AutoDock Vina | Widely used program for molecular docking and virtual screening [24]. |

| | Open Babel | A chemical toolbox designed to speak the many languages of chemical data, crucial for file format conversion [24]. |

| | PaDEL-Descriptor | Software to calculate molecular descriptors and fingerprints for quantitative structure-activity relationship (QSAR) and machine learning studies [24]. |

| | GROMACS / NAMD | High-performance molecular dynamics simulation packages for simulating biomolecular systems [24]. |

| | DrugHIVE | A deep hierarchical generative model for de novo structure-based drug design [27]. |

| Benchmarking & Validation | DUD-E (Directory of Useful Decoys: Enhanced) | Provides benchmarking datasets to test docking algorithms, with active compounds and property-matched decoys [24] [29]. |

Troubleshooting Guides

Guide 1: Troubleshooting Generative Model Output

Problem: Generated molecules are chemically invalid or lack desired activity profiles.

Background: This issue often arises during the fine-tuning of generative deep learning models on limited target-specific data, leading to a failure in learning valid chemical rules or relevant structure-activity relationships [31].

| Problem | Potential Root Cause | Diagnostic Steps | Solution |

|---|---|---|---|

| High rate of invalid SMILES strings [32]. | Model struggles with SMILES syntax; insufficient transfer learning. | Calculate the percentage of invalid SMILES in a generated batch. Check reconstruction accuracy on validation sets [31]. | Switch to a representation like SELFIES that guarantees molecular validity. Apply transfer learning: pre-train on a large general dataset (e.g., ZINC) before fine-tuning on target data [31] [32]. |

| Generated molecules are chemically similar but lack potency. | Mode collapse; model explores a limited chemical space. | Analyze the structural diversity (e.g., Tanimoto similarity) of generated molecules. | Implement sampling enhancement and add regularization (e.g., Gaussian noise to state vectors) during training to encourage exploration [31]. |

| Molecules have good predicted affinity but poor selectivity. | Model optimization focused solely on primary target activity. | Profile generated compounds against off-target panels using in silico models. | Retrain the model with a multi-task objective, incorporating selectivity scores or negative data from off-targets into the loss function. |
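
The diversity diagnostic for mode collapse can be sketched as a mean pairwise Tanimoto similarity over fingerprints. Fingerprints are represented here as plain Python sets of "on" bit indices with made-up values; in practice they would come from a cheminformatics toolkit, and the similarity level that signals collapse is a judgment call for each project.

```python
from itertools import combinations

def tanimoto(bits_a, bits_b):
    """Tanimoto (Jaccard) similarity between two sets of on-bit indices."""
    union = len(bits_a | bits_b)
    return len(bits_a & bits_b) / union if union else 0.0

def mean_pairwise_similarity(fingerprints):
    """Average Tanimoto similarity over all unordered pairs.
    Values near 1.0 suggest the generator is producing near-duplicates."""
    pairs = list(combinations(fingerprints, 2))
    return sum(tanimoto(a, b) for a, b in pairs) / len(pairs)

# Hypothetical fingerprints for three generated molecules.
generated = [{1, 2, 3, 4}, {1, 2, 3, 5}, {1, 2, 3, 4}]
similarity = mean_pairwise_similarity(generated)
```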

Guide 2: Troubleshooting Experimental Validation

Problem: AI-designed compounds perform poorly in in vitro or in vivo assays.

Background: A disconnect between computational predictions and experimental results can stem from inadequate property prediction or overfitting to the training data [1] [32].

| Problem | Potential Root Cause | Diagnostic Steps | Solution |

|---|---|---|---|

| Potent in silico binding, but no cellular activity. | Poor ADMET (Absorption, Distribution, Metabolism, Excretion, Toxicity) properties, such as low cell permeability [33]. | Run in silico ADMET predictions. Check for excessive molecular weight or lipophilicity. | Integrate ADMET filters during the generative process, not just as a post-filter. Use models trained on physicochemical properties [33]. |

| Inconsistency between in vitro and in vivo efficacy. | Unfavorable pharmacokinetics (PK) or in vivo biology that the models did not account for [1]. | Review PK/PD data from lead optimization. | Adopt a "patient-first" strategy. Incorporate patient-derived biology (e.g., high-content phenotypic screening on patient tissue samples) earlier in the discovery workflow [1]. |

| Inability to reproduce a competitor's reported activity. | Data bias or overfitting to the specific chemical series in the training data. | Perform a chemical space analysis to see if your training set covers diverse scaffolds. | Enrich the training dataset with diverse chemotypes. Use data augmentation or apply reinforcement learning to balance multiple properties [32]. |

Frequently Asked Questions (FAQs)

Q1: My generative model produces molecules with high predicted affinity, but they are difficult to synthesize. How can I improve synthesizability? A1: This is a common challenge in de novo design [32]. To address it:

- Incorporate Synthesizability Directly: Use a generative model that employs fragment-based molecular representations (e.g., SAFE, GroupSELFIES, fragSMILES), which are built from chemically meaningful and often synthetically accessible building blocks [32].

- Use a Synthesizability Score: Integrate a synthetic accessibility (SA) score as a penalty or reward term in your model's objective function during the reinforcement learning phase. This guides the model towards more practical structures [32].
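
One simple way to fold a synthetic accessibility term into a reinforcement learning reward is a thresholded linear penalty, sketched below. It assumes an SA score on the usual 1 (easy) to 10 (hard) scale; the weight, the threshold, and the use of predicted pIC50 as the base reward are hypothetical choices for illustration.

```python
def shaped_reward(predicted_pic50, sa_score, sa_weight=0.5, sa_threshold=4.0):
    """Base reward minus a penalty that grows once the SA score exceeds
    `sa_threshold`. All parameter values are illustrative assumptions."""
    penalty = max(0.0, sa_score - sa_threshold)
    return predicted_pic50 - sa_weight * penalty

# Two hypothetical molecules with equal predicted potency.
easy_to_make = shaped_reward(predicted_pic50=8.0, sa_score=3.0)
hard_to_make = shaped_reward(predicted_pic50=8.0, sa_score=6.0)
```

Leaving molecules below the threshold unpenalized avoids pushing the generator toward trivially simple structures while still discouraging intractable ones.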

Q2: What is the most effective deep learning architecture for generating novel, target-specific scaffolds? A2: While many architectures exist, a distribution-learning conditional Recurrent Neural Network (cRNN) has proven effective for this specific task in a published case study [31]. Its key advantages are:

- No Goal Function Needed: It avoids the pitfalls of goal-directed models that can generate numerically optimal but impractical molecules.

- Capacity for Novelty: When combined with transfer learning, regularization, and sampling enhancement, this architecture can generate molecules with previously unreported scaffolds that are still target-specific, as demonstrated by the discovery of the novel RIPK1 inhibitor RI-962 [31].

Q3: We've advanced an AI-designed candidate to the clinic, but overall success rates remain low. Is AI just producing "faster failures"? A3: This is a critical question in the field [1]. While no AI-discovered drug has yet received full market approval, the technology is demonstrating profound value by:

- Dramatically Compressing Early-Stage Timelines: Companies like Insilico Medicine and Exscientia have advanced candidates from target to clinic in under two years, a fraction of the traditional 5-year timeline [1].

- Improving Medicinal Chemistry Efficiency: Exscientia's CDK7 inhibitor program achieved a clinical candidate after synthesizing only 136 compounds, compared to the thousands typically required, suggesting higher-quality leads [1].

The current wave of AI-designed drugs in Phase I/II trials is the true test of whether these efficiency gains translate into better clinical outcomes [1].

Q4: How can I visualize and interpret what my generative model has learned? A4: Visualization is key to debugging and understanding deep learning models [34].

- For Architecture: Use tools like PyTorchViz (for PyTorch) or plot_model (for Keras) to generate a graph of your model's layers and data flow [34].

- For Interpretability: Generate activation heatmaps or use deep feature factorization to uncover which high-level concepts and input features (e.g., specific molecular sub-structures) the model uses to make decisions [34].

Experimental Protocol: Discovery of a Novel RIPK1 Inhibitor via Generative Deep Learning

This protocol details the methodology from a published study that discovered a potent and selective RIPK1 inhibitor, RI-962, using a generative deep learning model [31].

Model Establishment and Training

- Model Architecture: A Generative Deep Learning (GDL) model based on a distribution-learning conditional Recurrent Neural Network (cRNN) with Long Short-Term Memory (LSTM) was implemented [31].

- Molecular Representation: Molecules were represented as SMILES strings and encoded using one-hot encoding for model input [31] [32].

- Transfer Learning: The model was first pre-trained on a large-scale source dataset (~16 million molecules from ZINC12) to learn general chemical rules. It was then fine-tuned on a target dataset of 1,030 known RIPK1 inhibitors [31].

- Regularization Enhancement: Gaussian noise was added to the model's state vector during training to improve its generalization capability [31].

- Sampling Enhancement: During the inference/generation phase, new molecules were created by sampling random state vectors from the learned latent space [31].
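
The one-hot SMILES encoding and the Gaussian-noise regularization described above can be sketched with the standard library alone. The tokenizer below (single characters plus the two-letter halogens Cl and Br), the noise level, and the example molecules are assumptions for illustration; the study's exact tokenization and noise settings are not given here.

```python
import random

def tokenize(smiles):
    """Split a SMILES string into tokens, keeping Cl and Br together."""
    tokens, i = [], 0
    while i < len(smiles):
        if smiles[i:i + 2] in ("Cl", "Br"):
            tokens.append(smiles[i:i + 2])
            i += 2
        else:
            tokens.append(smiles[i])
            i += 1
    return tokens

def build_vocab(smiles_list):
    """Sorted list of every token seen in the training strings."""
    return sorted({tok for s in smiles_list for tok in tokenize(s)})

def one_hot(smiles, vocab):
    """Encode a SMILES string as a list of one-hot rows, one per token."""
    index = {tok: i for i, tok in enumerate(vocab)}
    rows = []
    for tok in tokenize(smiles):
        row = [0] * len(vocab)
        row[index[tok]] = 1
        rows.append(row)
    return rows

def add_gaussian_noise(state_vector, sigma=0.1, rng=None):
    """Perturb a state vector with zero-mean Gaussian noise (sigma is assumed)."""
    rng = rng or random.Random(0)
    return [x + rng.gauss(0.0, sigma) for x in state_vector]

training_smiles = ["c1ccccc1Cl", "CCO"]
vocab = build_vocab(training_smiles)
encoded = one_hot("c1ccccc1Cl", vocab)
noisy_state = add_gaussian_noise([0.0, 0.5, -0.5])
```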

Compound Generation and Virtual Screening

- Library Generation: The trained GDL model was used to generate a tailor-made virtual compound library targeting RIPK1.

- Virtual Screening: This generated library was screened in silico to prioritize molecules with high predicted binding affinity and selectivity for RIPK1.

Experimental Validation

- Bioactivity Evaluation: Top-ranking virtual hits were synthesized and tested in in vitro biochemical and cellular assays to confirm RIPK1 inhibition and potency (IC50).

- Selectivity Profiling: The lead compound (RI-962) was profiled against a panel of other kinases to establish selectivity.

- Structural Biology: The binding mode was confirmed by solving the X-ray crystal structure of RIPK1 in complex with RI-962.

- Cellular Efficacy: The compound's ability to protect cells from necroptosis (a form of cell death mediated by RIPK1) was demonstrated in vitro.

- In Vivo Efficacy: Good in vivo efficacy was confirmed in two separate murine models of inflammatory disease.

Research Reagent Solutions

The following table lists key computational and experimental reagents used in AI-driven discovery campaigns for potent and selective inhibitors, as exemplified by the RIPK1 case study [31] and industry platforms [1].

| Research Reagent | Function in AI-Driven Discovery | Example / Notes |

|---|---|---|

| ZINC Database [31] | A large, publicly available database of commercially available compounds for pre-training generative models. | Provides ~16 million molecular structures to teach models general chemical rules. |

| Conditional RNN (cRNN) [31] | The core generative model architecture that creates new molecules conditioned on a target-specific data distribution. | Balances output specificity; can be guided by molecular descriptors. |

| SMILES/SELFIES [32] | String-based molecular representations that allow deep learning models to process chemical structures as sequences. | SELFIES is preferred when guaranteed molecular validity is required. |

| RIPK1 Biochemical Assay | An in vitro test to measure the half-maximal inhibitory concentration (IC50) of generated compounds against the RIPK1 kinase. | Used to validate the primary activity of AI-generated hits. |

| Kinase Selectivity Panel | A broad profiling assay to test lead compounds against a wide range of other kinases. | Critical for confirming that a potent inhibitor (e.g., RI-962) is also selective, reducing off-target risk [31]. |

| Patient-Derived Cell Assays [1] | High-content phenotypic screening of AI-designed compounds on real patient tissue samples (e.g., tumor biopsies). | Used by companies like Exscientia to ensure translational relevance and biological efficacy early in the pipeline. |

AI-Designed Small Molecules in Clinical Trials (as of 2024-2025)

The table below summarizes a selection of AI-designed small molecules that have progressed to clinical trials, demonstrating the output of platforms from leading companies [1] [33].

| Small Molecule | Company | Target | Clinical Stage (as of 2024-2025) | Indication |
|---|---|---|---|---|
| INS018-055 | Insilico Medicine | TNIK | Phase 2a | Idiopathic Pulmonary Fibrosis (IPF) [33] |
| GTAEXS-617 | Exscientia | CDK7 | Phase 1/2 | Solid Tumors [1] [33] |
| EXS-74539 | Exscientia | LSD1 | Phase 1 | Oncology [1] |
| REC-4881 | Recursion | MEK | Phase 2 | Familial Adenomatous Polyposis [33] |
| REC-3964 | Recursion | C. diff Toxin | Phase 2 | Clostridioides difficile Infection [33] |
| ISM-6631 | Insilico Medicine | Pan-TEAD | Phase 1 | Mesothelioma & Solid Tumors [33] |
| ISM-3091 | Insilico Medicine | USP1 | Phase 1 | BRCA Mutant Cancer [33] |
| RLY-2608 | Relay Therapeutics | PI3Kα | Phase 1/2 | Advanced Breast Cancer [33] |

High-Throughput and Ultra-High-Throughput Screening (HTS/UHTS) in Optimization

High-Throughput Screening (HTS) and Ultra-High-Throughput Screening (UHTS) are foundational technologies in modern drug discovery, serving as critical engines for identifying and optimizing potential therapeutic compounds. Within the lead optimization pipeline, these technologies enable researchers to rapidly test hundreds of thousands of chemical compounds against biological targets to identify promising "hit" molecules [20] [35]. This process is particularly vital for addressing urgent global health challenges, such as the development of novel antimalarial drugs in the face of increasing drug resistance [35].

The transition from initial hit identification to a viable lead compound represents a significant bottleneck in drug development. HTS/UHTS methodologies help overcome this bottleneck by providing the extensive data necessary for informed decision-making. When combined with meta-analysis approaches, HTS creates a robust method for screening candidate compounds, enabling the identification of new chemical entities with confirmed in vivo activity as potential treatments for drug-resistant diseases [35]. The integration of these technologies into the lead optimization workflow has become indispensable for efficiently navigating the vast chemical space of potential therapeutics.

Key Quantitative Data in HTS/UHTS

The following table summarizes critical quantitative parameters and metrics from recent HTS studies, providing benchmarks for experimental design and hit selection in lead optimization.

Table 1: Key Quantitative Parameters in HTS/UHTS Studies

| Parameter | Typical Range / Value | Context and Significance |
|---|---|---|
| Library Size | 9,547 - >100,000 compounds [36] [35] | Scope of screening effort; impacts probability of identifying novel hits. |
| Primary Screening Concentration | 10 µM [35] | Standard initial test concentration for identifying active compounds. |
| IC₅₀ Threshold for Hit Confirmation | < 1 µM [35] | Potency cutoff for designating compounds as confirmed hits. |
| HTS Hit Rate | Top 3% of screened library [35] | Initial identification of active compounds for further investigation. |
| Data Generation Capacity | 200+ million data points from 450+ screens [36] | Demonstrates scale and output of established HTS centers. |
| Screening Throughput | >100,000 compounds per day [37] | Measures the operational speed of HTS/UHTS systems. |
| Animal Model Efficacy | 81.4% - 96.4% parasite suppression [35] | In vivo validation of hits identified through HTS and meta-analysis. |

Troubleshooting Common HTS/UHTS Experimental Issues

FAQ 1: How can I determine if systematic error is affecting my HTS data, and what correction methods are available?

Issue: Systematic measurement errors can produce false positives or false negatives, critically impacting hit selection [37].

Diagnosis and Solutions:

- Statistical Detection: Prior to any correction, apply statistical tests to confirm the presence of systematic error. The Student's t-test has been identified as an accurate method for this assessment. Applying correction methods to error-free data can introduce bias and lead to inaccurate hit selection [37].

- Visual Inspection: Analyze the hit distribution surface of your assay. In the absence of systematic error, hits should be evenly distributed across well locations. Row, column, or specific well patterns indicate location-dependent systematic error [37].

- Common Normalization Methods:

- B-score Normalization: Uses a two-way median polish procedure to account for row and column effects within plates, followed by normalization of residuals by their median absolute deviation (MAD) [37].

- Well Correction: Applies a least-squares approximation and Z-score normalization across all plates for each specific well location to remove biases affecting the entire assay [37].

- Z-score Normalization: A plate-based method that normalizes each raw measurement using the mean (µ) and standard deviation (σ) of all measurements on the same plate: x̂ᵢⱼ = (xᵢⱼ − µ) / σ, where xᵢⱼ is the raw measurement in row i and column j [37].
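
To make the difference between plate-wise Z-scores and B-scores concrete, the following is a minimal sketch in standard-library Python. It is not the implementation from [37]: the function names, the fixed number of polish iterations, and the synthetic plate are illustrative assumptions, and the B-score here divides by the raw MAD exactly as described above (some implementations additionally rescale the MAD by a constant).

```python
import random
from statistics import mean, median, pstdev


def z_score_plate(plate):
    """Plate-wise Z-score: (x_ij - mu) / sigma over all wells of one plate."""
    values = [x for row in plate for x in row]
    mu = mean(values)
    sigma = pstdev(values)  # population SD; the sample SD is an equally common choice
    return [[(x - mu) / sigma for x in row] for row in plate]


def median_polish(plate, n_iter=10):
    """Two-way median polish: repeatedly subtract row medians, then column medians."""
    resid = [list(row) for row in plate]
    n_cols = len(resid[0])
    for _ in range(n_iter):  # fixed iteration count is an assumption of this sketch
        for row in resid:
            m = median(row)
            for j in range(n_cols):
                row[j] -= m
        for j in range(n_cols):
            m = median(row[j] for row in resid)
            for row in resid:
                row[j] -= m
    return resid


def b_score_plate(plate):
    """B-score: median-polish residuals divided by their median absolute deviation."""
    resid = median_polish(plate)
    flat = [x for row in resid for x in row]
    centre = median(flat)
    mad = median(abs(x - centre) for x in flat)
    if mad == 0:
        raise ValueError("MAD is zero; residuals are too uniform to scale")
    return [[x / mad for x in row] for row in resid]


if __name__ == "__main__":
    # Synthetic 8 x 12 plate (hypothetical data): row and column drift plus one
    # planted inhibitor-like well at row 3, column 7.
    rng = random.Random(0)
    plate = [[100 + 5 * i + 2 * j + rng.gauss(0, 2) for j in range(12)] for i in range(8)]
    plate[3][7] -= 60
    b = b_score_plate(plate)
    wells = [(i, j) for i in range(8) for j in range(12)]
    print("most negative B-score at", min(wells, key=lambda w: b[w[0]][w[1]]))
```

Because the median polish removes row and column trends before scaling, the planted well stands out even though the raw plate has strong positional drift; a plate-wise Z-score leaves that drift in the data.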

FAQ 2: What criteria should I use to triage hits from a primary HTS screen for lead optimization?

Issue: Selecting the right hits from a large primary dataset is crucial for efficient resource allocation in downstream optimization.

Prioritization Framework: Beyond simple potency (IC₅₀), employ a multi-parameter prioritization strategy [35]:

- Novelty: Prioritize compounds without previously published research related to your target disease (e.g., Plasmodium for malaria) to ensure innovation and avoid patent conflicts [35].

- Safety and Tolerability: Filter for compounds with low in vitro cytotoxicity (high CC₅₀) and a high selectivity index (SI), and for those with a high median lethal dose (LD₅₀) or maximum tolerated dose (MTD) in animal models (>20 mg/kg) [35].

- Pharmacokinetics (PK): Select compounds with promising PK profiles, such as a maximum serum concentration (Cmax) greater than the concentration required for 100% inhibition (IC₁₀₀) and a half-life (T₁/₂) exceeding 6 hours [35].

- Activity Against Resistant Strains: For infectious diseases, validate hits against drug-resistant strains (e.g., CQ- and ART-resistant malaria strains) early to ensure clinical relevance [35].
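
The prioritization criteria above can be expressed as a simple rule-based filter. The sketch below is illustrative only: the `Hit` record, its field names, the example compounds, and the selectivity-index cutoff (`min_si=10`, with SI taken as CC₅₀ / IC₅₀) are assumptions, since the text specifies only that SI should be "high". The remaining thresholds (IC₅₀ < 1 µM, tolerated dose > 20 mg/kg, Cmax > IC₁₀₀, T₁/₂ > 6 h) are the ones quoted in this section.

```python
from dataclasses import dataclass


@dataclass
class Hit:
    name: str
    ic50_um: float               # potency, in µM
    cc50_um: float               # in vitro cytotoxicity, in µM
    tolerated_dose_mg_kg: float  # LD50 or MTD in an animal model
    cmax_um: float               # maximum serum concentration, in µM
    ic100_um: float              # concentration for 100% inhibition, in µM
    half_life_h: float           # T1/2, in hours
    novel: bool                  # no prior publications for the target disease
    active_on_resistant: bool    # retains activity against resistant strains


def failed_criteria(hit, min_si=10.0):
    """Return the names of the triage criteria a hit fails (empty list = passes)."""
    checks = {
        "potency (IC50 < 1 µM)": hit.ic50_um < 1.0,
        "selectivity index": hit.cc50_um / hit.ic50_um >= min_si,  # cutoff is assumed
        "tolerated dose (> 20 mg/kg)": hit.tolerated_dose_mg_kg > 20.0,
        "Cmax > IC100": hit.cmax_um > hit.ic100_um,
        "half-life (> 6 h)": hit.half_life_h > 6.0,
        "novelty": hit.novel,
        "resistant-strain activity": hit.active_on_resistant,
    }
    return [name for name, ok in checks.items() if not ok]


if __name__ == "__main__":
    # Hypothetical compounds, for illustration only.
    hits = [
        Hit("cmpd-A", 0.2, 50.0, 100.0, 5.0, 2.0, 8.0, True, True),
        Hit("cmpd-B", 0.5, 40.0, 50.0, 3.0, 2.0, 2.5, True, True),
    ]
    for h in hits:
        failures = failed_criteria(h)
        print(h.name, "PASS" if not failures else f"FAIL: {failures}")
```

Returning the list of failed criteria, rather than a single pass/fail flag, keeps the reason for each rejection visible when the shortlist is reviewed.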

FAQ 3: How can I improve the translation of in vitro HTS hits to successful in vivo lead candidates?

Issue: Many compounds active in biochemical assays fail in animal models due to poor bioavailability, unexpected toxicity, or off-target effects [20].

Strategies for Success:

- Integrate Meta-Analysis: Combine HTS data with a systematic review of existing literature and databases. This bioinformatic triage uses prior knowledge on parameters like mechanism of action, safety, and PK to de-risk candidates before costly in vivo studies [35].

- Early ADMET Profiling: Incorporate absorption, distribution, metabolism, excretion, and toxicity (ADMET) assays early in the workflow. Balance potency with optimal lipophilicity, solubility, and metabolic stability [20].

- Leverage Patient-Derived Biology: Where possible, use high-content phenotypic screening on patient-derived samples (e.g., tumor samples) to ensure translational relevance beyond simple in vitro models [1].

Detailed Experimental Protocols

Protocol 1: Image-Based Phenotypic HTS for Antimalarial Drug Discovery

This protocol outlines a robust method for identifying active compounds against intracellular pathogens, as used in a 2025 study [35].

1. Compound Library and Plate Preparation:

- Utilize an in-house library (e.g., ~9,500 small molecules, including FDA-approved compounds).

- Prepare stock solutions in 100% DMSO and store at -20°C.

- Using a liquid handler (e.g., Hummingwell, CyBio), transfer 5 µL of compound diluted in PBS into 384-well glass plates for a final screening concentration of 10 µM.

2. Parasite Culture and Synchronization:

- Culture Plasmodium falciparum parasites (include drug-sensitive and resistant strains) in O+ human RBCs in complete RPMI 1640 medium at 37°C under a mixed gas environment (1% O₂, 5% CO₂ in N₂).

- Double-synchronize parasite cultures at the ring stage using 5% (wt/vol) sorbitol treatment.

3. Assay and Incubation:

- Dispense synchronized P. falciparum cultures (1% schizont-stage parasites at 2% haematocrit) into compound-treated 384-well plates.

- Incubate plates for 72 hours at 37°C in a malaria culture chamber.

4. Staining and Image Acquisition:

- After incubation, dilute the assay plate to 0.02% haematocrit and transfer to PhenoPlate 384-well ULA-coated microplates.

- Stain and fix the culture using a solution containing:

- 1 µg/mL wheat germ agglutinin–Alexa Fluor 488 conjugate (stains RBCs).

- 0.625 µg/mL Hoechst 33342 (nucleic acid stain).

- 4% paraformaldehyde.

- Incubate for 20 minutes at room temperature.

- Acquire images using a high-content imaging system (e.g., Operetta CLS) with a 40x water immersion lens. Capture nine image fields per well.

5. Image and Data Analysis:

- Transfer acquired images to analysis software (e.g., Columbus v2.9).

- Quantify parasite viability and load based on fluorescence signals.

- Generate dose-response curves for hit confirmation (typical concentration range: 10 µM down to 20 nM).
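
As a worked example of the dose-response step, the sketch below builds a concentration series spanning roughly 10 µM to 20 nM and estimates an IC₅₀ from inhibition data. Two assumptions are made that the protocol does not state: the series uses two-fold dilution steps (ten points from 10 µM end at about 19.5 nM), and the IC₅₀ is estimated by log-linear interpolation between the two concentrations bracketing 50% inhibition. In practice a four-parameter logistic fit is the more usual choice; interpolation is used here only to keep the example dependency-free.

```python
import math


def serial_dilution(top_um=10.0, factor=2.0, n_points=10):
    """Concentrations (µM) of a serial dilution starting at top_um."""
    return [top_um / factor ** k for k in range(n_points)]


def ic50_by_interpolation(concs_um, inhibition_pct):
    """Estimate IC50 (µM) by interpolating on log10(concentration).

    Returns None if the data never cross 50% inhibition.
    """
    pairs = sorted(zip(concs_um, inhibition_pct))  # ascending concentration
    for (c_lo, y_lo), (c_hi, y_hi) in zip(pairs, pairs[1:]):
        if y_lo < 50.0 <= y_hi:
            frac = (50.0 - y_lo) / (y_hi - y_lo)
            log_ic50 = math.log10(c_lo) + frac * (math.log10(c_hi) - math.log10(c_lo))
            return 10 ** log_ic50
    return None


if __name__ == "__main__":
    concs = serial_dilution()
    print(f"lowest concentration: {concs[-1] * 1000:.1f} nM")
    # Synthetic inhibition values from a simple Hill curve with a true IC50 of
    # 0.5 µM (hypothetical data, not from the study).
    inhibition = [100.0 * c / (c + 0.5) for c in concs]
    print(f"interpolated IC50: {ic50_by_interpolation(concs, inhibition):.3f} µM")
```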

Protocol 2: Systematic Error Detection in HTS Data

This protocol provides a step-by-step method for diagnosing systematic errors, a critical quality control step [37].

1. Data Preparation:

- Compile raw measurement data from the HTS run, including plate identifiers, well locations (row and column), and assay readings.

2. Hit Selection and Surface Creation:

- Apply a preliminary hit selection threshold (e.g., µ - 3σ, where µ and σ are the mean and standard deviation of the measurements for all assay compounds).

- Create a hit distribution surface by counting the number of selected hits for each unique well location (e.g., A01, A02, ... P24) across all screened plates.

3. Statistical Testing:

- Visually inspect the hit distribution surface for non-uniform patterns (e.g., entire rows/columns with high hit counts).

- Apply a Student's t-test to compare the distribution of measurements from different plate regions (e.g., edge wells vs. center wells). A statistically significant difference between regions suggests the presence of systematic error.

- Note: An alternative is to apply the Discrete Fourier Transform (DFT) followed by the Kolmogorov-Smirnov test, but the t-test is recommended for its accuracy [37].

4. Decision Point:

- If systematic error is statistically confirmed, proceed with a correction method like B-score or Well correction.

- If no significant systematic error is detected, avoid applying these corrections to prevent introducing bias into the data.
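
The steps of this protocol can be sketched in a few lines of standard-library Python. This is an illustration under stated assumptions, not the procedure from [37]: plates are represented as nested lists, the function names are hypothetical, "edge" means the outermost rows and columns, and the test statistic is Welch's unequal-variance variant of the t-test. Only the t statistic and degrees of freedom are computed here; a p-value can be obtained from a statistics package (for example, `scipy.stats.ttest_ind` with `equal_var=False`).

```python
import math
import random
from collections import Counter
from statistics import mean, stdev


def hit_surface(plates, n_sd=3.0):
    """Count hits per well location across plates; a hit is a value < mu - n_sd * sigma."""
    values = [x for plate in plates for row in plate for x in row]
    threshold = mean(values) - n_sd * stdev(values)
    counts = Counter()
    for plate in plates:
        for i, row in enumerate(plate):
            for j, x in enumerate(row):
                if x < threshold:
                    counts[(i, j)] += 1
    return counts


def split_edge_center(plates):
    """Pool measurements from edge wells and from interior wells."""
    edge, center = [], []
    for plate in plates:
        n_rows, n_cols = len(plate), len(plate[0])
        for i, row in enumerate(plate):
            for j, x in enumerate(row):
                on_edge = i in (0, n_rows - 1) or j in (0, n_cols - 1)
                (edge if on_edge else center).append(x)
    return edge, center


def welch_t(a, b):
    """Welch's t statistic and approximate degrees of freedom for two samples."""
    va, vb = stdev(a) ** 2 / len(a), stdev(b) ** 2 / len(b)
    t = (mean(a) - mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


if __name__ == "__main__":
    # Twenty synthetic 8 x 12 plates (hypothetical data) with a simulated edge
    # effect: readings in the outermost wells are lowered by 10 units.
    rng = random.Random(0)
    plates = []
    for _ in range(20):
        plate = [[rng.gauss(100, 5) for _ in range(12)] for _ in range(8)]
        for i in range(8):
            for j in range(12):
                if i in (0, 7) or j in (0, 11):
                    plate[i][j] -= 10
        plates.append(plate)
    t, df = welch_t(*split_edge_center(plates))
    print(f"edge vs. center: t = {t:.1f}, df = {df:.0f}")
    print("hits per well location:", dict(hit_surface(plates)))
```

A large absolute t value for edge versus center wells, or hit counts concentrated in particular rows, columns, or wells, corresponds to the "systematic error confirmed" branch of the decision point above.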

Essential Research Reagent Solutions

The following table catalogs key reagents, tools, and technologies essential for implementing and troubleshooting HTS/UHTS workflows.

Table 2: Key Research Reagents and Tools for HTS/UHTS

| Reagent / Tool / Technology | Function and Application in HTS/UHTS |
|---|---|
| FDA-Approved Compound Library [35] | A collection of clinically used molecules; excellent starting point for drug repurposing and identifying scaffolds with known human safety profiles. |
| Operetta CLS High-Content Imaging System [35] | Automated microscope for image-based phenotypic screening; enables multiparameter analysis of cellular phenotypes. |
| Columbus Image Data Analysis Software [35] | Platform for storing and analyzing high-content screening images; critical for extracting quantitative data from complex phenotypes. |
| Hummingwell Liquid Handler [35] | Automated instrument for precise transfer of compound solutions and reagents into microplates; essential for assay reproducibility and throughput. |
| Wheat Germ Agglutinin–Alexa Fluor 488 [35] | Fluorescent lectin that binds to red blood cell membranes; used in phenotypic screens to segment and identify infected vs. uninfected cells. |
| Hoechst 33342 [35] | Cell-permeable nucleic acid stain; used to label parasite DNA and quantify parasite load within host cells. |
| B-score Normalization Algorithm [37] | A statistical method for removing row and column effects from plate-based assay data, improving data quality and hit identification accuracy. |
| AI/ML-Driven Design Platforms [20] [1] | Software (e.g., Exscientia's platform) that uses AI to design novel compounds and prioritize synthesis, dramatically compressing the Design-Make-Test-Analyze (DMTA) cycle. |

Workflow and Pathway Visualizations

[Figure: HTS in Lead Optimization Workflow]

[Figure: Systematic Error Detection & Correction]