Industrial Validation of Machine Learning Models for ADMET Prediction: Strategies, Challenges, and Best Practices

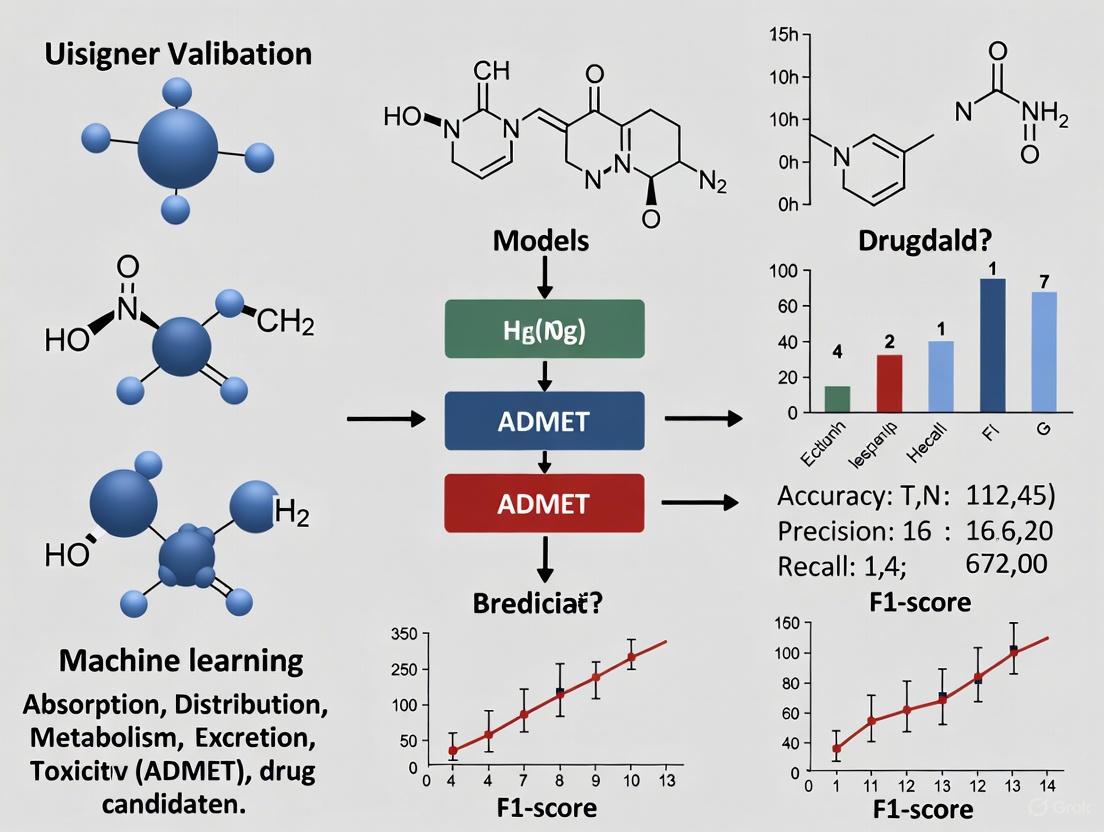

Abstract

This article provides a comprehensive guide for researchers and drug development professionals on validating machine learning (ML) models for industrial ADMET (Absorption, Distribution, Metabolism, Excretion, and Toxicity) prediction. It explores the foundational need for robust ML models in reducing late-stage drug attrition and details state-of-the-art methodologies, from feature representation to advanced algorithms like graph neural networks. The content addresses critical troubleshooting aspects, including data quality and model interpretability, and culminates in rigorous validation and comparative frameworks essential for industrial deployment. By synthesizing recent advances and practical case studies, this resource aims to equip scientists with the knowledge to build trustworthy, translatable ML models that accelerate drug discovery.

Why Machine Learning is Revolutionizing Industrial ADMET Prediction

Drug discovery and development is a long, costly, and high-risk process: bringing a new drug to clinical approval takes 10-15 years or longer and costs upward of $1-2 billion on average [1]. For any pharmaceutical company or academic institution, advancing a drug candidate to a phase I clinical trial represents a significant achievement after rigorous preclinical optimization. However, nine out of ten drug candidates that enter clinical studies fail during phase I, II, or III trials or at the drug approval stage [1]. This 90% failure rate covers only candidates that reach clinical trials; when preclinical candidates are included, the overall failure rate is even higher [1].

Analyses of clinical trial data from 2010-2017 reveal four primary reasons for drug candidate failure [2] [1]:

- Lack of clinical efficacy (40-50%)

- Unmanageable toxicity (30%)

- Poor drug-like properties (10-15%)

- Lack of commercial needs and poor strategic planning (10%)

Notably, poor drug metabolism and pharmacokinetics (DMPK) properties and unmanageable toxicity—collectively termed ADMET (Absorption, Distribution, Metabolism, Excretion, and Toxicity) issues—account for 40-45% of all clinical failures [2]. This review examines the direct link between poor ADMET properties and clinical attrition, with a specific focus on validating machine learning models for industrial ADMET prediction research.

Table 1: Primary Causes of Clinical Attrition in Drug Development

| Failure Cause | Attribution Rate | Key ADMET Components |
|---|---|---|
| Lack of Clinical Efficacy | 40-50% | Inadequate tissue exposure/target engagement |
| Unmanageable Toxicity | 30% | Organ-specific accumulation, metabolic activation, hERG inhibition |
| Poor Drug Properties | 10-15% | Solubility, permeability, metabolic stability, bioavailability |
| Commercial/Strategic Issues | ~10% | Not ADMET-related |

The Direct Link Between ADMET Properties and Clinical Failure

Historical Progress and Persistent Challenges

Fifty years ago, poor drug properties accounted for nearly 40% of candidate attrition, but rigorous selection criteria during drug optimization have since reduced this to 10-15% [2] [1]. This improvement stems from early screening for fundamental properties, including solubility, permeability, protein binding, metabolic stability, and in vivo pharmacokinetics [1]. Established criteria such as the "Rule of Five" (molecular weight ≤500 Da, cLogP ≤5, H-bond donors ≤5, H-bond acceptors ≤10) have provided valuable guidelines for chemical structure design [1].
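As a minimal sketch of how such a filter can be applied, the function below counts Rule of Five violations from precomputed properties. The `MolProps` container, the function names, and the tolerance of one violation are illustrative assumptions rather than anything prescribed by the cited sources; in practice the property values would come from a cheminformatics toolkit such as RDKit.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MolProps:
    """Precomputed properties for one compound (hypothetical container)."""
    mol_weight: float       # molecular weight in Da
    clogp: float            # calculated logP
    h_bond_donors: int
    h_bond_acceptors: int


def rule_of_five_violations(p: MolProps) -> list[str]:
    """Return the names of the Rule of Five criteria the compound violates."""
    checks = {
        "mol_weight": p.mol_weight > 500,
        "clogp": p.clogp > 5,
        "h_bond_donors": p.h_bond_donors > 5,
        "h_bond_acceptors": p.h_bond_acceptors > 10,
    }
    return [name for name, violated in checks.items() if violated]


def passes_rule_of_five(p: MolProps, max_violations: int = 1) -> bool:
    """Assumption: tolerate at most `max_violations` violated criteria."""
    return len(rule_of_five_violations(p)) <= max_violations


# Illustrative values only, not measurements of real compounds.
small_polar = MolProps(mol_weight=350.0, clogp=2.1, h_bond_donors=2, h_bond_acceptors=5)
large_lipophilic = MolProps(mol_weight=720.0, clogp=6.3, h_bond_donors=6, h_bond_acceptors=12)
print(passes_rule_of_five(small_polar), passes_rule_of_five(large_lipophilic))
```

Returning the list of violated criteria, rather than a bare pass/fail flag, keeps the filter useful as a design guideline: a chemist can see which property to adjust.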

Despite these advances, unmanageable toxicity remains a persistent challenge, causing 30% of clinical failures [2]. Toxicity can result from both off-target and on-target effects. For off-target toxicity, comprehensive screening against known toxicity targets (e.g., hERG for cardiotoxicity) is routinely performed [1]. However, addressing on-target toxicity—caused by inhibition of the disease-related target itself—often has limited solutions beyond dose titration [1]. A critical factor in both toxicity types is drug accumulation in vital organs, yet no well-developed strategy exists to optimize drug candidates to reduce tissue accumulation in major vital organs [1].

The STAR Framework: Integrating Tissue Exposure

A proposed framework called Structure–Tissue Exposure/Selectivity–Activity Relationship (STAR) offers a comprehensive approach to improve drug optimization by classifying drug candidates based on both potency/selectivity and tissue exposure/selectivity [1]:

- Class I: High specificity/potency and high tissue exposure/selectivity (low dose, superior efficacy/safety)

- Class II: High specificity/potency but low tissue exposure/selectivity (high dose, high toxicity)

- Class III: Adequate specificity/potency with high tissue exposure/selectivity (low dose, manageable toxicity)

- Class IV: Low specificity/potency and low tissue exposure/selectivity (inadequate efficacy/safety)

This framework highlights a risk in current practice: optimization tends to overemphasize potency/specificity through the structure-activity relationship (SAR) while overlooking tissue exposure/selectivity, described by the structure-tissue exposure/selectivity relationship (STR). This imbalance may mislead drug candidate selection and distort the balance of clinical dose, efficacy, and toxicity [1].
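The four STAR classes amount to a two-by-two decision table, which the following sketch makes explicit. It assumes that potency and tissue exposure have already been reduced to yes/no judgments; the names `StarClass` and `star_class` are hypothetical, and treating any "not high" potency with high exposure as Class III is a simplification of the "adequate" potency wording above.

```python
from enum import Enum


class StarClass(Enum):
    I = "high potency/specificity, high tissue exposure/selectivity"
    II = "high potency/specificity, low tissue exposure/selectivity"
    III = "adequate potency/specificity, high tissue exposure/selectivity"
    IV = "low potency/specificity, low tissue exposure/selectivity"


def star_class(high_potency: bool, high_tissue_exposure: bool) -> StarClass:
    """Map two binary judgments onto the four STAR classes.

    Assumption: how "high" is decided for either axis is left to the
    project team; this function only encodes the classification table.
    """
    if high_potency:
        return StarClass.I if high_tissue_exposure else StarClass.II
    return StarClass.III if high_tissue_exposure else StarClass.IV


print(star_class(high_potency=True, high_tissue_exposure=False).name)  # II
```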

Computational ADMET Prediction: Tools and Platforms

The critical role of ADMET properties in clinical success has driven the development of computational prediction tools. These platforms leverage machine learning and quantitative structure-activity relationship (QSAR) models to enable early assessment of ADMET properties before costly experimental work begins.

Table 2: Comprehensive Comparison of ADMET Prediction Platforms

| Platform | Endpoints Covered | Data Source | Core Methodology | Key Features |
|---|---|---|---|---|
| ADMETlab 3.0 [3] | 119 features | 400,000+ entries from ChEMBL, PubChem, OCHEM | Multi-task DMPNN with molecular descriptors | API functionality, uncertainty estimation, no login required |
| admetSAR 2.0 [4] | 18 key ADMET properties | FDA-approved drugs, ChEMBL, withdrawn drugs | SVM, RF, kNN with molecular fingerprints | ADMET-score for comprehensive drug-likeness evaluation |
| PharmaBench [5] | 11 ADMET datasets | 52,482 entries from curated public sources | Multi-agent LLM system for data extraction | Specifically designed for AI model development |
| SwissADME [3] | Physicochemical and ADME properties | Not specified in sources | Not specified in sources | Free web tool |
| ProTox-II [3] | Toxicity endpoints | Not specified in sources | Not specified in sources | Free web tool |

Benchmarking Studies and Performance Validation

Comprehensive benchmarking of computational ADMET tools reveals valuable insights into their predictive performance. A 2024 evaluation of twelve software tools implementing QSAR models for 17 physicochemical and toxicokinetic properties found that models for physicochemical properties (R² average = 0.717) generally outperformed those for toxicokinetic properties (R² average = 0.639 for regression, average balanced accuracy = 0.780 for classification) [6].
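For readers less familiar with the two metrics quoted here, the sketch below shows one common way to compute them. It assumes R² means the coefficient of determination and that classification is binary with labels 0 and 1; the benchmark's exact definitions are not given in this text, so both are assumptions.

```python
def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


def balanced_accuracy(y_true, y_pred):
    """Mean of sensitivity and specificity for binary labels (0 or 1)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    n_pos = sum(1 for t in y_true if t == 1)
    n_neg = len(y_true) - n_pos
    return 0.5 * (tp / n_pos + tn / n_neg)


# Predicting the mean for every compound gives R² = 0.
print(r_squared([1.0, 2.0, 3.0], [2.0, 2.0, 2.0]))    # 0.0
# One of two positives missed, both negatives correct.
print(balanced_accuracy([1, 1, 0, 0], [1, 0, 0, 0]))  # 0.75
```

Balanced accuracy is the natural choice for many ADMET classification endpoints because the classes are often imbalanced, and plain accuracy would reward a model that always predicts the majority class.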

This study employed rigorous data curation procedures, including:

- Standardization of chemical structures using RDKit functions

- Removal of inorganic and organometallic compounds

- Neutralization of salts

- Elimination of duplicates at SMILES level

- Outlier detection using Z-score analysis (removing data points with Z-score >3)
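The Z-score step in the list above can be sketched in a few lines. This version assumes the threshold applies to the absolute Z-score and uses the population standard deviation; the study's exact choices are not stated here, and the function name is hypothetical.

```python
from statistics import mean, pstdev


def remove_zscore_outliers(values, threshold=3.0):
    """Keep only values whose absolute Z-score is at most `threshold`."""
    mu = mean(values)
    sigma = pstdev(values)  # assumption: population standard deviation
    if sigma == 0:
        return list(values)  # all values identical, nothing to remove
    return [v for v in values if abs(v - mu) / sigma <= threshold]


measurements = [1.0] * 20 + [100.0]
cleaned = remove_zscore_outliers(measurements)
print(len(measurements), len(cleaned))  # 21 20
```

One caveat worth knowing: with the population standard deviation, the largest possible absolute Z-score among n values is sqrt(n - 1), so this filter can never flag anything in datasets of ten or fewer points.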

The research emphasized evaluating model performance within the applicability domain and identified several tools with good predictivity across different properties [6].
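One simple way to operationalize an applicability domain is a nearest-neighbor similarity check against the training set. The sketch below is an assumption for illustration, not the method used in the cited benchmark: fingerprints are represented as sets of on-bit indices, and the 0.4 similarity cutoff is an arbitrary example value.

```python
def tanimoto(a, b):
    """Tanimoto similarity between two fingerprints given as sets of on-bits."""
    a, b = set(a), set(b)
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def in_applicability_domain(query_fp, training_fps, min_similarity=0.4):
    """True if the query is similar enough to at least one training compound.

    Assumption: `min_similarity` is a hypothetical cutoff that would need
    to be tuned per endpoint and fingerprint type.
    """
    best = max((tanimoto(query_fp, fp) for fp in training_fps), default=0.0)
    return best >= min_similarity


training = [{2, 3, 4}, {9}]
print(tanimoto({1, 2, 3}, {2, 3, 4}))                # 0.5
print(in_applicability_domain({1, 2, 3}, training))  # True
print(in_applicability_domain({7, 8}, training))     # False
```

Reporting model performance separately for in-domain and out-of-domain compounds then shows how much of a tool's accuracy depends on staying close to its training chemistry.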

Experimental Protocols for ADMET Model Validation

Data Preprocessing and Cleaning Protocols

Robust machine learning models for ADMET prediction require meticulous data preprocessing. The following protocol has been validated across multiple studies [7] [5] [6]:

Structure Standardization

- Remove inorganic salts and organometallic compounds

- Extract organic parent compounds from salt forms

- Adjust tautomers for consistent functional group representation

- Canonicalize SMILES strings using tools like RDKit

Data Deduplication and Consistency Checking

- For continuous data: discard duplicate measurements whose standardized standard deviation exceeds 0.2; otherwise, average the replicate values

- For binary classification: keep only compounds with consistent labels

- Remove compounds with ambiguous values across different datasets
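The deduplication rules above can be sketched as two small functions keyed on canonical SMILES. This is a minimal illustration under stated assumptions: values are taken to be already on a standardized scale, so the standard deviation is compared with 0.2 directly, and the function names and record layout are hypothetical.

```python
from collections import defaultdict
from statistics import mean, pstdev


def merge_continuous(records, max_sd=0.2):
    """records: iterable of (canonical_smiles, value) pairs.

    Replicates that agree (standard deviation <= max_sd) are averaged;
    groups that disagree are dropped entirely.
    """
    groups = defaultdict(list)
    for smiles, value in records:
        groups[smiles].append(value)
    return {
        smiles: mean(values)
        for smiles, values in groups.items()
        if len(values) == 1 or pstdev(values) <= max_sd
    }


def merge_binary(records):
    """Keep only compounds whose replicate labels all agree."""
    groups = defaultdict(set)
    for smiles, label in records:
        groups[smiles].add(label)
    return {s: next(iter(labels)) for s, labels in groups.items() if len(labels) == 1}


continuous = [("CCO", 1.0), ("CCO", 1.1), ("CCN", 0.0), ("CCN", 2.0), ("C", 5.0)]
print(sorted(merge_continuous(continuous)))  # ['C', 'CCO']
binary = [("CCO", 1), ("CCO", 1), ("CCN", 0), ("CCN", 1)]
print(merge_binary(binary))                  # {'CCO': 1}
```

Dropping a conflicting group entirely, rather than picking one replicate, avoids silently training on a value that another source contradicts.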

Experimental Condition Normalization (for multi-source data integration)

- Extract experimental conditions (buffer type, pH, procedure) using LLM-based systems [5]

- Filter data based on standardized experimental conditions

- Convert results to consistent units

The impact of proper data cleaning is significant. In one study, data cleaning resulted in the removal of various problematic compounds, including salt complexes with differing properties and compounds with inconsistent measurements [7].

Model Training and Evaluation Framework

Recent studies have established sophisticated workflows for developing and validating ADMET prediction models [7] [8]:

Feature Representation Selection

- Evaluate classical descriptors (RDKit descriptors), fingerprints (Morgan fingerprints), and deep neural network representations

- Implement structured approach to feature selection beyond conventional concatenation

- Assess combination of representations through iterative testing

Model Architecture Comparison

- Compare classical algorithms (Random Forests, SVM) with deep learning architectures (DMPNN, MPNN)

- Apply hyperparameter optimization using Bayesian methods

- Implement multi-task learning frameworks when appropriate

Validation Strategies

- Employ cross-validation with statistical hypothesis testing

- Utilize both random and scaffold splits to assess generalization

- Test model performance on external datasets from different sources

- Incorporate uncertainty estimation using evidential deep learning techniques

Figure: ADMET Model Validation Workflow

Machine Learning Advancements in ADMET Prediction

Representation Learning and Feature Engineering

The choice of molecular representation significantly impacts model performance in ADMET prediction. Recent benchmarking studies address the conventional practice of combining different representations without systematic reasoning [7]. Key representation types include:

- Classical Descriptors and Fingerprints: RDKit descriptors, Morgan fingerprints

- Deep Neural Network Representations: Learned features from graph neural networks

- Hybrid Approaches: Combining multiple representation types

A structured approach to feature selection that moves beyond simple concatenation has demonstrated improved model reliability [7]. The integration of cross-validation with statistical hypothesis testing adds a crucial layer of reliability to model assessments, particularly important in the noisy domain of ADMET prediction [7].
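To illustrate what pairing cross-validation with a hypothesis test can look like, the sketch below compares two models on per-fold scores using an exact paired sign-flip permutation test. The choice of test is an assumption made for illustration; the cited work does not specify one in this text, and the fold scores are invented example numbers.

```python
from itertools import product


def paired_sign_flip_pvalue(scores_a, scores_b):
    """Two-sided exact sign-flip permutation test on per-fold differences.

    Null hypothesis: the two models perform equally, so each fold's
    difference is equally likely to be positive or negative.
    """
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    observed = abs(sum(diffs))
    n_extreme = 0
    n_total = 0
    # Enumerate every sign assignment (2**k for k folds, fine for small k).
    for signs in product((1, -1), repeat=len(diffs)):
        n_total += 1
        stat = abs(sum(s * d for s, d in zip(signs, diffs)))
        if stat >= observed - 1e-12:
            n_extreme += 1
    return n_extreme / n_total


# Hypothetical 5-fold scores for two feature representations.
model_a = [0.80, 0.82, 0.79, 0.83, 0.81]
model_b = [0.75, 0.78, 0.77, 0.80, 0.76]
print(paired_sign_flip_pvalue(model_a, model_b))  # 0.0625
```

The example shows why a test matters: model A wins on every fold, yet with only five folds the smallest achievable two-sided p-value is 2/32 = 0.0625, so the result is suggestive rather than conclusive. Fold scores from the same dataset are also not fully independent, so any such p-value should be read as a rough guide.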

Critical Evaluation of Model Generalization

A fundamental challenge in ADMET prediction is assessing how well models trained on one dataset perform on data from different sources. Practical evaluation scenarios must include:

- Performance on External Datasets: Testing models trained on one source against data from different sources [7]

- Scaffold Split Validation: Assessing performance on novel molecular scaffolds not seen during training

- Multi-Source Data Integration: Combining data from different sources to mimic real-world scenarios where external data supplements internal data

These evaluations reveal that the optimal model and feature choices are highly dataset-dependent, with no single approach universally outperforming others across all ADMET endpoints [7].
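A scaffold split can be sketched as a grouping problem: every compound sharing a scaffold must land on the same side of the split. The version below assumes scaffold identifiers have already been computed (for example, Bemis-Murcko scaffold SMILES from a toolkit such as RDKit) and follows the common heuristic of assigning the largest scaffold groups to training first; the function name and the 20% test fraction are illustrative choices.

```python
from collections import defaultdict


def scaffold_split(scaffolds, test_fraction=0.2):
    """Split compound indices so that no scaffold appears in both sets.

    scaffolds: one scaffold key per compound (assumed precomputed).
    Returns (train_indices, test_indices).
    """
    groups = defaultdict(list)
    for idx, scaffold in enumerate(scaffolds):
        groups[scaffold].append(idx)

    # Largest groups first; ties broken by first index for determinism.
    ordered = sorted(groups.values(), key=lambda g: (-len(g), g[0]))
    max_train = len(scaffolds) - round(len(scaffolds) * test_fraction)

    train, test = [], []
    for group in ordered:
        if len(train) + len(group) <= max_train:
            train.extend(group)
        else:
            test.extend(group)  # rarer scaffolds end up in the test set
    return sorted(train), sorted(test)


keys = ["A", "A", "A", "A", "B", "B", "B", "C", "D", "E"]
train_idx, test_idx = scaffold_split(keys)
print(train_idx, test_idx)  # [0, 1, 2, 3, 4, 5, 6, 7] [8, 9]
```

Because the test set is built from the rarest scaffolds, scores from this split are usually lower than random-split scores and give a more conservative picture of how a model handles new chemical series.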

Table 3: Research Reagent Solutions for Computational ADMET Prediction

| Resource Category | Specific Tools | Function | Access |
|---|---|---|---|
| ADMET Prediction Platforms | ADMETlab 3.0, admetSAR 2.0, SwissADME, ProTox-II | Comprehensive ADMET endpoint prediction | Web-based, some with API access |
| Cheminformatics Toolkits | RDKit, OpenBabel | Molecular descriptor calculation, fingerprint generation, structure manipulation | Open-source |
| Machine Learning Frameworks | Scikit-learn, Chemprop, DeepChem | Model building, hyperparameter optimization, validation | Open-source |
| Public Data Repositories | ChEMBL, PubChem, BindingDB, TDC | Source of experimental ADMET data for model training | Public access |
| Curated Benchmark Datasets | PharmaBench, MoleculeNet, B3DB | Pre-curated datasets for model evaluation | Public access |
| Validation and Benchmarking Tools | Custom scripts for applicability domain assessment, uncertainty quantification | Model performance evaluation, reliability estimation | Research implementations |

The high cost of ADMET failure in clinical development, which accounts for 40-45% of attrition, demands robust computational approaches for early risk assessment. Machine learning models for ADMET prediction have demonstrated significant promise, with modern platforms covering more than a hundred endpoints and utilizing sophisticated deep learning architectures. However, reliable implementation requires:

- Rigorous Data Curation: Addressing data quality issues through standardized cleaning protocols

- Comprehensive Validation: Employing cross-validation with statistical testing and external dataset evaluation

- Uncertainty Quantification: Implementing evidential deep learning to assess prediction reliability

- Applicability Domain Assessment: Recognizing model limitations for novel chemical scaffolds

The ongoing development of curated benchmark datasets like PharmaBench, coupled with structured approaches to feature selection and model validation, provides the foundation for more reliable ADMET predictions in industrial drug discovery. As these computational tools become increasingly integrated into early-stage screening, they offer the potential to significantly reduce clinical attrition rates by identifying ADMET liabilities before candidates enter the costly clinical development phase.

The future of ADMET prediction lies not in seeking universal models, but in developing context-aware approaches that acknowledge dataset dependencies and provide reliable uncertainty estimates—ultimately enabling drug discovery teams to make more informed decisions about which compounds to advance in the development pipeline.

The journey from traditional Quantitative Structure-Activity Relationship (QSAR) modeling to modern machine learning (ML) represents a fundamental transformation in how researchers predict the biological behavior of chemical compounds. This evolution is particularly crucial in the assessment of absorption, distribution, metabolism, excretion, and toxicity (ADMET) properties, which remain a critical bottleneck in drug discovery and development [8]. The typical drug discovery process spans 10-15 years of rigorous research and testing, with unfavorable ADMET properties representing a major cause of candidate failure, contributing to significant consumption of time, capital, and human resources [8]. This review systematically examines the technological evolution from classical QSAR to contemporary ML approaches, providing performance comparisons, methodological frameworks, and practical guidance for researchers navigating this rapidly advancing field.

Traditional QSAR approaches, formally established in the early 1960s with the works of Hansch and Fujita and Free and Wilson, have long served as cornerstone methodologies in ligand-based drug design [9]. These methods operate on the fundamental principle that biological activity can be correlated with quantitative molecular descriptors through mathematical relationships, typically employing regression or classification models [10]. For decades, QSAR methodologies provided the primary computational tools for predicting compound properties before synthesis and testing. However, the emergence of machine learning—defined as a "field of study in artificial intelligence concerned with the development and study of statistical algorithms that can learn from data and generalise to unseen data"—has catalyzed a paradigm shift in predictive capabilities [11].

Modern machine learning approaches have demonstrated remarkable potential in deciphering complex structure-property relationships that challenge traditional QSAR methods [12]. The application of ML in drug discovery is experiencing significant market growth, particularly in lead optimization segments, driven by the ability of ML algorithms to analyze massive datasets and identify patterns that escape conventional approaches [13]. This comprehensive review examines the comparative performance, methodological evolution, and practical implementation of these approaches within industrial ADMET prediction research, providing researchers with the framework needed to navigate this rapidly evolving landscape.

Historical Context and Methodological Evolution

The Foundations of Traditional QSAR

The conceptual roots of QSAR extend back approximately a century to observations by Meyer and Overton that the narcotic properties of anesthetizing gases and organic solvents correlated with their solubility in olive oil [9]. A significant advancement came with the introduction of Hammett constants in the 1930s, which quantified the effects of substituents on reaction rates in organic molecules [9]. The formal establishment of QSAR methodology in the early 1960s with the contributions of Hansch and Fujita, who extended Hammett's equation by incorporating electronic properties and hydrophobicity parameters, marked the beginning of quantitative modeling in medicinal chemistry [9]. The Free-Wilson approach concurrently developed the concept of additive substituent contributions to biological activity.

Traditional QSAR modeling follows a well-defined workflow beginning with a library of chemically related compounds with experimentally determined biological activities. Molecular descriptors—numerical representations of structural and physicochemical properties—are calculated for these compounds [8] [10]. These descriptors encompass a wide range of molecular features, from simple physicochemical properties (e.g., logP, molecular weight) to more complex topological and electronic parameters [8]. The resulting numerical data is then correlated with biological activities using statistical methods such as multiple linear regression (MLR) or partial least squares (PLS) to generate predictive models [10] [14]. The core assumption underpinning these approaches is that similar molecules exhibit similar activities, though this principle encounters limitations captured in the "SAR paradox," which acknowledges that not all similar molecules have similar activities [10].

The Machine Learning Revolution

Machine learning emerged as a distinct field from the broader pursuit of artificial intelligence, with foundational work beginning in the 1940s with the first mathematical modeling of neural networks by Walter Pitts and Warren McCulloch [15] [16]. The term "machine learning" was formally coined by Arthur Samuel in 1959, who defined it as a computer's ability to learn without being explicitly programmed [11] [15]. The field experienced several waves of innovation and periods of reduced interest (known as "AI winters"), including after the critical Lighthill Report in 1973, which led to significant reductions in research funding [15] [16].

The resurgence of neural networks in the 1990s, powered by increasing digital data availability and improved computational resources, laid the groundwork for modern deep learning [16]. The 2010s witnessed breakthroughs in deep learning architectures, reinforcement learning, and natural language processing, culminating in the sophisticated ML applications transforming drug discovery today [15] [16]. Machine learning approaches differ fundamentally from traditional QSAR in their ability to automatically learn complex patterns and representations from raw data without heavy reliance on manually engineered features or pre-defined molecular descriptors [12].

Comparative Methodological Frameworks

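The fundamental differences between traditional QSAR and modern ML approaches lie in their respective workflows: traditional QSAR correlates manually calculated descriptors with activity using statistical methods such as MLR or PLS, whereas modern ML learns patterns and representations directly from the data.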

Performance Comparison: Quantitative Experimental Evidence

Direct Performance Benchmarking

Rigorous comparative studies provide compelling evidence of the performance advantages offered by machine learning approaches. A landmark study directly comparing deep neural networks (DNN) with traditional QSAR methods across different training set sizes demonstrated superior predictive accuracy for ML approaches, particularly with limited data [14].

Table 1: Predictive Performance (R²) Comparison Between Modeling Approaches

| Training Set Size | Deep Neural Networks | Random Forest | Partial Least Squares | Multiple Linear Regression |
|---|---|---|---|---|
| 6069 compounds | 0.90 | 0.89 | 0.65 | 0.68 |
| 3035 compounds | 0.89 | 0.87 | 0.45 | 0.47 |
| 303 compounds | 0.84 | 0.82 | 0.24 | 0.25 |

This comprehensive comparison utilized a database of 7,130 molecules with reported inhibitory activities against MDA-MB-231 breast cancer cells, employing extended connectivity fingerprints (ECFPs) and functional-class fingerprints (FCFPs) as molecular descriptors [14]. The results demonstrate that machine learning methods (DNN and Random Forest) maintain significantly higher predictive accuracy (R² > 0.80) even with substantially reduced training set sizes, while traditional QSAR methods (PLS and MLR) experience dramatic performance degradation with smaller datasets [14]. This advantage is particularly valuable in early-stage drug discovery programs where experimental data is often limited.

ADMET Prediction Performance

In industrial ADMET prediction, ML approaches have demonstrated transformative potential. Recent benchmarking initiatives such as the Polaris ADMET Challenge have revealed that multi-task architectures trained on diverse datasets achieve 40-60% reductions in prediction error across critical endpoints including human and mouse liver microsomal clearance, solubility (KSOL), and permeability (MDR1-MDCKII) [17]. These improvements highlight that data diversity and representativeness, combined with advanced algorithms, are the dominant factors driving predictive accuracy and generalization in ADMET prediction [17].

ML-based ADMET models provide rapid, cost-effective, and reproducible alternatives that integrate seamlessly with existing drug discovery pipelines [8]. Specific case studies illustrate the successful deployment of ML models for predicting solubility, permeability, metabolism, and toxicity endpoints, outperforming traditional QSAR approaches [8] [12]. Graph neural networks, ensemble methods, and multitask learning frameworks have demonstrated particular effectiveness in capturing the complex, non-linear relationships between chemical structures and ADMET properties [12].

Table 2: ADMET Endpoint Prediction Performance Comparison

| ADMET Endpoint | Traditional QSAR Performance | Modern ML Performance | Key Advancing Technologies |
|---|---|---|---|
| Solubility | Moderate (R² ~0.6-0.7) | High (R² ~0.8-0.9) | Graph Neural Networks, Ensemble Methods |
| Permeability | Variable (Accuracy ~70-80%) | Improved (Accuracy ~85-90%) | Deep Learning, Multitask Learning |
| Metabolism | Limited by congeneric series | Expanded scaffold coverage | Federated Learning, Representation Learning |
| Toxicity | Structural alert dependence | Pattern recognition across scaffolds | Deep Featurization, Explainable AI |

Experimental Protocols and Methodological Details

Traditional QSAR Modeling Protocol

Data Curation and Chemical Space Definition: Traditional QSAR requires a congeneric series of compounds with measured biological activities. The chemical space should be carefully defined through principal component analysis (PCA) or similar techniques to ensure model applicability domains are properly characterized [9]. Typically, 20-50 compounds with moderate structural diversity but shared core scaffolds are utilized.

Descriptor Calculation and Selection: Molecular descriptors are calculated using software such as Dragon, MOE, or RDKit, generating hundreds to thousands of numerical descriptors representing topological, electronic, and physicochemical properties [8] [10]. Feature selection employs filter methods (correlation analysis), wrapper methods (genetic algorithms), or embedded methods (LASSO) to reduce dimensionality and avoid overfitting [8].

Model Development and Validation: Multiple Linear Regression (MLR) or Partial Least Squares (PLS) are used to establish quantitative relationships between descriptors and biological activity [10] [14]. Validation follows OECD guidelines including internal validation (leave-one-out cross-validation), external validation (training/test set splits), and Y-scrambling to ensure robustness [10]. The applicability domain must be explicitly defined to identify compounds for which predictions are reliable.
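The internal validation steps named above can be illustrated with a deliberately small example: a single-descriptor linear model, leave-one-out cross-validation summarized as Q², and Y-scrambling as a chance-correlation check. Everything here is a sketch under assumptions; Q² is taken as 1 - PRESS/SS_tot, a real QSAR model would use several descriptors, and the function names are hypothetical.

```python
import random


def fit_line(x, y):
    """Ordinary least squares for one descriptor: returns (slope, intercept)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def loo_q2(x, y):
    """Leave-one-out Q²: refit without each compound, then predict it."""
    press = 0.0
    for i in range(len(x)):
        slope, intercept = fit_line(x[:i] + x[i + 1:], y[:i] + y[i + 1:])
        press += (y[i] - (slope * x[i] + intercept)) ** 2
    mean_y = sum(y) / len(y)
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return 1.0 - press / ss_tot


def y_scrambled_q2(x, y, n_rounds=20, seed=0):
    """Mean Q² after shuffling activities; should be far below the real Q²."""
    rng = random.Random(seed)
    scores = []
    for _ in range(n_rounds):
        shuffled = list(y)
        rng.shuffle(shuffled)
        scores.append(loo_q2(x, shuffled))
    return sum(scores) / n_rounds


descriptor = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
activity = [2.0 * d + 1.0 for d in descriptor]  # synthetic, perfectly linear
print(loo_q2(descriptor, activity))
print(y_scrambled_q2(descriptor, activity))
```

If the scrambled Q² were close to the real one, the model would be fitting noise rather than a structure-activity relationship, which is exactly what Y-scrambling is meant to expose.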

Modern Machine Learning Protocol

Data Preparation and Augmentation: ML approaches thrive on larger, more diverse datasets (hundreds to thousands of compounds) [14]. Data augmentation techniques, including synthetic minority oversampling, are employed to address class imbalance. Representation learning approaches automatically generate features from molecular structures, eliminating manual descriptor calculation [12].

Algorithm Selection and Training: For structured data, Random Forest and Gradient Boosting methods often provide strong baseline performance [14]. For raw molecular structures, Graph Neural Networks (GNNs) directly operate on molecular graphs, while Transformers process SMILES representations [12]. Multitask learning jointly trains related endpoints (e.g., multiple ADMET properties) to improve generalization through shared representations [12].

Advanced Validation and Deployment: Scaffold-based splitting, rather than random splitting, evaluates models on structurally novel compounds [17]. Federated learning approaches enable training across distributed datasets without centralizing sensitive data, addressing data privacy concerns while expanding chemical coverage [17]. Model interpretability techniques, including SHAP analysis and attention mechanisms, provide mechanistic insights into predictions [12].

Table 3: Essential Research Tools for Predictive Modeling

| Tool/Resource | Category | Function | Representative Examples |
|---|---|---|---|
| Molecular Descriptor Software | Traditional QSAR | Calculates quantitative descriptors for QSAR modeling | Dragon, MOE, RDKit [8] |
| Fingerprinting Algorithms | Ligand-Based Methods | Generates molecular representations for similarity assessment | ECFP, FCFP, Atom-Pair Fingerprints [14] |
| Deep Learning Frameworks | Modern ML | Provides infrastructure for neural network model development | PyTorch, TensorFlow, DeepChem [12] |
| Graph Neural Network Libraries | Modern ML | Implements graph-based learning for molecular structures | DGL-LifeSci, PyTorch Geometric [12] |
| Federated Learning Platforms | Collaborative ML | Enables multi-institutional model training without data sharing | Apheris, MELLODDY [17] |
| Benchmark Datasets | Model Evaluation | Provides standardized data for performance comparison | Polaris ADMET Challenge, MoleculeNet [17] |

Implementation Pathways and Industrial Applications

Integration Strategies for Research Organizations

The transition from traditional QSAR to modern ML requires strategic implementation planning. For organizations with extensive historical QSAR expertise and well-established congeneric series, a hybrid approach that gradually incorporates ML elements offers a practical pathway. Initial implementation might involve using Random Forest or Gradient Boosting methods on existing descriptor sets to capture non-linear relationships while maintaining interpretability [14]. This provides immediate performance benefits while building institutional familiarity with ML concepts.

For new research programs without historical modeling baggage, direct adoption of modern deep learning approaches leveraging graph neural networks or transformer architectures is recommended [12]. These approaches minimize manual feature engineering and demonstrate superior performance on diverse chemical series, particularly for complex ADMET endpoints with multifactorial determinants [12].

Addressing Implementation Challenges

The implementation of ML approaches presents distinct challenges including data requirements, computational resources, and specialized expertise [13]. Successful organizations address these constraints through cloud-based infrastructure, strategic hiring, and targeted training programs for existing computational chemists [13]. The computational demands of training complex ML models represent a significant barrier, particularly for smaller organizations [13].

Federated learning approaches are emerging as a powerful strategy to overcome data limitations while preserving intellectual property [17]. By enabling model training across distributed datasets without centralizing sensitive data, federated learning systematically expands the effective domain of ADMET models, addressing the fundamental limitation of isolated modeling efforts [17]. Industry consortia such as the MELLODDY project have demonstrated that federated learning across multiple pharmaceutical companies consistently improves model performance compared to single-organization training [17].
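The core idea, sharing model parameters instead of data, can be illustrated with federated averaging, a generic aggregation rule in which each participant's locally trained parameters are weighted by its dataset size. This is a sketch of the general technique only; the text does not state which aggregation scheme MELLODDY or any specific platform uses, and all names and numbers below are hypothetical.

```python
def federated_average(client_params, client_sizes):
    """Size-weighted average of per-client parameter vectors.

    client_params: list of equal-length lists of floats, one per client.
    client_sizes:  number of training compounds held by each client.
    Only parameters and sizes are exchanged; raw data never leaves a client.
    """
    total = sum(client_sizes)
    n_params = len(client_params[0])
    return [
        sum(params[j] * size for params, size in zip(client_params, client_sizes)) / total
        for j in range(n_params)
    ]


# Two hypothetical partners: the second holds three times as much data.
partner_params = [[1.0, 2.0], [3.0, 4.0]]
partner_sizes = [1, 3]
print(federated_average(partner_params, partner_sizes))  # [2.5, 3.5]
```

In a real deployment this averaging step would be repeated over many rounds, with each partner resuming local training from the averaged parameters, and would typically be combined with secure aggregation so that individual updates are not visible to other participants.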

Industrial Applications and Impact

In industrial drug discovery, ML-driven ADMET prediction has evolved from a secondary screening tool to a cornerstone in clinical precision medicine applications [12]. Specific implementations include personalized dosing recommendations based on predicted metabolic profiles, therapeutic optimization for special patient populations, and safety prediction for novel chemical modalities [12]. Lead optimization represents the most dominant application segment for ML in drug discovery, capturing approximately 30% of market share due to its critical impact on compound attrition [13].

The therapeutic area of oncology has been particularly transformed by ML approaches, representing 45% of the machine learning in drug discovery market [13]. The complexity of cancer targets and the need for personalized therapeutic approaches have driven adoption of ML for target identification, compound optimization, and ADMET prediction in oncology pipelines [13]. The continued expansion into neurological disorders represents the fastest-growing therapeutic application as researchers address the unique challenges of blood-brain barrier penetration and CNS safety profiles [13].

The evolution from traditional QSAR to modern machine learning represents a fundamental shift in predictive modeling capabilities for drug discovery. While traditional QSAR methods remain valuable for congeneric series with limited data, machine learning approaches demonstrate superior predictive accuracy, especially for complex ADMET endpoints and structurally diverse compound collections. The performance advantages of ML methods become particularly pronounced with larger, more diverse datasets and when predicting properties for novel chemical scaffolds outside traditional applicability domains.

For research organizations navigating this transition, a phased implementation strategy based on existing infrastructure and data assets is recommended. Initial focus should be on augmenting traditional QSAR workflows with tree-based methods, progressively advancing to deep learning approaches as data assets and computational capabilities mature. Participation in federated learning initiatives provides access to expanded chemical space coverage without compromising intellectual property, addressing the fundamental data limitations that constrain isolated modeling efforts.

As machine learning continues to transform ADMET prediction, the integration of multimodal data sources, advances in model interpretability, and the development of regulatory frameworks for computational predictions will shape the next chapter in this evolving field. Organizations that strategically balance methodological rigor with practical implementation considerations will be best positioned to leverage these advancements in reducing late-stage attrition and accelerating the development of safer, more effective therapeutics.

In modern drug discovery, the evaluation of Absorption, Distribution, Metabolism, Excretion, and Toxicity (ADMET) properties represents a critical bottleneck that significantly contributes to the high attrition rate of drug candidates [8]. The pharmaceutical industry faces substantial challenges as unfavorable ADMET properties have been recognized as a major cause of failure for potential molecules, contributing to enormous consumption of time, capital, and human resources [8]. Traditional experimental approaches for ADMET assessment, while valuable, are often time-consuming, cost-intensive, and limited in scalability, rendering them impractical for screening the vast libraries of potential drug candidates available today [8] [18].

The evolution of machine learning (ML) and artificial intelligence (AI) has revolutionized this landscape, offering computational approaches that provide rapid, cost-effective, and reproducible alternatives that integrate seamlessly with existing drug discovery pipelines [8] [19]. These in silico methodologies enable preliminary screening of extensive drug libraries preceding preclinical studies, significantly reducing costs and expanding the scope of drug discovery efforts [8]. The advancement has been particularly transformative for early-stage risk assessment and compound prioritization, allowing researchers to identify potential ADMET issues before committing to expensive synthetic and experimental workflows [18] [20].

This guide examines the core ADMET properties essential for drug development, objectively compares the performance of various machine learning approaches in predicting these properties, and provides detailed methodologies for model validation suited for industrial research settings. By framing this discussion within the broader context of ML model validation, we aim to provide drug development professionals with a comprehensive resource for implementing robust ADMET prediction strategies in their workflows.

Core ADMET Properties: Key Prediction Targets and Their Impact

ADMET properties encompass a complex set of pharmacokinetic and toxicological parameters that collectively determine the viability of a drug candidate. Understanding and accurately predicting these properties is essential for developing safe and effective therapeutics.

Absorption Properties

Absorption refers to how a drug enters the bloodstream from its administration site. For orally administered drugs, this primarily occurs through the gastrointestinal tract [8] [20]. Key properties influencing absorption include:

- Solubility: A drug must demonstrate adequate aqueous solubility to be absorbed and reach therapeutic concentrations [20]. Poor solubility remains a common challenge in early drug development.

- Lipophilicity (LogP/LogD): This critical balance determines membrane permeability. If a drug is too hydrophilic, it cannot cross cell membranes; if too lipophilic, it may become trapped in fatty tissues or membranes [20].

- Intestinal Permeability: The ability to cross the intestinal epithelium, frequently assessed using Caco-2 cell models that mimic human intestinal epithelium [21] [20].

- Human Intestinal Absorption (HIA): The extent of absorption through the human gastrointestinal tract, a key parameter for oral drugs [20].

- Transporter-Mediated Absorption: Involvement of protein transporters such as P-glycoprotein (P-gp) that can actively efflux drugs back into the intestinal lumen, reducing overall absorption [20].

Distribution Properties

Distribution encompasses how a drug travels throughout the body and reaches its target site of action. Key distribution parameters include:

- Plasma Protein Binding (PPB): The reversible binding of drugs to plasma proteins (primarily albumin and globulin) affects both pharmacokinetic and pharmacodynamic properties, as only the unbound fraction can exhibit pharmacological effects and be excreted [20].

- Blood-Brain Barrier (BBB) Penetration: The BBB is a semipermeable barrier that protects the brain from harmful substances. The ability to cross it is crucial for central nervous system (CNS)-targeted drugs but undesirable for non-CNS therapeutics, where it can cause side effects [20].

- Volume of Distribution (Vd): A theoretical volume that quantifies the distribution of a drug throughout the body relative to its concentration in blood plasma [7].

Metabolism Properties

Metabolism involves the biochemical modification of drugs, primarily by liver enzymes, which typically converts lipophilic compounds into more hydrophilic metabolites for excretion [20]. Key metabolic considerations include:

- Cytochrome P450 (CYP) Enzymes: This superfamily of enzymes metabolizes 75-90% of hepatically cleared drugs, making CYP inhibition and induction studies essential for assessing potential metabolic interactions [18] [20].

- Phase I Metabolism: Includes oxidation, reduction, and hydrolysis reactions that introduce or expose polar functional groups [20].

- Phase II Metabolism: Conjugation reactions that add charged groups (e.g., glucuronic acid, sulfate) to increase water solubility and molecular weight for excretion [20].

- Metabolic Stability: Reflects how rapidly a drug is metabolized, directly impacting its half-life and dosing frequency [20].

Excretion Properties

Excretion refers to how the body eliminates drugs and their metabolites. Key factors include:

- Molecular Weight: Small molecules are primarily removed through renal excretion, while larger compounds may undergo biliary excretion [20].

- Passive Excretion: Influenced by flow rate, lipophilicity (LogP), protein binding, and pKa, all affecting how drugs are reabsorbed and excreted [20].

- Active Transport: Hepatic metabolism and active drug transport by biliary transporters represent important excretion pathways [20].

- Clearance: The volume of plasma cleared of drug per unit time, a critical parameter for determining dosing regimens [7].
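
The volume of distribution, clearance, and half-life mentioned in the sections above are linked by standard pharmacokinetic relations. As a brief illustration, assuming a one-compartment model with an intravenous bolus dose and first-order elimination (simplifying assumptions that the source does not state):

$$
V_d = \frac{\text{Dose}}{C_0}, \qquad CL = \frac{\text{Dose}}{AUC_{0-\infty}}, \qquad t_{1/2} = \frac{\ln 2 \cdot V_d}{CL}
$$

Here $C_0$ is the extrapolated plasma concentration at time zero and $AUC_{0-\infty}$ is the area under the plasma concentration-time curve. For a hypothetical compound with $V_d = 50$ L and $CL = 5$ L/h, this gives $t_{1/2} \approx 0.693 \times 50 / 5 \approx 6.9$ h, which shows why errors in predicted clearance or distribution volume carry straight through to dosing-frequency estimates.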

Toxicity Properties

Toxicity encompasses potential harmful effects of drugs or their metabolites. Critical toxicity endpoints include:

- hERG Inhibition: Blockade of the potassium channel encoded by the human Ether-à-go-go-Related Gene can cause QT interval prolongation and life-threatening cardiac arrhythmias [18] [20].

- Hepatotoxicity: Liver injury represents a common factor in post-approval drug withdrawals, making early assessment crucial [18].

- Mutagenicity: The ability to cause DNA mutations, typically assessed through in silico models that identify structural alerts associated with genetic damage [20].

- Skin Sensitization: The potential to cause allergic skin reactions [20].

- Carcinogenicity: The potential to cause cancer, often requiring long-term studies [22].

Table 1: Core ADMET Properties and Their Impact on Drug Development

| ADMET Category | Specific Property | Measurement/Units | Impact on Drug Development |
|---|---|---|---|
| Absorption | Aqueous Solubility | LogS or μg/mL | Determines bioavailability and formulation strategy |
| Absorption | Caco-2 Permeability | Papp (10⁻⁶ cm/s) | Predicts intestinal absorption for oral drugs |
| Absorption | Human Intestinal Absorption (HIA) | % Absorbed | Estimates fraction absorbed in humans |
| Absorption | P-glycoprotein Inhibition | IC₅₀ (μM) | Identifies drug-transporter interactions |
| Distribution | Plasma Protein Binding (PPB) | % Bound | Affects free drug concentration and efficacy |
| Distribution | Blood-Brain Barrier Penetration | LogBB or LogPS | Critical for CNS-targeted and non-CNS drugs |
| Distribution | Volume of Distribution | L/kg | Indicates extent of tissue distribution |
| Metabolism | CYP450 Inhibition | IC₅₀ (μM) | Predicts drug-drug interaction potential |
| Metabolism | Metabolic Stability | Half-life or Clearance | Affects dosing frequency and exposure |
| Metabolism | Metabolite Identification | Structural identification | Identifies active/toxic metabolites |
| Excretion | Renal Clearance | mL/min/kg | Determines renal elimination pathway |
| Excretion | Biliary Excretion | % of dose | Important for drugs cleared hepatically |
| Toxicity | hERG Inhibition | IC₅₀ (μM) | Assesses cardiotoxicity risk |
| Toxicity | Hepatotoxicity | Binary or severity score | Predicts potential liver injury |
| Toxicity | Mutagenicity (Ames Test) | Binary (Yes/No) | Identifies genotoxic compounds |
| Toxicity | Skin Sensitization | Binary or potency class | Predicts allergic contact dermatitis |

Machine Learning Approaches for ADMET Prediction

The application of machine learning in ADMET prediction has evolved significantly, with various algorithms demonstrating different strengths depending on the specific property being predicted and the available data.

Algorithm Selection and Performance Comparison

Multiple studies have systematically evaluated ML algorithms for ADMET endpoints. In predicting Caco-2 permeability, XGBoost generally provided better predictions than comparable models for test sets, demonstrating the effectiveness of boosting algorithms for this endpoint [21]. Similarly, tree-based methods including Random Forests have shown strong performance across multiple ADMET prediction tasks [23].

The comparison between traditional quantitative structure-activity relationship (QSAR) models and more recent deep learning approaches reveals that while deep neural networks can capture complex molecular patterns, their advantages over simpler methods are sometimes limited given typical dataset sizes and quality in the ADMET domain [7]. Ensemble methods that combine multiple individual models have proven particularly effective for handling high-dimensionality issues and unbalanced datasets commonly encountered in ADMET data [23].

Table 2: Machine Learning Algorithm Performance for ADMET Prediction

| Algorithm Category | Specific Algorithms | Best Use Cases | Performance Notes |
|---|---|---|---|
| Tree-Based Methods | Random Forest, XGBoost, LightGBM, CatBoost | Caco-2 permeability, metabolic stability, toxicity classification | Generally strong performance; XGBoost superior for permeability prediction [21] |
| Deep Learning Methods | Message Passing Neural Networks (MPNN), DMPNN, CombinedNet | Complex molecular patterns, multi-task learning | Can capture intricate structure-activity relationships; performance gains variable [21] [7] |
| Support Vector Machines | SVM with linear and RBF kernels | Classification tasks with clear margins | Effective for binary classification of toxicity endpoints [23] |
| Ensemble Methods | Multiple classifier systems, stacked models | Handling unbalanced datasets, improving prediction robustness | Addresses high-dimensionality issues common in ADMET data [23] |
| Gaussian Processes | GP models with various kernels | Uncertainty quantification, well-calibrated predictions | Superior performance in bioactivity assays; mixed results for ADMET [7] |

Molecular Representations and Feature Engineering

The representation of molecular structures significantly impacts model performance. Common approaches include:

- Molecular Descriptors: Numerical representations conveying structural and physicochemical attributes based on 1D, 2D, or 3D structures, with software tools available to calculate over 5000 different descriptors [8].

- Fingerprints: Fixed-length representations such as Morgan fingerprints (also known as circular fingerprints) that capture molecular substructures [21].

- Graph-Based Representations: Molecular graphs where atoms represent nodes and bonds represent edges, particularly suited for graph neural networks [21].

- Learned Representations: Embeddings such as Mol2Vec that use neural networks to generate task-specific molecular representations [18].

Recent advances involve learning task-specific features by representing molecules as graphs and applying graph convolutions to these explicit molecular representations, which has achieved unprecedented accuracy in ADMET property prediction [8]. Hybrid approaches that combine multiple representation types, such as Mol2Vec embeddings with curated molecular descriptors, have demonstrated enhanced predictive accuracy [18].

Feature Selection Strategies

Effective feature selection is crucial for building robust ADMET prediction models. Three primary approaches dominate:

- Filter Methods: Applied during pre-processing to select features without relying on specific ML algorithms, efficiently eliminating duplicated, correlated, and redundant features [8].

- Wrapper Methods: Iteratively train algorithms using feature subsets, dynamically adding and removing features based on previous training iterations, typically yielding superior accuracy at higher computational cost [8].

- Embedded Methods: Integrate feature selection directly into the learning algorithm, combining the speed of filter methods with the accuracy of wrapper approaches [8].

Studies have demonstrated that feature quality matters more than feature quantity: models trained on non-redundant features reached accuracies above 80%, outperforming models trained on all available features [8].
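
As a minimal sketch of a filter method, the snippet below drops constant columns and any column that is almost perfectly correlated with one already kept. The function names, the 0.95 cutoff, and the toy descriptor values are illustrative assumptions rather than settings taken from the cited studies.

```python
import math

def pearson(x, y):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return sxy / math.sqrt(sxx * syy)

def filter_features(columns, max_abs_corr=0.95):
    """Return the names of features kept by a simple filter.

    columns: dict mapping feature name -> list of values (one per compound).
    max_abs_corr: hypothetical redundancy cutoff; tune per dataset.
    """
    kept = []
    for name, values in columns.items():
        if len(set(values)) <= 1:
            continue  # constant column carries no information
        if any(abs(pearson(values, columns[k])) > max_abs_corr for k in kept):
            continue  # nearly duplicates a feature that was already kept
        kept.append(name)
    return kept

# Toy descriptor table (made-up values for four compounds).
descriptors = {
    "mol_wt": [180.2, 250.3, 310.4, 420.5],
    "heavy_atoms": [13, 18, 22, 30],   # tracks mol_wt almost linearly
    "logp": [1.2, 3.5, 0.8, 2.9],
    "flag": [1, 1, 1, 1],              # constant
}
selected = filter_features(descriptors)
print(selected)
```

Because the filter runs before any model is trained, it is cheap, but it cannot tell which of two correlated features is more useful for the endpoint. Wrapper and embedded methods address that question.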

Validation Frameworks for Industrial ADMET Prediction

Robust validation of ADMET prediction models is essential for their successful implementation in industrial drug discovery settings. This requires rigorous assessment of predictive performance, generalizability, and applicability to novel chemical space.

Benchmark Datasets and Performance Metrics

The development of comprehensive benchmark datasets has significantly advanced ADMET model validation. PharmaBench represents one such effort, comprising eleven ADMET datasets with 52,482 entries designed to serve as an open-source resource for AI model development [5]. This addresses limitations of earlier benchmarks that often included only a small fraction of publicly available data or compounds that differed substantially from those used in industrial drug discovery pipelines [5].

Standard performance metrics for ADMET prediction models include:

- Regression Tasks: R² (coefficient of determination), RMSE (root mean square error), and MAE (mean absolute error) [21].

- Classification Tasks: Accuracy, precision, recall, F1-score, and AUC-ROC (area under the receiver operating characteristic curve) [20].

- Model Robustness: Y-randomization tests to verify models learn true structure-property relationships rather than dataset artifacts [21].

- Applicability Domain Analysis: Assesses the chemical space where models can provide reliable predictions [21].
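
The regression and classification metrics listed above can be computed directly from paired observed and predicted values. The following sketch uses only the Python standard library; the helper names and toy values are hypothetical. AUC-ROC is left out because it needs predicted scores rather than hard labels.

```python
import math

def regression_metrics(y_true, y_pred):
    """Return R^2, RMSE, and MAE for paired observed/predicted values."""
    n = len(y_true)
    errors = [p - t for t, p in zip(y_true, y_pred)]
    ss_res = sum(e * e for e in errors)
    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return {
        "r2": 1.0 - ss_res / ss_tot,  # undefined when all observed values are equal
        "rmse": math.sqrt(ss_res / n),
        "mae": sum(abs(e) for e in errors) / n,
    }

def classification_metrics(y_true, y_pred):
    """Return accuracy, precision, recall, and F1 for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }

reg = regression_metrics([1.0, 2.0, 3.0, 4.0], [1.5, 2.0, 2.5, 4.0])
clf = classification_metrics([1, 1, 0, 0, 1], [1, 0, 0, 1, 1])
print(reg)
print(clf)
```

For imbalanced toxicity endpoints, accuracy alone can look strong while recall on the minority class is poor, which is one reason to report several of these metrics together.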

Cross-Validation and Statistical Testing

Beyond simple train-test splits, robust validation requires cross-validation combined with statistical hypothesis testing to provide more reliable model comparisons [7]. This approach is particularly important in the ADMET domain where datasets may be noisy or limited in size. The use of scaffold splits that separate structurally distinct molecules provides a more challenging and realistic assessment of model generalizability compared to random splits [7].
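
To illustrate how a scaffold split differs from a random split, the sketch below assigns whole scaffold groups to either the training or the test set. It assumes scaffold identifiers have already been computed with a cheminformatics toolkit; the function name and the rule of placing the largest groups in training are illustrative choices, not the procedure of the cited study.

```python
from collections import defaultdict

def scaffold_split(scaffolds, test_fraction=0.2):
    """Split compound indices so that no scaffold appears in both sets.

    scaffolds: list of scaffold identifiers (e.g. scaffold SMILES), one per compound.
    The largest scaffold groups fill the training set; rarer groups go to the test set.
    """
    groups = defaultdict(list)
    for idx, scaffold in enumerate(scaffolds):
        groups[scaffold].append(idx)

    # Largest groups first; ties broken by scaffold id so the split is deterministic.
    ordered = sorted(groups.items(), key=lambda kv: (-len(kv[1]), kv[0]))
    n_train_target = len(scaffolds) - int(round(test_fraction * len(scaffolds)))

    train, test = [], []
    for _, members in ordered:
        if len(train) + len(members) <= n_train_target:
            train.extend(members)
        else:
            test.extend(members)
    return sorted(train), sorted(test)

# Ten toy compounds labelled by a placeholder scaffold name.
scaffolds = ["benzene"] * 4 + ["pyridine"] * 3 + ["indole"] * 2 + ["furan"]
train_idx, test_idx = scaffold_split(scaffolds, test_fraction=0.2)
print(train_idx, test_idx)
```

Because entire groups are moved at once, the realized test fraction only approximates the requested one, and the test set is made up of chemotypes the model never saw during training.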

Transferability to Industrial Settings

A critical question for ADMET models is their performance when applied to proprietary pharmaceutical company datasets. Studies evaluating the transferability of models trained on public data to internal industry datasets have found that boosting models retain a degree of predictive efficacy when applied to industry data, though performance typically decreases compared to internal models [21]. This highlights the importance of fine-tuning public models on proprietary data when possible.

Prospective Validation and Blind Challenges

Perhaps the most rigorous validation comes from prospective testing on compounds not previously seen by the model, often implemented through blind challenges [24]. Initiatives like OpenADMET are organizing regular blind challenges focused on ADMET endpoints to provide realistic assessment of model performance and drive methodological advances [24].

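A comprehensive validation framework for industrial ADMET prediction models combines these elements: benchmark metrics, cross-validation with statistical testing, transferability assessment, and prospective blind challenges.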

Diagram 1: ADMET Model Validation Workflow

Experimental Protocols and Methodologies

Data Curation and Preprocessing

High-quality data curation is fundamental to building reliable ADMET prediction models. Standardized protocols include:

- Molecular Standardization: Using tools like RDKit MolStandardize to achieve consistent tautomer canonical states and final neutral forms while preserving stereochemistry [21].

- Duplicate Handling: Calculating mean values and standard deviations for duplicate entries, retaining only entries with standard deviation ≤ 0.3 to minimize uncertainty [21].

- Salt Stripping: Removing salt components to isolate the parent organic compound for consistent property prediction [7].

- Data Cleaning: Removing inorganic salts, organometallic compounds, and addressing inconsistent SMILES representations and measurement ambiguities [7].
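
The duplicate-handling rule above can be sketched as follows. The snippet assumes that structures have already been standardized to a shared key (for example a canonical SMILES), that values are on a log scale, and that the 0.3 cutoff applies to the sample standard deviation. The source does not say whether sample or population standard deviation is meant, so that choice is an assumption.

```python
from collections import defaultdict
from statistics import mean, stdev

def aggregate_duplicates(records, max_sd=0.3):
    """Collapse replicate measurements per structure.

    records: iterable of (structure_key, measured_value) pairs.
    Returns {structure_key: mean value}; structures whose replicates
    disagree by more than max_sd are dropped.
    """
    grouped = defaultdict(list)
    for key, value in records:
        grouped[key].append(value)

    curated = {}
    for key, values in grouped.items():
        if len(values) == 1:
            curated[key] = values[0]        # single measurement, nothing to compare
        elif stdev(values) <= max_sd:
            curated[key] = mean(values)     # consistent replicates: keep their mean
        # otherwise the replicates are too inconsistent and the entry is discarded
    return curated

# Toy records with made-up values.
records = [
    ("CCO", -5.1), ("CCO", -5.3),            # consistent replicates
    ("c1ccccc1", -4.0), ("c1ccccc1", -5.2),  # inconsistent replicates
    ("CC(=O)O", -6.0),                       # single measurement
]
curated = aggregate_duplicates(records)
print(curated)
```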

Large Language Models (LLMs) have recently been applied to automate the extraction of experimental conditions from assay descriptions in biomedical databases, facilitating the creation of more consistent benchmarks like PharmaBench [5].

Model Training and Optimization Protocols

Comprehensive model evaluation involves comparing multiple algorithms with different molecular representations. A typical protocol includes:

- Data Splitting: Dividing datasets into training, validation, and test sets in ratios such as 8:1:1, ensuring a consistent distribution across the subsets [21]. Scaffold splits that separate structurally distinct molecules provide a more challenging evaluation.

- Algorithm Comparison: Evaluating diverse methods including XGBoost, Random Forests, Support Vector Machines, and deep learning models like Message Passing Neural Networks [21] [7].

- Hyperparameter Optimization: Systematically tuning model parameters using validation sets to identify optimal configurations for each algorithm type [7].

- Feature Selection: Iteratively combining different molecular representations (descriptors, fingerprints, embeddings) to identify optimal feature sets [7].

Uncertainty Quantification and Applicability Domain

Reliable ADMET prediction requires assessing model confidence and defining applicability domains. Approaches include:

- Applicability Domain Analysis: Determining the chemical space where models can provide reliable predictions based on training data similarity [21].

- Uncertainty Estimation: Implementing methods to quantify both aleatoric (data inherent) and epistemic (model) uncertainty, with Gaussian Process models showing particular promise for well-calibrated uncertainty estimates [7].

- Consensus Modeling: Combining predictions from multiple models or endpoints to generate more reliable consensus scores [18] [20].
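
One simple way to express a similarity-based applicability domain is a nearest-neighbor check: a query compound counts as in-domain when its highest Tanimoto similarity to any training compound reaches a threshold. The sketch below represents fingerprints as sets of "on" bit indices. The function names, the 0.4 threshold, and the toy bit sets are assumptions for illustration and are not taken from the cited work.

```python
def tanimoto(a, b):
    """Tanimoto similarity between two sets of 'on' fingerprint bits."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)

def in_domain(query_bits, training_bits, threshold=0.4):
    """Return (is_in_domain, nearest_similarity) for one query compound.

    training_bits: list of bit sets, one per training compound.
    threshold: hypothetical cutoff; in practice it would be calibrated against
    how prediction error grows as similarity to the training set falls.
    """
    nearest = max((tanimoto(query_bits, t) for t in training_bits), default=0.0)
    return nearest >= threshold, nearest

# Toy fingerprints (made-up bit indices).
training = [{1, 2, 3, 4}, {2, 3, 5, 8}]
flag_a, sim_a = in_domain({1, 2, 3, 9}, training)   # shares 3 of 5 bits with the first
flag_b, sim_b = in_domain({10, 11, 12}, training)   # shares no bits with either
print(flag_a, round(sim_a, 2), flag_b, sim_b)
```

Predictions for out-of-domain compounds are not necessarily wrong, but they merit lower confidence or experimental follow-up before being used to rank compounds.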

Table 3: Research Reagent Solutions for ADMET Prediction

| Resource Category | Specific Tools/Resources | Primary Function | Key Features |
|---|---|---|---|
| Comprehensive Platforms | StarDrop, ADMETlab 3.0, Receptor.AI | Multi-endpoint ADMET prediction | Integrated workflows, uncertainty estimation, consensus scoring [18] [20] |
| Specialized Prediction Tools | pkCSM, ADMET Predictor, Derek Nexus | Specific ADMET endpoint prediction | Targeted models for properties like toxicity (Derek Nexus) or pharmacokinetics (pkCSM) [18] [20] [22] |
| Cheminformatics Libraries | RDKit, DeepChem, Mordred | Molecular descriptor calculation and model building | Open-source, customizable pipelines for descriptor calculation and ML [21] [18] |
| Benchmark Datasets | PharmaBench, TDC, MoleculeNet | Model training and benchmarking | Curated datasets for standardized comparison of ADMET models [5] [7] |
| Validation Frameworks | OpenADMET, Polaris, ASAP Initiatives | Prospective model validation | Blind challenges and community benchmarking for realistic assessment [24] |

The landscape of ADMET prediction has been transformed by machine learning approaches that now provide reliable tools for early assessment of critical pharmacokinetic and toxicological properties. Tree-based methods like XGBoost and Random Forests consistently demonstrate strong performance across multiple ADMET endpoints, while deep learning approaches offer promise for capturing complex structure-activity relationships, particularly as dataset quality and size improve.

Robust validation remains paramount for successful industrial implementation, requiring comprehensive approaches that extend beyond simple train-test splits to include cross-validation with statistical testing, applicability domain analysis, transferability assessment, and prospective blind challenges. Initiatives like PharmaBench and OpenADMET are addressing critical needs for standardized benchmarks and realistic validation frameworks.

As the field advances, key areas for continued development include improved uncertainty quantification, better integration of multi-task learning, enhanced molecular representations, and more effective strategies for combining public and proprietary data. By adopting systematic approaches to model building and validation, drug development professionals can leverage ADMET prediction to significantly reduce late-stage failures and accelerate the development of safer, more effective therapeutics.

In modern drug discovery, the attrition of candidate compounds due to unfavorable Absorption, Distribution, Metabolism, Excretion, and Toxicity (ADMET) properties remains a primary cause of failure in later development stages, consuming significant time and capital [8]. The industrial imperative is clear: integrate more predictive and robust computational tools to front-load risk assessment. Machine learning (ML) models for ADMET prediction have emerged as transformative tools for this purpose, offering the potential to prioritize compounds with optimal pharmacokinetic and safety profiles early in the pipeline [17] [8]. However, not all models are created equal. Their utility in an industrial context is dictated by rigorous validation, demonstrable performance on chemically relevant space, and the ability to generalize to proprietary compound libraries. This guide provides an objective comparison of current ML methodologies, focusing on their validation and practical application in de-risking drug development.

Benchmarking ML Models for ADMET Prediction

The performance of an ADMET model is not absolute but is contingent upon the data and molecular representations used. A systematic approach to benchmarking reveals that model architecture, feature selection, and data diversity are critical drivers of predictive accuracy.

Comparative Performance of Algorithms and Representations

A 2025 benchmarking study addressing the practical impact of feature representations provides key quantitative insights. The study evaluated a range of algorithms and molecular representations across multiple ADMET datasets, using statistical hypothesis testing to ensure robust comparisons [7].

Table 1: Performance Comparison of ML Models and Feature Representations on ADMET Tasks

| Model Architecture | Feature Representation | Key Findings / Performance Note |
|---|---|---|
| Random Forest (RF) | RDKit Descriptors, Morgan Fingerprints | Found to be a generally well-performing and robust architecture in comparative studies [7]. |
| LightGBM / CatBoost | RDKit Descriptors, Morgan Fingerprints, Combinations | Gradient boosting frameworks often yielded strong results, sometimes outperforming other models [7]. |
| Support Vector Machine (SVM) | RDKit Descriptors, Morgan Fingerprints | Performance varied significantly and was often outperformed by tree-based methods [7]. |
| Message Passing Neural Network (MPNN) | Molecular Graph (Intrinsic) | Shows promise but may be outperformed by fixed representations and classical models like Random Forest on some tasks [7]. |
| XGBoost | Morgan Fingerprints + RDKit 2D Descriptors | Provided generally better predictions for Caco-2 permeability compared to RF, SVM, and deep learning models [25]. |

The Critical Role of Data Quality and Curation

The foundation of any reliable model is high-quality, curated data. Public ADMET datasets are often plagued by inconsistencies, including duplicate measurements with varying values, inconsistent binary labels for the same structure, and fragmented SMILES strings [7]. A robust data cleaning pipeline is, therefore, an essential first step. This includes:

- Standardizing SMILES Representations: Using tools to generate consistent canonical representations and adjust tautomers [7].

- Handling Salts and Inorganics: Removing inorganic salts and extracting the organic parent compound from salt forms [7].

- Deduplication: Removing duplicate entries, especially those with inconsistent target values, is critical for preventing model overfitting [7].

The emergence of larger, more pharmaceutically relevant benchmarks like PharmaBench—which uses a multi-agent LLM system to extract and standardize experimental conditions from over 14,000 bioassays—is addressing previous limitations in dataset size and chemical diversity [5].

Experimental Protocols for Model Validation

For a model to be trusted in an industrial setting, it must be validated using protocols that mimic real-world challenges. The following methodologies represent current best practices.

Structured Workflow for Model Development and Evaluation

A robust ML workflow extends from raw data to a statistically validated model ready for deployment [7] [8].

Diagram 1: Robust model development workflow.

1. Data Cleaning and Standardization: As previously described, this step ensures molecular consistency and removes noise [7].

2. Data Splitting: Using scaffold splitting (grouping compounds by their core Bemis-Murcko scaffold) is crucial for a realistic assessment of a model's ability to generalize to novel chemotypes, which is a common requirement in drug discovery projects [7] [5].

3. Feature Engineering and Selection: Instead of arbitrarily concatenating all available feature representations (e.g., descriptors, fingerprints), a structured, iterative approach to identify the best-performing combination for a specific dataset leads to more reliable models [7].

4. Model Training with Hyperparameter Tuning: Model hyperparameters are optimized in a dataset-specific manner to ensure peak performance [7].

5. Model Evaluation with Statistical Hypothesis Testing: Beyond simple cross-validation, comparing models using statistical hypothesis tests (e.g., t-tests on cross-validation folds) adds a layer of reliability, helping to ensure that performance improvements are statistically significant and not due to random chance [7].
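
The statistical comparison in the final step can be sketched as a paired t-test on per-fold scores. The example below computes the t statistic by hand and compares it with the two-sided 5% critical value for four degrees of freedom (2.776). The fold scores are made up and the function name is hypothetical. Because cross-validation folds share training data, the fold scores are not fully independent, so the result is best read as an approximate check.

```python
import math
from statistics import mean, stdev

def paired_t_statistic(scores_a, scores_b):
    """Paired t statistic and degrees of freedom for per-fold scores of two models."""
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    n = len(diffs)
    sd = stdev(diffs)
    if sd == 0:
        raise ValueError("all fold differences are identical; t statistic is undefined")
    return mean(diffs) / (sd / math.sqrt(n)), n - 1

# Made-up RMSE values from the same five cross-validation folds (lower is better).
rmse_model_a = [0.52, 0.48, 0.55, 0.50, 0.51]
rmse_model_b = [0.58, 0.53, 0.57, 0.56, 0.57]

t_stat, dof = paired_t_statistic(rmse_model_a, rmse_model_b)
critical_value = 2.776  # two-sided, alpha = 0.05, 4 degrees of freedom
significant = abs(t_stat) > critical_value
print(round(t_stat, 3), dof, significant)
```

Pairing by fold matters here: both models are scored on identical held-out compounds, so fold-to-fold difficulty cancels out of the differences.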

Protocol for Assessing Practical Utility and Transferability

A model's performance on a held-out test set from the same data source is often an optimistic estimate of its real-world performance. A more industrially relevant protocol involves:

- External Validation on Different Data Sources: Training a model on one public dataset and evaluating it on a different one, or on an internal pharmaceutical company dataset, for the same property [7] [25]. This tests the model's transferability and highlights the impact of inter-laboratory assay variability.

- Combining Data Sources: Evaluating the performance boost achieved by supplementing internal data with external public data, mimicking a common industrial scenario for expanding chemical space coverage [7].

A study on Caco-2 permeability demonstrated this by training models on public data and then validating them on an internal dataset from Shanghai Qilu, showing that boosting models like XGBoost retained a degree of predictive efficacy in this industrial transfer [25].

The Scientist's Toolkit: Essential Research Reagents & Solutions

Building and validating industrial-strength ADMET models requires a suite of software tools and data resources.

Table 2: Key Research Reagents for ADMET ML Modeling

| Tool / Resource | Type | Primary Function |
|---|---|---|
| RDKit | Cheminformatics Software | An open-source toolkit for calculating molecular descriptors (rdkit_desc), generating fingerprints (e.g., Morgan), and standardizing chemical structures [7] [25]. |
| Therapeutics Data Commons (TDC) | Data Repository | Provides curated benchmarks and leaderboards for ADMET properties, facilitating model comparison and access to public datasets [7]. |
| PharmaBench | Benchmark Dataset | A comprehensive, LLM-curated benchmark of 11 ADMET properties designed to be more representative of drug discovery compounds [5]. |
| Chemprop | Deep Learning Library | A specialized software package for training Message Passing Neural Networks (MPNNs) on molecular graphs [7] [25]. |
| Scikit-learn | ML Library | A widely used Python library for implementing classical ML models (RF, SVM) and evaluation metrics [5]. |


Advancing Predictions: Federated Learning and Future Pathways

To overcome the limitations of isolated datasets, federated learning (FL) has emerged as a powerful paradigm for enhancing model applicability without sharing proprietary data.

Diagram 2: Federated learning cycle for cross-pharma collaboration.

In an FL framework, a global model is trained collaboratively across multiple pharmaceutical organizations. Each participant trains the model on its private data locally and shares only model parameter updates (not the data itself) with a central server for aggregation [17]. This process:

- Systematically Expands the Model's Applicability Domain: By learning from a much broader and more diverse chemical space, federated models demonstrate increased robustness when predicting compounds with novel scaffolds [17].

- Delivers Tangible Performance Gains: The MELLODDY project, a large-scale cross-pharma FL initiative, demonstrated that federation consistently unlocks performance benefits in QSAR models without compromising the confidentiality of proprietary information [17]. These benefits are most pronounced in multi-task learning settings for pharmacokinetic and safety endpoints [17].
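
The aggregation step can be illustrated with federated averaging, in which the server combines each participant's parameters weighted by local dataset size. The source does not specify which aggregation rule was used, so the choice of federated averaging is an assumption, as are the function name and the toy numbers. Production systems also add secure aggregation and other privacy safeguards that are omitted here.

```python
def federated_average(client_params, client_sizes):
    """Weighted average of parameter vectors, one vector per participant.

    client_params: list of equal-length lists of floats (locally trained parameters).
    client_sizes: number of local training examples behind each vector.
    Only these vectors and counts reach the server; raw compound data never does.
    """
    total = sum(client_sizes)
    n_params = len(client_params[0])
    return [
        sum(params[i] * size for params, size in zip(client_params, client_sizes)) / total
        for i in range(n_params)
    ]

# Three hypothetical participants with two model parameters each.
local_params = [[0.2, 1.0], [0.4, 0.0], [0.6, 2.0]]
local_sizes = [100, 300, 600]

global_params = federated_average(local_params, local_sizes)
print(global_params)
```

In a full training loop the averaged parameters are sent back to every participant, which trains locally again, and the cycle repeats for many rounds.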

Performance Data and Industrial Validation

The ultimate test for any model is its performance in industrial practice, measured through relevant metrics and successful transferability studies.

Table 3: Industrial Validation and Cross-Pharma Performance

| Validation Context | Model / Approach | Reported Outcome / Metric |
|---|---|---|
| Caco-2 Permeability Transfer | XGBoost (on public data) | Retained predictive efficacy when validated on Shanghai Qilu's in-house dataset, demonstrating industrial transferability [25]. |
| Cross-Pharma Federation | Federated Learning (MELLODDY) | Consistently outperformed local baselines; performance improvements scaled with the number and diversity of participating organizations [17]. |
| Polaris ADMET Challenge | Multi-task Models on Broad Data | Achieved 40–60% reductions in prediction error for endpoints like clearance and solubility compared to single-task models [17]. |

The industrial imperative for efficient and de-risked drug development is being answered by a new generation of rigorously validated and collaborative machine learning models. The evidence shows that no single algorithm dominates all tasks; rather, a disciplined approach combining robust data curation, structured feature selection, and rigorous statistical evaluation is paramount. The future of predictive ADMET science lies in embracing collaborative frameworks like federated learning, which break down data silos to create models with truly generalizable power. By adopting these advanced tools and validation standards, researchers and drug developers can significantly enhance the precision of early-stage candidate selection, thereby accelerating the journey of effective and safe therapeutics to patients.

Building Robust ML Models for ADMET: Algorithms, Data, and Feature Engineering

In contemporary drug discovery, the evaluation of Absorption, Distribution, Metabolism, Excretion, and Toxicity (ADMET) properties represents a critical determinant of clinical success, with poor pharmacokinetic profiles and unforeseen toxicity accounting for a substantial proportion of late-stage drug attrition [12]. Traditional experimental methods for ADMET assessment, while reliable, are notoriously resource-intensive, time-consuming, and limited in scalability, creating a significant bottleneck in pharmaceutical development [8]. The integration of machine learning (ML) models into this domain has ushered in a transformative paradigm, offering scalable, efficient computational alternatives that can decipher complex structure-property relationships and enable high-throughput predictions during early-stage compound screening [12]. Among the plethora of available algorithms, XGBoost, Random Forests, and various Deep Learning architectures have emerged as particularly prominent tools, each bringing distinct strengths and limitations to the challenging task of ADMET prediction.

This guide provides a comprehensive, objective comparison of these three algorithmic approaches, focusing specifically on their performance, implementation requirements, and practical applicability within industrial ADMET prediction research. By synthesizing recent benchmark studies and industrial validation cases, we aim to equip researchers, scientists, and drug development professionals with the empirical insights necessary to select appropriate algorithms for their specific ADMET prediction tasks, ultimately supporting more efficient drug discovery pipelines and reduced late-stage compound attrition.

Methodology: Benchmarking Framework for ADMET Prediction Models

Data Curation and Preprocessing Standards

The development of robust ADMET prediction models requires rigorous data curation and preprocessing, because high-quality data is the foundation of any reliable machine learning model. Current benchmarking studies typically aggregate data from multiple public sources such as ChEMBL, PubChem, and the Therapeutics Data Commons (TDC), followed by extensive standardization procedures [7] [5]. Critical preprocessing steps include: molecular standardization to obtain consistent canonical tautomers and neutral forms; removal of inorganic salts and organometallic compounds; extraction of organic parent compounds from salt forms; and deduplication, in which duplicate entries are retained only if their target values are consistent (exactly the same for binary tasks, within 20% of the inter-quartile range for regression tasks) [7]. For industrial validation, it is crucial to address dataset shift by employing both random and scaffold-based splitting. Scaffold-based splitting partitions the data by molecular scaffold, so it assesses model performance on structurally novel compounds [7] [25].
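As a rough illustration of the deduplication rule and the scaffold-based split, the sketch below works on already-standardized inputs. Several points are assumptions rather than details from the cited studies: compound keys are taken to be precomputed canonical identifiers, scaffolds are taken to be precomputed strings (for example from a cheminformatics toolkit), consistent regression duplicates are merged by their mean, and the "20% of the inter-quartile range" threshold is interpreted as a limit on the spread of a duplicate group relative to the IQR of the whole dataset.

```python
from collections import defaultdict
from statistics import mean, quantiles

def deduplicate(records, task="regression", tolerance=0.20):
    """Merge repeated measurements of a compound; drop inconsistent groups.

    records: list of (compound_key, value) pairs with standardized keys.
    """
    groups = defaultdict(list)
    for key, value in records:
        groups[key].append(value)

    max_spread = None
    if task == "regression":
        q1, _, q3 = quantiles([v for _, v in records], n=4)
        max_spread = tolerance * (q3 - q1)  # assumed reading of the 20% rule

    kept = {}
    for key, values in groups.items():
        if task == "binary":
            if len(set(values)) == 1:       # labels must agree exactly
                kept[key] = values[0]
        elif max(values) - min(values) <= max_spread:
            kept[key] = mean(values)        # assumption: keep the mean
    return kept

def scaffold_split(scaffolds, test_fraction=0.2):
    """Assign whole scaffold groups to train or test so no scaffold is shared."""
    groups = defaultdict(list)
    for index, scaffold in enumerate(scaffolds):
        groups[scaffold].append(index)
    # Fill training with the largest groups first; rarer scaffolds go to test.
    ordered = sorted(groups.values(), key=len, reverse=True)
    train_target = len(scaffolds) * (1 - test_fraction)
    train, test = [], []
    for members in ordered:
        if len(train) + len(members) <= train_target:
            train.extend(members)
        else:
            test.extend(members)
    return train, test

# Toy data: keys and values are placeholders, not real measurements.
regression_records = [("CCO", 1.0), ("CCO", 1.1), ("c1ccccc1", 2.0),
                      ("c1ccccc1", 4.0), ("CC(=O)O", 3.0), ("CCN", 5.0)]
clean_regression = deduplicate(regression_records, task="regression")
clean_binary = deduplicate([("A", 1), ("A", 1), ("B", 0), ("B", 1)], task="binary")

toy_scaffolds = ["benzene"] * 4 + ["pyridine"] * 3 + ["indole"] * 2 + ["furan"]
train_idx, test_idx = scaffold_split(toy_scaffolds)
```

In the toy data, the two conflicting benzene measurements are discarded while the two close ethanol measurements are merged, and every scaffold ends up entirely on one side of the split.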

The emergence of more comprehensive benchmark sets like PharmaBench, which comprises 52,482 entries across eleven ADMET endpoints, represents a significant advance over earlier benchmarks that were often limited in size and chemical diversity [5]. This expansion addresses the criticism that benchmark compounds differed substantially from those typically encountered in industrial drug discovery pipelines, where molecular weights commonly range from 300 to 800 Da, compared with much lower averages in earlier benchmarks (e.g., 203.9 Da in the ESOL dataset) [5].

Molecular Representations and Feature Engineering

The representation of chemical structures fundamentally influences model performance. Research indicates that effective feature engineering plays a crucial role in improving ADMET prediction accuracy [8]. Commonly employed representations include:

- Molecular Descriptors: RDKit 2D descriptors providing comprehensive physicochemical property information.

- Fingerprints: Structural fingerprints like Morgan fingerprints (also known as circular fingerprints) with a radius of 2 and 1024 bits, which capture circular substructures around each atom in the molecule.

- Molecular Graphs: Graph representations where atoms constitute nodes and bonds constitute edges, particularly suited for graph neural networks [7] [25].

Recent approaches often combine multiple representations or employ learned features to enhance predictive performance. For instance, some studies concatenate descriptors and fingerprints to capture both global and local molecular features [7], while deep learning approaches like Message Passing Neural Networks (MPNNs) directly learn feature representations from molecular graphs [7] [25].
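To make the graph representation concrete, the following sketch encodes a small molecule as atoms (nodes) and bonds (edges) and runs one round of neighbor aggregation. It is a deliberately simplified stand-in for an MPNN: a real network applies learned weight matrices and nonlinearities at each step, whereas this example uses a fixed sum. The element vocabulary and all function names are illustrative assumptions.

```python
# Ethanol (SMILES: CCO) as a heavy-atom graph: nodes are atoms, edges are bonds.
atoms = ["C", "C", "O"]
bonds = [(0, 1), (1, 2)]

ELEMENTS = ["C", "N", "O"]  # toy vocabulary for one-hot atom features

def one_hot(symbol):
    return [1.0 if symbol == element else 0.0 for element in ELEMENTS]

def build_neighbors(n_atoms, bonds):
    """Undirected adjacency list built from the bond list."""
    neighbors = {i: [] for i in range(n_atoms)}
    for a, b in bonds:
        neighbors[a].append(b)
        neighbors[b].append(a)
    return neighbors

def message_passing_step(features, neighbors):
    """Each atom adds the sum of its neighbors' vectors to its own vector."""
    updated = []
    for i, own in enumerate(features):
        message = [0.0] * len(own)
        for j in neighbors[i]:
            message = [m + f for m, f in zip(message, features[j])]
        updated.append([o + m for o, m in zip(own, message)])
    return updated

def readout(features):
    """Sum atom vectors into a single molecule-level vector."""
    return [sum(column) for column in zip(*features)]

neighbors = build_neighbors(len(atoms), bonds)
atom_features = [one_hot(symbol) for symbol in atoms]
after_one_step = message_passing_step(atom_features, neighbors)
molecule_vector = readout(after_one_step)
```

After one step, the central carbon's vector already reflects both of its neighbors, which is how repeated rounds let each atom encode a progressively larger chemical environment.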

Evaluation Metrics and Validation Protocols

Consistent model evaluation requires multiple complementary metrics to assess different aspects of predictive performance. For regression tasks, common metrics include Mean Absolute Error (MAE), Root Mean Squared Error (RMSE), and Coefficient of Determination (R²). For classification tasks, standard metrics include Accuracy, Precision, Recall, and F1-score [8] [7]. Beyond these conventional metrics, robust benchmarking incorporates cross-validation with statistical hypothesis testing to assess performance significance, applicability domain analysis to evaluate model generalizability, and external validation using completely independent datasets, particularly industrial in-house data, to test real-world performance [7] [25]. The Y-randomization test is frequently employed to verify that models learn genuine structure-property relationships rather than dataset artifacts [25].
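The regression metrics and the Y-randomization test can be sketched in plain Python as follows. The one-variable least-squares line is only a placeholder model chosen to keep the example self-contained, and the toy data, helper names, and number of shuffling rounds are assumptions; in practice the same procedure would wrap whichever ADMET model is being validated.

```python
import math
import random

def mae(y_true, y_pred):
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

def rmse(y_true, y_pred):
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))

def r2(y_true, y_pred):
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot

def fit_line(x, y):
    """Placeholder model: ordinary least squares on a single feature."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    slope = (sum((a - mx) * (b - my) for a, b in zip(x, y))
             / sum((a - mx) ** 2 for a in x))
    return slope, my - slope * mx

def predict(model, x):
    slope, intercept = model
    return [slope * a + intercept for a in x]

def y_randomization(x, y, n_rounds=20, seed=0):
    """Refit on shuffled targets; a sound model should score far worse there."""
    rng = random.Random(seed)
    true_score = r2(y, predict(fit_line(x, y), x))
    shuffled_scores = []
    for _ in range(n_rounds):
        y_perm = list(y)
        rng.shuffle(y_perm)
        shuffled_scores.append(r2(y_perm, predict(fit_line(x, y_perm), x)))
    return true_score, shuffled_scores

# Metrics on a tiny hand-checkable example.
y_true = [1.0, 2.0, 3.0, 4.0]
y_pred = [1.5, 2.0, 2.5, 4.0]
metric_values = (mae(y_true, y_pred), rmse(y_true, y_pred), r2(y_true, y_pred))

# Y-randomization on toy data with a genuine linear relationship.
x = [float(i) for i in range(10)]
y = [2.0 * a + 1.0 + (0.1 if i % 2 == 0 else -0.1) for i, a in enumerate(x)]
true_score, shuffled_scores = y_randomization(x, y)
```

If the scores on shuffled targets were close to the score on the real targets, that would suggest the model is fitting dataset artifacts rather than a structure-property relationship.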

Table 1: Key Research Reagents and Computational Tools for ADMET Modeling

| Resource Category | Specific Tools/Databases | Primary Function in Research |
|---|---|---|
| Public Data Repositories | ChEMBL, PubChem, TDC, PharmaBench | Source of experimental ADMET measurements and compound structures for model training and benchmarking |
| Cheminformatics Toolkits | RDKit, DeepChem | Molecular standardization, descriptor calculation, fingerprint generation, and scaffold analysis |
| Molecular Representations | RDKit 2D Descriptors, Morgan Fingerprints, Molecular Graphs | Encoding chemical structures into machine-readable numerical features |
| Machine Learning Frameworks | Scikit-learn, XGBoost, LightGBM, Chemprop | Implementation of algorithms for model training, hyperparameter tuning, and prediction |

Performance Comparison: Quantitative Benchmarking Across ADMET Endpoints

Systematic Benchmarking on Diverse ADMET Tasks

Comprehensive benchmarking studies provide critical insights into the relative performance of different algorithms across varied ADMET prediction tasks. A landmark study evaluating 22 ADMET tasks within the Therapeutics Data Commons benchmark group revealed that XGBoost demonstrated particularly strong performance, achieving first-rank placement in 18 tasks and top-3 ranking in 21 tasks when utilizing an ensemble of molecular features including fingerprints and descriptors [26]. This exceptional performance establishes XGBoost as a robust baseline algorithm for diverse ADMET prediction challenges. Another extensive benchmarking initiative investigating the impact of feature representations on ligand-based models found that while optimal algorithm choice exhibited some dataset dependency, tree-based ensemble methods consistently delivered competitive performance across multiple ADMET endpoints [7].

The comparative analysis extends beyond simple performance rankings to encompass computational efficiency and implementation complexity. In this regard, Random Forest algorithms often provide an attractive balance between performance, interpretability, and computational demands, particularly for research teams with limited ML engineering resources [27]. Deep learning approaches have demonstrated impressive performance in specific domains, but any gain in predictive capability typically comes with increased computational costs, data requirements, and implementation complexity [12] [7].

Table 2: Performance Comparison Across Algorithm Classes for Specific ADMET Tasks

| ADMET Task | XGBoost Performance | Random Forest Performance | Deep Learning Performance | Key Study Observations |
|---|---|---|---|---|
| Caco-2 Permeability | R²: ~0.81 [25] | Competitive but generally slightly lower than XGBoost [25] | MAE: 0.410 (MESN model) [25] | XGBoost generally provided better predictions than comparable models [25] |
| General ADMET Benchmark (22 Tasks) | Ranked 1st in 18/22 tasks [26] | Strong performance but typically outranked by XGBoost [26] | Variable performance across tasks [26] | Ensemble of features with XGBoost delivered state-of-the-art results [26] |
| Aqueous Solubility | Highly competitive accuracy [7] | Strong performance with appropriate features [7] | Performance highly dependent on architecture and features [7] | Tree-based models consistently strong; optimal features vary by dataset [7] |
| Metabolic Stability | High accuracy in classification [12] | Reliable performance [12] | State-of-the-art in some specific tasks [12] | Graph neural networks show promise for complex metabolism prediction [12] |

Industrial Validation and Transfer Learning Considerations

A critical consideration for drug discovery applications is model performance on proprietary industrial datasets, which often exhibit different chemical distributions compared to public databases. A significant study investigating the transferability of models trained on public data to internal pharmaceutical industry datasets revealed that tree-based boosting models retained a substantial degree of predictive efficacy when applied to industry data, demonstrating their robustness for practical applications [25]. This research, conducted in collaboration with Shanghai Qilu Pharmaceutical, evaluated models on an internal set of 67 compounds and found that XGBoost maintained the strongest predictive performance among the compared algorithms [25].

The industrial validation paradigm highlights a crucial advantage of tree-based ensemble methods: their relative resilience to dataset shift between public and proprietary chemical spaces. This characteristic is particularly valuable in drug discovery settings where models trained on publicly available data must generalize to novel structural series in corporate portfolios. While deep learning approaches can achieve exceptional performance on in-distribution data, their generalization capabilities may be more susceptible to degradation when faced with significant dataset shifts, though architecture advances continue to address this limitation [12] [7].

Implementation Considerations: From Prototyping to Production

Feature Representation Strategies

The selection and engineering of molecular features significantly influence model performance, often exceeding the impact of algorithm choice alone. Recent research indicates that strategic combination of multiple feature types typically outperforms reliance on single representations [7]. For instance, concatenating Morgan fingerprints with RDKit 2D descriptors integrates substructural information with comprehensive physicochemical properties, enabling models to capture both local and global molecular characteristics [25]. Systematic approaches to feature selection—including filter methods, wrapper methods, and embedded methods—have demonstrated potential to enhance model performance while reducing computational requirements [8] [7].
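A minimal sketch of the concatenation strategy followed by a simple filter-method selection step is shown below. The 4-bit "fingerprints" and two-column "descriptors" are placeholder numbers rather than computed values, and the zero-variance filter is just one example of a filter method, chosen here as an assumption; the cited studies may use different selection criteria.

```python
from statistics import pvariance

def concatenate_features(fingerprints, descriptors):
    """Join each compound's fingerprint bits and descriptor values into one row."""
    return [list(fp) + list(desc) for fp, desc in zip(fingerprints, descriptors)]

def variance_filter(matrix, threshold=0.0):
    """Filter method: keep only columns whose variance exceeds the threshold."""
    columns = list(zip(*matrix))
    kept_columns = [i for i, column in enumerate(columns)
                    if pvariance(column) > threshold]
    reduced = [[row[i] for i in kept_columns] for row in matrix]
    return reduced, kept_columns

# Placeholder features for three compounds (not real fingerprint or descriptor output).
toy_fingerprints = [
    [1, 0, 0, 1],
    [1, 1, 0, 0],
    [1, 0, 0, 1],
]
toy_descriptors = [
    [46.1, -0.3],
    [78.1, 2.1],
    [60.1, -0.2],
]

combined = concatenate_features(toy_fingerprints, toy_descriptors)
selected, kept_columns = variance_filter(combined)
```

Here the first and third fingerprint bits are constant across all compounds, so they carry no information for a model and are dropped, while the descriptor columns survive.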

Beyond traditional fixed representations, deep learning approaches offer the advantage of learned feature representations adapted to specific prediction tasks. Graph Neural Networks (GNNs), particularly Message Passing Neural Networks (MPNNs), automatically learn relevant molecular features directly from graph-structured data, potentially discovering informative chemical patterns that might be overlooked by predefined representations [7] [25]. However, recent comparative analyses suggest that fixed representations combined with tree-based models currently maintain an advantage over learned representations for many ADMET endpoints, though the performance gap continues to narrow with architectural advances [7].

Data Quality and Model Robustness