Hit to Lead (H2L) in 2025: A Strategic Guide to Accelerating Drug Discovery


Abstract

This article provides a comprehensive overview of the Hit-to-Lead (H2L) process in modern drug discovery, tailored for researchers, scientists, and development professionals. It explores the foundational principles of H2L, details cutting-edge methodological applications including AI and automation, addresses common optimization challenges, and outlines rigorous validation techniques. By synthesizing current trends and data, this guide aims to equip teams with the knowledge to compress H2L timelines, improve lead compound quality, and strengthen the bridge to successful lead optimization.

What is Hit to Lead? Establishing the Bedrock of Early Drug Discovery

The hit-to-lead (H2L) stage is a critical, defined gateway in the early drug discovery process, serving as a strategic bridge between initial screening activities and the development of an optimized lead compound [1] [2]. This phase focuses on the transformation of preliminary "hits" – compounds showing desired biological activity – into refined "leads" that possess robust pharmacological profiles and are suitable for the more resource-intensive lead optimization phase [3] [4]. The overarching goal of H2L is to identify the most promising chemical series and select the best candidates based on a multi-parameter analysis that encompasses potency, selectivity, and early developability properties [2]. In the contemporary landscape, this process is being radically accelerated through the integration of artificial intelligence, computational modeling, and high-throughput experimentation, compressing traditional timelines from months to weeks [5]. The efficiency and rigor applied during H2L are paramount, as only one in every 5,000 compounds that enter drug discovery reaches the stage of becoming an approved drug [1].

Defining Hits and Leads: Core Concepts and Characteristics

What is a Hit?

In the lexicon of drug discovery, a hit is a compound identified through a primary screen that displays a reproducible and desired biological activity against a specific therapeutic target [2] [4]. Hits are the initial starting points, but they are typically rudimentary, often exhibiting suboptimal properties such as low potency (affinities in the micromolar range), poor selectivity, or unfavorable pharmacokinetics [1] [4]. They can be discovered through several methodological approaches:

- High-Throughput Screening (HTS): This involves testing vast libraries of compounds against a target protein (biochemical) or in a cell-based (phenotypic) assay using automated technologies to rapidly identify modulators of activity [2].

- Virtual Screening (VS): A computational approach that leverages techniques like molecular docking and dynamics simulations to predict which compounds from a large library are likely to bind to the target protein [2] [5].

- Fragment-Based Drug Discovery (FBDD): This method identifies very small molecular fragments that bind weakly to the target. These fragments then serve as starting points for growing or linking into more potent and selective compounds [2].

What is a Lead?

A lead compound represents a significant advancement from a hit. It is a chemically optimized molecule within a defined chemical series that has demonstrated a robust pharmacological activity profile on the specific therapeutic target [2]. A lead compound possesses improved biological activity and better pharmacological properties, making it a viable candidate for further, extensive optimization [4]. The transition from a hit to a lead involves strategic chemical modifications and rigorous testing to enhance its efficacy, selectivity, and safety profile, ensuring it is a suitable starting point for the subsequent lead optimization (LO) phase [3] [4].

Table 1: Key Characteristics Differentiating a Hit from a Lead

| Parameter | Hit Compound | Lead Compound |

|---|---|---|

| Potency (Affinity) | Typically micromolar (µM) range [1] | Improved to nanomolar (nM) range [1] |

| Selectivity | Often poor or unconfirmed | Improved selectivity against related targets and anti-targets [1] [2] |

| Cellular Efficacy | May not show efficacy in cellular models | Demonstrated efficacy in a functional cellular assay [1] [2] |

| SAR Understanding | Preliminary or non-existent | Initial Structure-Activity Relationship (SAR) is established [1] [4] |

| ADME Properties | Usually unoptimized | Favorable early in vitro ADME properties (e.g., metabolic stability, permeability) [2] [4] |

| Primary Role | Starting point, proof-of-concept | Candidate for full-scale optimization [3] |

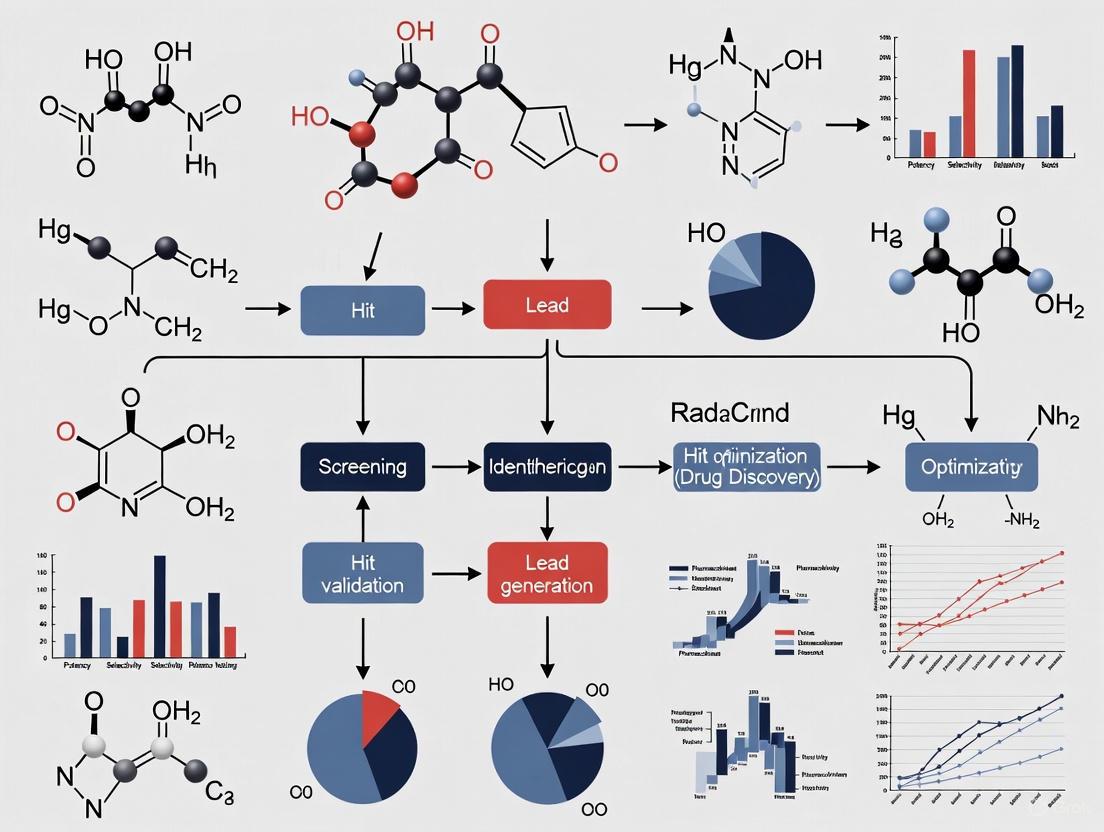

The hit-to-lead process is a systematic, multi-stage endeavor designed to validate, expand, and triage initial hits into promising lead series. The following workflow diagram encapsulates the key stages and decision gates in this process.

Stage 1: Hit Confirmation

The initial stage involves validating the authenticity and reproducibility of the primary screening hits [1]. This critical step eliminates false positives and establishes a reliable foundation for further investment. Key experimental protocols in this phase include:

- Confirmatory Testing: Re-testing the compound using the original assay conditions to ensure the initial activity is reproducible [1].

- Dose-Response Curves: Testing the compound over a range of concentrations to determine the half-maximal inhibitory/effective concentration (IC₅₀ or EC₅₀), which quantifies potency [1].

- Orthogonal Testing: Assessing confirmed hits using a different assay technology or one that more closely mimics the physiological condition to rule out assay-specific artifacts [1].

- Biophysical Testing: Employing techniques such as Surface Plasmon Resonance (SPR), Nuclear Magnetic Resonance (NMR), or Isothermal Titration Calorimetry (ITC) to confirm direct binding to the target and understand the kinetics and thermodynamics of the interaction [1] [2].
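The dose-response step above can be illustrated with a short sketch. It assumes a four-parameter logistic (Hill) model for simulating data and a simple log-linear interpolation for reading off the IC₅₀; production workflows normally fit the full curve with a nonlinear regression package instead. The function names, the dilution scheme, and the 2 µM example compound are all hypothetical.

```python
import math


def hill_response(conc, ic50, hill=1.0, top=100.0, bottom=0.0):
    """Four-parameter logistic model: % activity remaining at an inhibitor concentration."""
    return bottom + (top - bottom) / (1.0 + (conc / ic50) ** hill)


def interpolate_ic50(concs, responses, midpoint=50.0):
    """Estimate IC50 by log-linear interpolation between the two points bracketing the midpoint.

    Returns None when the response never crosses the midpoint in the tested range.
    """
    pairs = sorted(zip(concs, responses))  # ascending concentration
    for (c_lo, r_lo), (c_hi, r_hi) in zip(pairs, pairs[1:]):
        crosses = (r_lo - midpoint) * (r_hi - midpoint) <= 0
        if crosses and r_lo != r_hi:
            frac = (r_lo - midpoint) / (r_lo - r_hi)
            log_ic50 = math.log10(c_lo) + frac * (math.log10(c_hi) - math.log10(c_lo))
            return 10 ** log_ic50
    return None


# Simulated 10-point half-log dilution series (molar) for a hypothetical 2 µM inhibitor.
concs = [10 ** (-4 - 0.5 * i) for i in range(10)]
responses = [hill_response(c, ic50=2e-6) for c in concs]
estimate = interpolate_ic50(concs, responses)
print(f"Estimated IC50: {estimate * 1e6:.2f} µM")
```

A compound whose curve never reaches 50% inhibition returns `None`, which is a useful flag for hits that should not be assigned a potency value at all.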

Stage 2: Hit Expansion

Following confirmation, multiple analogs of the validated hit compounds are generated and tested to establish an initial understanding of the Structure-Activity Relationship (SAR) [1]. This helps identify the core chemical features necessary for activity.

- Objective: To explore the chemical space around the initial hit and identify a "compound cluster" or "series" with improved properties [1].

- Methodologies:

- Key Properties Assessed: During this expansion, compounds are evaluated against a wider panel of parameters, including affinity (goal: <1 µM), selectivity, efficacy in a cellular assay, and early ADME (Absorption, Distribution, Metabolism, Excretion) properties like metabolic stability and membrane permeability [1] [2].

Stage 3: Lead Identification and Series Selection

This stage involves a thorough, multi-parameter optimization (MPO) of the most promising hit series. The project team, typically comprising medicinal chemists, biologists, and DMPK scientists, executes iterative DMTA (Design-Make-Test-Analyze) cycles [2]. The objective is to synthesize new analogs with improved potency, reduced off-target activities, and physicochemical properties predictive of reasonable in vivo pharmacokinetics [1] [4]. The process is highly data-driven, leveraging SAR and often structure-based design [1]. The final output is a comparative assessment of all explored series, leading to the selection of typically two to three chemically distinct lead series that best meet the pre-defined lead criteria for progression into the Lead Optimization phase [2]. This strategy of having backup series increases the overall probability of success in downstream development [2].

Table 2: Quantitative Profile of an Ideal Lead Compound Series

| Property | Target Value/Range | Measurement Protocol |

|---|---|---|

| Potency | < 1 µM (goal: nanomolar) [1] | IC₅₀/EC₅₀ from dose-response curves in biochemical and cellular assays [1] |

| Selectivity | > 30-fold against related targets [1] | Secondary screening against a panel of related targets and anti-targets [1] |

| Ligand Efficiency (LE) | > 0.3 kcal/mol per heavy atom | Calculated from potency and non-hydrogen atom count [1] |

| Molecular Weight | Moderate (e.g., <400 Da) [1] | — |

| Lipophilicity (clogP) | Moderate (e.g., <3) [1] | Calculated Log P [1] |

| Metabolic Stability | Moderate to high (e.g., low hepatic clearance) | In vitro incubation with liver microsomes or hepatocytes [2] [4] |

| Membrane Permeability | High | Caco-2 or PAMPA assay [2] |

| Solubility | > 10 µM [1] | Kinetic or thermodynamic solubility measurement in aqueous buffer [1] |

| Cytotoxicity | Low (high therapeutic index) | Cytotoxicity assay in relevant cell lines (e.g., HEK293) [2] |

The Scientist's Toolkit: Essential Research Reagents and Solutions

The experimental rigor of the H2L phase is supported by a suite of specialized reagents, assays, and technologies.

Table 3: Key Research Reagent Solutions for Hit-to-Lead

| Reagent/Assay System | Function in H2L |

|---|---|

| SPR (Surface Plasmon Resonance) Biosensors | A biophysical technique used to confirm target binding and quantify association/dissociation kinetics (on/off rates) without labels [1] [2]. |

| Liver Microsomes / Hepatocytes | In vitro systems used to assess metabolic stability and identify major metabolites of the compound, predicting its in vivo clearance [4]. |

| Caco-2 Cell Line | A model of the human intestinal epithelium used to predict oral absorption and permeability of compounds [2]. |

| Target-Specific Biochemical Assays | Validated, robust enzymatic or binding assays used for primary screening and SAR determination during DMTA cycles [1] [3]. |

| Cell-Based Phenotypic Assays | Functional assays in a physiologically relevant cellular context to confirm efficacy and mechanism of action [1] [2]. |

| CETSA (Cellular Thermal Shift Assay) | A platform for confirming direct target engagement in intact cells or complex biological systems, bridging the gap between biochemical potency and cellular efficacy [5]. |

| Panels of Off-Target Proteins | Used in secondary screening to evaluate compound selectivity and identify potential off-target liabilities that could lead to side effects [1] [4]. |


The hit-to-lead process is a disciplined, gate-driven stage in drug discovery that transforms initial screening outcomes into qualified starting points for development. By applying a rigorous, multi-parameter optimization strategy—powered by iterative DMTA cycles and a comprehensive toolkit of biochemical, cellular, and DMPK assays—research teams can significantly de-risk the pipeline. The precise definition of hits and leads, coupled with systematic evaluation against a well-defined candidate drug target profile, is fundamental for selecting the optimal chemical series to advance into lead optimization, thereby increasing the likelihood of delivering successful new therapeutics to patients.

The Strategic Position of H2L in the Drug Discovery Pipeline

The hit-to-lead (H2L) stage represents a pivotal and strategic phase in the drug discovery pipeline, serving as an essential bridge between initial screening activities and the rigorous optimization of preclinical candidate compounds [6]. This phase is systematically designed to address the high attrition rates that plague the pharmaceutical industry by transforming preliminary "hits"—compounds identified through high-throughput screening (HTS) that show initial activity against a biological target—into refined "lead" compounds with enhanced potency, selectivity, and drug-like properties [1] [7]. The strategic positioning of H2L occurs after target validation and HTS but before lead optimization and preclinical development, making it a critical gatekeeper that determines which chemical series warrant significant further investment [6] [1].

The fundamental objective of the H2L phase is the multiparametric optimization of multiple properties simultaneously to identify chemical series with the highest potential for successful development into therapeutics [7]. This process involves intensive structure-activity relationship (SAR) investigations, preliminary absorption, distribution, metabolism, excretion, and toxicity (ADMET) profiling, and the establishment of synthetic tractability for promising compounds [6]. By focusing on these crucial parameters early in the discovery process, H2L aims to mitigate downstream failures and provide a solid foundation for the subsequent lead optimization phase, where compounds undergo further refinement for in vivo efficacy and safety testing [1]. The strategic importance of H2L has grown significantly in recent years as pharmaceutical companies face increasing pressure to reduce development timelines and costs while maintaining rigorous scientific standards for candidate selection.

Defining the H2L Process: From Hits to Leads

Conceptual Framework and Definitions

In the context of drug discovery, precise definitions distinguish "hits" from "leads" and establish clear objectives for the H2L process. A hit is typically defined as a compound that exhibits reproducible activity against a specific biological target in primary assays, usually with binding affinity or inhibitory concentration (IC50) in the micromolar range (typically 1-10 μM) [6] [7]. These initial hits are characterized by their potential for optimization rather than fully developed drug-like properties. In contrast, a lead compound represents a more advanced molecule that has undergone preliminary optimization during H2L to achieve improved potency (typically IC50 < 1 μM), demonstrated selectivity against related off-targets, and possesses preliminary evidence of acceptable ADMET properties suitable for further progression [6] [1].

The H2L process serves as the critical transition between these two states through systematic chemical optimization and biological characterization [7]. This phase typically commences 3-6 months after project initiation and lasts approximately 6-9 months, culminating in the selection of 1-5 lead series for advancement into full lead optimization [6]. The primary goals of H2L include confirming the biological relevance of initial screening hits, establishing preliminary structure-activity relationships (SAR), improving potency by several orders of magnitude (often from micromolar to nanomolar range), and ensuring compounds meet minimum criteria for drug-likeness based on established parameters such as Lipinski's Rule of Five [6] [1]. This strategic positioning enables medicinal chemists and pharmacologists to identify and address potential development liabilities early, thereby reducing the likelihood of costly late-stage failures.
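As a minimal sketch of the drug-likeness check mentioned above, the following counts Lipinski Rule of Five violations using the standard cutoffs (molecular weight ≤ 500 Da, logP ≤ 5, no more than 5 hydrogen-bond donors, no more than 10 hydrogen-bond acceptors). The descriptor values are assumed to have been calculated elsewhere, for example with a cheminformatics toolkit; the function name and example compounds are hypothetical.

```python
def rule_of_five_violations(mol_weight, logp, h_bond_donors, h_bond_acceptors):
    """Return the list of Lipinski Rule of Five criteria that a compound violates."""
    violations = []
    if mol_weight > 500:
        violations.append("molecular weight > 500 Da")
    if logp > 5:
        violations.append("logP > 5")
    if h_bond_donors > 5:
        violations.append("H-bond donors > 5")
    if h_bond_acceptors > 10:
        violations.append("H-bond acceptors > 10")
    return violations


# Hypothetical descriptor values for two compounds.
compact_hit = rule_of_five_violations(350.4, 2.5, 2, 5)
bulky_hit = rule_of_five_violations(650.8, 6.1, 2, 5)
print(compact_hit)  # no violations
print(bulky_hit)    # two violations
```

Teams commonly tolerate one violation, so the count is better treated as a flag for discussion than as an automatic filter.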

Quantitative Benchmarks for Hit and Lead Compounds

The progression from hit to lead is guided by well-established quantitative benchmarks that reflect the compound's potential for successful development. The table below summarizes the key distinguishing characteristics between hits and leads based on standard industry criteria:

Table 1: Quantitative Benchmarks Differentiating Hits from Leads

| Parameter | Hit Compound | Lead Compound |

|---|---|---|

| Potency (IC50) | 100 nM - 10 μM [6] [1] | < 1 μM (submicromolar to nanomolar range) [6] [1] |

| Molecular Weight | < 500 Da [6] | < 500 Da (ideal range) [6] |

| clogP/LogD | Variable, often high | 1-3 (LogD at pH 7.4) [6] |

| Ligand Efficiency (LE) | Preliminary assessment | > 0.3 kcal/mol per heavy atom [1] |

| Lipophilic Efficiency (LipE) | Preliminary assessment | > 5 [1] |

| Solubility | > 10 μM [1] | > 100 μM [6] |

| Selectivity | Preliminary assessment | > 10-fold against related targets [6] |

| Cellular Activity | Confirmed in secondary assays | Demonstrated efficacy in functional cellular assays [1] |

These quantitative parameters provide a framework for objective assessment of compounds throughout the H2L process. The transition from hit to lead requires significant improvement in multiple parameters simultaneously, particularly potency, which often must improve by several orders of magnitude (from micromolar to nanomolar range) while maintaining or enhancing other drug-like properties [1]. Efficiency metrics such as ligand efficiency and lipophilic efficiency have become increasingly important in contemporary H2L campaigns as they help optimize potency without excessive increases in molecular size or lipophilicity, which can negatively impact solubility and overall developability [7].

Strategic Position in the Drug Discovery Pipeline

The Integrated Drug Discovery Workflow

The H2L phase occupies a strategic position within the broader drug discovery pipeline, serving as the crucial connection between exploratory research and development-focused activities. The complete drug discovery workflow follows a sequential path: Target Validation (TV) → Assay Development → High-Throughput Screening (HTS) → Hit to Lead (H2L) → Lead Optimization (LO) → Preclinical Development → Clinical Development [1]. This positioning is significant because H2L represents the first stage where promising but unoptimized screening outputs undergo systematic transformation into compounds with genuine therapeutic potential.

The schematic workflow below illustrates the strategic position and key decision points of the H2L stage within the broader drug discovery pipeline:

The H2L phase typically begins immediately following the identification of confirmed hits from HTS campaigns and involves multiple iterative cycles of design, synthesis, and testing [6]. This stage is characterized by parallel exploration of multiple chemical series rather than focused optimization of single compounds, allowing research teams to maintain diverse options until sufficient data accumulates to prioritize the most promising scaffolds [6] [1]. The output of a successful H2L campaign is the identification of 1-5 lead series that meet predefined criteria for potency, selectivity, and developability, which then progress into the more resource-intensive lead optimization phase [6].

Gatekeeping Function and Attrition Management

The strategic importance of the H2L phase is magnified by its function as a key attrition management gate in the drug discovery pipeline. With only approximately one in every 5,000 compounds that enter drug discovery ultimately achieving regulatory approval, early and rigorous candidate selection is crucial for resource allocation and portfolio management [1]. The H2L phase addresses this challenge by implementing multi-parameter optimization (MPO) approaches that balance potency, selectivity, and drug-like properties through weighted scoring systems, typically aggregating multiple parameters on a 0-1 scale to rank compounds holistically [6].

This gatekeeping function is further enhanced by the implementation of quality filters throughout the H2L process, including assessments for hERG channel affinity to prevent cardiac toxicity risks, synthetic accessibility for scalable production, and freedom-to-operate evaluations to ensure patentability [6] [1]. By applying these rigorous criteria early in the discovery process, organizations can avoid investing significant resources into chemical series with fundamental limitations that would likely result in failure during later, more expensive development stages. The strategic positioning of H2L thus serves as a crucial risk mitigation step, enabling organizations to focus their efforts on chemical matter with the highest probability of ultimately becoming successful therapeutics.

Core Methodologies and Experimental Protocols

Hit Confirmation and Validation

The initial stage of the H2L process involves rigorous confirmation and validation of screening hits to eliminate false positives and identify compounds with genuine biological activity. This systematic approach employs orthogonal assay techniques to build confidence in the chemical series before committing significant resources to their optimization. The hit confirmation workflow involves multiple experimental methodologies:

Table 2: Experimental Protocols for Hit Confirmation

| Methodology | Experimental Protocol | Key Output Parameters |

|---|---|---|

| Confirmatory Testing | Re-testing compounds using original HTS assay conditions with freshly prepared samples [1] | Reproducibility of activity; elimination of artifacts from compound degradation or precipitation [6] |

| Dose-Response Analysis | Testing compounds across a concentration range (typically 8-12 points in serial dilution) to generate full dose-response curves [1] | IC50/EC50 values; curve characteristics (Hill slope, R²) to confirm appropriate pharmacology [6] |

| Orthogonal Assays | Testing confirmed hits in different assay formats (e.g., switching from fluorescence to luminescence readouts) or more physiologically relevant systems [1] | Confirmation of target engagement independent of assay technology; elimination of technology-specific artifacts [6] |

| Counter-Screening | Assessing activity against known nuisance targets (e.g., redox activity, aggregation) and promiscuous binders using specialized assays [6] | Identification of pan-assay interference compounds (PAINS); specificity assessment [6] |

| Biophysical Characterization | Using techniques like SPR, ITC, NMR, or DLS to confirm binding and characterize interaction kinetics and stoichiometry [1] | Direct binding confirmation; measurement of KD, kon, koff; elimination of aggregators [6] |

The implementation of these orthogonal confirmation protocols is essential for establishing a validated starting point for H2L optimization. Recent advances in this area include the integration of artificial intelligence and machine learning for predicting assay interferences such as PAINS or aggregation, thereby accelerating validation workflows and improving decision-making [6]. Furthermore, the application of cellular target engagement assays such as CETSA (Cellular Thermal Shift Assay) has emerged as a powerful approach for confirming direct target binding in physiologically relevant environments, helping to bridge the gap between biochemical potency and cellular efficacy [5].

Hit Expansion and SAR Exploration

Following hit confirmation, the H2L process advances into hit expansion and systematic exploration of structure-activity relationships (SAR). This phase aims to identify analog compounds that define initial SAR trends and improve key properties through limited chemical optimization. The primary objectives include establishing the relationship between chemical structure and biological activity, identifying key pharmacophoric elements, and improving potency while maintaining favorable physicochemical properties.

The experimental approach to hit expansion typically involves multiple parallel strategies:

SAR by Catalog: Rapid identification and testing of structurally related analogs from internal compound collections or commercial sources to establish preliminary SAR without requiring de novo synthesis [1].

Focused Library Design: Synthesis of targeted analog libraries around the hit scaffold to systematically explore key regions of the molecule and identify critical positions for modification [6].

Multi-Parameter Optimization: Concurrent assessment of multiple properties including potency, selectivity, solubility, metabolic stability, and permeability to build a comprehensive understanding of the structure-property relationships [7].

The hit expansion phase typically involves the synthesis or acquisition of 50-200 analogs per chemical series to establish robust SAR [6]. This systematic exploration enables medicinal chemists to identify positions tolerant of substitution for property optimization, regions critical for maintaining potency, and opportunities to remove structural alerts or undesirable functionality. The output of this phase is the selection of 3-6 compound series that demonstrate the most favorable balance of properties for further progression into lead identification [1].
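One common way to shortlist analogs for SAR by catalog is a fingerprint similarity search. The sketch below assumes each compound is already represented as a set of "on" fingerprint bits and ranks catalog entries by Tanimoto similarity to the hit. The bit sets, compound names, and the 0.6 cutoff are purely illustrative and are not taken from the sources cited here.

```python
def tanimoto(fp_a, fp_b):
    """Tanimoto coefficient between two fingerprints given as sets of on-bits."""
    a, b = set(fp_a), set(fp_b)
    union = a | b
    if not union:
        return 0.0
    return len(a & b) / len(union)


def nearest_analogs(hit_fp, catalog, threshold=0.7):
    """Return (name, similarity) pairs at or above the threshold, most similar first."""
    scored = [(name, tanimoto(hit_fp, fp)) for name, fp in catalog.items()]
    kept = [(name, score) for name, score in scored if score >= threshold]
    return sorted(kept, key=lambda item: item[1], reverse=True)


# Hypothetical fingerprints: integers stand in for hashed substructure bits.
hit_fp = {1, 4, 7, 9, 12}
catalog = {
    "analog_A": {1, 4, 7, 9, 13},
    "analog_B": {1, 4, 7, 9, 12, 15},
    "unrelated_C": {2, 3, 5},
}
shortlist = nearest_analogs(hit_fp, catalog, threshold=0.6)
print(shortlist)
```

In this toy catalog, `analog_B` ranks first (5 shared bits out of 6) and `unrelated_C` is filtered out.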

Quantitative Data Analysis and Decision Metrics

Key Efficiency Metrics and Property Optimization

The transition from hits to leads requires careful balancing of multiple physicochemical and pharmacological parameters. Several key efficiency metrics have been developed to guide this optimization process and ensure that improvements in potency are not achieved at the expense of molecular properties associated with good developability. The most widely applied metrics in contemporary H2L campaigns include:

Table 3: Key Efficiency Metrics for Hit-to-Lead Optimization

| Metric | Calculation | Target Value | Strategic Importance |

|---|---|---|---|

| Ligand Efficiency (LE) | −ΔG / NHA ≈ 1.37 × pIC50 / NHA, where NHA is the number of non-hydrogen (heavy) atoms [1] | > 0.3 kcal/mol per heavy atom [1] | Normalizes potency by molecular size; ensures binding efficiency [7] |

| Lipophilic Efficiency (LipE) | pIC50 - logP (or logD) [1] | > 5 [1] | Balances potency against lipophilicity; predictor of compound quality [7] |

| Ligand Lipophilicity Efficiency (LLE) | pIC50 - logP (or logD) [7] | > 5 [7] | Alternative name for LipE (same calculation); indicates whether sufficient potency is achieved without excessive lipophilicity [7] |

| Solubility | Measured kinetic or thermodynamic solubility in aqueous buffer [6] | > 100 μM [6] | Ensures adequate concentration for in vitro and in vivo testing [6] |

| Molecular Weight | Sum of atomic masses [6] | < 500 Da [6] | Maintains drug-likeness; correlates with absorption and permeability [6] |

These efficiency metrics have become fundamental tools in modern H2L campaigns as they provide a framework for evaluating compound quality beyond simple potency measurements. By monitoring these parameters throughout the optimization process, medicinal chemists can make informed decisions that balance multiple properties simultaneously and avoid the introduction of development liabilities early in the optimization process. The application of these metrics is particularly important given the common challenge of optimizing one property (such as absorption) without compromising another (such as potency) during H2L [7].
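The efficiency metrics in Table 3 reduce to simple arithmetic once an IC50, a heavy-atom count, and a logP (or logD) are available. The sketch below applies the approximations from the table; the two compounds and their property values are hypothetical and serve only to show how a hit and an optimized lead might compare.

```python
import math

KCAL_PER_LOG_UNIT = 1.37  # approximately 2.303 * R * T in kcal/mol near 300 K


def pic50_from_ic50(ic50_molar):
    """Convert an IC50 in mol/L to pIC50 (negative base-10 logarithm)."""
    return -math.log10(ic50_molar)


def ligand_efficiency(pic50, heavy_atoms):
    """LE in kcal/mol per heavy atom, using the 1.37 * pIC50 / NHA approximation."""
    return KCAL_PER_LOG_UNIT * pic50 / heavy_atoms


def lipophilic_efficiency(pic50, logp):
    """LipE (also called LLE): potency corrected for lipophilicity."""
    return pic50 - logp


# Hypothetical hit: IC50 = 5 µM, 22 heavy atoms, logP 3.5.
hit_pic50 = pic50_from_ic50(5e-6)
hit_le = ligand_efficiency(hit_pic50, 22)
hit_lipe = lipophilic_efficiency(hit_pic50, 3.5)

# Hypothetical lead: IC50 = 20 nM, 28 heavy atoms, logP 2.4.
lead_pic50 = pic50_from_ic50(20e-9)
lead_le = ligand_efficiency(lead_pic50, 28)
lead_lipe = lipophilic_efficiency(lead_pic50, 2.4)

print(f"hit:  LE={hit_le:.2f}, LipE={hit_lipe:.2f}")
print(f"lead: LE={lead_le:.2f}, LipE={lead_lipe:.2f}")
```

In this example the hit already meets the LE target of 0.3 but falls well short of LipE > 5; the lead clears both because potency rose while lipophilicity fell.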

Multi-Parameter Optimization and Lead Selection Criteria

The culmination of the H2L process involves the integrated assessment of all data to select lead compounds for progression into full lead optimization. This decision-making process typically employs multi-parameter optimization (MPO) scoring systems that weight and combine multiple critical parameters into a single composite score [6]. A typical MPO approach might incorporate parameters such as potency, selectivity, solubility, metabolic stability, permeability, and cytochrome P450 inhibition profile, with each parameter normalized to a 0-1 scale based on desired thresholds [6].
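A weighted 0-1 MPO score of the kind described above can be sketched as follows. This version assumes a linear ramp desirability function and a weighted arithmetic mean; real scoring schemes vary in both the function shape and the aggregation rule. The parameter ranges, weights, and compound values are hypothetical.

```python
def desirability(value, low, high, higher_is_better=True):
    """Map a raw value onto a 0-1 scale with a linear ramp between low and high."""
    if high == low:
        raise ValueError("low and high must differ")
    score = (value - low) / (high - low)
    score = min(1.0, max(0.0, score))  # clamp outside the ramp
    return score if higher_is_better else 1.0 - score


def mpo_score(scores, weights):
    """Weighted average of per-parameter desirability scores (each already 0-1)."""
    total_weight = sum(weights[name] for name in scores)
    return sum(scores[name] * weights[name] for name in scores) / total_weight


# Hypothetical compound: pIC50 7.5, solubility 80 µM, LogD 2.0.
scores = {
    "potency": desirability(7.5, low=6.0, high=9.0),
    "solubility": desirability(80.0, low=10.0, high=100.0),
    "logd": desirability(2.0, low=3.0, high=5.0, higher_is_better=False),
}
weights = {"potency": 2.0, "solubility": 1.0, "logd": 1.0}
composite = mpo_score(scores, weights)
print(f"MPO score: {composite:.2f}")
```

Because the score is an average, a strong value in one parameter can mask a failing one; some teams therefore also require a minimum desirability for every parameter before ranking by the composite.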

The quantitative criteria for lead selection vary depending on the target class and therapeutic area but generally include the following benchmarks:

- Potency: IC50 < 1 μM (submicromolar to nanomolar range) in functional cellular assays [6] [1]

- Selectivity: >10-100 fold against related targets or anti-targets [6]

- Solubility: >100 μM in physiologically relevant buffers [6]

- Metabolic Stability: >30-60% remaining after incubation with hepatocytes or liver microsomes [6]

- Permeability: Demonstrated cellular permeability in models such as Caco-2 or MDCK [1]

- Cytotoxicity: Minimal effects on cell viability at relevant concentrations (>10-100× IC50) [1]

- Ligand Efficiency: >0.3 kcal/mol per heavy atom [1]

Compounds that meet these integrated criteria are designated as lead compounds and progress into the lead optimization phase, where they undergo more extensive refinement of their pharmacological and pharmaceutical properties before selection of a preclinical candidate [6] [1].
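The benchmarks listed above can be encoded as a simple gate check. The sketch below uses the lower bound of each quoted range (10-fold selectivity, 30% parent remaining) and covers only a subset of the criteria; actual thresholds depend on target class and project. The class, field names, and example values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class CompoundProfile:
    name: str
    cell_ic50_um: float        # potency in a functional cellular assay, µM
    off_target_ic50_um: float  # potency against the closest related target, µM
    solubility_um: float       # aqueous solubility, µM
    pct_remaining: float       # % parent remaining after microsome/hepatocyte incubation
    ligand_efficiency: float   # kcal/mol per heavy atom


def failed_lead_criteria(c):
    """Return the names of the lead criteria that the compound does not meet."""
    failures = []
    if not c.cell_ic50_um < 1.0:
        failures.append("potency")
    if not c.off_target_ic50_um / c.cell_ic50_um > 10:
        failures.append("selectivity")
    if not c.solubility_um > 100:
        failures.append("solubility")
    if not c.pct_remaining > 30:
        failures.append("metabolic stability")
    if not c.ligand_efficiency > 0.3:
        failures.append("ligand efficiency")
    return failures


lead = CompoundProfile("lead-1", 0.05, 5.0, 150.0, 55.0, 0.38)
hit = CompoundProfile("hit-1", 4.0, 12.0, 40.0, 20.0, 0.31)
print(lead.name, failed_lead_criteria(lead))
print(hit.name, failed_lead_criteria(hit))
```

Returning the list of failed criteria, rather than a single pass/fail flag, shows the team which liabilities the next design cycle needs to address.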

Emerging Trends and Innovative Approaches

AI-Enabled Hit-to-Lead Acceleration

The H2L process is undergoing rapid transformation through the integration of artificial intelligence and machine learning approaches that accelerate and enhance decision-making. AI has evolved from a disruptive concept to a foundational capability in modern drug discovery, with machine learning models now routinely informing target prediction, compound prioritization, pharmacokinetic property estimation, and virtual screening strategies [5]. Recent advances demonstrate the significant impact of these technologies on H2L efficiency, with one 2025 study showing that integrating pharmacophoric features with protein-ligand interaction data can boost hit enrichment rates by more than 50-fold compared to traditional methods [5].

The application of deep graph networks and other AI-driven approaches has demonstrated remarkable potential for accelerating the traditionally lengthy H2L phase. In a notable 2025 case study, researchers utilized deep graph networks to generate over 26,000 virtual analogs, resulting in the identification of sub-nanomolar inhibitors with over 4,500-fold potency improvement over initial hits [5]. These AI-enabled approaches are compressing discovery timelines from months to weeks by enabling rapid design-make-test-analyze (DMTA) cycles and providing richer data for optimization decisions [5]. The integration of AI with experimental data creates a virtuous cycle of improvement, where each iteration generates higher-quality data that further refines the predictive models.

Integrated Cross-Disciplinary Platforms

Contemporary H2L campaigns increasingly rely on integrated cross-disciplinary platforms that combine expertise from computational chemistry, structural biology, pharmacology, and data science [5]. This convergence enables the development of predictive frameworks that combine molecular modeling, mechanistic assays, and translational insight to support more confident go/no-go decisions [5]. The organizations leading the field are those that can effectively combine in silico foresight with robust experimental validation, creating seamless workflows that maintain mechanistic fidelity throughout the optimization process [5].

These integrated approaches are particularly evident in the growing emphasis on physiologically relevant assay systems that better predict in vivo efficacy. Cellular target engagement assays such as CETSA have emerged as critical tools for confirming direct target binding in intact cells and tissues, thereby closing the gap between biochemical potency and cellular efficacy [5]. Recent work has applied CETSA in combination with high-resolution mass spectrometry to quantify drug-target engagement in complex biological systems, confirming dose- and temperature-dependent stabilization ex vivo and in vivo [5]. These advanced approaches provide quantitative, system-level validation that enhances decision-making confidence and reduces late-stage attrition due to inadequate target engagement.

The Scientist's Toolkit: Essential Research Reagents and Technologies

The successful execution of H2L campaigns relies on a comprehensive toolkit of research reagents, technologies, and methodologies that enable the multiparametric optimization required for lead identification. The table below details key solutions essential for contemporary H2L operations:

Table 4: Essential Research Reagent Solutions for Hit-to-Lead Programs

| Technology/Reagent | Application in H2L | Key Function |

|---|---|---|

| CETSA (Cellular Thermal Shift Assay) | Target engagement validation [5] | Confirms direct binding to cellular target in physiologically relevant environment [5] |

| Surface Plasmon Resonance (SPR) | Binding kinetics characterization [1] | Measures binding affinity (KD), association (kon), and dissociation (koff) rates [1] |

| High-Content Screening Systems | Cellular phenotype assessment [1] | Multiparametric analysis of cellular responses including efficacy and toxicity [1] |

| Metabolic Stability Assays | Hepatic clearance prediction [6] | Measures compound half-life in liver microsomes or hepatocytes [6] |

| Parallel Artificial Membrane Permeability Assay (PAMPA) | Passive permeability screening [6] | Predicts membrane penetration potential [6] |

| CYP Inhibition Assays | Drug-drug interaction potential [1] | Identifies compounds that inhibit major cytochrome P450 enzymes [1] |

| Compound Management Systems | Sample storage and distribution [6] | Maintains compound integrity and enables efficient screening [6] |

| AI-Guided Design Platforms | Compound prioritization and design [5] | Predicts properties and activity of proposed analogs before synthesis [5] |

This comprehensive toolkit enables the multiparametric optimization essential for successful H2L outcomes. The integration of these technologies into streamlined workflows allows research teams to efficiently profile compounds across multiple parameters simultaneously, generating the comprehensive data sets required for informed lead selection. As H2L approaches continue to evolve, the strategic combination of experimental and computational tools will further enhance the efficiency and success rates of this critical drug discovery phase.

The hit-to-lead (H2L) phase represents a critical juncture in drug discovery, where initial screening hits are transformed into viable lead compounds with the potential for clinical success. The primary objective during this stage is the simultaneous optimization of multiple, often competing, properties: potency at the intended biological target, selectivity against off-targets to minimize toxicity, and favorable absorption, distribution, metabolism, excretion, and toxicity (ADMET) profiles to ensure adequate safety and pharmacokinetics [8]. Failure to adequately balance these properties early in the discovery process remains a major contributor to late-stage attrition, making integrated optimization strategies essential for improving the efficiency and success rates of modern drug development pipelines [5].

This technical guide examines contemporary frameworks and methodologies for achieving this crucial balance, with a focus on the integration of artificial intelligence (AI), advanced in silico tools, and high-throughput experimental data. We position this discussion within the broader thesis that a deliberate, data-driven approach to multiparameter optimization (MPO) during the H2L phase is fundamental to generating what experienced drug hunters term "beautiful molecules" – those that are therapeutically aligned, synthetically feasible, and bring value beyond traditional approaches [8].

The Multiparameter Optimization (MPO) Framework

The ultimate goal of generative chemistry and lead optimization is not merely to generate novel molecules, but to produce candidates that holistically integrate synthetic practicality, molecular function, and disease-modifying capabilities [8]. This requires a robust MPO framework that moves beyond simple potency metrics.

Defining the "Beautiful Molecule" in H2L

A molecule's "beauty" is context-dependent, shaped by program objectives that evolve with emerging data and shifting priorities [8]. The essential considerations for a successful H2L outcome include:

- Chemical Synthesizability: Accounting for practical time and cost constraints for procurement [8].

- Favorable ADMET Properties: Ensuring a high probability of acceptable pharmacokinetics and safety [8] [9].

- Target-Specific Binding: Effective modulation of the intended biological mechanism with sufficient selectivity [8].

- The Construction of Appropriate MPO Functions: Quantitative functions to drive AI and design efforts toward project-specific objectives [8].

- Indispensable Human Feedback: The nuanced judgment of experienced drug hunters remains irreplaceable in guiding the optimization process [8].
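
As one illustration of what an MPO function of this kind might look like, the sketch below scores each property on a 0-to-1 desirability scale and combines the scores with a geometric mean, so that a single unacceptable property vetoes the compound. The property names, the thresholds, and the linear ramp shape are hypothetical choices made for illustration and are not taken from [8]; a real program would tune them to its own objectives.

```python
import math

def ramp(value, low, high):
    """Linear desirability: 0 at `low`, 1 at `high`, clipped to [0, 1].

    Pass low > high for properties where smaller values are better.
    """
    if low == high:
        raise ValueError("low and high must differ")
    score = (value - low) / (high - low)
    return min(1.0, max(0.0, score))

def mpo_score(compound, profile):
    """Geometric mean of per-property desirabilities (0 = fail, 1 = ideal)."""
    scores = [ramp(compound[name], low, high)
              for name, (low, high) in profile.items()]
    if any(s == 0.0 for s in scores):
        return 0.0  # one unacceptable property vetoes the compound
    return math.exp(sum(math.log(s) for s in scores) / len(scores))

# Hypothetical project profile: (value scoring 0, value scoring 1)
PROFILE = {
    "pIC50": (5.0, 8.0),               # higher potency is better
    "selectivity_fold": (1.0, 100.0),  # higher selectivity is better
    "logD": (5.0, 3.0),                # lower lipophilicity is better here
    "solubility_uM": (10.0, 100.0),    # higher solubility is better
}

compound = {"pIC50": 6.5, "selectivity_fold": 50.5,
            "logD": 4.0, "solubility_uM": 55.0}
insoluble = dict(compound, solubility_uM=5.0)

print(round(mpo_score(compound, PROFILE), 3))
print(mpo_score(insoluble, PROFILE))
```

The geometric mean is one common design choice because it penalizes imbalance more strongly than an arithmetic mean; weighted or non-linear desirability curves are equally valid alternatives.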

The Role of AI and Generative Models

AI and generative models have evolved from disruptive concepts to foundational capabilities in modern R&D [5]. These tools now routinely inform target prediction, compound prioritization, and property estimation. For instance, Terray Therapeutics' EMMI platform employs a full-stack AI system where generative models design property-optimized molecules using latent diffusion and reinforcement learning, while predictive models profile ADMET properties and potency across the proteome [10]. This approach allows for the exploration of vast chemical spaces while simultaneously optimizing for multiple parameters.

Table 1: Reported Efficiency Metrics for AI-Driven Hit-to-Lead Optimization

| Company/Platform | Reported Efficiency | Key Achievement |

|---|---|---|

| Exscientia [11] | ~70% faster design cycles; 10x fewer compounds synthesized | Clinical candidate achieved after synthesizing only 136 compounds |

| Terray Therapeutics (EMMI) [10] | Database of over 13 billion precise binding measurements | Exploration of "dark areas" of chemical space with custom-built focus libraries |

| AI-Guided Workflow [5] | Hit enrichment rates boosted by >50-fold | Integration of pharmacophoric features with protein-ligand interaction data |

Methodologies for Individual Parameter Assessment

Assessing and Optimizing Potency

Potency optimization requires a multi-faceted approach that combines computational and experimental techniques.

- Ultra-High-Throughput Screening: Platforms like Terray's ultra-dense microarrays enable the measurement of interactions between millions of small molecules and targets of interest, generating billions of data points to inform potency optimization [10].

- In Silico Potency Modeling: A two-tiered computational approach is emerging as a best practice. First, an ultra-fast sequence-only model evaluates thousands of generated molecules cheaply and quickly. The most promising subset is then profiled with a more accurate, structure-based multi-modal potency model [10].

- Cellular Target Engagement: Technologies like the Cellular Thermal Shift Assay (CETSA) provide direct, quantitative validation of target engagement in intact cells, confirming dose-dependent stabilization and closing the gap between biochemical potency and cellular efficacy [5].

Ensuring Selectivity

Selectivity is crucial for minimizing off-target toxicity and ensuring a sufficient therapeutic index.

- Proteome-Wide Binding Predictions: Advanced AI models predict binding affinity and potential off-target interactions across large panels of proteins, flagging compounds with potential selectivity issues early in the design process [10].

- Structural Modeling: Leveraging crystal structures and homology models to understand key binding interactions that drive selectivity, then designing molecules to exploit subtle differences between target and off-target binding sites.

- Experimental Selectivity Screening: Testing compounds against panels of related targets (e.g., kinase panels, GPCR panels) to empirically confirm computational selectivity predictions.

Early ADMET Profiling

The accurate prediction of ADMET properties remains a significant challenge, yet early identification of liabilities is critical for reducing late-stage attrition [8] [9].

- In Silico ADMET Prediction: Tools like SwissADME and ADMET Predictor are routinely deployed to filter for drug-likeness and predict key properties such as solubility, LogD, permeability, and metabolic stability before synthesis [5] [9].

- Physiologically-Based Pharmacokinetic (PBPK) Modeling: PBPK modeling bridges discovery and development by predicting human pharmacokinetics, assisting in understanding distribution, oral absorption, formulation, and drug-drug interaction potential [9].

- High-Throughput Experimental ADME: Automated, miniaturized in vitro assays for metabolic stability, plasma protein binding, and permeability enable rapid profiling of compound libraries [9]. Advances in microsampling and accelerator mass spectrometry (AMS) further support the acquisition of high-quality PK data with minimal resource expenditure [9].

Table 2: Key Experimental ADMET Assays and Their Applications in H2L

| Assay Type | Primary Function | Strategic Application in H2L |

|---|---|---|

| Metabolic Stability (e.g., microsomes/hepatocytes) [9] | Measures compound half-life in liver fractions | Prioritizes compounds with lower clearance; supports IVIVE for human PK prediction |

| Plasma Protein Binding [9] | Quantifies fraction of compound bound to plasma proteins | Informs free drug concentration estimates for efficacy and toxicity |

| Caco-2/PAMPA Permeability [5] | Predicts intestinal absorption and brain penetration | Filters compounds with poor membrane permeability |

| CYP Inhibition [9] | Identifies potential for drug-drug interactions | Flags compounds with high DDI risk early in optimization |

| hERG Binding | Assesses potential for cardiac toxicity | Early de-risking of cardiovascular safety liabilities |

Integrated Workflows for Balanced Optimization

Successful H2L campaigns require the tight integration of design, synthesis, and testing within a continuous feedback loop.

The Design-Make-Test-Analyze (DMTA) Cycle

The DMTA cycle forms the backbone of modern lead optimization, and its acceleration is key to compressing H2L timelines [5] [10].

- Design: AI-driven generative models propose novel molecular structures optimized for multiple parameters simultaneously. Reinforcement learning from human feedback (RLHF) helps align the AI's proposals with medicinal chemists' intuition and project objectives [8].

- Make: Advances in automated synthesis, including robotics-mediated chemistry and high-throughput parallel synthesis, enable the rapid production of AI-designed compounds [11] [10].

- Test: Ultra-high-throughput screening technologies and automated bioassays generate precise, high-quality data for potency, selectivity, and early ADMET properties [10].

- Analyze: Data analysis feeds back into AI models, refining their predictions and initiating the next design cycle. The entire process is becoming increasingly closed-loop, with minimal human intervention required for routine iterations [11] [10].

Diagram: The continuous, AI-integrated DMTA workflow.

The Scientist's Toolkit: Essential Research Reagents and Platforms

Table 3: Key Research Reagent Solutions for Hit-to-Lead Optimization

| Tool / Reagent | Function in H2L | Application Context |

|---|---|---|

| Ultra-Dense Microarrays (Terray) [10] | Measures millions of target-molecule interactions | Primary hit finding and SAR expansion |

| CETSA Kits [5] | Confirms target engagement in intact cells | Mechanistic validation of cellular potency |

| Human Liver Microsomes/Hepatocytes [9] | Evaluates metabolic stability and metabolite ID | Early ADME profiling; IVIVE for human clearance prediction |

| Transfected Cell Lines | Expresses specific human enzymes or transporters | Assessment of transporter-mediated DDI potential |

| PBPK/PD Modeling Software [9] | Simulates human PK and dose-response | Strategic candidate selection and human dose prediction |

| AI Foundation Models (e.g., COATI) [10] | Encodes molecules in invertible mathematical space | Generative molecular design and property prediction |

Balancing potency, selectivity, and early ADMET properties during the hit-to-lead process is a complex, multi-dimensional challenge that defines the success of subsequent drug development stages. The most effective strategies leverage integrated, AI-driven platforms that combine high-quality proprietary data, predictive modeling, and automated experimentation within a tight DMTA cycle [8] [10]. The emerging paradigm emphasizes "closing the loop" between computational design and experimental validation, while still leveraging the irreplaceable nuanced judgment of experienced drug hunters through RLHF [8].

Future progress will depend on continued improvements in the accuracy of property prediction models, particularly for complex ADMET endpoints and target-specific bioactivity [8]. Furthermore, the adoption of Model-Informed Drug Development (MIDD) principles, as outlined in emerging regulatory guidelines like ICH M15, will further solidify the role of quantitative modeling in strategic decision-making from the earliest stages of discovery [12]. By embracing these advanced frameworks and technologies, research teams can systematically navigate the vast chemical space to discover not just novel molecules, but truly "beautiful" lead compounds optimized for therapeutic success.

In the rigorous pathway of drug discovery, the hit-to-lead (H2L) phase represents a critical gateway designed to transition from initial screening outcomes to viable lead compounds [1]. This stage begins with a list of active compounds, or "hits," typically identified from a high-throughput screen (HTS) of large compound libraries [1]. The primary objective of hit confirmation is to subject these initial hits to a series of stringent tests to validate their biological activity and specificity, thereby eliminating false positives and laying a solid foundation for subsequent optimization [13] [14]. A confirmed hit is not merely active; it is a reproducible, dose-dependent, and specific modulator of the intended target, exhibiting early indicators of drug-like potential [15].

The process of hit confirmation serves as a crucial quality control checkpoint. Without it, resource-intensive lead optimization efforts risk being wasted on compounds that are artifacts of the assay technology rather than genuine bioactive entities [13]. The core pillars of this triage process are reproducibility testing, dose-response analysis, and orthogonal assay validation, which together provide a multi-faceted assessment of hit quality [13] [1]. This guide details the experimental strategies and methodologies essential for effective hit confirmation, providing a technical roadmap for researchers and drug development professionals navigating this foundational stage of early drug discovery.

Core Pillars of Hit Confirmation

Reproducibility and Confirmatory Testing

The first and most fundamental step in hit confirmation is to verify that the observed activity is real and reproducible. This process begins with confirmatory testing, which involves re-testing the initial hits using the same assay conditions and protocol as the primary screen [1]. The goal is to eliminate hits whose activity was a result of random chance, transient system fluctuations, or compound handling errors (e.g., pipetting inaccuracies, plate placement effects) [13].

A robust confirmatory assay must be pharmacologically relevant and of high quality: it should be reproducible across assay plates and screening days, and the pharmacology of standard control compounds should fall within predefined limits [15]. The assay must also be insensitive to the concentrations of solvents such as DMSO that are used to store and dilute the compound library [15]. Compounds that fail to show significant activity in this retesting phase are typically discarded immediately, conserving valuable resources for more promising candidates.

Dose-Response Curves and Hit Characterization

Once reproducible activity is confirmed, the next step is to quantify the potency and efficacy of the hit compounds. This is achieved by generating dose-response curves, where each hit is tested over a range of concentrations (typically from nanomolar to high micromolar) to determine the concentration that produces a half-maximal effect (EC50) or half-maximal inhibition (IC50) [1] [13].

The shape of the dose-response curve provides critical information about the compound's behavior [13]:

- Steep curves may indicate potential toxicity or cooperative binding.

- Shallow or bell-shaped curves can signal poor compound solubility, aggregation, or interference with the assay detection method.

- Compounds that fail to produce a reproducible sigmoidal dose-response relationship are generally discarded due to a lack of reliable activity [13].

Beyond simple potency, this stage allows for the calculation of ligand efficiency (LE), a metric that normalizes the binding affinity to the molecular size of the compound [16]. This helps prioritize smaller hits that make more efficient use of their atoms to bind to the target, which is particularly valuable for fragment-based approaches [16].
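
The LE calculation can be sketched as follows. This is a minimal example that assumes the common definition LE = -ΔG / (heavy-atom count) with ΔG = RT ln(Kd); using an IC50 in place of Kd is an approximation, and the two example compounds are invented for illustration.

```python
import math

R_KCAL = 1.987204e-3  # gas constant in kcal/(mol*K)

def ligand_efficiency(affinity_molar, heavy_atoms, temperature_k=298.15):
    """Ligand efficiency in kcal/mol per heavy atom.

    LE = -dG / N_heavy, where dG = R * T * ln(Kd) and Kd is in mol/L.
    """
    if affinity_molar <= 0 or heavy_atoms <= 0:
        raise ValueError("affinity and heavy-atom count must be positive")
    delta_g = R_KCAL * temperature_k * math.log(affinity_molar)
    return -delta_g / heavy_atoms

# Hypothetical compounds: a weak but small fragment and a larger, more potent hit
fragment_le = ligand_efficiency(100e-6, heavy_atoms=13)  # 100 µM, 13 heavy atoms
hts_hit_le = ligand_efficiency(1e-6, heavy_atoms=35)     # 1 µM, 35 heavy atoms

print(f"fragment LE = {fragment_le:.2f}, HTS hit LE = {hts_hit_le:.2f}")
```

Under these assumptions the 100 µM fragment scores about 0.42 kcal/mol per heavy atom, while the 100-fold more potent but much larger hit scores about 0.23, which shows how LE can rank a weak, compact binder above a stronger, bulkier one.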

Orthogonal Assays: Confirming Biological Relevance

Orthogonal assays are perhaps the most powerful tool for distinguishing true bioactive compounds from assay-specific artifacts. The purpose of an orthogonal assay is to confirm the bioactivity of a hit using a different readout technology or under assay conditions that are closer to the target's physiological environment [13] [1]. This step is vital because a compound that produces the same biological outcome via two distinct measurement techniques is unlikely to be a false positive.

Table 1: Common Orthogonal Assay Technologies for Hit Confirmation

| Primary Screening Readout | Orthogonal Readout Technologies | Key Applications |

|---|---|---|

| Fluorescence-based | Luminescence- or Absorbance-based readouts | Biochemical or cell-based assays; avoids fluorescent compound interference [13] |

| Bulk-readout (Plate reader) | Microscopy imaging & High-content analysis | Cell-based assays; inspects single-cell effects vs. population averages [13] |

| Biochemical Assay | Biophysical binding assays (SPR, ITC, MST, TSA, NMR) | Target-based approaches; confirms binding, measures affinity & kinetics [13] [1] |

| Immortalized Cell Line (2D) | Different cell models (3D, primary cells) | Phenotypic screening; validates hits in more disease-relevant settings [13] |

The selection of an orthogonal assay depends on the nature of the primary screen. For target-based campaigns, biophysical assays like Surface Plasmon Resonance (SPR) or Isothermal Titration Calorimetry (ITC) provide direct evidence of target engagement and can yield detailed information on binding kinetics and stoichiometry [13] [1]. In phenotypic screening, employing different cell models or high-content imaging that examines specific cellular features (e.g., morphology, translocation) can confirm the biological phenotype while offering deeper mechanistic insights [13].

Advanced Triage: Counterscreens and Selectivity Profiling

After establishing reproducible, potent, and orthogonal activity, the focus shifts to assessing a hit's specificity. Counterscreens are specifically designed to identify and eliminate compounds that act through general assay interference mechanisms rather than specific target modulation [13]. These assays test for common artifact-causing behaviors by bypassing the actual biological reaction and measuring only the compound's effect on the detection technology itself [13].

Prevalent interference mechanisms and corresponding counter-assays include:

- Autofluorescence or Signal Quenching: Testing compounds in control cells or assay systems devoid of the target [13].

- Compound Aggregation: Using detergents like Triton X-100 in the assay buffer to disrupt aggregate-based inhibition [13].

- Chemical Reactivity: Assessing promiscuous inhibition in unrelated enzyme assays.

- Chelation: Testing activity in the presence of excess divalent cations like Mg²⁺ or Zn²⁺.

Furthermore, selectivity profiling is conducted through secondary screening against related targets (e.g., other kinases in a kinase inhibitor program) or anti-targets to identify and deprioritize promiscuous hits [1]. This step is crucial for predicting potential off-target side effects early in the process.

The Hit Confirmation Workflow

Diagram: The sequential and iterative process of hit confirmation, from initial retesting to the final selection of triaged hits ready for the hit-to-lead phase.

Essential Research Reagents and Tools

A successful hit confirmation campaign relies on a suite of specialized reagents and assay technologies. The following table details key solutions used throughout the process.

Table 2: Key Research Reagent Solutions for Hit Confirmation

| Reagent/Assay Type | Function in Hit Confirmation | Specific Examples |

|---|---|---|

| Cell Viability Assays | Assess cellular fitness and rule out general toxicity as a cause for activity [13]. | CellTiter-Glo (ATP quantitation), MTT assay (Metabolic activity) [13]. |

| Cytotoxicity Assays | Measure compound-induced cell damage and membrane integrity [13]. | Lactate Dehydrogenase (LDH) assay, CytoTox-Glo, CellTox Green [13]. |

| High-Content Staining Dyes | Enable multiplexed analysis of cellular morphology and health via microscopy [13]. | DAPI/Hoechst (Nuclei), MitoTracker (Mitochondria), TO-PRO-3 (Membrane integrity) [13]. |

| Biophysical Assay Platforms | Confirm direct target binding and quantify affinity/kinetics [13] [1]. | Surface Plasmon Resonance (SPR), Isothermal Titration Calorimetry (ITC) [13]. |

| Serum Albumin | Evaluate protein binding, which can influence compound potency and availability [1]. | Human Serum Albumin (HSA) binding assays. |

| Detergents & Additives | Mitigate compound aggregation and nonspecific binding in biochemical assays [13]. | Bovine Serum Albumin (BSA), Triton X-100, Tween-20 [13]. |

Integrating Hit Confirmation into the Broader Hit-to-Lead Pipeline

Hit confirmation is not the final goal but a critical filtering mechanism within the larger hit-to-lead (H2L) pipeline. The output of a successful confirmation process is a collection of triaged hit series that demonstrate confirmed bioactivity, acceptable potency (typically < 1 μM), and initial signs of specificity and drug-likeness [1]. These qualified hits become the input for the hit expansion phase, where medicinal chemists generate analogs to establish a robust Structure-Activity Relationship (SAR) and to further improve properties [1] [17].

The criteria assessed during hit confirmation directly inform the objectives of the subsequent H2L stage. For instance, a confirmed hit with moderate potency but excellent selectivity and clean ancillary pharmacology is often a more attractive starting point than a highly potent but promiscuous compound. The rigorous experimental strategies outlined in this guide—ensuring reproducibility, quantifying response, and verifying specificity—are therefore indispensable for de-risking drug discovery projects. By investing in a thorough hit confirmation workflow, research teams can confidently allocate resources to the most promising chemical series, thereby increasing the probability of successfully delivering a development candidate with a strong foundation for preclinical and clinical success.

In the drug discovery pipeline, the hit-to-lead (H2L) stage serves as a critical gateway where initial screening hits are transformed into promising lead compounds [1]. Within this phase, hit expansion represents a systematic and multidisciplinary process aimed at exploring the structure-activity relationship (SAR) around a confirmed hit to develop a robust compound series [1] [18]. This expansion is not merely about generating numerous analogs; it is a deliberate strategy to understand which structural features correlate with desired biological activity and physicochemical properties [19]. When a single compound shows activity against a therapeutic target, it represents merely a starting point. The fundamental goal of hit expansion is to rapidly build a chemical series that allows researchers to identify key trends, mitigate potential development risks, and ultimately select the most viable lead candidates for the subsequent, more resource-intensive lead optimization phase [1] [20]. This guide details the core principles, methodologies, and experimental protocols that enable scientists to navigate this complex process efficiently.

Conceptual Foundation: From a Hit to a Series

Defining the Starting Point: The Confirmed Hit

Before expansion can begin, the initial screening hit must be rigorously validated. A confirmed hit is a compound whose activity against the biological target is reproducible and validated through a series of controlled experiments [1]. The hit confirmation process typically involves:

- Confirmatory Testing: Re-testing the compound using the original assay conditions to ensure activity is reproducible [1].

- Dose-Response Analysis: Determining the concentration that produces half-maximal inhibition or effect (IC50 or EC50) to establish potency [1].

- Orthogonal Testing: Assessing compound activity using a different assay technology or one that more closely mirrors physiological conditions [1].

- Secondary Screening: Evaluating efficacy in a functional cellular assay to confirm biological relevance [1].

- Biophysical Testing: Using techniques like surface plasmon resonance (SPR) or nuclear magnetic resonance (NMR) to confirm direct binding to the target [1].

The Objectives of Hit Expansion

Hit expansion aims to rapidly generate a compound series that elucidates the SAR, thereby reducing uncertainty and guiding subsequent optimization. The primary objectives include:

- SAR Elucidation: Understanding how structural modifications affect potency, selectivity, and other key properties [1] [19].

- Improving Potency: Enhancing affinity for the target, often from the micromolar (10⁻⁶ M) to the nanomolar (10⁻⁹ M) range [1].

- Property Optimization: Addressing drug-like properties such as metabolic stability, solubility, and membrane permeability early in the process [1] [20].

- Risk Mitigation: Identifying and overcoming potential development challenges such as cytotoxicity, poor selectivity, or chemical instability [20].

- Securing Patentability: Expanding the structural scope to establish a strong intellectual property position [1].

Strategic Methodologies for Hit Expansion

Traditional and Modern Approaches

Several strategic approaches can be employed for hit expansion, each with distinct advantages and applications.

SAR by Catalog: This approach involves identifying and purchasing structurally analogous compounds from commercial sources to quickly generate initial SAR trends without synthetic effort [1]. It is a rapid and cost-effective method for the early exploration of structural diversity around the hit.

SAR by Synthesis: Medicinal chemists design and synthesize analogs based on the original hit structure [1]. This approach allows for greater control over the structural modifications and access to novel, proprietary chemical space not available commercially.

SAR by Space: A modern computational approach that leverages vast virtual chemical spaces, such as the REAL Space (containing over 11 billion tangible compounds), to identify synthetically accessible analogs [18]. Navigation through these spaces uses algorithms like Feature Trees (FTrees), which compute similarity based on physicochemical properties, enabling the efficient selection of compounds for synthesis that are likely to be active [18].

Cross-Structure-Activity Relationship (C-SAR): An emerging methodology that analyzes pharmacophoric substituents and their substitution patterns across diverse chemotypes targeting the same biological entity [21]. This approach can accelerate SAR expansion by applying learned principles from one chemotype to another, effectively "hopping" between different structural classes.

Quantitative Analysis of Hit Expansion

The following table summarizes key metrics and criteria used to evaluate compounds during hit expansion, compiled from industry analyses and case studies [1] [16] [18].

Table 1: Key Property Targets and Analytical Metrics During Hit Expansion

| Property Category | Specific Metric | Typical Target Range | Analysis Method |

|---|---|---|---|

| Potency | Affinity (Ki/Kd/IC50) | < 1 μM → nanomolar range [1] | Dose-response curves, TR-FRET [1] [18] |

| Ligand Efficiency | LE (Ligand Efficiency) | ≥ 0.3 kcal/mol/HA [16] | Calculated from potency and heavy atom (HA) count [16] |

| Selectivity | Activity vs. related targets | >10-100 fold selectivity [20] | Counter-screening against target panels [20] |

| Cellular Activity | Efficacy in cell-based assay | Significant activity at <10 μM [1] | Secondary functional cellular assays [1] |

| Solubility | Aqueous Solubility | > 10 μM [1] | Thermodynamic solubility measurements |

| Microsomal Stability | Metabolic Half-life | Sufficient for in vivo models [1] | In vitro incubation with liver microsomes |

| Cytotoxicity | Selectivity over general toxicity | Low cytotoxicity at therapeutic concentrations [1] | Cell viability assays (e.g., MTT, CellTiter-Glo) |

The success of a hit expansion campaign is often measured by the resulting ligand efficiency (LE), which normalizes potency by molecular size, and the successful establishment of a clear structure-activity relationship (SAR) [16]. A successful campaign will typically yield multiple compound clusters or series with improved properties, from which the project team will select between three and six for further exploration [1].
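
The numeric targets in Table 1 can be applied as a simple screen over each analog's measured profile. The following is a minimal sketch: the thresholds come from the table, while the field names, the helper names, and the use of the lower 10-fold bound for selectivity are assumptions made for illustration.

```python
def selectivity_fold(target_ic50_um, off_target_ic50_um):
    """Fold selectivity: off-target IC50 divided by on-target IC50."""
    return off_target_ic50_um / target_ic50_um

# Thresholds from Table 1; dictionary keys are hypothetical field names.
CRITERIA = {
    "ic50_um": lambda v: v < 1.0,             # potency below 1 µM
    "ligand_efficiency": lambda v: v >= 0.3,  # kcal/mol per heavy atom
    "selectivity_fold": lambda v: v > 10.0,   # lower bound of the 10-100x range
    "cell_ec50_um": lambda v: v < 10.0,       # cellular activity below 10 µM
    "solubility_um": lambda v: v > 10.0,      # aqueous solubility above 10 µM
}

def unmet_criteria(profile):
    """Return the criteria an analog misses; missing data counts as a miss."""
    return [name for name, passes in CRITERIA.items()
            if name not in profile or not passes(profile[name])]

# Hypothetical analog: potent and soluble, but only 6-fold selective
analog = {
    "ic50_um": 0.25,
    "ligand_efficiency": 0.34,
    "selectivity_fold": selectivity_fold(0.25, 1.5),
    "cell_ec50_um": 2.0,
    "solubility_um": 40.0,
}

print(unmet_criteria(analog))  # prints ['selectivity_fold']
```

Returning the list of unmet criteria, rather than a single pass/fail flag, keeps the output useful for deciding which liability the next round of analogs should address.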

Experimental Protocols and Workflows

The Integrated Hit Expansion Workflow

A typical hit expansion workflow is cyclical, involving design, synthesis, testing, and data analysis to inform the next cycle. The diagram below illustrates this integrated process.

Diagram 1: Hit Expansion Workflow

Detailed Methodologies for Key Experiments

Biochemical Potency and Mechanism of Action Assays

Objective: To quantify the direct interaction between the analog and the target, and to understand its mechanism of inhibition [20].

Protocol:

- Assay Format: Use homogeneous, mix-and-read assays like Transcreener, Fluorescence Polarization (FP), or Time-Resolved FRET (TR-FRET) for efficiency and scalability [20].

- Dose-Response Curves: Test compounds over a range of concentrations (typically from 10 µM to 0.1 nM in a 3- or 10-fold dilution series) in duplicate or triplicate.

- Data Analysis: Fit the dose-response data to a four-parameter logistic (4PL) Hill equation to determine the IC50 value [16].

- Mechanistic Studies: For enzymes, vary substrate concentrations in the presence of fixed inhibitor concentrations to determine the mode of inhibition (e.g., competitive, non-competitive) [20].
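
The dilution series and curve-fitting steps above can be sketched in a few lines. This example is illustrative only: it fixes the top and bottom of the curve from controls and finds the IC50 and Hill slope by a coarse grid search so that it runs with the standard library alone. In practice a nonlinear least-squares routine (for example SciPy's `curve_fit`) would normally fit all four parameters. The function names and the synthetic data are assumptions.

```python
import math

def four_pl(conc, bottom, top, ic50, hill):
    """Four-parameter logistic: response falls from `top` to `bottom` as conc rises."""
    return bottom + (top - bottom) / (1.0 + (conc / ic50) ** hill)

def dilution_series(top_conc, fold, n_points):
    """Concentrations from `top_conc` downward in `fold`-fold steps."""
    return [top_conc / fold ** i for i in range(n_points)]

def fit_ic50(concs, responses, bottom=0.0, top=100.0):
    """Grid-search least squares over log10(IC50) and Hill slope.

    Top and bottom are held fixed, so only two parameters are estimated.
    """
    lo, hi = math.log10(min(concs)), math.log10(max(concs))
    best = None
    for i in range(201):                      # 201 log-spaced IC50 candidates
        ic50 = 10 ** (lo + (hi - lo) * i / 200)
        for j in range(5, 31):                # Hill slope 0.5 to 3.0 in 0.1 steps
            hill = j / 10
            sse = sum((four_pl(c, bottom, top, ic50, hill) - r) ** 2
                      for c, r in zip(concs, responses))
            if best is None or sse < best[0]:
                best = (sse, ic50, hill)
    return best[1], best[2]

# 11-point, 3-fold series starting at 10 µM (concentrations in mol/L)
concs = dilution_series(10e-6, 3, 11)
# Synthetic, noise-free % activity for a compound with IC50 = 50 nM, Hill = 1
observed = [four_pl(c, 0.0, 100.0, 50e-9, 1.0) for c in concs]

ic50, hill = fit_ic50(concs, observed)
print(f"IC50 ~ {ic50 * 1e9:.0f} nM, Hill slope ~ {hill:.1f}")
```

Because the grid is discrete, the recovered IC50 is only accurate to within a few percent; that is adequate for illustrating the method but not for reporting potency values.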

Cellular Target Engagement and Functional Assays

Objective: To confirm that the compound engages the target and produces the desired functional effect in a physiologically relevant cellular environment [5].

Protocol (Cellular Thermal Shift Assay - CETSA):

- Cell Treatment: Incubate cells (e.g., primary cells or relevant cell lines) with the test compound or vehicle control for a predetermined time.

- Heat Challenge: Aliquot the cell suspension and heat each aliquot to a different temperature (e.g., from 45°C to 65°C) for a fixed time (e.g., 3 minutes).

- Cell Lysis and Fractionation: Lyse the cells and separate the soluble protein fraction by centrifugation.

- Detection: Quantify the remaining soluble target protein in each fraction using Western blot or, for higher throughput, an immunoassay like TR-FRET [5].

- Data Analysis: Plot the fraction of soluble (non-denatured) protein versus temperature. A rightward shift in the melting curve for the compound-treated sample, i.e., a higher melting temperature (Tm) and therefore a positive ΔTm relative to vehicle, indicates target stabilization and successful engagement [5].
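
The CETSA data analysis step can be sketched as follows. The example assumes that soluble-protein signals have already been normalized to the lowest-temperature sample, and it estimates Tm by linear interpolation at the 50% point instead of fitting a sigmoidal melting model. The temperatures and fractions are invented for illustration.

```python
def melting_temperature(temps_c, soluble_fraction):
    """Temperature at which the soluble fraction drops through 0.5 (linear interpolation)."""
    points = list(zip(temps_c, soluble_fraction))
    for (t0, f0), (t1, f1) in zip(points, points[1:]):
        if f0 >= 0.5 > f1:
            return t0 + (f0 - 0.5) * (t1 - t0) / (f0 - f1)
    raise ValueError("curve does not cross 0.5 within the measured range")


# Hypothetical normalized readouts for vehicle- and compound-treated cells.
temps_c = [45, 49, 53, 57, 61, 65]
vehicle = [1.00, 0.90, 0.60, 0.30, 0.10, 0.02]
compound = [1.00, 0.97, 0.85, 0.60, 0.30, 0.08]

tm_vehicle = melting_temperature(temps_c, vehicle)
tm_compound = melting_temperature(temps_c, compound)
delta_tm = tm_compound - tm_vehicle  # positive value suggests stabilization
print(f"Tm vehicle = {tm_vehicle:.1f} C, Tm compound = {tm_compound:.1f} C, dTm = {delta_tm:.1f} C")
```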

In Vitro ADME and Physicochemical Profiling

Objective: To assess key developability properties of the analogs early in the process [1] [20].

Protocol (Metabolic Stability in Liver Microsomes):

- Incubation: Combine test compound (typically 1 µM), liver microsomes (e.g., 0.5 mg/mL protein), and NADPH-regenerating system in potassium phosphate buffer.

- Time Course: Incubate at 37°C and remove aliquots at multiple time points (e.g., 0, 5, 15, 30, 60 minutes).

- Reaction Termination: Stop the reaction by adding an equal volume of ice-cold acetonitrile containing an internal standard.

- Analysis: Remove precipitated protein by centrifugation and analyze the supernatant using LC-MS/MS to determine the parent compound concentration remaining at each time point.

- Data Analysis: Plot the natural logarithm of the compound concentration versus time. The slope of the linear regression is used to calculate the in vitro half-life (t1/2) and intrinsic clearance (CLint).
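
The final data analysis step can be sketched with an ordinary least-squares fit. The example assumes first-order loss of parent compound, so that t1/2 = ln 2 / k, and scales the rate constant by the microsomal protein concentration to give CLint in µL/min/mg protein. Function names and the percent-remaining values are illustrative only.

```python
import math


def linear_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    return sxy / sxx


def microsomal_stability(times_min, pct_remaining, protein_mg_per_ml):
    """Return (half-life in min, CLint in µL/min/mg protein)."""
    k = -linear_slope(times_min, [math.log(p) for p in pct_remaining])  # 1/min
    if k <= 0:
        raise ValueError("no measurable depletion; half-life cannot be estimated")
    t_half = math.log(2) / k
    cl_int = k / protein_mg_per_ml * 1000.0  # mL/min/mg converted to µL/min/mg
    return t_half, cl_int


# Hypothetical time course at 0.5 mg/mL microsomal protein.
times_min = [0, 5, 15, 30, 60]
pct_remaining = [100.0, 89.0, 71.0, 50.0, 25.0]
t_half, cl_int = microsomal_stability(times_min, pct_remaining, 0.5)
print(f"t1/2 = {t_half:.1f} min, CLint = {cl_int:.1f} µL/min/mg")
```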

The Scientist's Toolkit: Essential Reagents and Materials

Successful hit expansion relies on a suite of specialized reagents, assay platforms, and computational tools. The following table details key solutions used in the process.

Table 2: Research Reagent Solutions for Hit Expansion

| Tool Category | Specific Solution | Function & Application |

|---|---|---|

| Biochemical Assays | Transcreener Assays [20] | Homogeneous, high-throughput measurement of enzyme activity (e.g., kinases, GTPases). |

| Cellular Assays | CETSA (Cellular Thermal Shift Assay) [5] | Quantifies target engagement and binding in intact cells under physiological conditions. |

| Computational Platforms | Schrödinger Suite [22] | Provides in silico tools for FEP+ binding affinity prediction, de novo design, and property prediction. |

| Chemical Spaces | REAL Database & REAL Space [18] | Vast collections of commercially available and virtually accessible, synthetically feasible compounds for analog sourcing. |

| Data Analysis & Collaboration | LiveDesign [22] | A cloud-native platform for sharing, revising, and testing design ideas with team members in real-time. |

Advanced Topics and Future Directions

Leveraging Large Chemical Spaces

The concept of "SAR by Space" represents a paradigm shift in hit expansion [18]. Instead of being limited to enumerated compound libraries, researchers can now navigate combinatorial chemical spaces containing billions of virtual molecules that are readily synthesizable from validated building blocks. For instance, the REAL Space, built from over 130,000 building blocks and 106 validated reactions, contained 647 million molecules in its first version and has since grown to over 11 billion [18]. Navigation is accomplished using tree-based molecular descriptors (FTrees) and dynamic programming, allowing for the identification of close neighbors with improved properties within weeks, as demonstrated by the discovery of novel, potent BRD4 inhibitors [18].

The Role of AI and Automation

Artificial intelligence (AI) and automation are rapidly compressing hit expansion timelines. AI-driven analysis can now predict off-target interactions and suggest optimal synthetic routes [5]. In a 2025 case study, deep graph networks were used to generate over 26,000 virtual analogs, leading to the identification of sub-nanomolar inhibitors of MAGL with a 4,500-fold potency improvement over the initial hit [5]. When combined with high-throughput automated synthesis and miniaturized assay platforms, these technologies enable ultra-rapid design-make-test-analyze (DMTA) cycles, reducing what was once a multi-month process to a matter of weeks [5].

Cross-Structure-Activity Relationship (C-SAR)

The C-SAR approach is a novel methodology that accelerates structural development by extracting SAR data from a diverse library of molecules with different parent structures (chemotypes) that all target the same protein [21]. By analyzing Matched Molecular Pairs (MMPs)—pairs of compounds that differ only by a single defined structural change—across multiple chemotypes, researchers can identify "activity cliffs" and derive general rules about which pharmacophoric substitutions positively or negatively influence activity [21]. This allows for the intelligent transformation of inactive compounds into active ones, even when moving between different chemical scaffolds, thereby expanding the utility of SAR data beyond a single chemical series [21].
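
To make the idea concrete, the sketch below aggregates potency changes for the same structural transformation across several chemotypes. It assumes the matched molecular pairs have already been identified upstream, uses a 2 log-unit change as an illustrative activity-cliff threshold, and works on invented data. It is not the published C-SAR implementation.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical matched pairs: (chemotype, transformation, pIC50 before, pIC50 after)
pairs = [
    ("quinazoline", "H>>F", 6.1, 6.9),
    ("indole", "H>>F", 5.8, 6.5),
    ("pyrazole", "H>>F", 6.0, 6.6),
    ("quinazoline", "OMe>>OH", 7.2, 5.0),
    ("indole", "OMe>>OH", 6.4, 6.1),
]


def summarize_transformations(pairs, cliff_threshold=2.0):
    """Mean potency change per transformation, plus chemotypes showing a cliff."""
    by_transform = defaultdict(list)
    for chemotype, transform, before, after in pairs:
        by_transform[transform].append((chemotype, after - before))
    summary = {}
    for transform, deltas in by_transform.items():
        summary[transform] = {
            "mean_delta": mean(d for _, d in deltas),
            "n_chemotypes": len({c for c, _ in deltas}),
            "cliffs": [c for c, d in deltas if abs(d) >= cliff_threshold],
        }
    return summary


summary = summarize_transformations(pairs)
for transform, stats in summary.items():
    print(transform, stats)
```

A transformation that shifts potency in the same direction across several chemotypes is a candidate general rule, whereas one that produces a cliff in a single chemotype is more likely scaffold-specific.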

The Modern H2L Toolkit: AI, Automation, and Integrated Workflows

The Central Role of Artificial Intelligence in Target Prediction and Virtual Screening

The hit-to-lead process is a critical early-stage bottleneck in drug discovery, defined by the resource-intensive task of transforming initial "hit" compounds from screening into validated "lead" candidates with confirmed activity and optimized properties. Artificial Intelligence (AI) has emerged as a transformative force in this domain, compressing timelines and improving success rates. Traditional drug discovery is lengthy and costly, often requiring over 12 years and more than $2.5 billion from initial compound identification to regulatory approval. Attrition is high: the probability of success is 52% in Phase I, and the overall clinical success rate is merely 8.1% [23]. AI addresses these inefficiencies by enabling researchers to extract molecular structural features effectively, analyze drug–target interactions (DTI) in depth, and systematically model the complex relationships among drugs, targets, and diseases [23]. This technical guide explores the integral role of AI in reshaping target prediction and virtual screening within the hit-to-lead framework, providing detailed methodologies and resource toolkits for research implementation.

AI-Driven Target Prediction

Target prediction involves identifying and validating the biological macromolecules, typically proteins, most likely to respond to therapeutic intervention for a specific disease. AI leverages massive heterogeneous datasets to illuminate novel, previously overlooked targets.

Data Integration and Analysis

AI platforms integrate diverse biological data sources to build a comprehensive understanding of disease mechanisms. PandaOmics (Insilico Medicine) exemplifies this approach, combining multi-omics data (genomics, transcriptomics), biological network analysis, and natural language processing (NLP) of scientific literature and patents to rank potential drug targets [24]. This system identified TNIK, a kinase not previously studied in idiopathic pulmonary fibrosis, as a top-ranking novel target, later validated in preclinical studies [24]. Another approach, utilized by Recursion Pharmaceuticals, involves generating high-content cellular images and single-cell genomics data to map human biology at scale. Their "Operating System" uses this massive phenomic dataset to continuously train machine learning (ML) models, enabling the rapid identification of novel biological pathways and druggable targets [24].

Machine Learning Methodologies

Target prediction employs several ML paradigms, each suited to different data environments and prediction goals, as detailed in the table below.

Table 1: Machine Learning Paradigms for Target Prediction

| ML Paradigm | Primary Function | Key Algorithms | Application in Target Prediction |

|---|---|---|---|

| Supervised Learning | Classification, Regression | Support Vector Machines (SVM), Random Forests (RF) [23] | Building predictive models from labeled data to classify target-disease associations or predict target druggability. |

| Unsupervised Learning | Clustering, Dimensionality Reduction | Principal Component Analysis (PCA), K-means Clustering [23] | Identifying latent patterns and structures in unlabeled omics data to reveal novel disease subtypes and associated targets. |

| Semi-Supervised Learning | Leveraging labeled & unlabeled data | Model collaboration, simulated data generation [23] | Enhancing prediction reliability when labeled data is scarce by incorporating a large pool of unlabeled data. |

| Reinforcement Learning | Optimization via decision processes | Markov decision processes [23] | Iteratively refining policies to explore and optimize target selection strategies against multi-parameter reward functions. |

The following diagram illustrates the typical AI-driven workflow for target identification, from data integration to final target prioritization.

AI-Enhanced Virtual Screening

Virtual screening (VS) computationally evaluates vast molecular libraries to identify structures most likely to bind a target. AI has revolutionized VS, enabling the screening of ultra-large chemical libraries far beyond the capacity of traditional physical methods [24].

Key Methodologies: SBVS and LBVS

Two primary computational strategies dominate the field, both augmented by AI:

- Structure-Based Virtual Screening (SBVS): This method relies on the 3D structural information of the target protein. AI enhances SBVS through tools like AlphaFold, which predicts protein structures with near-experimental accuracy, providing models for targets with unknown structures [25]. Deep learning models can then predict molecular binding affinities by learning from known receptor-ligand complexes, significantly outperforming classical docking scoring functions [24]. This allows for the screening of billions of compounds in silico [26].

- Ligand-Based Virtual Screening (LBVS): When 3D structural data is unavailable, LBVS uses the chemical structures of known active compounds to identify new molecules with similar bioactivity. Machine learning models are trained on chemical fingerprints of active and inactive compounds to recognize latent patterns associated with activity, enabling the prediction of novel bioactive molecules [26]. DeepChem is a popular open-source tool that democratizes the use of deep learning for such tasks in drug discovery [27].
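
Before any model is trained, the simplest ligand-based baseline is to rank a library by fingerprint similarity to a known active. The sketch below shows that baseline, not the machine learning classifiers described above. It represents each fingerprint as a set of on-bit indices and computes the Tanimoto coefficient. The compound names and bit sets are made up, and in practice the fingerprints would come from a cheminformatics toolkit.

```python
def tanimoto(fp_a, fp_b):
    """Tanimoto coefficient between two fingerprints given as sets of on-bit indices."""
    a, b = set(fp_a), set(fp_b)
    union = len(a | b)
    return len(a & b) / union if union else 0.0


def rank_by_similarity(query_fp, library):
    """Return (name, similarity) pairs sorted from most to least similar."""
    scored = [(name, tanimoto(query_fp, fp)) for name, fp in library.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)


# Hypothetical fingerprints (on-bit indices) for one known active and a small library.
known_active = {3, 17, 42, 88, 120, 256}
library = {
    "cmpd_A": {3, 17, 42, 88, 120, 300},
    "cmpd_B": {5, 17, 99, 256},
    "cmpd_C": {1, 2, 4, 8},
}

for name, score in rank_by_similarity(known_active, library):
    print(f"{name}: {score:.2f}")
```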

The TAME-VS Platform: An Integrated Workflow

The TArget-driven Machine learning-Enabled VS (TAME-VS) platform provides a flexible, publicly accessible framework for early-stage hit identification [26]. Its workflow, which can be initiated with a single protein UniProt ID, is modular and automated. The platform's methodology offers a robust protocol for AI-enabled virtual screening.

Table 2: Experimental Protocol for ML-Enabled Virtual Screening (Based on TAME-VS)

| Step | Module | Protocol Description | Key Parameters & Tools |

|---|---|---|---|

| 1 | Target Expansion | Perform a global protein sequence homology search using BLAST (BLASTp). Expands the target list to proteins with high sequence similarity. | Tool: Biopython package. Parameter: Default sequence similarity cutoff = 40% (user-configurable). |

| 2 | Compound Retrieval | Extract reported active/inactive ligands for the expanded target list from the ChEMBL database via an API. | Tool: chembl_webresource_client Python package. Parameter: Default activity cutoff = 1,000 nM (for Ki, IC50, EC50); 50% for % inhibition. |

| 3 | Vectorization | Compute molecular fingerprints for extracted compounds, converting structures into a numerical format for ML. | Tools: RDKit. Fingerprint Types: Morgan, AtomPair, TopologicalTorsion, MACCS keys. |

| 4 | ML Model Training | Train supervised ML classification models to distinguish between active and inactive compounds. | Default Algorithms: Random Forest (RF), Multilayer Perceptron (MLP). Data: Labeled datasets from Steps 2 and 3. |

| 5 | Virtual Screening | Apply trained ML models to screen user-defined compound collections (e.g., Enamine diversity 50K library). | Output: Compounds ranked by predicted activity scores. |

| 6 | Post-VS Analysis | Evaluate drug-likeness and key physicochemical properties of the top-ranked virtual hits. | Metrics: Quantitative Drug-likeness (QED), solubility, metabolic stability, etc. |
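
The default activity cutoffs listed in Step 2 can be expressed as a small labeling function. This is a sketch under stated assumptions, not TAME-VS code: the record layout is a hypothetical dictionary and not the schema returned by the ChEMBL client, and treating values exactly at the cutoff as active is an assumption.

```python
POTENCY_TYPES = {"Ki", "IC50", "EC50"}  # values assumed to be reported in nM


def label_activity(record, potency_cutoff_nm=1000.0, inhibition_cutoff_pct=50.0):
    """Return True (active), False (inactive), or None if the type is unsupported."""
    kind, value = record["type"], record["value"]
    if kind in POTENCY_TYPES:
        return value <= potency_cutoff_nm  # lower concentration means more potent
    if kind == "Inhibition":
        return value >= inhibition_cutoff_pct  # percent inhibition at a fixed dose
    return None


# Hypothetical bioactivity records for illustration.
records = [
    {"compound": "cmpd_1", "type": "IC50", "value": 120.0},
    {"compound": "cmpd_2", "type": "Ki", "value": 8500.0},
    {"compound": "cmpd_3", "type": "Inhibition", "value": 72.0},
    {"compound": "cmpd_4", "type": "Kd", "value": 50.0},
]

labels = {r["compound"]: label_activity(r) for r in records}
print(labels)
```

Records with unsupported measurement types are left unlabeled here so that they can be reviewed or dropped explicitly before model training.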

The following workflow diagram maps the sequence of these modules from input to final hit nomination.

The Scientist's Toolkit: Essential Research Reagents & Platforms

Successful implementation of AI-driven hit-to-lead campaigns relies on a suite of computational tools, data resources, and experimental platforms. The following table details key solutions and their functions.

Table 3: Essential Research Reagent Solutions for AI-Driven Hit Identification

| Category | Tool/Platform | Primary Function | Key Features |

|---|---|---|---|

| Open-Source Software | DeepChem [27] | Open-source deep learning toolkit for drug discovery, materials science, and biology. | Democratizes access to deep learning models; compatible with Amazon SageMaker for scalable computing. |