From Lab to Real World: 7 Data-Centric Strategies to Boost AI Model Transferability in Drug Development

Abstract

This article provides a strategic roadmap for researchers and drug development professionals to enhance the performance and reliability of AI/ML models when applied to real-world industry data. Covering the full model lifecycle—from foundational data strategies and modern methodological approaches like Small Language Models (SLMs) and MLOps to troubleshooting for common deployment challenges and rigorous validation frameworks—it offers actionable insights to bridge the gap between experimental research and industrial application. Readers will learn how to implement a 'Fit-for-Purpose' modeling approach, leverage expert-in-the-loop evaluation, and navigate the technical and organizational hurdles critical for successful model transferability in biomedical research.

Laying the Groundwork: Understanding Data and Domain Challenges in Industrial AI

Troubleshooting Guide: Common Model Transferability Failures and Solutions

This guide addresses specific, high-stakes challenges you may encounter when transferring computational or experimental models from development to real-world industry applications.

1. Problem: My model, which had superhuman clinical performance at its training site, performs substantially worse at new validation sites.

- Underlying Cause: This is a classic failure to transport, often resulting from differences in the multivariate data distribution between your training dataset and the application dataset at the new site [1]. This can be due to site-specific clinical practices, variations in disease prevalence, or differences in data collection protocols.

- Troubleshooting Steps:

- Audit Data Definitions: Meticulously verify that the prediction target and all input variables are defined identically between the original and new sites. A subtle difference in the sepsis label definition, for example, was a key reason for the performance drop of a commercial sepsis prediction model [1].

- Check for Information Leaks: Scrutinize your training data for information that would not be available at prediction time in a real-world setting. Typical leaks include data generated after the clinical event and negative instances constructed from the full length of a hospital admission [1].

- Quantify the Shift: Use metrics like the transfer ratio, \( R^{t}_{\mathrm{transfer}} = \frac{\text{Performance}_{\mathrm{real}}}{\text{Performance}_{\mathrm{ideal}}} \), to measure the performance drop between your ideal (training) and real-world (application) environments [2].
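As a minimal sketch of the transfer ratio, the Python below computes ROC AUC on held-out data from the training site ("ideal") and from a new site ("real") and divides the two. It assumes AUC is the performance metric of interest; the labels and scores are hypothetical, and AUC is computed with the Mann-Whitney statistic so that only the standard library is needed.

```python
def roc_auc(labels, scores):
    """ROC AUC via the Mann-Whitney statistic: the probability that a random
    positive is scored above a random negative (ties count as one half)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def transfer_ratio(perf_real, perf_ideal):
    """Ratio of real-world (application) to ideal (training-site) performance."""
    return perf_real / perf_ideal


# Hypothetical held-out predictions from the training site and from a new site.
auc_ideal = roc_auc([0, 0, 1, 1], [0.1, 0.2, 0.8, 0.9])
auc_real = roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])
print(auc_ideal, auc_real, transfer_ratio(auc_real, auc_ideal))  # 1.0 0.75 0.75
```

A ratio near 1 means little performance was lost in transfer. The ratio only summarizes the drop; it does not diagnose the cause, so it complements the definition and leakage audits rather than replacing them.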

2. Problem: A cell line model predicts drug response with high accuracy but fails to predict patient outcomes.

- Underlying Cause: This is a fundamental translational challenge. Cell line models are trained on in vitro data that lacks the complexity of the human body, including the immune system and the tumor microenvironment [3]. A model that performs well on cell lines may therefore be learning noise or patterns that are irrelevant to human biology.

- Troubleshooting Steps:

- Systematic Pipeline Scan: Don't just optimize one part of your model. Systematically test different combinations of data preprocessing, feature selection, and algorithm choices. Research has shown that scanning thousands of pipeline combinations can reveal optimal, robust settings for patient data prediction [3].

- Incorporate Homogenization: Use batch-effect correction methods like Remove Unwanted Variation (RUV) or ComBat to bridge the genomic differences between in vitro cell line data and in vivo patient data [3].

- Validate Across Multiple Datasets: A model that performs perfectly on one patient dataset may fail on another. Always validate your translational model on multiple, independent patient cohorts to ensure its robustness and clinical relevance [3].
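RUV and ComBat are full statistical methods; the Python below is only a simplified illustration of the idea behind homogenization. It standardizes each gene within each dataset (a plain per-batch location/scale adjustment, without ComBat's empirical Bayes shrinkage or RUV's estimation of unwanted-variation factors), so that cell line and patient expression values end up on a common scale. The matrices are hypothetical, with rows as samples and columns as the same genes in the same order in both datasets.

```python
from statistics import mean, pstdev


def standardize_within_batch(matrix):
    """Z-score every gene (column) using only this batch's samples (rows).

    Genes with zero variance in the batch are set to 0.0 to avoid division by zero.
    """
    n_genes = len(matrix[0])
    stats = []
    for j in range(n_genes):
        column = [row[j] for row in matrix]
        stats.append((mean(column), pstdev(column)))
    return [
        [(row[j] - mu) / sd if sd > 0 else 0.0 for j, (mu, sd) in enumerate(stats)]
        for row in matrix
    ]


# Hypothetical expression values on very different scales (e.g., two platforms).
cell_lines = [[10.0, 5.0], [12.0, 7.0]]
patients = [[2.0, 1.0], [4.0, 3.0]]
print(standardize_within_batch(cell_lines))  # [[-1.0, -1.0], [1.0, 1.0]]
print(standardize_within_batch(patients))    # [[-1.0, -1.0], [1.0, 1.0]]
```

A caveat of this shortcut is that it also removes genuine biological differences in each gene's mean between the two cohorts, which is one reason the dedicated methods model unwanted variation more carefully.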

3. Problem: My model trained on synthetic or simulated data does not generalize to real-world data (the "sim-to-real" gap).

- Underlying Cause: The synthetic data does not fully capture the uncontrolled variability, noise, and complex distributional shifts present in real-world data [2].

- Troubleshooting Steps:

- Implement Hybrid Training: Combine synthetic pre-training with targeted fine-tuning on a modest set of real-world data. This leverages the scalability of synthetic data while anchoring the model in reality [2].

- Use Domain Randomization: During training, randomize parameters in your simulations (e.g., lighting, textures, noise levels). This teaches the model to learn invariances and become more robust to the variations it will encounter in real life [2].

- Apply Blockwise Transfer: For complex models like Graph Neural Networks (GNNs), use a blockwise transfer approach: freeze general low-level encodings learned from synthetic data, and only fine-tune the higher-level, task-specific layers with a small amount of real data. This can reduce error (MAPE) by up to 88% with minimal real data [2].
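The blockwise idea can be sketched without a deep-learning framework. In the hypothetical Python below, a two-unit encoder with fixed weights stands in for low-level blocks pre-trained on synthetic data, and only a linear task-specific head is fitted by stochastic gradient descent on a handful of "real" points. The weights, data, and learning rate are illustrative assumptions, not values from [2]. In PyTorch the same effect is usually achieved by setting `requires_grad = False` on the encoder's parameters and passing only the head's parameters to the optimizer.

```python
import math

# Hypothetical low-level block "pre-trained" on synthetic data: (weight, bias) per unit.
FROZEN_ENCODER = ((0.8, -0.3), (-0.5, 0.6))


def encode(x):
    """Frozen block: its weights are read but never updated during fine-tuning."""
    return [math.tanh(w * x + b) for w, b in FROZEN_ENCODER]


def predict(head, x):
    """Task-specific head: a linear layer on the frozen features (last entry is the bias)."""
    features = encode(x)
    return sum(w * f for w, f in zip(head[:-1], features)) + head[-1]


def mse(head, xs, ys):
    return sum((predict(head, x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def fine_tune_head(head, xs, ys, lr=0.05, epochs=200):
    """Stochastic gradient descent on the head only; the encoder stays frozen."""
    head = list(head)
    for _ in range(epochs):
        for x, y in zip(xs, ys):
            features = encode(x)
            err = predict(head, x) - y
            for i, f in enumerate(features):
                head[i] -= lr * err * f
            head[-1] -= lr * err
    return head


# A small, hypothetical set of real-world measurements.
xs = [0.0, 0.5, 1.0, 1.5, 2.0]
ys = [0.1, 0.4, 0.8, 1.1, 1.3]
head = fine_tune_head([0.0, 0.0, 0.0], xs, ys)
print(f"MSE before: {mse([0.0, 0.0, 0.0], xs, ys):.3f}, after: {mse(head, xs, ys):.3f}")
```

Because only three head parameters are learned, a few real data points can be enough to adapt the model, which is the intuition behind fine-tuning only the higher-level layers.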

4. Problem: I need to adapt an existing model to a new context but have very limited local data.

- Underlying Cause: Developing a new model from scratch is not feasible, but a general model lacks local specificity.

- Troubleshooting Steps:

- Structured Model Adaptation: Follow a formal guideline for model adaptation [4]:

- Step 1: Revision & Design: Collaborate with domain experts to assess the general model's structure and identify which components (e.g., conditional probability tables in a Bayesian network) need adjustment for the new context.

- Step 2: Knowledge Acquisition: Gather all available information for the new context, including limited local data, peer-reviewed literature, and expert knowledge.

- Step 3: Parameterization: Use techniques like linguistic labels or scenario-based elicitation to formally incorporate expert knowledge and update the model's parameters for the new site [4].
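As one way to carry out Step 3, the hypothetical Python below maps linguistic labels to numbers, normalizes them into an expert-elicited row of a conditional probability table, and then combines that row with sparse local counts by treating it as a Dirichlet prior (the posterior mean with an "equivalent sample size" of pseudo-counts). The label-to-probability scale, state names, and equivalent sample size are illustrative assumptions; this is a standard Bayesian-updating technique, not necessarily the procedure used in [4].

```python
# Hypothetical verbal scale; real elicitation studies calibrate their own mapping.
LINGUISTIC_SCALE = {
    "very unlikely": 0.05,
    "unlikely": 0.25,
    "even odds": 0.5,
    "likely": 0.75,
    "very likely": 0.95,
}


def expert_cpt_row(labels_by_state):
    """Convert an expert's verbal judgment for each state into a normalized distribution."""
    raw = {state: LINGUISTIC_SCALE[label] for state, label in labels_by_state.items()}
    total = sum(raw.values())
    return {state: value / total for state, value in raw.items()}


def update_cpt_row(prior, local_counts, equivalent_sample_size):
    """Posterior mean of a Dirichlet prior (pseudo-counts = ESS * prior) given local counts."""
    n_local = sum(local_counts.get(state, 0) for state in prior)
    return {
        state: (equivalent_sample_size * p + local_counts.get(state, 0))
        / (equivalent_sample_size + n_local)
        for state, p in prior.items()
    }


# Expert view for the new site, then 4 hypothetical local observations.
prior = expert_cpt_row({"low": "likely", "high": "unlikely"})
posterior = update_cpt_row(prior, {"low": 1, "high": 3}, equivalent_sample_size=4)
print(prior, posterior)  # {'low': 0.75, 'high': 0.25} {'low': 0.5, 'high': 0.5}
```

A larger equivalent sample size keeps the row closer to the expert view; a smaller one lets the local data dominate sooner.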


Frequently Asked Questions (FAQs)

Q1: How does transfer learning differ from traditional machine learning in this context? A: Traditional machine learning trains a new model from scratch for every task, requiring large, labeled datasets. Transfer learning reuses a pre-trained model as a starting point for a new, related task. This leverages prior knowledge, significantly reducing computational resources, time, and the amount of data needed, which is crucial for drug development where real patient data can be scarce and expensive [5].

Q2: What is "negative transfer" and how can I avoid it? A: Negative transfer occurs when knowledge from a source task adversely affects performance on the target task. This typically happens when the source and target tasks are too dissimilar [5]. To avoid it, carefully evaluate the similarity between your original model's context and the new application context. Do not assume all tasks are transferable. Using hybrid modeling approaches that incorporate mechanistic knowledge can also make models more robust to such failures [6].

Q3: Are there specific modeling techniques that enhance transferability? A: Yes, hybrid modeling (or grey-box modeling) combines mechanistic understanding with data-driven approaches. For example, a hybrid model developed for a Chinese hamster ovary (CHO) cell bioprocess was successfully transferred from shake flask (300 mL) to a 15 L bioreactor scale (a 1:50 scale-up) with low error, demonstrating excellent transferability by leveraging known bioprocess mechanics [6]. Intensified Design of Experiments (iDoE), which introduces parameter shifts within a single experiment, can also provide more process information faster and build more robust models [6].

Q4: What are the ethical considerations in model transferability? A: Using pre-trained models raises questions about the origin, bias, and ethical use of the original training data. It is critical to ensure that models are transparent and that their use complies with ethical and legal standards. Furthermore, a model that fails to transport could lead to incorrect clinical decisions, highlighting the need for rigorous validation and transparency about a model's limitations and intended use case [5] [1].

Experimental Protocols for Enhancing Transferability

Protocol 1: Implementing a Hybrid Modeling and iDoE Workflow

This methodology reduces experimental burden while building transferable models for bioprocess development [6].

- Experimental Design: Define a design space with Critical Process Parameters (CPPs), such as cultivation temperature and feed glucose concentration, at multiple levels.

- Intensified DoE (iDoE) Execution: Perform experiments where intra-experimental shifts of CPPs are introduced. This characterizes multiple CPP combinations within one run, maximizing information output on cell rates (growth, consumption, formation).

- Data Collection: At a small scale (e.g., shake flasks), collect high-frequency data on process responses such as viable cell concentration (VCC) and product titer.

- Hybrid Model Building: Develop a model that integrates mechanistic equations (e.g., for cell growth) with data-driven components (e.g., artificial neural networks to model complex, non-linear relationships between CPPs and specific rates).

- Model Transfer and Validation: Apply the hybrid model, without re-calibration, to predict process outcomes at a larger scale (e.g., 15 L bioreactor). Validate model predictions (VCC, titer) against new experimental data from the larger scale using metrics like Normalized Root Mean Square Error (NRMSE).
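The structure of such a hybrid model can be sketched in Python: mechanistic mass balances for viable cells, glucose, and product are integrated with explicit Euler steps, while a data-driven block supplies the specific rates as a function of the CPPs. Every number here is a hypothetical placeholder: the linear `data_driven_rates` function stands in for a trained ANN, units are illustrative, and NRMSE is normalized by the range of the observations, which is only one of several conventions in use.

```python
import math


def data_driven_rates(temp_c, glucose):
    """Hypothetical stand-in for the ANN: maps CPPs to specific rates (illustrative units)."""
    mu = max(0.0, 0.03 + 0.002 * (temp_c - 37.0) + 0.001 * glucose)  # specific growth rate
    q_glc = 0.5 * mu if glucose > 0 else 0.0  # specific glucose consumption
    q_p = 0.01  # specific productivity
    return mu, q_glc, q_p


def simulate(vcc0, glucose0, titer0, temp_c, hours, dt=0.1):
    """Mechanistic mass balances, integrated with explicit Euler; returns hourly (VCC, titer)."""
    vcc, glucose, titer = vcc0, glucose0, titer0
    steps_per_hour = round(1 / dt)
    trajectory = [(vcc, titer)]
    for _ in range(hours):
        for _ in range(steps_per_hour):
            mu, q_glc, q_p = data_driven_rates(temp_c, glucose)
            vcc, glucose, titer = (
                vcc + dt * mu * vcc,
                max(0.0, glucose - dt * q_glc * vcc),
                titer + dt * q_p * vcc,
            )
        trajectory.append((vcc, titer))
    return trajectory


def nrmse(observed, predicted):
    """Root mean square error normalized by the range of the observations."""
    rmse = math.sqrt(sum((o - p) ** 2 for o, p in zip(observed, predicted)) / len(observed))
    return rmse / (max(observed) - min(observed))


# The same model, unchanged, is applied to a hypothetical larger-scale run;
# only the initial conditions and CPP settings differ.
prediction = simulate(vcc0=0.3, glucose0=6.0, titer0=0.0, temp_c=36.5, hours=48)
print(prediction[-1])
```

Because the data-driven block predicts intensive, per-cell rates rather than absolute amounts, the same block can in principle be reused at a different scale, which is the intuition behind applying the model without re-calibration. The predicted VCC and titer series would then be compared with the large-scale measurements using `nrmse`.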

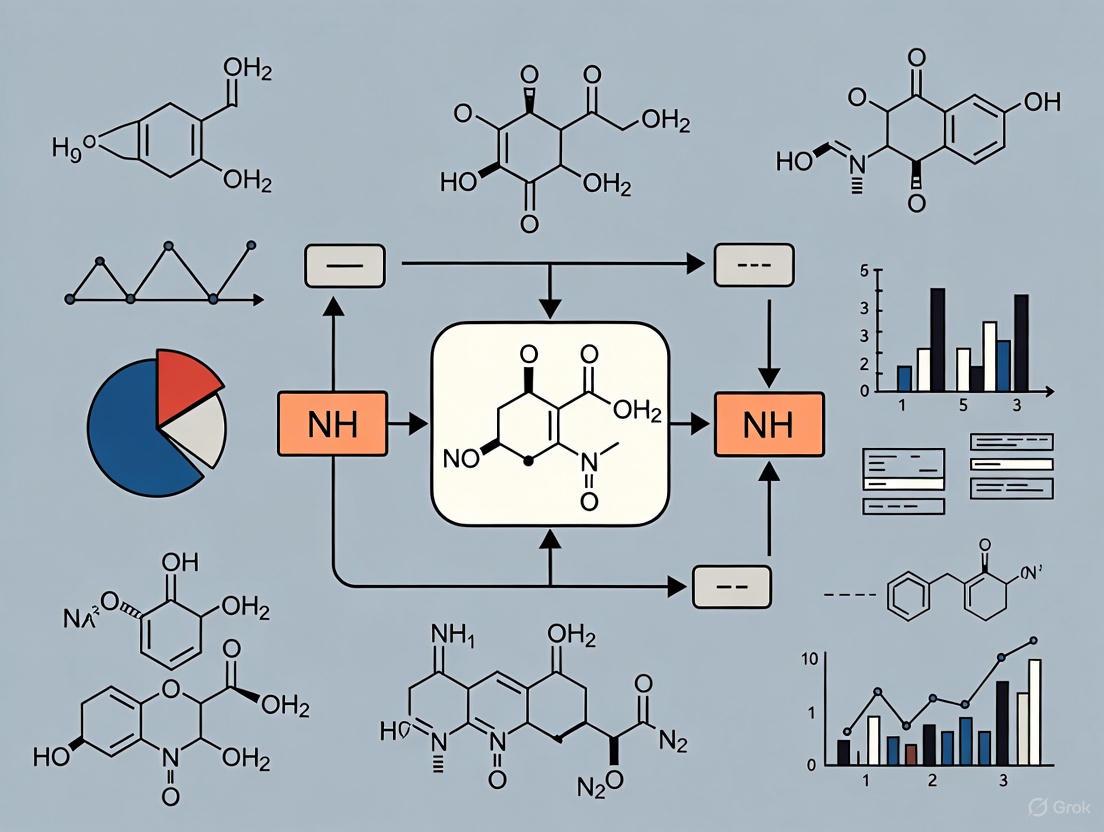

The workflow for this protocol is illustrated below:

Protocol 2: Systematic Pipeline Scanning for Translational Robustness

This protocol ensures models are robust across different patient datasets, moving beyond single-dataset optimization [3].

- Data Compilation: Gather relevant in vitro (e.g., cell line drug-screening data from GDSC) and in vivo (patient tumor) datasets.

- Pipeline Component Definition: Define multiple options for each step of the modeling pipeline:

- Cell Response Preprocessing: e.g., none, logarithm, power transform, k-means binarization.

- Homogenization/Batch Correction: e.g., none, RUV, ComBat, limma, YuGene.

- Feature Selection: e.g., all features, landmark genes, p-value filter.

- Feature Preprocessing: e.g., none, z-score, PCA.

- Algorithm: e.g., linear regression, lasso, elastic net, random forest (rf), SVM.

- Automated Pipeline Execution: Use a framework (e.g., the FORESEE R-package) to automatically train and evaluate models for all possible combinations of these components.

- Multi-Dataset Validation: Evaluate the performance (e.g., AUC of ROC) of every pipeline combination on multiple, independent patient datasets.

- Model Selection: Identify the pipeline that demonstrates consistently high performance across all validation datasets, rather than just performing best on one.
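The combinatorial scan can be sketched in Python with `itertools.product`. This is not the FORESEE API (FORESEE is an R package); it only shows how the option lists above multiply into candidate pipelines, plus one way to encode "consistently high performance": rank pipelines by their worst-case AUC across validation datasets, breaking ties by the mean. The per-dataset AUCs in the example are hypothetical; in practice each would come from training and evaluating that pipeline.

```python
from itertools import product

# Options mirror the example components listed above.
PIPELINE_OPTIONS = {
    "cell_response_preprocessing": ["none", "logarithm", "power transform", "k-means binarization"],
    "homogenization": ["none", "RUV", "ComBat", "limma", "YuGene"],
    "feature_selection": ["all features", "landmark genes", "p-value filter"],
    "feature_preprocessing": ["none", "z-score", "PCA"],
    "algorithm": ["linear regression", "lasso", "elastic net", "random forest", "SVM"],
}


def enumerate_pipelines(options):
    """Yield every combination of component choices as a dict."""
    keys = list(options)
    for combo in product(*(options[k] for k in keys)):
        yield dict(zip(keys, combo))


def select_robust(results):
    """Pick the pipeline with the best worst-case AUC across datasets, ties broken by mean AUC.

    `results` is a list of (pipeline_name, [auc_dataset_1, auc_dataset_2, ...]).
    """
    return max(results, key=lambda r: (min(r[1]), sum(r[1]) / len(r[1])))


pipelines = list(enumerate_pipelines(PIPELINE_OPTIONS))
print(len(pipelines))  # 900 = 4 * 5 * 3 * 3 * 5

# Hypothetical AUCs on three independent patient cohorts.
results = [
    ("pipeline_A", [0.99, 0.55, 0.60]),  # best on one cohort, poor elsewhere
    ("pipeline_B", [0.80, 0.78, 0.76]),  # consistently good
]
print(select_robust(results)[0])  # pipeline_B
```

Ranking by the worst case is a deliberately conservative choice; a mean alone could still favor a pipeline that excels on one cohort and fails on another.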

The following diagram maps the decision points in this systematic approach:

Quantitative Performance Data

The table below summarizes experimental results that demonstrate the impact of different strategies on model transferability.

Table 1: Quantifying Transferability in Experimental Models

| Model / Strategy | Source Context | Target Context | Performance Metric | Result | Key Insight |
|---|---|---|---|---|---|
| Hybrid Model with iDoE [6] | Shake Flask (300 mL) | 15 L Bioreactor (1:50 scale) | NRMSE (Viable Cell Density) | 10.92% | Combining mechanistic knowledge (hybrid) with information-rich data (iDoE) enables successful scale-up. |
| Hybrid Model with iDoE [6] | Shake Flask (300 mL) | 15 L Bioreactor (1:50 scale) | NRMSE (Product Titer) | 17.79% | |
| Systematic Pipeline Scan [3] | GDSC Cell Lines & Various Preprocessing | Breast Cancer Patients (GSE6434) | AUC of ROC | 0.986 (Best Pipeline) | Systematically testing modeling components, rather than relying on a single best practice, can yield near-perfect performance on a specific dataset. |
| Blockwise GNN Transfer [2] | Synthetic Network Data | Real-World Network Data | Mean Absolute Percentage Error (MAPE) | Reduction up to 88% | Transferring pre-trained model components and fine-tuning only specific layers with minimal real data dramatically improves performance. |

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Materials for Featured Experiments

| Research Reagent / Tool | Function / Explanation | Featured Use Case |
|---|---|---|
| CHO Cell Line | A recombinant mammalian cell line widely used for producing therapeutic proteins, such as monoclonal antibodies [6]. | Model organism for bioprocess development and scale-up studies [6]. |
| Chemically Defined Media (e.g., Dynamis AGT) | A cell culture medium with a known, consistent composition, ensuring process reproducibility and reducing variability in experimental outcomes [6]. | Provides a controlled nutritional environment for CHO cell cultivations in transferability studies [6]. |
| Feed Medium (e.g., CHO CD EfficientFeed) | A concentrated nutrient supplement added during the culture process to extend cell viability and increase protein production in fed-batch bioreactors [6]. | Used to manipulate Critical Process Parameters (CPPs) like glucose concentration in iDoE [6]. |
| RUV (Remove Unwanted Variation) | A computational homogenization method used to correct for non-biological technical differences between datasets, such as those from different labs or platforms [3]. | Bridges the batch-effect gap between in vitro cell line and in vivo patient genomic data in translational models [3]. |
| FORESEE R-Package | A software tool designed to systematically train and test different translational modeling pipelines, enabling unbiased benchmarking [3]. | Used for the systematic scan of 3,920 modeling pipelines to find robust predictors of patient drug response [3]. |


Troubleshooting Guide: Common Data Challenges in Pharma R&D

This guide addresses frequent data management problems encountered when moving from controlled research datasets to diverse, real-world data sources in pharmaceutical research and development.

Problem 1: How to Overcome Data Silos and Disorganization?

The Issue: Your organization's critical data is isolated across different departments, archives, and external partners, each with unique storage practices and naming conventions [7] [8]. This fragmentation makes it difficult to aggregate, analyze, and glean insights, ultimately slowing down research and drug discovery [7].

Solutions:

- Implement a Centralized Data Integration Platform: Use advanced platforms to consolidate data from multiple sources into a unified repository [9] [8].

- Adopt Interoperable Standards: Establish and enforce consistent data formats, nomenclature, and storage practices across the organization [7] [8].

- Encourage Cross-Functional Collaboration: Foster a culture of data sharing and break down territorial attitudes that lead to data being closely guarded [8].

Problem 2: How to Ensure Data Quality and Integrity in Multi-Source Data?

The Issue: Data sourced from myriad channels often suffers from inaccuracies, inconsistencies, and incompleteness. In an industry with high stakes, even minor discrepancies can profoundly impact drug efficacy, safety, and regulatory approvals [8].

Solutions:

- Employ Automated Data Validation: Implement robust data validation and verification processes to ensure accuracy and reliability [8].

- Utilize Data Governance Frameworks: Establish clear policies for data access, usage, and quality standards [9] [8].

- Leverage Specialized Tools: Use data management and analysis tools designed to enhance data quality and integrity [8]. For classification datasets, tools like `cleanlab` can automatically detect label issues across various data modalities [10].

Problem 3: How to Handle Massive Datasets with High Computational Demands?

The Issue: Pharma companies often work with petabytes of medical imaging and research data. Working with databases at this scale demands massive computational resources, typically cloud-scale infrastructure and hybrid environments [7].

Solutions:

- Implement Elastic Scaling: Utilize modern tools and infrastructure that can elastically scale to fit research needs quickly and cost-effectively [7].

- Optimize Data Processing: For large datasets with limited memory, use batched processing methods. With `cleanlab`, for instance, you can use `find_label_issues_batched()` to control memory usage by adjusting the `batch_size` parameter [10].

- Consider Rare Classes: For datasets with many classes, merge rare classes into a single "Other" category to improve processing efficiency without significantly affecting label error detection accuracy [10].
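Merging rare classes is simple to do before running label-issue detection. The Python sketch below (standard library only; the `min_count` threshold, the "Other" name, and the toxicity labels are assumptions to adapt per dataset) replaces classes with fewer than `min_count` examples by a single "Other" class and then re-encodes the labels as integers in {0, ..., K-1}, the format `cleanlab` expects.

```python
from collections import Counter


def merge_rare_classes(labels, min_count, other_label="Other"):
    """Replace labels seen fewer than `min_count` times with a shared `other_label`."""
    counts = Counter(labels)
    return [label if counts[label] >= min_count else other_label for label in labels]


def encode_labels(labels):
    """Map class names to integers 0..K-1 (sorted order) and return the class list."""
    classes = sorted(set(labels))
    index = {name: i for i, name in enumerate(classes)}
    return [index[label] for label in labels], classes


raw = ["hepatotoxic", "hepatotoxic", "cardiotoxic", "nephrotoxic", "hepatotoxic", "cardiotoxic"]
merged = merge_rare_classes(raw, min_count=2)
encoded, classes = encode_labels(merged)
print(classes, encoded)  # ['Other', 'cardiotoxic', 'hepatotoxic'] [2, 2, 1, 0, 2, 1]
```

Keep the returned `classes` list so that flagged examples can be mapped back to their original class names during review.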

Problem 4: How to Maintain Compliance in Collaborative Environments?

The Issue: Research organizations must meet stringent regulatory requirements when using sensitive biomedical data, especially with the increase in remote work and far-flung collaborations. Data privacy regulations like HIPAA and GDPR set strict standards for data handling [7] [8].

Solutions:

- Centralize on Secure Platforms: Use shared, secure, and compliant platforms to keep projects moving while maintaining vigilance over sensitive information [7].

- Conduct Regular Security Audits: Perform frequent security audits and risk assessments to identify and address vulnerabilities [8].

- Implement Robust Cybersecurity Measures: Apply encryption, multi-factor authentication, and secure access controls to protect sensitive data [8].

Data Integration Methods Comparison

Table 3: Comparison of Data Integration Approaches for Multi-Source Data

| Method | Handled By | Data Cleaning | Source Requirements | Best Use Cases |
|---|---|---|---|---|
| Data Integration [11] | IT Teams | Before output | No same-source requirement | Comprehensive, systemic consolidation into standardized formats |
| Data Blending [11] | End Users | After output | No same-source requirement | Combining native data from multiple sources for specific analyses |
| Data Joining [11] | End Users | After output | Same source required | Combining datasets from the same system with overlapping columns |

Research Reagent Solutions: Data Management Tools

Table 4: Essential Solutions for Multi-Source Data Challenges

| Solution Type | Function | Example Applications |
|---|---|---|
| Centralized Data Warehouses [9] [11] | Consolidated repositories for structured data from multiple sources | Creating a single source of truth for inventory levels and customer data |
| Data Lakes [9] [11] | Storage systems handling large volumes of structured and unstructured data | Combining diverse data types (EHR, lab systems, imaging) for comprehensive analysis |
| Entity Resolution Tools [12] | Identify and merge records referring to the same real-world entity | Resolving varying representations of the same entity across multiple data providers |
| Truth Discovery Systems [12] | Resolve attribute value conflicts by evaluating source reliability | Determining correct values when different sources provide conflicting information |
| Automated Data Cleansing Tools [10] | Detect and correct label issues and inconsistencies in datasets | Improving data quality for ML model training in classification tasks |

Experimental Protocols for Data Quality Assessment

Protocol 1: Entity Resolution for Multi-Source Data Integration

Objective: Resolve entity information overlapping across multiple data sources [12].

Methodology:

- Blocking: Divide records into blocks to avoid comparing every record with all others. Use standard blocking, sorted blocking, or token blocking techniques [12].

- Matching: Determine whether two representations refer to the same real-world entity using schema-aware or schema-agnostic methods [12].

- Knowledge Integration: Incorporate external knowledge bases, rules, or constraints to improve matching accuracy where applicable [12].
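A minimal, schema-agnostic version of the blocking and matching steps is sketched below in Python. Token blocking places two records in the same block if they share any token; blocks larger than `max_block_size` are skipped because very common tokens carry little signal; and candidate pairs are matched by the Jaccard similarity of their token sets. The records, threshold, and block-size limit are hypothetical, and the knowledge-integration step is omitted.

```python
import re
from collections import defaultdict
from itertools import combinations


def tokens(record):
    """Schema-agnostic: lower-cased alphanumeric tokens drawn from every attribute value."""
    text = " ".join(str(value) for value in record.values()).lower()
    return set(re.findall(r"[a-z0-9]+", text))


def token_blocking(records):
    """Map each token to the set of record ids containing it."""
    blocks = defaultdict(set)
    for rid, record in records.items():
        for token in tokens(record):
            blocks[token].add(rid)
    return blocks


def candidate_pairs(blocks, max_block_size=50):
    """Compare records only within blocks; skip singleton and overly large blocks."""
    pairs = set()
    for ids in blocks.values():
        if 1 < len(ids) <= max_block_size:
            pairs.update(combinations(sorted(ids), 2))
    return pairs


def jaccard(a, b):
    return len(a & b) / len(a | b) if a | b else 0.0


def resolve(records, threshold=0.5, max_block_size=50):
    """Return sorted pairs of record ids judged to refer to the same entity."""
    blocks = token_blocking(records)
    return [
        (a, b)
        for a, b in sorted(candidate_pairs(blocks, max_block_size))
        if jaccard(tokens(records[a]), tokens(records[b])) >= threshold
    ]


# Two sources with different schemas ("name"/"supplier" vs "compound"/"vendor").
records = {
    "src1_001": {"name": "Acetylsalicylic acid", "supplier": "Vendor A"},
    "src2_417": {"compound": "acetylsalicylic-acid", "vendor": "VENDOR A"},
    "src2_418": {"compound": "Ibuprofen", "vendor": "Vendor B"},
}
print(resolve(records))  # [('src1_001', 'src2_417')]
```

Blocking is what keeps this tractable: only records sharing a token are ever compared, instead of all pairs.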

Entity Resolution Workflow

Protocol 2: Label Quality Assessment for Machine Learning Readiness

Objective: Detect and address label issues in classification datasets to improve model performance and transferability [10].

Methodology:

- Data Preparation: Format labels as integer-encoded values in the range {0,1,...,K-1} where K is the number of classes [10].

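- Issue Detection: Choose a detection method suited to the dataset size; for datasets too large to fit in memory, use a batched method such as `cleanlab`'s `find_label_issues_batched()` [10].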

- Label Correction: For training data, auto-correct or manually review flagged issues. For test data, manually review flagged issues to ensure reliable evaluation [10].
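For intuition about what label-issue detection does, the Python below sketches a simplified version of the confident-learning idea that `cleanlab` builds on; it is not `cleanlab`'s implementation or API. Given integer labels and out-of-sample predicted probabilities (for example, from cross-validation), it sets a per-class threshold equal to the average predicted probability of that class among examples labeled with it, and flags an example when the most probable class that clears its threshold differs from the given label. The data are hypothetical.

```python
def class_thresholds(labels, pred_probs, num_classes):
    """Per-class threshold: mean predicted probability of class k over examples labeled k."""
    thresholds = []
    for k in range(num_classes):
        probs = [p[k] for y, p in zip(labels, pred_probs) if y == k]
        # Assumption: a class with no labeled examples gets the strictest threshold.
        thresholds.append(sum(probs) / len(probs) if probs else 1.0)
    return thresholds


def flag_label_issues(labels, pred_probs):
    """Return indices whose confidently predicted class differs from the given label."""
    num_classes = len(pred_probs[0])
    thresholds = class_thresholds(labels, pred_probs, num_classes)
    flagged = []
    for i, (y, p) in enumerate(zip(labels, pred_probs)):
        confident = [k for k in range(num_classes) if p[k] >= thresholds[k]]
        if confident:
            predicted = max(confident, key=lambda k: p[k])
            if predicted != y:
                flagged.append(i)
    return flagged


labels = [0, 0, 0, 1, 1, 1]
pred_probs = [
    [0.90, 0.10],
    [0.80, 0.20],
    [0.10, 0.90],  # labeled 0, but the model is confident it is class 1
    [0.10, 0.90],
    [0.15, 0.85],
    [0.30, 0.70],
]
print(flag_label_issues(labels, pred_probs))  # [2]
```

Flagged indices are candidates for review, not verdicts: in line with the step above, training labels might be auto-corrected, while test labels should be checked by hand.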

Label Quality Assessment Process

Frequently Asked Questions

Effective integration of data from multiple sources requires a systematic approach [11]:

- Identify the need for combined data and relevant sources

- Extract data from sources in native format

- Transform data through normalization, removal of duplicates, and correction of inaccuracies

- Load data into analytics applications or business intelligence systems

Automating as much of the cleansing process as possible is crucial for efficiency and for enabling self-service data access [11].

What are the specific data quality issues in multi-source data compared to single-source data?

Multi-source data introduces three specific quality challenges [12]:

- Entity Information Overlapping: Different representations of the same entity across sources, requiring entity resolution

- Attribute Value Conflicts: Conflicting observations for the same attribute across different sources, requiring truth discovery

- Attribute Value Inconsistencies: Invalid or incomplete attribute values across different entities, requiring integrity constraints for detection and repair

How should we handle label errors in both training and test datasets?

For the most reliable model training and evaluation [10]:

- Merge training and test sets into one larger dataset

- Use cross-validation training to detect label issues across the combined dataset

- For training data: Auto-correcting labels is acceptable and should improve ML performance

- For test data: Manually fix labels via careful review of flagged issues to ensure evaluation reliability

Tools like Cleanlab Studio can help efficiently fix label issues in large datasets [10].

What computational strategies work for large-scale data problems in drug discovery?

For petabyte-scale datasets common in pharmaceutical research [7]:

- Use hybrid environments combining cloud-scale resources with high-performance compute clusters

- Implement elastic scaling to quickly and cost-effectively meet fluctuating computational demands

- Employ batched processing for large datasets with limited memory, processing data in manageable chunks

- Consider algorithmic workflows that can be pipelined together, using the output of one process as input to the next
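The last two points can be combined with Python generators: data are read in fixed-size batches so that only one batch is held in memory at a time, and each stage consumes the previous stage's output as a stream. The stage functions, the `range` data source, and the batch size below are hypothetical placeholders for real extraction, normalization, and aggregation steps.

```python
from itertools import islice


def batched(iterable, batch_size):
    """Yield lists of at most `batch_size` items without materializing the whole input."""
    iterator = iter(iterable)
    while batch := list(islice(iterator, batch_size)):
        yield batch


def normalize(batches, scale):
    """Stage 1 (hypothetical): rescale each value; consumes and yields batches lazily."""
    for batch in batches:
        yield [value / scale for value in batch]


def running_mean(batches):
    """Stage 2: aggregate across batches while keeping only running totals in memory."""
    total, count = 0.0, 0
    for batch in batches:
        total += sum(batch)
        count += len(batch)
    return total / count


# range() stands in for a large on-disk source; stages are chained output-to-input.
print(running_mean(normalize(batched(range(101), batch_size=10), scale=100.0)))
```

The walrus operator requires Python 3.8 or later; Python 3.12 also ships `itertools.batched`, which yields tuples instead of lists.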

How can we boost model transferability from clean lab data to messy real-world data?

Approaches that can enhance model transferability include [13] [14]:

- Data Augmentation: Using transformations in both spatial and frequency domains to increase data diversity

- Adversarial Training: Leveraging adversarial examples to reveal model weaknesses and enhance robustness

- Feature Perturbation: Strategically perturbing consistent features across modalities to improve generalization

- Multi-Domain Training: Incorporating data from various domains and conditions during model training

Troubleshooting Guides

Guide 1: Diagnosing Model Performance Decay in Production

Symptom: Your model's predictive accuracy or performance metrics are degrading over time, despite functioning correctly initially.

| Potential Cause | Diagnostic Check | Immediate Action | Long-term Solution |
|---|---|---|---|
| Gradual Concept Drift [15] | Monitor model accuracy or error rate over time using control charts [16]. | Retrain the model on the most recent data [17]. | Implement a continuous learning pipeline with periodic retraining [15]. |
| Sudden Concept Drift [15] | Use drift detection methods (e.g., DDM, ADWIN) to identify abrupt changes in data statistics [16]. | Trigger a model retraining alert and investigate external events (e.g., market changes, new regulations) [15]. | Develop a model rollback strategy to quickly revert to a previous stable version. |
| Data (Covariate) Shift [18] | Compare the distribution of input features in live data versus the training data (e.g., using Population Stability Index) [18]. | Evaluate if the model is still calibrated on the new input distribution [18]. | Employ domain adaptation techniques or source data from a more representative sample [5]. |
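The Population Stability Index mentioned in the table compares binned feature distributions: PSI = Σᵢ (aᵢ − eᵢ) · ln(aᵢ / eᵢ), where eᵢ and aᵢ are the fractions of training ("expected") and live ("actual") values in bin i. The Python sketch below uses equal-width bins over the training range and a small floor to avoid log(0); both choices, and the example data, are assumptions (quantile bins are also common). A frequently quoted rule of thumb reads PSI below 0.1 as little shift and above 0.25 as a major shift, but thresholds should be validated for each use case.

```python
import math
from bisect import bisect_right


def psi(expected, actual, bins=10, floor=1e-6):
    """Population Stability Index of `actual` relative to `expected` (training) values."""
    lo, hi = min(expected), max(expected)
    # Interior edges of equal-width bins over the training range; values outside
    # the range fall into the first or last bin.
    edges = [lo + (hi - lo) * i / bins for i in range(1, bins)]

    def fractions(values):
        counts = [0] * bins
        for v in values:
            counts[bisect_right(edges, v)] += 1
        return [max(c / len(values), floor) for c in counts]

    e, a = fractions(expected), fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


train_feature = [i / 100 for i in range(100)]         # hypothetical training values
live_feature = [0.9 + i / 1000 for i in range(100)]   # hypothetical shifted live values
print(psi(train_feature, train_feature), psi(train_feature, live_feature))
```

Identical distributions give a PSI of zero, while the shifted live feature, which falls entirely into the top bin, gives a value far above 0.25.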

Guide 2: Addressing Data Integrity and Access Issues

Symptom: Inability to access, integrate, or trust the data needed for model training or inference.

| Potential Cause | Diagnostic Check | Immediate Action | Long-term Solution |
|---|---|---|---|
| Siloed or Disparate Data [19] | Audit the number and accessibility of data sources required for your research. | Use a centralized data platform to aggregate sources, if available [19]. | Advocate for and invest in integrated data infrastructure and shared data schemas [19]. |
| Data Quality Decay [16] | Check for "data corrosion," "data loss," or schema inconsistencies in incoming data [16]. | Implement data validation rules at the point of entry. | Establish robust data governance and quality monitoring frameworks. |
| Limited Data Availability [19] | Identify if required data is behind paywalls, has privacy restrictions, or simply doesn't exist [19]. | Explore alternative data sources or synthetic data generation. | Build partnerships for data sharing and advocate for open data initiatives where appropriate. |

Guide 3: Navigating the Regulatory and Ethical Landscape

Symptom: Projects are delayed or halted due to compliance issues, ethical concerns, or institutional barriers.

| Challenge Area | Key Questions for Self-Assessment | Risk Mitigation Strategy |
|---|---|---|
| Data Privacy & Security [20] | Have we obtained proper consent for data use? Are we compliant with regulations like HIPAA? [20] | Anonymize patient data and implement strict access controls [20]. |
| Algorithmic Bias & Fairness [19] | Does our training data represent the target population? Could the model yield unfair outcomes? | Use a centralized AI platform to reduce human selection bias and perform rigorous bias audits [19]. |
| Regulatory Hurdles [21] | Have we engaged with regulators early? Is our validation process rigorous and documented? | Proactively engage with regulatory bodies and design studies with regulatory requirements in mind. |

Frequently Asked Questions (FAQs)

Q1: What is the fundamental difference between concept drift and data drift?

A: Concept drift is a change in the underlying relationship between your input data (features) and the target variable you are predicting [15]. For example, the characteristics of a spam email change over time, even if the definition of "spam" does not. Data drift (or covariate shift) is a change in the distribution of the input features themselves, while the relationship to the target remains unchanged [18]. An example is your model seeing more users from a new geographic region than it was trained on [15].

Q2: How can I detect concept drift if I don't have immediate access to true labels in production?

A: While monitoring the actual model error rate is the most direct method, it's often not feasible due to label lag. In such cases, you can use proxy methods [15]:

- Prediction Drift: Monitor the distribution of the model's predictions. A significant shift can indicate concept drift [15].

- Data Drift: Analyze shifts in the input feature distributions, as concept drift often coincides with data drift [16] [15].

- Domain-specific Heuristics: Use business logic as a proxy. For instance, a sudden drop in the click-through rate for a recommendation model can signal performance decay [15].

Q3: Our model is performing well, but we are concerned about regulatory approval. What are key considerations for drug development research?

A: For healthcare and drug development, focus on:

- Transparency and Explainability: Be prepared to explain how your model works and why it makes certain predictions [20].

- Robust Validation: Go beyond standard accuracy metrics. Perform rigorous internal and external validation to ensure generalizability [20].

- Data Provenance: Maintain clear records of your data sources, how the data was processed, and any transformations applied [20].

- Ethical Data Sourcing: Ensure patient data is anonymized and used in compliance with all relevant regulations and with proper consent [20].

Q4: We have a small dataset for our specific task. How can we leverage transfer learning effectively while avoiding negative transfer?

A:

- Task Similarity is Key: Transfer learning works best when the source and target tasks are related [5]. Using a model trained on natural images for medical imaging is more likely to succeed than using one trained on text.

- Fine-Tuning: Don't just use the pre-trained model as a static feature extractor. Fine-tune the later layers of the model on your small, domain-specific dataset to adapt the learned features [5].

- Validate Rigorously: To avoid negative transfer (where the source knowledge harms performance), always validate the transferred model's performance on a held-out test set from your target domain and compare it to a baseline [5].

The following table summarizes key metrics and thresholds for common drift detection methods.

| Method Name | Type | Key Metric Monitored | Typical Thresholds/Actions |
|---|---|---|---|
| DDM (Drift Detection Method) | Online, Supervised | Model error rate | Warning when p + s ≥ p_min + 2·s_min; drift when p + s ≥ p_min + 3·s_min, where p is the running error rate, s its standard deviation, and p_min, s_min the values recorded where p + s was lowest |
| EDDM (Early DDM) | Online, Supervised | Distance between classification errors | Tracks the average distance between consecutive errors; designed to detect slow, gradual drift better than DDM. |
| ADWIN (ADaptive WINdowing) | Windowing-based | Mean of a monitored stream (e.g., error rate) within a window | Dynamically adjusts window size, dropping older data when two sub-windows have significantly different means. |
| KSWIN (Kolmogorov-Smirnov WINdowing) | Windowing-based | Statistical distribution | Uses the KS test to compare the distribution of recent data against a reference window. |
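To make the DDM thresholds concrete, here is a minimal Python sketch of the method as it is usually described: it tracks the running error rate p and its standard deviation s = sqrt(p(1 − p)/n), remembers the p_min and s_min at which p + s was lowest, and signals a warning at p + s ≥ p_min + 2·s_min and drift at p + s ≥ p_min + 3·s_min. The 30-sample warm-up and the simulated error stream are assumptions; maintained library implementations are preferable in production.

```python
import math


class DDM:
    """Minimal Drift Detection Method on a stream of 0/1 prediction errors."""

    def __init__(self, min_samples=30, warning_level=2.0, drift_level=3.0):
        self.min_samples = min_samples
        self.warning_level = warning_level
        self.drift_level = drift_level
        self.reset()

    def reset(self):
        self.n = 0
        self.p = 0.0  # running error rate
        self.p_min = math.inf
        self.s_min = math.inf

    def update(self, error):
        """Add one observation (1 = misclassified, 0 = correct); return the detector state."""
        self.n += 1
        self.p += (error - self.p) / self.n
        s = math.sqrt(self.p * (1 - self.p) / self.n)
        if self.n < self.min_samples:
            return "stable"
        if self.p + s < self.p_min + self.s_min:
            self.p_min, self.s_min = self.p, s
        if self.p + s >= self.p_min + self.drift_level * self.s_min:
            self.reset()  # start learning the new concept from scratch
            return "drift"
        if self.p + s >= self.p_min + self.warning_level * self.s_min:
            return "warning"
        return "stable"


# Simulated stream: a 10% error rate for 100 predictions, then every prediction wrong.
detector = DDM()
before = [detector.update(1 if i % 10 == 9 else 0) for i in range(100)]
after = [detector.update(1) for _ in range(20)]
print(before.count("stable"), after.index("warning"), after.index("drift"))  # 100 3 8
```

In this simulated stream the warning level is reached a few errors before the drift level, which leaves time to prepare retraining data before the alarm fires.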

Experimental Protocols

Protocol 1: Implementing a Concept Drift Detection Framework using ADWIN

Objective: To proactively detect concept drift in a live model using the Adaptive Windowing (ADWIN) algorithm.

Materials:

- A trained machine learning model in production.

- A stream of incoming feature data and, when available, ground truth labels.

- An implementation of the ADWIN algorithm (available in libraries like `scikit-multiflow`).

Methodology:

- Initialization: Configure the ADWIN detector with a chosen significance level (e.g., δ = 0.002) and an initial window size [16].

- Stream Processing: For each new data instance (or batch) that the model processes: a. Record the model's prediction and, if available, the true label to calculate the prediction error. b. Input the error value (or a relevant data statistic) into the ADWIN algorithm.

- Drift Detection: ADWIN maintains a window of recent data. It continuously checks whether the average of a sub-window of recent data differs significantly from the rest of the window.

- Alerting: If a significant change is detected, ADWIN triggers a drift alarm.

- Response: Upon alarm, initiate a pre-defined workflow: log the event, trigger model retraining on recent data, and/or alert the engineering team [16].
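The cut test at the heart of ADWIN can be sketched in a few lines. This is a naive, quadratic-time version that stores the whole window and tests every split point with the bound ε_cut = sqrt(ln(4/δ') / (2m)), where m = 1 / (1/n0 + 1/n1) and δ' = δ/n. The published algorithm compresses the window with exponential histograms to keep memory and time logarithmic. The class NaiveADWIN and its method names are hypothetical. For production use, a maintained implementation such as the one in scikit-multiflow is preferable.

```python
import math
from collections import deque


class NaiveADWIN:
    """Quadratic-time sketch of ADWIN's cut test for a stream of values in [0, 1]."""

    def __init__(self, delta: float = 0.002):
        self.delta = delta       # confidence parameter
        self.window = deque()    # full window; real ADWIN compresses it into buckets

    def _cut_found(self) -> bool:
        values = list(self.window)
        n = len(values)
        total = sum(values)
        delta_prime = self.delta / n
        older_sum = 0.0
        # Try every split into an older sub-window W0 (first n0 items) and a newer W1.
        for n0 in range(1, n):
            older_sum += values[n0 - 1]
            n1 = n - n0
            mu0 = older_sum / n0
            mu1 = (total - older_sum) / n1
            m = 1.0 / (1.0 / n0 + 1.0 / n1)
            eps_cut = math.sqrt(math.log(4.0 / delta_prime) / (2.0 * m))
            if abs(mu0 - mu1) >= eps_cut:
                return True
        return False

    def update(self, value: float) -> bool:
        """Add one value (e.g., a 0/1 prediction error); return True if drift was detected."""
        self.window.append(value)
        drift = False
        # Drop the oldest items until no split shows a significant change in mean.
        while len(self.window) > 1 and self._cut_found():
            self.window.popleft()
            drift = True
        return drift


# Hypothetical error stream: 10% errors, then 60% errors.
stable = [1.0 if i % 10 == 0 else 0.0 for i in range(300)]
shifted = [1.0 if i % 10 < 6 else 0.0 for i in range(300)]
detector = NaiveADWIN(delta=0.002)
flags = [detector.update(v) for v in stable + shifted]
```

When drift is flagged, the shrunken window holds mostly post-change data. This makes it a natural training slice for the retraining step described under Response.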

Diagram: ADWIN Drift Detection Workflow

Protocol 2: Evaluating Model Transferability with Domain Adaptation

Objective: To systematically assess and improve a model's performance when applied from a source domain (e.g., general patient population) to a target domain (e.g., a specific sub-population).

Materials:

- Labeled dataset from the source domain.

- (Possibly smaller) labeled dataset from the target domain for evaluation.

- A pre-trained model on the source domain.

Methodology:

- Baseline Performance: Evaluate the pre-trained source model directly on the held-out test set from the target domain. Record key performance metrics (e.g., AUC, F1-Score). This is your transferability baseline [5].

- Fine-Tuning: Take the pre-trained model and continue training (fine-tune) it on a small, labeled portion of the target domain data. Use techniques like a lower learning rate and/or freezing the initial layers to avoid catastrophic forgetting [5].

- Performance Comparison: Evaluate the fine-tuned model on the target domain test set. Compare the results to the baseline.

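- Analysis: Calculate the performance delta. A significant improvement over the baseline indicates successful transfer learning. If the fine-tuned model performs worse than the baseline, or worse than a model trained from scratch on the target data alone, this may indicate "negative transfer," suggesting the domains are too dissimilar [5].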
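Once each model has scored the target test set, the comparison in this protocol reduces to a few numbers. The sketch below computes ROC AUC with the rank-based (Mann-Whitney) formula in pure Python and then classifies the outcome. The function names, the min_gain tolerance of 0.01, and the from-scratch comparison model are illustrative assumptions. Including a from-scratch model helps separate negative transfer from a target task that is simply hard.

```python
from typing import Sequence


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Probability that a random positive outranks a random negative (ties count 0.5)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = sum(1.0 if p > q else 0.5 if p == q else 0.0 for p in pos for q in neg)
    return wins / (len(pos) * len(neg))


def assess_transfer(labels, baseline_scores, finetuned_scores, scratch_scores,
                    min_gain: float = 0.01):
    """Compare a fine-tuned model with the zero-shot baseline and a from-scratch model."""
    auc = {
        "baseline": roc_auc(labels, baseline_scores),
        "finetuned": roc_auc(labels, finetuned_scores),
        "scratch": roc_auc(labels, scratch_scores),
    }
    delta = auc["finetuned"] - auc["baseline"]
    if auc["finetuned"] < auc["scratch"]:
        verdict = "negative transfer"   # transferred knowledge hurts vs. no transfer
    elif delta >= min_gain:
        verdict = "successful transfer"
    else:
        verdict = "no meaningful gain"
    return auc, delta, verdict


labels = [0, 0, 1, 1]
auc, delta, verdict = assess_transfer(labels,
                                      baseline_scores=[0.4, 0.6, 0.5, 0.7],
                                      finetuned_scores=[0.1, 0.2, 0.8, 0.9],
                                      scratch_scores=[0.3, 0.5, 0.4, 0.6])
```

In practice, a bootstrap confidence interval on the AUC difference helps decide whether a delta is "significant" rather than noise.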

Diagram: Model Transferability Evaluation

The Scientist's Toolkit

Table 2: Key Research Reagent Solutions for Robust ML Research

| Tool / Reagent | Function / Purpose | Example Use-Case |
|---|---|---|
| Evidently AI [15] | An open-source library for monitoring and debugging ML models. | Calculating prediction drift and data drift metrics in a production environment. |
| TensorFlow / PyTorch [5] | Core frameworks for building, training, and deploying ML models. | Implementing and fine-tuning pre-trained models for transfer learning tasks. |
| Hugging Face [5] | A platform hosting thousands of pre-trained models, primarily for NLP. | Quickly prototyping a text classification model by fine-tuning a pre-trained BERT model. |
| ADWIN Algorithm [16] | A drift detection algorithm that adaptively adjusts its window size. | Detecting gradual concept drift in a continuous data stream without pre-defining a window size. |
| Centralized Data Platform [19] | A unified system (e.g., AlphaSense, internal data lakes) to aggregate disparate data sources. | Solving the challenge of siloed content and ensuring a 360-degree view of research data. |


Troubleshooting Guide: Common Model Alignment and Transferability Issues

This guide addresses frequent challenges in applying the 'Fit-for-Purpose' mindset to model development for drug research.

| Problem Area | Common Symptoms | Potential Root Causes | Recommended Solutions |
|---|---|---|---|
| Negative Transfer | Model performs worse on the target task than training from scratch; poor generalization to new data [5]. | Source and target domains are too dissimilar; inadequate feature space overlap [5]. | Conduct thorough task and domain similarity analysis before transfer; use domain adaptation techniques [5]. |
| Data Scarcity | High variance in model performance; failure to converge on the target task [22]. | Limited labeled data for novel drug targets or rare diseases; costly and time-consuming experimental data generation [22]. | Leverage pre-trained language models (PLMs) and self-supervised learning; employ data augmentation and synthetic data generation [22] [5]. |
| Model Misalignment with Context of Use (COU) | Model behaves undesirably in specific contexts; violates regulatory or business guidelines [23]. | Lack of alignment to particular contextual regulations, social norms, or organizational values [23]. | Implement contextual alignment frameworks like Alignment Studio for fine-tuning on policy documents and specific regulations [23]. |
| Multi-Modal Fusion Challenges | Inability to leverage complementary data types (e.g., graph + sequence); model fails to capture complex interactions [22]. | Treating modalities separately; lack of effective cross-modal attention mechanisms [22]. | Implement advanced fusion modules like co-attention and paired multi-modal attention to capture cross-modal interactions [22]. |
| Overfitting on Small Datasets | High accuracy on training data but poor performance on validation/test data during fine-tuning [5]. | Fine-tuning a complex pre-trained model on a small, domain-specific dataset [5]. | Apply regularization (L1, L2, dropout); fine-tune only the last few layers; use progressive unfreezing of layers [5]. |

Frequently Asked Questions (FAQs)

Q1: How does the 'Fit-for-Purpose' model differ from traditional machine learning development? The 'Fit-for-Purpose' model, as a framework for Better Business, emphasizes that all building blocks of a project—Why (purpose), What (value proposition), Whom (stakeholders), Where (operational context), and How (operating practices)—must be coherently aligned [24]. In machine learning, this translates to ensuring that the model's design, data, and deployment environment are all intentionally aligned with the specific Context of Use, rather than just optimizing for a generic accuracy metric.

Q2: What is negative transfer and how can we avoid it in transfer learning? Negative transfer occurs when knowledge from a source task adversely affects performance on a related target task [5]. To avoid it:

- Analyze Domain Similarity: Carefully evaluate the relevance of the source model's pre-training data to your target domain [5].

- Validate First: Test the pre-trained model on a small, representative sample of your target data before committing to a full implementation [5].

- Use Transferability Metrics: Employ transferability estimation metrics (e.g., LEEP or LogME) to predict how well a source model will transfer before committing to fine-tuning.
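One widely used transferability metric is LEEP (Log Expected Empirical Prediction). It needs only the source model's softmax outputs on labeled target examples and requires no fine-tuning. The minimal pure-Python sketch below assumes two inputs: source_probs[i] is the source model's probability vector over its own source classes for target example i, and target_labels[i] is an integer target class. Higher (less negative) scores suggest better expected transfer. The score is best used to rank candidate source models, not as an absolute accuracy estimate.

```python
import math
from typing import Sequence


def leep(source_probs: Sequence[Sequence[float]], target_labels: Sequence[int]) -> float:
    """Average log-likelihood of target labels under the empirical P(y | z)
    composed with the source model's predicted distribution over source labels z."""
    n = len(source_probs)
    n_source = len(source_probs[0])
    n_target = max(target_labels) + 1
    # Empirical joint distribution P(y, z) of target label y and source label z.
    joint = [[0.0] * n_source for _ in range(n_target)]
    for probs, y in zip(source_probs, target_labels):
        for z, p in enumerate(probs):
            joint[y][z] += p / n
    # Marginal P(z) and conditional P(y | z).
    marginal = [sum(joint[y][z] for y in range(n_target)) for z in range(n_source)]
    cond = [[joint[y][z] / marginal[z] if marginal[z] > 0 else 0.0
             for z in range(n_source)] for y in range(n_target)]
    # Log of the expected empirical prediction for each example's true label.
    total = 0.0
    for probs, y in zip(source_probs, target_labels):
        total += math.log(sum(cond[y][z] * p for z, p in enumerate(probs)))
    return total / n


informative = leep([[1, 0], [1, 0], [0, 1], [0, 1]], [0, 0, 1, 1])
uninformative = leep([[0.5, 0.5]] * 4, [0, 0, 1, 1])
```
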

Q3: Our organization has specific guidelines (e.g., Business Conduct Guidelines, or BCGs). How can we align a model to them? Aligning models to particular contextual regulations requires a principled approach. One method is an Alignment Studio architecture, which uses three components [23]:

- Framers: Process natural language policy documents to create fine-tuning data and scenarios.

- Instructors: Fine-tune the model using the generated data to instill the desired behaviors.

- Auditors: Use human and automated red-teaming to evaluate if the model has successfully learned the guidelines [23].

Q4: What strategies can improve the transferability of research findings to real-world industry settings? To enhance transferability, research should be designed for applicability in different contexts [25].

- Engage Stakeholders Early: Involve industry partners and end-users in the research process to ensure relevance and feasibility [25].

- Communicate Clearly: Share findings in an accessible manner for various audiences, not just academic peers [25].

- Conduct Multi-Site Trials: Perform pilot studies in multiple settings to test the robustness and adaptability of the model or method [25].

Q5: When should we consider using Small Language Models (SLMs) over Large Language Models (LLMs) in AI agents? For many industry applications, SLMs (models under ~10B parameters) are a strategic fit-for-purpose choice [26]. Consider SLMs when your tasks are narrow and repetitive (e.g., parsing commands, calling APIs, generating structured outputs), as they offer 10–30x lower inference cost and latency while matching the performance of last-generation LLMs on specific benchmarks [26].

Experimental Protocol: Implementing a 'Fit-for-Purpose' Multi-Modal Model

The following workflow details the methodology for frameworks like DrugLAMP, which integrates multiple data modalities for accurate and transferable Drug-Target Interaction (DTI) prediction [22].

Multi-Modal Model Workflow

1. Data Preparation & Input Modalities:

- Drug Data: Collect and preprocess molecular structures represented as SMILES strings and/or molecular graphs [22].

- Target Data: Collect and preprocess protein sequences and, if available, 3D protein structure or pocket information [22].

2. Feature Extraction with Pre-trained Models:

- Utilize Pre-trained Language Models (PLMs) to encode the sequential data (SMILES strings and protein sequences). This leverages the PLMs' ability to capture rich, contextual representations from vast unlabeled datasets [22].

- Use Graph Neural Networks (GNNs) or other feature extractors to process the molecular graph data [22].

3. Multi-Modal Fusion:

- Implement fusion modules to integrate the extracted features from different modalities. Key techniques include:

- Pocket-Guided Co-Attention (PGCA): Uses protein pocket information to guide the attention mechanism on drug features, focusing on the most relevant molecular substructures [22].

- Paired Multi-Modal Attention (PMMA): Enables effective cross-modal interactions between drug and protein features, allowing the model to learn complex, interdependent representations [22].

4. Contrastive Pre-training (2C2P Module):

- Perform contrastive compound-protein pre-training on large, unlabeled datasets. This step enhances the model's generalization and transferability by aligning features across modalities and conditions, which is crucial for predicting interactions for novel drugs or targets [22].

5. Supervised Fine-Tuning:

- Finally, fine-tune the entire model on a labeled DTI dataset for the specific prediction task (e.g., binding affinity classification). This adapts the generally pre-trained model to the precise "purpose" of the research question [22].
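The internals of the 2C2P module are not specified here, so the sketch below illustrates only the generic idea behind contrastive compound-protein pre-training, not DrugLAMP's exact loss. It uses a symmetric InfoNCE objective, as popularized by CLIP, which pulls each drug embedding toward its paired protein embedding and pushes it away from the other proteins in the batch. The sketch assumes NumPy, assumes that row i of each matrix is a matched pair, and uses an assumed temperature of 0.07. All function names are illustrative.

```python
import numpy as np


def l2_normalize(x: np.ndarray) -> np.ndarray:
    return x / np.linalg.norm(x, axis=1, keepdims=True)


def log_softmax(logits: np.ndarray, axis: int) -> np.ndarray:
    shifted = logits - logits.max(axis=axis, keepdims=True)  # numerical stability
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))


def symmetric_info_nce(drug_emb: np.ndarray, protein_emb: np.ndarray,
                       temperature: float = 0.07) -> float:
    """Contrastive loss where row i of each matrix is a matched drug-protein pair."""
    d = l2_normalize(drug_emb)
    p = l2_normalize(protein_emb)
    logits = d @ p.T / temperature          # scaled cosine similarities, (batch, batch)
    idx = np.arange(len(logits))
    loss_d2p = -log_softmax(logits, axis=1)[idx, idx].mean()  # pick protein for each drug
    loss_p2d = -log_softmax(logits, axis=0)[idx, idx].mean()  # pick drug for each protein
    return float((loss_d2p + loss_p2d) / 2)


rng = np.random.default_rng(0)
drugs = rng.normal(size=(8, 16))
proteins = drugs + 0.01 * rng.normal(size=(8, 16))      # well-aligned toy pairs
aligned = symmetric_info_nce(drugs, proteins)
mismatched = symmetric_info_nce(drugs, proteins[::-1])  # pairs deliberately shuffled
```

In a real model, the embeddings would come from the drug and protein encoders, and this loss would be minimized by gradient descent before supervised fine-tuning.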

The Scientist's Toolkit: Key Research Reagents & Solutions

The following tools and frameworks are essential for building transferable, 'fit-for-purpose' models in computational drug discovery.

| Tool / Framework | Primary Function | Relevance to 'Fit-for-Purpose' & Transferability |
|---|---|---|
| Pre-trained Language Models (e.g., BERT, GPT) [5] | Provide powerful base models that have learned general representations from vast biological and chemical text/data. | Drastically reduce data and computational needs via transfer learning; can be fine-tuned for specific tasks like DTI prediction [22] [5]. |
| Multi-Modal Fusion Architectures [22] | Integrate diverse data types (e.g., graphs, sequences) into a unified model using attention mechanisms. | Critical for capturing the complex interactions in biology; enables models to leverage complementary information for more accurate predictions [22]. |
| Contrastive Learning Modules (e.g., 2C2P) [22] | Align representations from different modalities in a shared space using unlabeled data. | Enhances model generalization and robustness, key for transferability to novel drugs and targets where labeled data is scarce [22]. |
| Alignment Studio Framework [23] | A toolset for aligning LLM behavior to specific contextual regulations and business guidelines. | Ensures models are not just technically accurate but also operate within required ethical, legal, and organizational constraints [23]. |
| Small Language Models (SLMs) [26] | Provide a class of models under ~10B parameters optimized for specific, narrow tasks. | Offer a strategic "fit-for-purpose" solution for deployment in resource-constrained environments or for repetitive agentic tasks, balancing cost and performance [26]. |


Troubleshooting Guides

Guide 1: Resolving Model Instability in Pharmacometric Analyses

Problem: My pharmacometric model (e.g., PopPK, PKPD) fails to converge, produces biologically unreasonable parameter estimates, or yields different results with different initial estimates.

Explanation: Model instability is often a multifactorial issue, frequently stemming from a mismatch between model complexity and the information content of your data [27]. Diagnosing the root cause is essential for applying the correct solution.

Solution: Follow this structured workflow to identify and resolve stability issues [27].

Steps:

- Model Confirmation and Verification: Before investigating instability, ensure your model is an appropriate representation of the biological system (confirmation) and that your code is a faithful translation of the model schematic (verification) [27]. This step rules out coding errors as the source of instability.

- Assess Data Quality: Scrutinize your dataset for issues that can undermine stability, such as significant outliers, critical missing data, or errors in dosing and sampling records [27].

- Balance Model Complexity and Data Information: This is a common cause of instability [27].

- If the model is over-parameterized: Simplify the model structure. For example, a model expecting Target-Mediated Drug Disposition (TMDD) might need to be approximated with a simpler model (e.g., linear or time-varying clearance) if the data is insufficient to inform the full kinetic binding parameters [27].

- If the data is limited: Consider incorporating strong prior knowledge or leveraging a pre-existing, mechanistically sound "system-lens" model from the literature, ensuring it is fit-for-your-current-purpose [27].

- Evaluate Optimization Algorithm and Settings: Review your software's (e.g., NONMEM) optimization settings. The choice of algorithm, interaction method, and other numerical settings can significantly impact stability and may need adjustment for your specific model and data [27].
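A mismatch between model complexity and data information can be checked before any fitting. The minimal NumPy sketch below assumes a bolus model, with typical parameter values and sampling times chosen purely for illustration. It builds the sensitivity (Jacobian) matrix J of predicted concentrations with respect to the parameters and reports the condition number of J^T J, which is proportional to the Fisher information under additive error. A huge condition number means some parameter combinations are not informed by the sampling design. This is a local, design-level diagnostic, not a substitute for the estimation software's own covariance step.

```python
import numpy as np


def jacobian_monoexp(t: np.ndarray, a: float, k: float) -> np.ndarray:
    """dC/dtheta for C(t) = a * exp(-k t); columns are (a, k)."""
    e = np.exp(-k * t)
    return np.column_stack([e, -a * t * e])


def jacobian_biexp(t: np.ndarray, a: float, alpha: float, b: float, beta: float) -> np.ndarray:
    """dC/dtheta for C(t) = a exp(-alpha t) + b exp(-beta t); columns are (a, alpha, b, beta)."""
    ea, eb = np.exp(-alpha * t), np.exp(-beta * t)
    return np.column_stack([ea, -a * t * ea, eb, -b * t * eb])


def information_condition_number(jac: np.ndarray) -> float:
    """Condition number of J^T J; very large values flag poorly identified parameters."""
    return float(np.linalg.cond(jac.T @ jac))


sparse_times = np.array([1.0, 4.0, 12.0])                          # hypothetical sparse design (h)
rich_times = np.array([0.25, 0.5, 1, 2, 4, 6, 8, 12, 24], dtype=float)

mono = information_condition_number(jacobian_monoexp(sparse_times, 10.0, 0.2))
bi_sparse = information_condition_number(jacobian_biexp(sparse_times, 8.0, 1.5, 4.0, 0.1))
bi_rich = information_condition_number(jacobian_biexp(rich_times, 8.0, 1.5, 4.0, 0.1))
print(f"mono/sparse: {mono:.3g}  bi/sparse: {bi_sparse:.3g}  bi/rich: {bi_rich:.3g}")
```

With three samples, the four-parameter model is structurally over-parameterized, so its information matrix is numerically singular. The same model becomes estimable under the richer design, which mirrors the "simplify the model or add information" choice above.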

Guide 2: Managing Compressed Timelines for Regulatory Submissions

Problem: Our team needs to prepare a robust pharmacometric analysis for a regulatory submission (NDA/BLA) under an expedited timeline with limited resources.

Explanation: Regulatory agencies increasingly expect pharmacometric evidence, and managing this under compressed timelines is a common challenge [28]. Success hinges on efficient, cross-functional workflows and strategic planning.

Solution: Adopt a proactive, fit-for-purpose strategy to ensure submission readiness without compromising scientific rigor [28].

Steps:

- Early Regulatory Engagement: Proactively engage with agencies like the FDA or EMA to discuss your Model-Informed Drug Development (MIDD) strategy. Seek agreement on the Context of Use (COU) and the Model Analysis Plan (MAP) to minimize later objections [28] [29].

- Leverage Core Analyses Efficiently: Focus on fit-for-purpose population PK (PopPK) and exposure-response models to address critical questions on dose selection, variability, and benefit-risk, which are essential for labeling decisions [28].

- Streamline Operational Workflows: Use efficient software and platforms (e.g., R packages such as mrgsolve for simulation) that support reproducible and flexible pharmacometric workflows to accelerate analysis [30] [31].

- Communicate for Decision-Making: In decisional meetings, use a deductive approach: state your conclusion and recommendation first, followed by the supporting model results. This focuses the discussion on the actionable decision rather than the technical modeling details [32].

Frequently Asked Questions (FAQs)

Q1: What are the most critical skills for a pharmacometrician to influence drug development decisions? A pharmacometrician requires three key skill sets to be influential: technical skills (e.g., modeling, simulation), business skills (understanding drug development), and soft skills (especially effective communication) [32]. A survey found that 82% of professionals believe pharmacometricians, on average, lack strategic communication skills, highlighting a critical area for development [32].

Q2: How should I present pharmacometric results to an interdisciplinary team to maximize impact? Tailor your communication to your audience [32]. For interdisciplinary teams, use a deductive approach: start with the bottom-line recommendation, then provide the supporting evidence. Avoid deep technical details; instead, focus on how the analysis informs the specific decision at hand (e.g., "We recommend a 50 mg dose because the model predicts a 90% probability of achieving target exposure") [32].
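A statement such as "90% probability of achieving target exposure" is typically the output of a Monte Carlo simulation over the model's between-subject variability. The minimal sketch below makes several assumptions: linear PK so that AUC = Dose / CL, log-normal variability in clearance, and illustrative values for typical clearance, variability, and the exposure target. The function name is hypothetical.

```python
import math
import random


def probability_of_target_attainment(dose_mg: float, target_auc: float,
                                      cl_typical: float = 5.0, omega_cl: float = 0.3,
                                      n_sim: int = 10_000, seed: int = 1) -> float:
    """Fraction of simulated patients whose AUC (mg*h/L) reaches target_auc."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_sim):
        cl = cl_typical * math.exp(rng.gauss(0.0, omega_cl))  # individual clearance, L/h
        if dose_mg / cl >= target_auc:
            hits += 1
    return hits / n_sim


pta = {dose: probability_of_target_attainment(dose, target_auc=8.0) for dose in (25, 50, 100)}
```

Because every dose reuses the same seed, the simulated patients are identical across doses. This keeps the dose-to-dose comparison free of Monte Carlo noise.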

Q3: What is the significance of the new ICH M15 guideline for MIDD? The ICH M15 draft guideline, released in late 2024, provides the first internationally harmonized framework for MIDD. It aims to align expectations between regulators and sponsors, support consistent regulatory decisions, and minimize errors in the acceptance of modeling and simulation evidence in drug labels [29]. It establishes a structured process with a clear taxonomy, including stages for Planning, Implementation, Evaluation, and Submission [29].

Q4: My model works in one software package but fails in another. What could be the cause? This is a recognized symptom of model instability [27]. Differences in optimization algorithms, default numerical tolerances, or interaction methods between software platforms (e.g., NONMEM, Monolix) can produce different results when a model is poorly identified. Revisit the troubleshooting guide for model instability, paying close attention to the balance between model complexity and data information content [27].

The Scientist's Toolkit: Essential Research Reagents & Solutions

The table below lists key tools and methodologies used in modern pharmacometrics.

| Tool/Solution | Function & Application |
|---|---|
| Population PK/PD (PopPK/PD) Modeling [29] | A preeminent method using nonlinear mixed-effects models to characterize drug concentrations and variability in effects, and to perform clinical trial simulations. |
| Model-Informed Drug Development (MIDD) [29] | A framework that uses quantitative modeling to integrate data and prior knowledge to inform drug development and regulatory decisions. |
| mrgsolve [30] | An R package for pharmacokinetic-pharmacodynamic (PK/PD) model simulation. It is used to simulate drug behavior from a pre-defined model, aiding in trial design and dose selection. |
| RsNLME [31] | A suite of R packages supporting flexible and reproducible pharmacometric workflows for model building and execution. |
| Model Analysis Plan (MAP) [29] | A critical document in the ICH M15 framework that defines the introduction, objectives, data, and methods for a modeling exercise, ensuring alignment and clarity. |
| Credibility Assessment [29] | A framework (based on standards like ASME V&V 40) used to evaluate model relevance and adequacy, ensuring computational models are fit-for-purpose. |


Practical Methods and MIDD Tools for Enhanced Industrial Generalization

Leveraging Model-Informed Drug Development (MIDD) as a Strategic Framework

MIDD and Model Transferability: Core Concepts FAQ

Q1: What is Model-Informed Drug Development (MIDD) and how does it relate to model transferability?

A1: Model-Informed Drug Development (MIDD) is defined as a quantitative framework for prediction and extrapolation, centered on knowledge and inference generated from integrated models of compound, mechanism, and disease-level data. Its goal is to improve the quality, efficiency, and cost-effectiveness of decision-making [33]. Within this framework, model transferability refers to the ability of a model developed for one specific task or context—such as a pre-clinical model or a model for one patient population—to be effectively applied or adapted to a related but distinct context, such as a different disease population or a new drug candidate with a similar mechanism of action [5]. The strategic integration of transferability principles helps ensure that quantitative models remain valuable assets across a drug's lifecycle.

Q2: What are the primary business benefits of implementing a MIDD strategy with a focus on transferability?

A2: Adopting a MIDD strategy that emphasizes model transferability offers several key business and R&D benefits [33] [34]:

- Enhanced R&D Efficiency: It significantly shortens development cycle timelines and reduces discovery and trial costs by leveraging existing knowledge and models.

- Improved Decision-Making: It provides a structured, data-driven framework for evaluating safety and efficacy, thereby increasing the probability of technical and regulatory success.

- Cost Reduction: Companies like Pfizer and Merck & Co. have reported substantial cost savings—up to $100 million annually and $0.5 billion, respectively—attributed to the impact of MIDD on decision-making [33].

- Knowledge Preservation and Leverage: It creates reusable quantitative tools that can be applied across multiple drug programs, maximizing return on investment.

Q3: What are common pitfalls that hinder model transferability in MIDD, and how can they be avoided?

A3: A major challenge in transferring models is "negative transfer," which occurs when a model from a source task adversely affects performance on the target task because the domains are too dissimilar [5]. Other pitfalls include overfitting during fine-tuning on small datasets and the computational complexity of adapting large models [5]. To mitigate these risks:

- Conduct Rigorous Domain Analysis: Carefully evaluate the similarity between the source and target tasks (e.g., disease pathophysiology, patient demographics) before transfer [5] [35].

- Adopt a "Fit-for-Purpose" Mindset: Select and adapt models based on the specific "Question of Interest" and "Context of Use" for the new application, avoiding unnecessary complexity [34].

- Implement Robust Validation: Use techniques like cross-validation and external validation to ensure model performance generalizes to the new context.

Troubleshooting Guide: Common MIDD Implementation Challenges

Q1: My PK/PD model performs well in pre-clinical data but fails to predict early clinical outcomes. What should I check?

A1: This is a classic model transferability issue between pre-clinical and clinical phases. Follow this systematic troubleshooting protocol:

- Step 1: Verify Physiological Relevance. Check if your Physiologically-Based Pharmacokinetic (PBPK) model accurately reflects human (as opposed to animal) physiology and metabolic pathways. Ensure allometric scaling for dose prediction is appropriate [34].

- Step 2: Inter-species Differences. Re-evaluate assumptions about drug absorption, distribution, metabolism, and excretion (ADME). Key proteins like enzymes and transporters may have different expression and activity in humans.

- Step 3: Model Complexity. Determine if your model is either oversimplified (missing key mechanistic elements) or overly complex (leading to overfitting on pre-clinical data). A semi-mechanistic PK/PD approach might offer a better balance [34].

- Step 4: Data Quality and Recalibration. Use the limited early clinical (e.g., First-in-Human) data to recalibrate and refine the model, focusing on updating the most uncertain parameters.
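Step 1 mentions allometric scaling. A common simple form scales clearance with body weight raised to the 0.75 power: CL_human = CL_animal × (BW_human / BW_animal)^0.75. The sketch below applies this form and converts the scaled clearance into a dose for a target AUC. The rat clearance, body weights, target AUC, and fixed exponent are illustrative assumptions. In practice, exponents are often fitted across several species and corrections may be needed. The Step 4 recalibration with first-in-human data still applies.

```python
def allometric_clearance(cl_animal: float, bw_animal: float, bw_human: float,
                         exponent: float = 0.75) -> float:
    """Scale clearance (L/h) across species by the body-weight (kg) ratio raised to an exponent."""
    if min(cl_animal, bw_animal, bw_human) <= 0:
        raise ValueError("clearance and body weights must be positive")
    return cl_animal * (bw_human / bw_animal) ** exponent


def dose_for_target_auc(target_auc: float, clearance: float) -> float:
    """For linear PK with complete bioavailability, AUC = Dose / CL, so Dose = AUC * CL."""
    return target_auc * clearance


# Hypothetical rat data: CL = 0.5 L/h at 0.25 kg, scaled to a 70 kg adult.
cl_human = allometric_clearance(0.5, 0.25, 70.0)
dose_mg = dose_for_target_auc(target_auc=20.0, clearance=cl_human)  # target AUC in mg*h/L
```
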

Q2: How can I select the best pre-existing model from a repository ("model zoo") for my new, unlabeled clinical dataset?

A2: This is a common scenario in industry research where labeled data is scarce. Employ a source-free transferability assessment framework [36]:

- Step 1: Feature Extraction. Process a small, representative sample of your unlabeled target data through each candidate model in your repository to generate feature embeddings.

- Step 2: Create a Reference. Generate a task-agnostic reference embedding using an ensemble of Randomly Initialized Neural Networks (RINNs), which capture structural priors without training [36].

- Step 3: Similarity Analysis. Quantify the structural similarity between the embeddings from the candidate models and the RINN reference using a metric like Centered Kernel Alignment (CKA).

- Step 4: Model Ranking. Rank the candidate models based on their similarity scores. The model with the highest score is predicted to be the most transferable to your new dataset and is the natural candidate for fine-tuning or direct application [36].

Q3: Our quantitative systems pharmacology (QSP) model is not accepted by internal decision-makers for informing a clinical trial design. How can we improve stakeholder confidence?

A3: This is often a communication and validation issue, not a technical one.

- Action 1: Strengthen Context of Use (COU) Definition. Clearly and concisely document the specific question the model is intended to answer, its boundaries, and its limitations. This aligns with regulatory best practices [33] [34].

- Action 2: Demonstrate Predictive Performance. Present a retrospective analysis showing how the model accurately simulated or predicted outcomes from historical data within your organization.

- Action 3: Conduct Sensitivity and Uncertainty Analysis. Systematically identify and present the key model parameters that drive the outcomes and quantify the uncertainty in the predictions. This demonstrates a thorough understanding of the model's robustness.

- Action 4: Align with Regulatory Precedents. Cite successful examples of MIDD applications in regulatory submissions for similar drug classes or clinical questions [33] [37].
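Action 3 can start with a one-at-a-time local sensitivity analysis. Each parameter is perturbed by a small fraction, and the normalized sensitivity coefficient (ΔY/Y) / (Δp/p) is computed. In the sketch below, a hypothetical Emax exposure-response function stands in for a QSP model output; its parameter names and values are assumptions. Local coefficients describe behavior only near the nominal point. Global methods (e.g., Sobol indices) are needed when parameters interact or are highly uncertain.

```python
from typing import Callable, Dict


def emax_response(params: Dict[str, float]) -> float:
    """Hypothetical output: E = E0 + Emax * C^h / (EC50^h + C^h) at a fixed concentration."""
    c, h = params["conc"], params["hill"]
    return params["e0"] + params["emax"] * c**h / (params["ec50"]**h + c**h)


def normalized_sensitivities(model: Callable[[Dict[str, float]], float],
                             nominal: Dict[str, float],
                             rel_step: float = 0.01) -> Dict[str, float]:
    """Central-difference estimate of (dY/Y) / (dp/p) for each parameter."""
    y0 = model(nominal)
    result = {}
    for name, value in nominal.items():
        up = dict(nominal, **{name: value * (1 + rel_step)})
        down = dict(nominal, **{name: value * (1 - rel_step)})
        result[name] = ((model(up) - model(down)) / y0) / (2 * rel_step)
    return result


nominal = {"e0": 5.0, "emax": 80.0, "ec50": 10.0, "hill": 1.0, "conc": 10.0}
sens = normalized_sensitivities(emax_response, nominal)
ranking = sorted(sens.items(), key=lambda kv: abs(kv[1]), reverse=True)
```

Presenting such a ranking alongside the predictions shows decision-makers which assumptions actually drive the model's recommendation.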

Detailed Experimental Protocols for Key MIDD Analyses

Protocol 1: Developing a "Fit-for-Purpose" Population PK/PD Model

Objective: To characterize the population pharmacokinetics and exposure-response relationship of a drug to inform dosing recommendations.

Materials & Methodology:

- Data: Rich or sparse drug concentration-time data and response (efficacy/safety) data from clinical trials.

- Software: Non-linear mixed-effects modeling software (e.g., NONMEM, Monolix, R).

- Structural Model Development: Identify the best-fitting structural PK model (e.g., one- or two-compartment) and PD model (e.g., Emax, linear) using standard goodness-of-fit plots and objective function value (OFV).

- Statistical Model Development: Identify and quantify sources of inter-individual variability (IIV) and inter-occasion variability (IOV) on model parameters. Model residual unexplained variability.

- Covariate Analysis: Identify patient-specific factors (e.g., weight, renal function, age) that explain a portion of the IIV. Use a stepwise forward inclusion/backward elimination procedure based on statistical significance (e.g., change in OFV).

- Model Validation: Validate the final model using:

- Bootstrap: Assess parameter precision and stability.

- Visual Predictive Check (VPC): Assess the model's ability to simulate data that matches the original dataset.

- Numerical Predictive Check (NPC): Quantify the difference between simulated and observed data.
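The simulation half of a visual predictive check can be sketched with the standard library alone. The sketch simulates a large virtual population from a hypothetical final model: a one-compartment IV bolus model with log-normal inter-individual variability on CL and V and a proportional residual error. It then summarizes the 5th, 50th, and 95th percentiles at each time point. In a full VPC, replicates of the original study design are simulated, and these prediction intervals are overlaid with the same percentiles of the observed data. Parameter values, the error model, and the function name are assumptions.

```python
import math
import random
import statistics
from typing import Dict, List, Tuple


def simulate_vpc_percentiles(times: List[float], dose: float, n_subjects: int = 500,
                             cl_pop: float = 5.0, v_pop: float = 50.0,
                             omega_cl: float = 0.3, omega_v: float = 0.2,
                             prop_err: float = 0.1,
                             seed: int = 42) -> Dict[float, Tuple[float, float, float]]:
    """Return {time: (p5, p50, p95)} of simulated concentrations."""
    rng = random.Random(seed)
    sims: Dict[float, List[float]] = {t: [] for t in times}
    for _ in range(n_subjects):
        # Individual parameters: typical value * exp(eta), eta ~ N(0, omega^2).
        cl = cl_pop * math.exp(rng.gauss(0.0, omega_cl))
        v = v_pop * math.exp(rng.gauss(0.0, omega_v))
        k = cl / v
        for t in times:
            ipred = dose / v * math.exp(-k * t)
            # Proportional residual error: C_obs = IPRED * (1 + eps).
            sims[t].append(ipred * (1.0 + rng.gauss(0.0, prop_err)))
    summary = {}
    for t, values in sims.items():
        cuts = statistics.quantiles(values, n=20)  # 19 cut points: 5%, 10%, ..., 95%
        summary[t] = (cuts[0], statistics.median(values), cuts[-1])
    return summary


pct = simulate_vpc_percentiles([0.5, 2.0, 8.0, 24.0], dose=100.0)
```
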

Protocol 2: Conducting a Source-Free Model Transferability Assessment

Objective: To rank pre-trained models from a repository for their suitability on a new, unlabeled target dataset without access to source data.

Materials & Methodology:

- Inputs: A "model zoo" of pre-trained models; a small, unlabeled sample from the target domain.

- Software: Python with deep learning frameworks (e.g., PyTorch, TensorFlow).

- Embedding Extraction: For each candidate model M_i in the zoo, forward-pass the target data sample and extract the feature embeddings from the model's penultimate layer.

- Reference Embedding Generation: Create an ensemble of K Randomly Initialized Neural Networks (RINNs) with the same architecture as the candidate models but with no training. Use this ensemble to extract a second set of embeddings from the same target data sample [36].

- Similarity Scoring: For each candidate model, compute the Minibatch Centered Kernel Alignment (CKA) score between its embeddings and the RINN ensemble's embeddings. CKA measures the similarity of the representations.

- Model Selection: Rank all candidate models based on their CKA scores in descending order. The model with the highest score (S_E) is deemed the most transferable and is selected for the task [36].
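The scoring step can be prototyped with linear CKA in NumPy. This sketch makes several assumptions beyond the protocol. It uses full-batch linear CKA rather than the minibatch estimator. It represents each candidate as a plain function from inputs to embeddings. It uses small random two-layer ReLU networks as stand-ins for same-architecture RINNs. Finally, it takes S_E to be the mean CKA against the K reference embeddings. All function names are hypothetical.

```python
import numpy as np


def linear_cka(x: np.ndarray, y: np.ndarray) -> float:
    """Linear CKA between embeddings x (n, d1) and y (n, d2) of the same n inputs."""
    x = x - x.mean(axis=0, keepdims=True)   # center each feature
    y = y - y.mean(axis=0, keepdims=True)
    cross = np.linalg.norm(y.T @ x, "fro") ** 2
    return float(cross / (np.linalg.norm(x.T @ x, "fro") * np.linalg.norm(y.T @ y, "fro")))


def random_relu_net(in_dim: int, out_dim: int, seed: int):
    """Hypothetical stand-in for an untrained network: a random two-layer ReLU MLP."""
    rng = np.random.default_rng(seed)
    w1 = rng.normal(scale=1 / np.sqrt(in_dim), size=(in_dim, 64))
    w2 = rng.normal(scale=1 / np.sqrt(64), size=(64, out_dim))
    return lambda data: np.maximum(data @ w1, 0.0) @ w2


def rank_models(candidates: dict, target_sample: np.ndarray, k: int = 5, out_dim: int = 32):
    """Score each candidate by mean CKA (assumed S_E) against K random reference embeddings."""
    refs = [random_relu_net(target_sample.shape[1], out_dim, seed)(target_sample)
            for seed in range(k)]
    scores = {name: float(np.mean([linear_cka(embed(target_sample), r) for r in refs]))
              for name, embed in candidates.items()}
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


rng = np.random.default_rng(0)
X = rng.normal(size=(100, 10))               # hypothetical unlabeled target sample
noise_rng = np.random.default_rng(123)
ranked = rank_models({"identity": lambda d: d,
                      "noise": lambda d: noise_rng.normal(size=(len(d), 8))}, X)
```

Linear CKA is invariant to orthogonal transformations and isotropic scaling of either embedding. This is why it can compare models whose feature dimensions and bases differ.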

Workflow Visualization

MIDD Strategy and Troubleshooting Workflow

Model Transferability Assessment Protocol

Table 1: Key Quantitative Tools and Methodologies in MIDD

| Tool / Methodology | Primary Function | Typical Context of Use |
|---|---|---|
| Physiologically-Based Pharmacokinetic (PBPK) Modeling [34] [37] | Mechanistic modeling to predict drug absorption, distribution, metabolism, and excretion (ADME) based on physiology and drug properties. | Predicting drug-drug interactions (DDIs); extrapolating to special populations (e.g., hepatic impairment); supporting generic drug bioequivalence. |
| Population PK (PPK) / Exposure-Response (ER) [34] | Quantifies and explains variability in drug exposure (PK) and its relationship to efficacy/safety outcomes (PD) across a patient population. | Optimizing dosing regimens; identifying sub-populations requiring dose adjustment; supporting label claims. |
| Quantitative Systems Pharmacology (QSP) [34] | Integrates systems biology and pharmacology to model drug effects on biological pathways and disease processes mechanistically. | Target validation; combination therapy strategy; understanding complex biological mechanisms; clinical trial simulation. |
| Model-Based Meta-Analysis (MBMA) [34] | Quantitative analysis of summary-level data from multiple clinical trials to understand drug class effects and competitive landscape. | Informing dose selection and trial endpoints; benchmarking a new drug's potential efficacy against standard of care. |
| Artificial Intelligence / Machine Learning (AI/ML) [34] | Analyzes large-scale datasets to predict compound properties, optimize molecules, identify biomarkers, and personalize dosing. | Drug discovery (e.g., QSAR); predicting ADME properties; analyzing real-world evidence (RWE). |

Foundations of Data-Centric AI

What is Data-Centric AI and how does it differ from model-centric approaches?

Answer: Data-Centric AI (DCAI) is a paradigm that shifts the focus from model architecture and hyperparameter tuning to systematically improving data quality and quantity [38]. Unlike the model-centric approach, which treats data as a static, fixed asset and optimizes the algorithm, DCAI treats data as a dynamic, core component to be engineered and optimized [39] [38].

The core difference is this: a model-centric team working with a fixed dataset might spend time adjusting neural network layers to improve accuracy from 95.0% to 95.5%. A data-centric team, holding the model architecture constant, would instead focus on improving the dataset itself—by correcting mislabeled examples, adding diverse data, or applying smart augmentations—to achieve a similar or greater performance boost that often transfers more reliably to real-world, industry data [38].
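Flagging likely mislabeled examples is one concrete data-centric step. The sketch below is a simplified version of the idea behind confident learning, and it needs out-of-sample predicted probabilities (e.g., from cross-validation). For each class, it sets a threshold equal to the model's average predicted probability for that class on examples labeled with it. It then flags an example when the most probable class among those that clear their own thresholds differs from the given label. The names and toy data are illustrative. Flagged items are candidates for expert review, not for automatic relabeling.

```python
from typing import List, Sequence


def find_label_issues(given_labels: Sequence[int],
                      pred_probs: Sequence[Sequence[float]]) -> List[int]:
    """Return indices whose given label disagrees with a confidently predicted class."""
    n_classes = len(pred_probs[0])
    # Per-class threshold: mean predicted probability of class j among examples labeled j.
    thresholds = []
    for j in range(n_classes):
        own = [probs[j] for y, probs in zip(given_labels, pred_probs) if y == j]
        thresholds.append(sum(own) / len(own) if own else 1.0)
    issues = []
    for i, (y, probs) in enumerate(zip(given_labels, pred_probs)):
        confident = [j for j in range(n_classes) if probs[j] >= thresholds[j]]
        if not confident:
            continue  # no class is confidently predicted; leave this label alone
        best = max(confident, key=lambda j: probs[j])
        if best != y:
            issues.append(i)
    return issues


labels = [0, 0, 0, 1, 1, 1]
probs = [[0.9, 0.1], [0.8, 0.2], [0.1, 0.9], [0.2, 0.8], [0.1, 0.9], [0.3, 0.7]]
suspects = find_label_issues(labels, probs)
```
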

What are the core pillars of a Data-Centric AI development process?

Answer: The DCAI paradigm is structured around three interconnected pillars [38]:

- Training Data Development: The process of collecting and producing high-quality, rich data for model training. This involves data creation, collection, labeling, preparation, reduction, and augmentation.

- Inference Data Development: The generation of sophisticated evaluation sets that go beyond simple accuracy metrics. This aims to probe model capabilities like robustness, adaptability, and reasoning, using techniques like in-distribution and out-of-distribution evaluation.

- Data Maintenance: The ongoing process of maintaining data quality and reliability in dynamic environments. This includes data understanding, quality assurance, and efficient data storage and retrieval.

Troubleshooting Guides: Common Data-Centric AI Challenges

How do I fix a model that performs well on validation data but poorly on real-world industry data?

Problem: This is a classic sign of a model failing to transfer from a controlled research environment to a production setting. It often stems from a mismatch between your training data and the actual "inference data" encountered in the wild.

Solution: Implement a robust Inference Data Development strategy [38].

Action 1: Perform Out-of-Distribution (OOD) Evaluation

- Methodology: Systematically create test sets that differ from your training data. This can involve introducing noise, simulating domain shifts (e.g., different medical scanner types in healthcare), or using adversarial examples to test robustness.

- Protocol: From your main dataset, create a hold-out OOD test set that mirrors the conditions of your industry data as closely as possible, for example by holding out every record from one site, scanner type, or experimental batch. Benchmark your model's performance on this set to understand its failure modes.
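
The hold-out step can be sketched as below. This is a minimal illustration, assuming each record is a dict with a hypothetical "site" field (a scanner type, lab, or batch ID would work the same way). Because every record from the held-out site goes to the OOD set, the model never sees that site's conditions during training.

```python
import random
from typing import Dict, List, Tuple

Record = Dict[str, object]


def site_holdout_split(
    records: List[Record],
    holdout_site: str,
    id_test_fraction: float = 0.2,
    seed: int = 0,
) -> Tuple[List[Record], List[Record], List[Record]]:
    """Return (train, id_test, ood_test).

    Every record from holdout_site goes to the OOD test set; the remaining
    records are shuffled and split into training and in-distribution test sets.
    """
    ood_test = [r for r in records if r["site"] == holdout_site]
    in_dist = [r for r in records if r["site"] != holdout_site]
    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    rng.shuffle(in_dist)
    n_test = int(len(in_dist) * id_test_fraction)
    return in_dist[n_test:], in_dist[:n_test], ood_test


if __name__ == "__main__":
    # Hypothetical toy data: 30 records spread evenly across three sites.
    data = [{"id": i, "site": f"site_{i % 3}", "label": i % 2} for i in range(30)]
    train, id_test, ood_test = site_holdout_split(data, holdout_site="site_2")
    print(len(train), len(id_test), len(ood_test))  # 16 4 10
```

Repeating the split with each site held out in turn (leave-one-site-out) gives a spread of OOD scores rather than a single number.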

Action 2: Identify and Calibrate Underrepresented Groups

- Methodology: Analyze your model's performance across different subgroups within your data. A common issue is that the training data does not adequately represent the full diversity of the target domain.

- Protocol: Use clustering or data profiling tools to identify latent subgroups. Then actively source or synthesize data for these underrepresented groups to rebalance your training dataset.
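
A minimal sketch of the rebalancing step, assuming subgroups have already been assigned by a clustering or profiling step and stored in a hypothetical "group" field. Random oversampling with replacement is used here only as a simple stand-in for sourcing or synthesizing new data: it duplicates existing examples and adds no new information, so genuinely new data is preferable where it can be obtained.

```python
import random
from collections import Counter, defaultdict
from typing import Dict, List

Record = Dict[str, object]


def oversample_to_largest_group(
    records: List[Record], group_key: str = "group", seed: int = 0
) -> List[Record]:
    """Duplicate records from smaller groups until every group matches the largest."""
    by_group: Dict[object, List[Record]] = defaultdict(list)
    for r in records:
        by_group[r[group_key]].append(r)
    target = max(len(items) for items in by_group.values())
    rng = random.Random(seed)
    balanced: List[Record] = []
    for items in by_group.values():
        balanced.extend(items)  # keep every original record
        # Sample with replacement to fill this group's shortfall.
        balanced.extend(rng.choices(items, k=target - len(items)))
    return balanced


if __name__ == "__main__":
    data = [{"id": i, "group": "A"} for i in range(8)] + [
        {"id": 8 + i, "group": "B"} for i in range(2)
    ]
    balanced = oversample_to_largest_group(data)
    print(Counter(r["group"] for r in balanced))  # Counter({'A': 8, 'B': 8})
```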

The following workflow outlines the systematic process for diagnosing and resolving the disconnect between validation and real-world performance.

How can I resolve data quality issues that are causing technical debt and "data cascades"?

Problem: "Data cascades" are compounding events resulting from underlying data issues that cause negative downstream effects, accumulating technical debt over time [38]. A Google study found that 92% of the AI practitioners it surveyed had experienced at least one data cascade [38]. Common signs include inconsistent labels, missing values, and data that doesn't match the real-world distribution.

Solution: Institute a rigorous Data Quality Assurance framework during the Training Data Development and Data Maintenance phases [38].

Action 1: Implement Confident Learning for Label Quality

- Methodology: Use techniques like Confident Learning to algorithmically identify mislabeled examples in a dataset, regardless of the model or data type [38].

- Protocol: Apply an open-source Confident Learning library (e.g., cleanlab) to your dataset. It outputs a list of potential label errors; reviewing and correcting them improves the quality of your training labels.

Action 2: Establish Continuous Data Monitoring

- Methodology: Data quality is not a one-time task. Implement automated checks for data drift, schema changes, and anomaly detection in incoming data streams.

- Protocol: Define objective data quality metrics (e.g., accuracy, timeliness, consistency, completeness) and set up a dashboard to monitor these metrics over time. This is a core part of the Data Maintenance pillar [38].
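
Two such metrics can be sketched as follows: completeness (share of non-missing values) and the Population Stability Index (PSI), one common statistic for drift between a reference sample and an incoming batch. This is an illustrative sketch assuming numeric columns with None marking missing values; the binning choices are assumptions, and alert thresholds (rules of thumb such as treating PSI above roughly 0.25 as a major shift are often quoted) should be set per feature.

```python
import math
from typing import List, Optional, Sequence


def completeness(values: Sequence[Optional[float]]) -> float:
    """Fraction of entries that are not missing (None)."""
    if not values:
        return 0.0
    return sum(v is not None for v in values) / len(values)


def population_stability_index(
    reference: Sequence[float],
    current: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """PSI between a reference sample (e.g., training data) and a current batch.

    Equal-width bins are built from the reference range; current values outside
    that range are clipped into the first or last bin. eps avoids log(0).
    """
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference column

    def proportions(xs: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for x in xs:
            idx = min(max(int((x - lo) / width), 0), bins - 1)
            counts[idx] += 1
        return [max(c / len(xs), eps) for c in counts]

    p_ref, p_cur = proportions(reference), proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(p_ref, p_cur))


if __name__ == "__main__":
    reference = [i / 100 for i in range(100)]
    shifted = [x + 0.5 for x in reference]  # simulated drift in an incoming batch
    print(completeness([1.0, None, 3.0, None]))  # 0.5
    print(population_stability_index(reference, reference))  # 0.0
    print(population_stability_index(reference, shifted) > 0.25)  # True
```

In a monitoring dashboard, these values would be computed per feature for each incoming batch and plotted over time.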

The diagram below illustrates how small, unresolved data issues early in a project can compound into significant problems later, and how to intervene.

My model is exhibiting biased behavior. How can I address this from a data-centric perspective?

Problem: Bias in AI models often originates from biased or unrepresentative training data, leading to unfair outcomes in critical areas like patient stratification in drug development [40].

Solution: Proactively audit and curate your datasets to promote fairness and representation.

Action 1: Audit Data for Representational Gaps

- Methodology: Use data visualization and statistical tools to analyze the distribution of your data across sensitive or relevant attributes (e.g., age, gender, ethnicity, disease subtype).

- Protocol: Generate summary reports and visualizations showing the count and proportion of data points for each subgroup. This helps identify over- or under-represented cohorts.
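
A summary report of this kind can be sketched with the standard library alone. The sketch assumes records are dicts keyed by attribute name; the "age_band" attribute, the toy cohort, and the 10% min_share cut-off are hypothetical choices for illustration, and the right threshold depends on the study.

```python
from collections import Counter
from typing import Dict, List, Mapping


def subgroup_report(
    records: List[Mapping[str, object]], attribute: str, min_share: float = 0.1
) -> Dict[object, Dict[str, object]]:
    """Count and proportion of records per value of attribute.

    Groups whose share falls below min_share (a hypothetical, study-specific
    threshold) are flagged as under-represented.
    """
    counts = Counter(r[attribute] for r in records)
    total = sum(counts.values())
    return {
        group: {"count": n, "share": n / total, "under_represented": n / total < min_share}
        for group, n in counts.most_common()
    }


if __name__ == "__main__":
    cohort = (
        [{"age_band": "18-40"}] * 12
        + [{"age_band": "41-65"}] * 7
        + [{"age_band": ">65"}] * 1
    )
    for group, row in subgroup_report(cohort, "age_band").items():
        flag = "  <- under-represented" if row["under_represented"] else ""
        print(f"{group:<8}{row['count']:>4}{row['share']:>8.1%}{flag}")
```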

Action 2: Apply Data-Centric Bias Mitigation Techniques

- Methodology: Instead of only using algorithmic debiasing, focus on improving the data itself. This can involve strategic data collection, augmentation of underrepresented groups, and re-weighting data samples.

- Protocol: For an underrepresented subgroup, employ data augmentation techniques specific to that domain (e.g., synthetic data generation) to increase its presence in the training set. Alternatively, use sampling strategies to ensure a more balanced batch composition during training.
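
The re-weighting and balanced-sampling options can be sketched as follows, assuming each training example carries a subgroup label. Inverse-frequency weights give every group the same total weight; the same weights can either scale per-sample losses or drive weighted sampling with replacement (in PyTorch, torch.utils.data.WeightedRandomSampler plays a similar role). The group names and batch size are illustrative.

```python
import random
from collections import Counter
from typing import List, Sequence


def inverse_frequency_weights(groups: Sequence[str]) -> List[float]:
    """Per-sample weights so that every group carries the same total weight."""
    counts = Counter(groups)
    n_groups, total = len(counts), len(groups)
    return [total / (n_groups * counts[g]) for g in groups]


def sample_balanced_batch(groups: Sequence[str], batch_size: int, seed: int = 0) -> List[int]:
    """Draw sample indices with replacement, weighted toward rare groups."""
    rng = random.Random(seed)
    weights = inverse_frequency_weights(groups)
    return rng.choices(range(len(groups)), weights=weights, k=batch_size)


if __name__ == "__main__":
    groups = ["common"] * 8 + ["rare"] * 2
    w = inverse_frequency_weights(groups)
    print(w[0], w[-1])  # 0.625 2.5
    batch = sample_balanced_batch(groups, batch_size=1000)
    # Roughly half of the sampled indices should come from the rare group.
    print(sum(groups[i] == "rare" for i in batch) / len(batch))
```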

Experimental Protocols & The Scientist's Toolkit

Detailed Methodology: Confident Learning for Label Error Detection

This protocol is used to identify and correct mislabeled examples in a dataset, a common issue in manually annotated biological data.

- Input: A dataset with features X and (noisy) labels s.

- Cross-Validation: Train a classifier on (X, s) using k-fold cross-validation to generate out-of-sample predicted probabilities P.

- Estimate Joint Distribution: Use P and s to compute the confident joint matrix, which estimates the joint distribution between the given (noisy) labels and the inferred (true) labels.

- Find Label Errors: Identify the examples and their indices that are likely mislabeled based on the confident joint.

- Output: A boolean mask indicating which labels are likely erroneous, and a list of suggested alternative labels.

- Human-in-the-Loop Review: A domain expert (e.g., a biologist) reviews the suggested errors and makes the final correction, updating the dataset.
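
The counting steps can be sketched in pure Python as below. This is a simplified illustration of the confident-joint idea (per-class thresholds, uncalibrated counts, and flagging every off-diagonal example), not cleanlab's implementation; it assumes P holds out-of-sample probabilities from cross-validation. cleanlab adds calibration and several filtering and ranking options (in cleanlab 2.x, cleanlab.filter.find_label_issues(labels, pred_probs)). The function names below are hypothetical.

```python
from typing import List, Optional, Sequence, Tuple

Probs = Sequence[Sequence[float]]


def per_class_thresholds(pred_probs: Probs, labels: Sequence[int], n_classes: int) -> List[float]:
    """t_j = mean predicted probability of class j over examples labeled j."""
    sums, counts = [0.0] * n_classes, [0] * n_classes
    for p, s in zip(pred_probs, labels):
        sums[s] += p[s]
        counts[s] += 1
    # A class with no labeled examples gets an unreachable threshold.
    return [sums[j] / counts[j] if counts[j] else float("inf") for j in range(n_classes)]


def confident_joint(
    pred_probs: Probs, labels: Sequence[int], n_classes: int
) -> Tuple[List[List[int]], List[Optional[int]]]:
    """Count matrix C[given][inferred] plus the inferred label per example (or None)."""
    t = per_class_thresholds(pred_probs, labels, n_classes)
    joint = [[0] * n_classes for _ in range(n_classes)]
    inferred: List[Optional[int]] = []
    for p, s in zip(pred_probs, labels):
        confident = [j for j in range(n_classes) if p[j] >= t[j]]
        if not confident:
            inferred.append(None)  # not confidently assigned to any class
            continue
        j_star = max(confident, key=lambda j: p[j])  # most probable confident class
        joint[s][j_star] += 1
        inferred.append(j_star)
    return joint, inferred


def flag_label_issues(
    pred_probs: Probs, labels: Sequence[int], n_classes: int
) -> Tuple[List[List[int]], List[bool], List[Optional[int]]]:
    """Boolean mask of examples counted off the diagonal, plus suggested labels."""
    joint, inferred = confident_joint(pred_probs, labels, n_classes)
    mask = [j is not None and j != s for j, s in zip(inferred, labels)]
    suggested = [j if flagged else None for j, flagged in zip(inferred, mask)]
    return joint, mask, suggested


if __name__ == "__main__":
    # Toy out-of-sample probabilities P and noisy labels s.
    P = [[0.9, 0.1], [0.8, 0.2], [0.1, 0.9], [0.15, 0.85], [0.1, 0.9], [0.3, 0.7]]
    s = [0, 0, 0, 1, 1, 1]
    joint, mask, suggested = flag_label_issues(P, s, n_classes=2)
    print(joint)      # [[2, 1], [0, 2]]
    print(mask)       # [False, False, True, False, False, False]
    print(suggested)  # [None, None, 1, None, None, None]
```

The flagged examples and suggested labels are inputs to the human-in-the-loop review, not automatic corrections.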

Detailed Methodology: Out-of-Distribution (OOD) Evaluation Set Creation

This protocol tests model robustness and estimates how well the model will transfer to industry data.

- Define OOD Axes: Identify potential sources of distribution shift relevant to your domain (e.g., for histology images: different tissue staining protocols, scanners, or patient demographics).

- Data Sourcing: Actively source a new dataset that varies along one or more of these axes. This should be a separate dataset from your main training and validation sets.

- Annotation: Label this new OOD set with the same procedure as your main dataset to ensure label consistency.

- Benchmarking: Evaluate your trained model on this OOD set. Report performance metrics separately for the in-distribution (ID) test set and the OOD test set. A large performance gap indicates poor transferability and a need for data-centric improvements.
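
Separate ID and OOD reporting can be sketched as below. Accuracy is used only as a placeholder metric, and max_gap is a hypothetical tolerance that each project would set for itself.

```python
from typing import Dict, Sequence


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Fraction of predictions that match the reference labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def id_vs_ood_report(
    id_true: Sequence[int],
    id_pred: Sequence[int],
    ood_true: Sequence[int],
    ood_pred: Sequence[int],
    max_gap: float = 0.05,
) -> Dict[str, object]:
    """Report ID and OOD accuracy separately and flag a large transfer gap."""
    id_acc = accuracy(id_true, id_pred)
    ood_acc = accuracy(ood_true, ood_pred)
    gap = id_acc - ood_acc
    return {
        "id_accuracy": id_acc,
        "ood_accuracy": ood_acc,
        "gap": gap,
        "needs_attention": gap > max_gap,  # max_gap is a hypothetical tolerance
    }


if __name__ == "__main__":
    labels = [1, 0, 1, 1, 0, 0, 1, 0, 1, 1]
    id_pred = [1, 0, 1, 1, 0, 0, 1, 0, 1, 0]   # 9 of 10 correct
    ood_pred = [0, 0, 1, 0, 0, 1, 1, 0, 0, 1]  # 6 of 10 correct
    print(id_vs_ood_report(labels, id_pred, labels, ood_pred))
```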

Research Reagent Solutions

The following table details key tools and conceptual "reagents" essential for implementing Data-Centric AI experiments.

| Research Reagent / Tool | Function in Data-Centric AI |
|---|---|
| Confident Learning Framework | Algorithmically identifies label errors in datasets by estimating the joint distribution of noisy and true labels, enabling high-quality data curation [38]. |
| Data Augmentation Libraries | Systematically increase the size and diversity of training data by applying label-preserving transformations, improving model robustness [38]. |
| Federated Learning Platforms | Enable model training across decentralized data sources without sharing raw data, addressing privacy concerns and expanding data access [40]. |
| Data Profiling & Visualization Tools | Provide statistical summaries and visualizations to understand data distributions, identify biases, and uncover representational gaps [38]. |
| Model Monitoring Dashboards | Track data drift and model performance metrics in real time after deployment, a key component of the Data Maintenance pillar [38]. |


FAQ on Data-Centric AI for Research

We have limited data for a rare disease target. What data-centric techniques can help?

Answer: Data augmentation and synthetic data generation are key strategies within the Training Data Development pillar [38].

- Advanced Augmentation: Move beyond simple rotations and flips and use domain-specific augmentations. For molecular data, this could mean chemically valid structural perturbations; for medical images, it could involve simulating different lighting conditions or scanner artifacts.

- Synthetic Data Generation: Leverage generative models (e.g., VAEs, GANs, Diffusion Models) to create synthetic, labeled data samples that follow the distribution of your limited real data. This can artificially expand your dataset and improve model robustness.

- Transfer Learning with a Data-Centric Twist: Beyond fine-tuning a pre-trained model, use it to inform your data collection and augmentation strategy. Analyze which data points the model finds most confusing and prioritize collecting or generating more data of that type.
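
One common way to quantify "most confusing" is predictive entropy, as used in uncertainty-sampling active learning; that choice is an assumption here, and margin- or disagreement-based scores are alternatives. The sketch assumes a pre-trained model has already produced class probabilities for a pool of unlabeled candidates.

```python
import math
from typing import List, Sequence


def predictive_entropy(probs: Sequence[float]) -> float:
    """Shannon entropy (in nats) of one predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def most_confusing(pred_probs: Sequence[Sequence[float]], top_k: int) -> List[int]:
    """Indices of the top_k candidates with the highest predictive entropy."""
    order = sorted(
        range(len(pred_probs)),
        key=lambda i: predictive_entropy(pred_probs[i]),
        reverse=True,
    )
    return order[:top_k]


if __name__ == "__main__":
    # Hypothetical pre-trained model outputs on an unlabeled candidate pool.
    pool = [[0.95, 0.05], [0.5, 0.5], [0.7, 0.3], [0.99, 0.01]]
    print(most_confusing(pool, top_k=2))  # [1, 2]
```

The selected candidates are the ones to send for expert labeling or to use as seeds for targeted augmentation.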

How do we evaluate model success in a data-centric paradigm?

Answer: Move beyond a single aggregate accuracy metric on a static test set. The Inference Data Development pillar calls for a multi-faceted evaluation strategy [38]:

- Performance on Sliced Data: Report accuracy on semantically meaningful data slices (e.g., by patient subgroup, experimental batch, or disease severity).

- Robustness Metrics: Evaluate performance on OOD and adversarial test sets.

- Calibration: Measure how well the model's predicted probabilities match the true likelihood of correctness, which is critical for risk assessment in drug development.

- Fairness Audits: Use fairness metrics (e.g., demographic parity, equality of opportunity) across different subgroups.
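
Two of these evaluations can be sketched as follows, assuming binary labels and a list of subgroup identifiers per example: expected calibration error (ECE) with equal-width confidence bins, and demographic-parity and equal-opportunity differences reported as the largest gap between any two groups. These are illustrative implementations; maintained versions exist in libraries such as Fairlearn (for example its demographic_parity_difference metric).

```python
from typing import Dict, Hashable, List, Sequence


def expected_calibration_error(
    confidences: Sequence[float], correct: Sequence[int], n_bins: int = 10
) -> float:
    """Weighted mean of |accuracy - mean confidence| over equal-width bins."""
    n = len(confidences)
    ece = 0.0
    for b in range(n_bins):
        lo, hi = b / n_bins, (b + 1) / n_bins
        # Bins are (lo, hi]; a confidence of exactly 0.0 goes in the first bin.
        idx = [i for i, c in enumerate(confidences) if lo < c <= hi or (b == 0 and c == 0.0)]
        if not idx:
            continue
        acc = sum(correct[i] for i in idx) / len(idx)
        conf = sum(confidences[i] for i in idx) / len(idx)
        ece += len(idx) / n * abs(acc - conf)
    return ece


def _max_rate_gap(values_by_group: Dict[Hashable, List[int]]) -> float:
    """Largest difference in mean value (a rate for 0/1 data) between groups."""
    rates = [sum(v) / len(v) for v in values_by_group.values() if v]
    return max(rates) - min(rates)


def demographic_parity_difference(y_pred: Sequence[int], groups: Sequence[Hashable]) -> float:
    """Gap in positive-prediction rate across groups (0 means parity)."""
    by_group: Dict[Hashable, List[int]] = {}
    for p, g in zip(y_pred, groups):
        by_group.setdefault(g, []).append(p)
    return _max_rate_gap(by_group)


def equal_opportunity_difference(
    y_true: Sequence[int], y_pred: Sequence[int], groups: Sequence[Hashable]
) -> float:
    """Gap in true-positive rate (recall on actual positives) across groups."""
    by_group: Dict[Hashable, List[int]] = {}
    for t, p, g in zip(y_true, y_pred, groups):
        if t == 1:  # only actual positives count toward the TPR
            by_group.setdefault(g, []).append(p)
    return _max_rate_gap(by_group)


if __name__ == "__main__":
    print(round(expected_calibration_error([0.9, 0.9, 0.9, 0.9], [1, 1, 1, 0]), 4))  # 0.15
    y_true = [1, 1, 0, 0, 1, 1, 0, 0]
    y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
    groups = ["a"] * 4 + ["b"] * 4
    print(demographic_parity_difference(y_pred, groups))  # 0.5
    print(equal_opportunity_difference(y_true, y_pred, groups))  # 0.5
```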

How can we convince project stakeholders to invest in data-centric practices?

Answer: Frame the argument around risk mitigation, return on investment (ROI), and the critical goal of transferability to industry data.

- Cite Empirical Evidence: Point to the Google study on data cascades [38]: these compounding data issues led to significant technical debt and project failures, yet they are largely preventable with data-centric practices.

- Run a Pilot Study: Demonstrate the value empirically. Take an existing model and, without changing its architecture, show how techniques like label cleaning or data augmentation can boost performance, especially on a test set designed to mimic real-world conditions.

- Highlight the Transferability Link: Emphasize that models trained on high-quality, diverse, and robust data are more likely to work reliably when deployed in a real industry setting, such as a clinical research environment. This reduces the cost and time of post-deployment fixes.

Technical Support Center

Troubleshooting Guides

Guide 1: Addressing Accuracy Degradation After Pruning

Problem: A pruned model for molecular property prediction shows a significant drop in accuracy (e.g., >20% loss as noted in some studies [41]) compared to the original model.

Diagnosis: This is often caused by the aggressive removal of parameters critical for the model's task or insufficient fine-tuning after pruning.

Solution:

- Adopt an Iterative Pruning Strategy: Instead of removing a large percentage of parameters at once, progressively prune the model in smaller steps [42]. A recommended protocol is to prune ≤10% of the weights in a single cycle, followed by a short fine-tuning cycle, repeating until the target sparsity is reached [42] [43].

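- Use a Calibrated Importance Metric: For transformer-based models fine-tuned for drug discovery tasks, simple magnitude-based pruning can discard weights that matter for the downstream task. Consider movement pruning, which scores each weight by how it moves during fine-tuning (the accumulated product of the weight and its loss gradient), retaining weights that move away from zero rather than those that are merely large [42].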

- Implement a Robust Fine-tuning Protocol: After pruning, fine-tune the model on your specific bioassay or molecular dataset. Use a lower learning rate (e.g., 1e-5) and a validation set to monitor for overfitting and ensure performance recovery [44].
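
The iterative schedule can be sketched on a flat list of weights as below. For simplicity, this sketch scores importance by absolute magnitude, recomputed after every cycle; movement pruning would swap in a fine-tuning-movement score at the marked line. The fine_tune callback is hypothetical and stands in for a short training run; PyTorch's torch.nn.utils.prune module offers tensor-level pruning utilities for real models.

```python
from typing import Callable, List, Optional, Sequence, Tuple

FineTuneFn = Callable[[List[float], List[bool]], List[float]]


def iterative_magnitude_prune(
    weights: Sequence[float],
    target_sparsity: float,
    step_fraction: float = 0.1,
    fine_tune: Optional[FineTuneFn] = None,
) -> Tuple[List[float], List[bool], int]:
    """Prune at most step_fraction of all weights per cycle until target_sparsity.

    After each cycle the optional fine_tune callback (hypothetical) may update
    the surviving weights; pruned weights are forced back to zero so they stay
    removed.
    """
    w = list(weights)
    n = len(w)
    target_pruned = round(target_sparsity * n)
    per_cycle = max(1, int(step_fraction * n))
    mask = [True] * n  # True = weight kept
    cycles = 0
    while n - sum(mask) < target_pruned:
        k = min(per_cycle, target_pruned - (n - sum(mask)))
        active = [i for i in range(n) if mask[i]]
        # Importance score: |w|. Replace this key for other criteria.
        for i in sorted(active, key=lambda idx: abs(w[idx]))[:k]:
            mask[i] = False
        w = [x if keep else 0.0 for x, keep in zip(w, mask)]
        if fine_tune is not None:
            w = [x if keep else 0.0 for x, keep in zip(fine_tune(w, mask), mask)]
        cycles += 1
    return w, mask, cycles


if __name__ == "__main__":
    # 20 toy weights with magnitudes 0.1, 0.2, ..., 2.0 and alternating signs.
    toy = [0.1 * (i + 1) * (-1) ** i for i in range(20)]
    pruned, mask, cycles = iterative_magnitude_prune(toy, target_sparsity=0.5)
    print(cycles, sum(mask))  # 5 10
```

With step_fraction=0.1 the schedule removes 10% of the weights per cycle, matching the ≤10% guideline above; the fine-tuning hook is where the low-learning-rate recovery run would go.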

Guide 2: Managing Performance Loss in Quantized Models for Molecular Dynamics

Problem: A quantized model used for molecular dynamics simulations or virtual screening exhibits unstable behavior or poor predictive performance.

Diagnosis: The loss of numerical precision when converting from 32-bit floating point (FP32) to 8-bit integers (INT8) can introduce significant errors, especially in models not trained to handle lower precision [45] [41].

Solution:

- Switch to Quantization-Aware Training (QAT): If post-training quantization (PTQ) fails, simulate quantization inside the training loop so the model learns to tolerate the lower precision; this often preserves much higher accuracy [45] [43]. PyTorch (torch.ao.quantization) and the TensorFlow Model Optimization Toolkit (for models deployed with TensorFlow Lite) provide QAT APIs.

- Apply Mixed-Precision Quantization: Do not quantize all layers uniformly. Identify sensitive layers (e.g., the final classification head) and keep them in higher precision (FP16 or FP32) while quantizing the rest of the model [43].

- Calibrate with a Representative Dataset: For PTQ, the calibration process is crucial. Use a diverse, task-specific subset of your data (e.g., a variety of molecular structures) to determine the optimal scaling factors for quantization [43].
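
Why the calibration set matters can be sketched with symmetric, per-tensor INT8 quantization, where the scale comes from the largest absolute value seen during calibration. This min/max-style calibration is an assumption chosen for clarity; framework calibrators often use histogram- or percentile-based ranges instead. The activation values and calibration sets are toy data.

```python
from typing import List, Sequence


def calibrate_scale(calibration_values: Sequence[float], num_bits: int = 8) -> float:
    """Symmetric per-tensor scale from the max |value| seen in calibration data."""
    qmax = 2 ** (num_bits - 1) - 1  # 127 for INT8
    max_abs = max(abs(v) for v in calibration_values)
    return max_abs / qmax if max_abs > 0 else 1.0


def quantize(values: Sequence[float], scale: float, num_bits: int = 8) -> List[int]:
    """Map floats to integers in [-qmax, qmax], clipping anything outside the range."""
    qmax = 2 ** (num_bits - 1) - 1
    return [max(-qmax, min(qmax, round(v / scale))) for v in values]


def dequantize(q: Sequence[int], scale: float) -> List[float]:
    """Map integers back to approximate float values."""
    return [x * scale for x in q]


if __name__ == "__main__":
    activations = [-1.5, 0.33, 1.99]  # hypothetical layer activations at inference
    representative = [i / 10 for i in range(-20, 21)]  # spans the real range [-2, 2]
    narrow = [-0.1, 0.1]  # unrepresentative calibration set
    for name, calib in [("representative", representative), ("narrow", narrow)]:
        s = calibrate_scale(calib)
        restored = dequantize(quantize(activations, s), s)
        err = max(abs(a - b) for a, b in zip(activations, restored))
        print(f"{name:>14}: scale={s:.5f} max_error={err:.4f}")
```

With the narrow calibration set, most activations are clipped to the edge of the INT8 range, so the reconstruction error is orders of magnitude larger than with the representative set.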

Guide 3: Student Model Failing to Learn from Teacher in Distillation

Problem: In a knowledge distillation setup for a biomedical knowledge graph, the small student model does not converge or performs far worse than the teacher model.

Diagnosis: The performance gap may be too large, the student architecture may be inadequate, or the knowledge transfer method may be unsuitable for the task [46] [41].

Solution: