Beyond the Balance: Advanced Strategies for Tackling Imbalanced Data in ADMET Machine Learning

This article provides a comprehensive guide for drug discovery scientists and computational researchers on overcoming the pervasive challenge of imbalanced datasets in ADMET machine learning.


Abstract

This article provides a comprehensive guide for drug discovery scientists and computational researchers on overcoming the pervasive challenge of imbalanced datasets in ADMET machine learning. We explore the foundational causes of data imbalance and its impact on model performance, then delve into advanced methodological solutions including sophisticated data splitting strategies, algorithmic innovations, and feature engineering techniques. The guide further offers practical troubleshooting and optimization protocols for real-world implementation and concludes with rigorous validation frameworks and comparative analyses of emerging approaches like federated learning and multimodal integration. By synthesizing the latest research and benchmarks, this resource aims to equip professionals with the knowledge to build more accurate, robust, and generalizable ADMET prediction models, ultimately reducing late-stage drug attrition.

Understanding the Root Causes and Impact of Data Imbalance in ADMET Prediction

FAQs on Data Imbalance in ADMET Modeling

Q1: What constitutes a "severely" imbalanced dataset in ADMET research, and why is it a problem? A severely imbalanced dataset in ADMET research is one where the class of interest (e.g., toxic compounds) is vastly outnumbered by the other class (e.g., non-toxic compounds). This isn't defined by a fixed ratio, but by its practical impact: when standard training batches may contain few or no examples of the minority class, preventing the model from learning its patterns [1]. The core problem is that standard machine learning algorithms, which aim to maximize overall accuracy, become biased towards predicting the majority class. This leads to poor performance on the minority class, which is often the most critical to identify (e.g., hepatotoxic compounds) [2] [3]. Relying on accuracy in such cases is misleading; a model that always predicts "non-toxic" would have high accuracy but be useless for identifying toxic risks [4].

Q2: Beyond the class ratio, what other factors define data imbalance for an ADMET endpoint? A class ratio is just the starting point. A comprehensive definition of imbalance must also consider:

- Data Quality and Noise: Noisy data, often stemming from heterogeneous experimental sources or lab conditions, can obscure the true signal of the minority class, making it harder for a model to learn effectively [2].

- Feature Quality and Redundancy: The presence of irrelevant or highly correlated molecular descriptors can dilute the predictive signal. Feature selection methods are crucial to identify the most informative descriptors for the specific ADMET endpoint [5].

- Class Overlap and Separability: The degree to which minority and majority class examples are intermixed in the feature space is critical. High overlap, where compounds with similar structures have different toxicities, makes the classification task inherently difficult, regardless of the sampling ratio [3].

- Dataset Size and Dimensionality: The absolute number of minority class examples is vital. A 10:1 ratio is manageable with 1,000 minority samples but becomes a severe imbalance with only 10, making reliable pattern learning nearly impossible [1].

Q3: What are the primary methodological strategies to mitigate class imbalance? Strategies can be categorized into data-level, algorithm-level, and advanced architectural approaches.

- Data-Level (External) Methods: These alter the training dataset.

- Oversampling: Increasing the number of minority class examples, e.g., using the Synthetic Minority Over-sampling Technique (SMOTE), which creates synthetic examples rather than duplicating existing ones [2].

- Undersampling: Reducing the number of majority class examples. Augmented Random Undersampling uses feature frequency to inform which majority samples to remove, preserving more information than random removal [2].

- Algorithm-Level (Internal) Methods: These modify the learning algorithm.

- Class Weighting: Assigning a higher cost to misclassifications of the minority class during model training. This is often implemented by setting `class_weight='balanced'` in scikit-learn, which automatically weights classes inversely proportional to their frequencies [5] [4].

- Hybrid Strategies: Techniques like "downsampling and upweighting" combine data-level and algorithm-level approaches. The majority class is downsampled during training to create a balanced batch, but its contribution to the loss function is upweighted to correct for the sampling bias, teaching the model both the feature-label relationship and the true class distribution [1].

- Advanced Architectural Methods: Modern approaches leverage sophisticated machine learning.

- Multitask Learning: Training a single model on multiple related ADMET endpoints simultaneously can improve generalization and mitigate overfitting to the imbalance of any single endpoint [6] [7].

- Graph Neural Networks: Using graph-based representations of molecules, where atoms are nodes and bonds are edges, allows the model to learn task-specific features directly from the molecular structure, often leading to superior performance on imbalanced data [5].
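The "downsampling and upweighting" hybrid strategy listed above can be illustrated with a small, standard-library-only sketch. The function name, the label encoding (0 = majority, 1 = minority), and the downsampling factor are assumptions chosen for illustration, not part of any cited method.

```python
import random

def downsample_and_upweight(labels, factor, seed=0):
    """Keep all minority samples (label 1) and about 1/factor of the
    majority samples (label 0). Returns (kept_indices, sample_weights)."""
    rng = random.Random(seed)
    majority = [i for i, y in enumerate(labels) if y == 0]
    minority = [i for i, y in enumerate(labels) if y == 1]
    n_keep = max(1, len(majority) // factor)
    kept_majority = set(rng.sample(majority, n_keep))
    kept = sorted(minority + list(kept_majority))
    # Each surviving majority sample stands in for `factor` originals,
    # so its loss contribution is scaled up by the same factor.
    weights = [float(factor) if i in kept_majority else 1.0 for i in kept]
    return kept, weights

# Hypothetical 9:1 dataset: 900 non-toxic (0) and 100 toxic (1) compounds.
labels = [0] * 900 + [1] * 100
kept, weights = downsample_and_upweight(labels, factor=9)
n_majority_kept = sum(1 for i in kept if labels[i] == 0)
effective_majority = sum(w for i, w in zip(kept, weights) if labels[i] == 0)
```

The returned weights would typically be passed to a training routine as per-sample weights, so the batch is balanced while the weighted class totals still reflect the original distribution.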

Q4: A standard model trained on our imbalanced DILI data has high accuracy but poor recall for toxic compounds. What is a robust validation framework? When dealing with imbalanced ADMET data like Drug-Induced Liver Injury (DILI), a single metric like accuracy is insufficient. A robust validation framework should include:

- Multiple Threshold-Invariant Metrics:

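- Area Under the ROC Curve (AUC): Measures the model's ability to distinguish between classes across all possible classification thresholds. Its value does not depend on class prevalence, which also means it can look optimistic when the minority class is very rare, so it is best reported alongside AUPRC [2] [3].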

- Area Under the Precision-Recall Curve (AUPRC): Often more informative than AUC for imbalanced datasets, as it focuses on the performance of the positive (minority) class.

- Threshold-Dependent Metrics (using a single decision threshold):

- Balanced Accuracy (BA): The average of recall obtained on each class. This prevents the model from being rewarded for only predicting the majority class [3].

- F1-Score: The harmonic mean of precision and recall, providing a single score that balances the two [4].

- Sensitivity (Recall) and Specificity: It is crucial to report both. The goal is to minimize the gap between them, ensuring the model performs well on both the toxic and non-toxic classes [2].
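As a minimal sketch of the threshold-dependent metrics above, the following standard-library code computes them from binary labels (1 = toxic, 0 = non-toxic). The function names and the toy data are illustrative assumptions; scikit-learn offers equivalent functions such as `balanced_accuracy_score` and `f1_score`.

```python
def confusion_counts(y_true, y_pred):
    """Return (tp, tn, fp, fn) for binary labels where 1 is the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp, tn, fp, fn

def imbalance_metrics(y_true, y_pred):
    tp, tn, fp, fn = confusion_counts(y_true, y_pred)
    sensitivity = tp / (tp + fn) if tp + fn else 0.0
    specificity = tn / (tn + fp) if tn + fp else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    denom = precision + sensitivity
    f1 = 2 * precision * sensitivity / denom if denom else 0.0
    return {
        "accuracy": (tp + tn) / len(y_true),
        "sensitivity": sensitivity,
        "specificity": specificity,
        "balanced_accuracy": (sensitivity + specificity) / 2,
        "f1": f1,
    }

# Hypothetical test set: 5 toxic and 95 non-toxic compounds,
# scored by a model that always predicts "non-toxic".
y_true = [1] * 5 + [0] * 95
always_negative = [0] * 100
metrics = imbalance_metrics(y_true, always_negative)
```

For this trivial model, accuracy is 0.95 while balanced accuracy is 0.5 and F1 is 0, which is the gap these metrics are meant to expose.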

The workflow below outlines a principled approach to troubleshooting and improving a model trained on an imbalanced ADMET dataset.

Experimental Protocols for Imbalance Mitigation

Protocol 1: Implementing Class Weights in Logistic Regression

This algorithm-level method is straightforward to implement and is often an effective first intervention.

- Train a Baseline Model: First, train a standard logistic regression model on your imbalanced training set without any class weighting.

- Evaluate Baseline Performance: Calculate key metrics (F1-score, Balanced Accuracy, Sensitivity) on a held-out test set to establish a baseline.

- Apply Balanced Class Weights: Retrain the logistic regression model using the `class_weight='balanced'` parameter. This automatically adjusts weights inversely proportional to class frequencies. The weight for class j is calculated as: `w_j = n_samples / (n_classes * n_samples_j)` [4].

- Re-evaluate and Compare: Compute the same metrics on the same test set using the new model. The performance on the minority class should show significant improvement.
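To make the weighting formula in this protocol concrete, here is a small worked example in plain Python. The helper name and the 950:50 class counts are assumptions for illustration. The arithmetic follows `w_j = n_samples / (n_classes * n_samples_j)` as stated above.

```python
from collections import Counter

def balanced_class_weights(labels):
    """Compute w_j = n_samples / (n_classes * n_samples_j) for each class j."""
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {cls: n_samples / (n_classes * n) for cls, n in counts.items()}

# Hypothetical training set: 950 non-toxic (0) and 50 toxic (1) compounds.
labels = [0] * 950 + [1] * 50
weights = balanced_class_weights(labels)
# weights[0] = 1000 / (2 * 950), roughly 0.526
# weights[1] = 1000 / (2 * 50) = 10.0
```

With these weights, each class contributes the same total weight (500) to the loss, so a missed toxic compound costs about 19 times as much as a missed non-toxic one.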

Protocol 2: Combining SMOTE Oversampling with Random Forest

This data-level method was successfully used to build a high-performance DILI prediction model [2].

- Data Preparation: Split the data into training and test sets. Ensure the test set is left untouched and representative of the original class distribution.

- Apply SMOTE only to Training Data: Use the SMOTE algorithm to synthetically generate new examples of the minority class within the training set only. This prevents data leakage.

- Train Random Forest Classifier: Train a Random Forest model on the newly balanced training dataset. Random Forest is an ensemble method known for its robustness.

- Validate on Original Test Set: Predict on the pristine, imbalanced test set. The study achieving 93% accuracy and 0.94 AUC for DILI used this exact protocol, resulting in a sensitivity of 96% and specificity of 91% [2].
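The core idea behind SMOTE-style oversampling in this protocol can be sketched in a few lines of standard-library Python. This is a simplified illustration and not the reference SMOTE implementation (in practice a library such as imbalanced-learn would be used). The function name, `k`, and the two-descriptor toy data are assumptions.

```python
import random

def smote_like(minority, n_new, k=3, seed=0):
    """Create n_new synthetic points by interpolating between a randomly
    chosen minority sample and one of its k nearest minority neighbours."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # Rank the other minority samples by squared Euclidean distance.
        neighbours = sorted(
            (p for p in minority if p is not base),
            key=lambda p: sum((a - b) ** 2 for a, b in zip(base, p)),
        )[:k]
        neighbour = rng.choice(neighbours)
        gap = rng.random()  # position along the segment base -> neighbour
        synthetic.append([a + gap * (b - a) for a, b in zip(base, neighbour)])
    return synthetic

# Hypothetical TRAINING data only; the held-out test set is never resampled.
train_majority = [[float(i), float(i % 7)] for i in range(40)]
train_minority = [[1.0, 1.0], [1.5, 1.2], [2.0, 0.8], [1.2, 1.9], [1.8, 1.5]]
new_points = smote_like(train_minority, n_new=len(train_majority) - len(train_minority))
balanced_minority = train_minority + new_points
```

Because every synthetic point lies on a segment between two real minority samples, the new data stays inside the region the minority class already occupies.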

The Scientist's Toolkit: Key Reagents & Software

The table below lists essential computational tools for handling imbalanced ADMET data.

| Item Name | Type | Primary Function |

|---|---|---|

| RDKit | Cheminformatics Library | Calculates a wide range of molecular descriptors (1D-3D) and fingerprints (e.g., Morgan fingerprints) from chemical structures, which are essential features for model training [5] [2]. |

| SMOTE | Data Sampling Algorithm | Synthetically generates new examples for the minority class to balance a dataset, helping the model learn minority class patterns without simple duplication [2]. |

| scikit-learn | Machine Learning Library | Provides implementations of key algorithms (SVM, Random Forest, Logistic Regression) with built-in class_weight parameters for imbalance mitigation and tools for model validation [5] [4]. |

| MACCS Keys | Molecular Fingerprint | A fixed-length binary fingerprint indicating the presence or absence of 166 predefined chemical substructures, commonly used as a feature set in toxicity prediction models [2]. |

| Graph Neural Networks (GNNs) | Advanced ML Architecture | Represents molecules as graphs (atoms=nodes, bonds=edges) to learn task-specific features automatically, often achieving state-of-the-art accuracy on imbalanced ADMET endpoints [6] [5]. |

| ADMETlab 2.0/3.0 | Integrated Web Platform | Offers a benchmarked environment for predicting a wide array of ADMET properties, useful for generating additional data or comparing model performance [8] [7]. |

| Mordred | Descriptor Calculation Tool | Calculates a comprehensive set of 2D molecular descriptors, which can be curated and selected to create highly informative feature sets for prediction [7]. |

Technical Support Center: Troubleshooting Imbalanced ADMET Datasets

This technical support center provides solutions for researchers encountering common issues when building predictive machine learning (ML) models for ADMET (Absorption, Distribution, Metabolism, Excretion, and Toxicity) properties. The following guides and FAQs address specific challenges related to imbalanced datasets, a major contributor to model inaccuracy and, consequently, late-stage drug attrition [8] [9].

Frequently Asked Questions (FAQs)

Q1: My ADMET toxicity model has high overall accuracy, but it fails to flag most of the truly toxic compounds. What is the most likely cause?

A1: This is a classic symptom of a highly imbalanced dataset [8] [9]. If your dataset contains, for instance, 95% non-toxic compounds and only 5% toxic ones, a model can achieve 95% accuracy by simply predicting "non-toxic" for every compound. This creates a false sense of security and is a major pitfall in early safety screening. To diagnose this, move beyond simple accuracy and examine metrics like Precision, Recall (Sensitivity), and the F1-score for the minority class (toxic compounds) [5].

Q2: What are the most effective techniques to address a class imbalance in my ADMET dataset?

A2: A multi-pronged approach is often most effective. The optimal strategy can be evaluated by comparing the performance metrics of different methods on your validation set. The table below summarizes the core techniques:

Table: Techniques for Handling Imbalanced ADMET Datasets

| Technique Category | Description | Common Methods | Key Considerations |

|---|---|---|---|

| Algorithmic Approach | Using models that inherently assign a higher cost to misclassifying the minority class. | Cost-sensitive learning; Tree-based algorithms (e.g., Random Forest) | Directly alters the learning process to penalize missing the minority class more heavily [9]. |

| Data-Level Approach | Adjusting the training dataset to create a more balanced class distribution. | Oversampling (e.g., SMOTE); Undersampling | Oversampling creates synthetic examples of the minority class; undersampling removes examples from the majority class [5]. |

| Ensemble Approach | Combining multiple models to improve robustness. | Bagging; Boosting (e.g., XGBoost) | Can be combined with data-level methods to enhance performance on imbalanced data [9]. |

Q3: How can I validate that my "fixed" model is truly reliable for decision-making in lead optimization?

A3: Rigorous validation is critical. Follow this protocol:

- Use Stratified Splitting: Ensure your training, validation, and test sets maintain the original class distribution.

- Employ Robust Metrics: Prioritize metrics like the Matthews Correlation Coefficient (MCC) or the Area Under the Precision-Recall Curve (AUPRC) over accuracy, as they provide a more reliable picture of performance on imbalanced data [5].

- Validate on External Datasets: Test the final model on a completely held-out dataset from a different source (e.g., a public database) to assess its generalizability and avoid overfitting to your lab's data [8] [6].

- Perform Error Analysis: Manually inspect the false negatives—the toxic compounds your model predicted as safe. This analysis is crucial for understanding the model's blind spots and the potential real-world risk [9].
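As an illustration of why the Matthews Correlation Coefficient is preferred over accuracy here, the sketch below computes MCC from confusion-matrix counts. The function name and both sets of counts are hypothetical. Returning 0 when the denominator is zero is a common convention, assumed here.

```python
import math

def mcc_from_counts(tp, tn, fp, fn):
    """Matthews Correlation Coefficient from confusion-matrix counts."""
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return (tp * tn - fp * fn) / denom if denom else 0.0

# Hypothetical test set with 5 toxic and 95 non-toxic compounds.
# Model A always predicts "non-toxic"; model B finds 4 of the 5 toxic compounds.
mcc_trivial = mcc_from_counts(tp=0, tn=95, fp=0, fn=5)
mcc_useful = mcc_from_counts(tp=4, tn=90, fp=5, fn=1)
accuracy_trivial = (0 + 95) / 100
accuracy_useful = (4 + 90) / 100
```

In this toy case the trivial model has the higher accuracy (0.95 versus 0.94) but an MCC of 0, while the model that actually flags toxic compounds scores about 0.57.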

Q4: Our team has generated a large, proprietary dataset of experimental ADMET results. How can we best leverage this with public data to improve model performance?

A4: Integrating multimodal data is a state-of-the-art strategy. The workflow involves:

- Data Curation: Preprocess both your in-house data and public data (e.g., from ChEMBL, PubChem) to ensure consistency in features and endpoints [5].

- Feature Representation: Use advanced molecular descriptors, such as graph-based representations, which are particularly powerful for ML models as they capture complex structural information [5].

- Apply Multitask Learning (MTL): Train a single model to predict several related ADMET endpoints simultaneously. MTL allows the model to learn generalized patterns from the larger pooled dataset, which can significantly improve accuracy and reduce overfitting, especially for imbalanced targets [9].

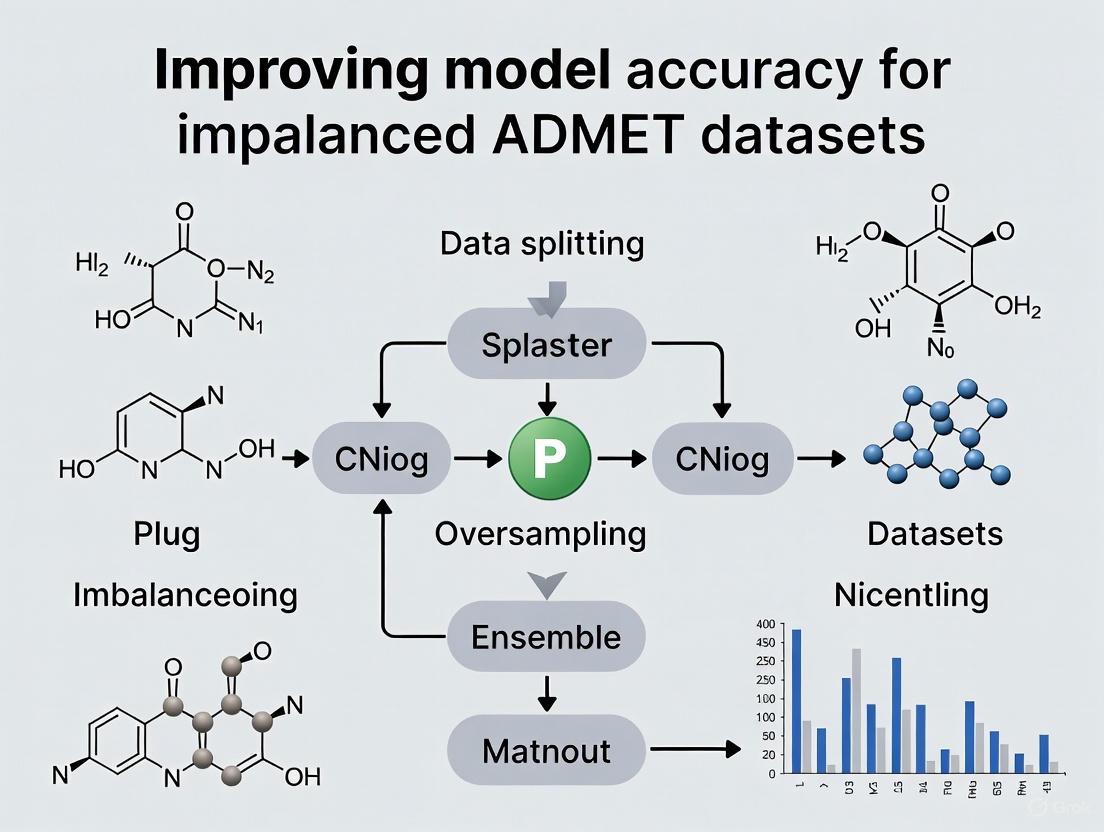

The following workflow diagram illustrates a robust methodology for developing and validating models for imbalanced ADMET data:

Essential Research Reagent Solutions

The following table details key computational tools and resources essential for conducting research on imbalanced ADMET datasets.

Table: Key Research Reagents & Tools for ADMET Modeling

| Tool / Reagent | Type | Primary Function in Research |

|---|---|---|

| Graph Neural Networks (GNNs) | Algorithm | Learns task-specific features from molecular graph structures, achieving high accuracy in ADMET prediction [5] [9]. |

| ADMETlab 2.0 | Software Platform | An integrated online platform for accurate and comprehensive predictions of ADMET properties, useful for benchmarking [8]. |

| Multitask Learning (MTL) Frameworks | Modeling Approach | Improves model generalizability and data efficiency by training a single model on multiple related ADMET endpoints simultaneously [9]. |

| SMOTE | Data Preprocessing Algorithm | A popular oversampling technique that generates synthetic examples for the minority class to balance dataset distribution [5]. |

| ColorBrewer | Design Tool | Provides research-backed, colorblind-safe color palettes for creating clear and accessible data visualizations [10]. |

Troubleshooting Guides and FAQs for Imbalanced ADMET Datasets

Data imbalance in ADMET modeling stems from three interconnected challenges:

- Assay Limitations: Experimental constraints, such as lower detection bounds and high costs, lead to truncated or sparse data distributions [11].

- Public Data Curation: Public datasets often aggregate data from multiple sources, which can introduce inconsistencies in measurement protocols, units, and reporting standards, creating a "mosaic" effect that complicates modeling [12].

- Chemical Space Gaps: The compounds tested in specific assays are often structurally similar (congeneric), leading to a narrow representation of chemical space and poor model performance on structurally novel compounds [13].

Troubleshooting Guide: Addressing Data Imbalance

| Challenge Category | Specific Issue | Impact on Data Balance | Recommended Solution |

|---|---|---|---|

| Assay Limitations | Lower bound of detection in Clint assays (e.g., < 10 µL/min/mg) [11] | Censored Data: Inability to confidently quantify values below a threshold, creating a truncated distribution. | Apply a filter to exclude unreliable low-range measurements from the test set [11]. |

| Assay Limitations | Sparse testing across multiple assays [11] | Missing Data: Not every molecule is tested in every assay, creating an incomplete and uneven data matrix. | Leverage multi-task learning or imputation techniques designed for sparse pharmacological data. |

| Public Data Curation | Inconsistent aggregation from multiple sources [12] | Representation Imbalance: Certain property values or chemical series may be over- or under-represented. | Implement rigorous data standardization and apply domain-aware feature selection [5]. |

| Public Data Curation | Variable experimental protocols and cut-offs [12] | Label Noise: Inconsistent measurements for similar compounds, blurring decision boundaries. | Perform extensive data cleaning, calculate mean values for duplicates, and remove high-variance entries [12]. |

| Chemical Space Gaps | Focus on congeneric series in industrial research [13] | Structural Bias: Models become experts on a narrow chemical space and fail to generalize. | Introduce structurally diverse compounds from public data or use generative models to explore novel space. |

| Chemical Space Gaps | Prevalence of specific molecular fragments | Feature Imbalance: Model predictions are dominated by common substructures. | Use hybrid tokenization (fragments and SMILES) to better capture both common and rare structural features [14]. |

Detailed Experimental Protocols for Improving Model Accuracy

Protocol 1: Curating a High-Quality Public Dataset for Modeling

This methodology is adapted from the curation process used for a large-scale Caco-2 permeability model [12].

Objective: To create a robust, non-redundant dataset from multiple public sources suitable for training predictive ADMET models.

Materials:

- Data Sources: Public datasets (e.g., from published literature on Caco-2 permeability) [12].

- Software: RDKit for molecular standardization and descriptor calculation [12].

- Computing Environment: Standard computational chemistry environment (e.g., Python, KNIME).

Procedure:

- Data Aggregation: Combine datasets from multiple public sources into an initial collection.

- Unit Standardization: Convert all measurements to consistent units (e.g., apparent permeability in 10⁻⁶ cm/s) and apply a logarithmic transformation (base 10) for modeling [12].

- Duplicate Handling: For duplicate molecular entries, calculate the mean and standard deviation. Retain only entries with a standard deviation ≤ 0.3 to minimize uncertainty, using the mean value for modeling [12].

- Molecular Standardization: Use the RDKit `MolStandardize` module to generate consistent canonical tautomer states and final neutral forms, preserving stereochemistry [12].

- Dataset Splitting: Randomly divide the curated, non-redundant records into training, validation, and test sets (e.g., 8:1:1 ratio), ensuring identical distribution across splits. For robust validation, repeat this splitting process multiple times (e.g., 10 splits with different random seeds) [12].
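The duplicate-handling step of this procedure can be sketched as follows, using only the standard library. Several details are assumptions made for illustration: records arrive as (standardized SMILES, Papp in 10⁻⁶ cm/s) pairs, the 0.3 cut-off is applied to the sample standard deviation of the log10 values, and compounds measured once are kept as-is.

```python
import math
from collections import defaultdict
from statistics import mean, stdev

def curate_duplicates(records, max_sd=0.3):
    """Group measurements by structure, log10-transform them, and keep the
    mean for structures whose replicate SD is at most max_sd."""
    grouped = defaultdict(list)
    for smiles, papp in records:
        grouped[smiles].append(math.log10(papp))
    curated = {}
    for smiles, values in grouped.items():
        sd = stdev(values) if len(values) > 1 else 0.0
        if sd <= max_sd:
            curated[smiles] = mean(values)
    return curated

# Hypothetical aggregated records from several sources.
records = [
    ("CCO", 10.0), ("CCO", 12.0),             # consistent replicates: kept
    ("c1ccccc1", 1.0), ("c1ccccc1", 100.0),   # replicates disagree: dropped
    ("CC(=O)O", 5.0),                         # single measurement: kept
]
curated = curate_duplicates(records)
```

Grouping by standardized structure only works as intended after the molecular standardization step, since otherwise the same compound can appear under several SMILES strings.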

Protocol 2: Implementing a Hybrid Tokenization Model for ADMET Prediction

This protocol is based on a novel approach that enhances molecular representation for Transformer-based models [14].

Objective: To improve ADMET prediction accuracy on imbalanced datasets by using a hybrid fragment-SMILES tokenization method.

Materials:

- Model Architecture: Transformer-based model (e.g., MTL-BERT) [14].

- Data: ADMET datasets (e.g., from public challenges like the Antiviral ADMET Challenge) [11].

- Software: Cheminformatics toolkit for molecular fragmentation; deep learning framework (e.g., PyTorch, TensorFlow).

Procedure:

- Fragment Library Generation: Break down the molecules in the training set into smaller sub-structural fragments. Analyze the frequency of each fragment's occurrence [14].

- Frequency Cut-off Application: Create a refined fragment library by including only the fragments that appear above a specific frequency threshold. This prevents the model from being overwhelmed by a vast number of rare fragments [14].

- Hybrid Tokenization: Represent each molecule using a combination of:

- High-frequency fragments from your library.

- Standard SMILES characters for the remaining atomic-level structure [14].

- Model Pre-training & Training: Utilize a pre-training strategy (e.g., one-phase or two-phase) on a large corpus of molecular structures. Fine-tune the pre-trained model on the specific, imbalanced ADMET prediction tasks [14].
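A rough sketch of the frequency cut-off and hybrid tokenization steps is shown below. It is a simplification and not the implementation from [14]: molecules are assumed to be already fragmented into lists of fragment SMILES, the fallback tokenizer is a small regular expression, and all names and the cut-off value are illustrative.

```python
import re
from collections import Counter

# Fallback tokenizer: bracket atoms, two-letter halogens, then single characters.
SMILES_TOKEN = re.compile(r"\[[^\]]+\]|Br|Cl|.")

def build_fragment_library(fragmented_molecules, min_count):
    """Keep fragments that occur in at least min_count training molecules."""
    counts = Counter(
        frag for frags in fragmented_molecules for frag in set(frags)
    )
    return {frag for frag, n in counts.items() if n >= min_count}

def hybrid_tokenize(fragments, library):
    """Emit frequent fragments as single tokens; split the rest into
    character-level SMILES tokens."""
    tokens = []
    for frag in fragments:
        if frag in library:
            tokens.append(frag)
        else:
            tokens.extend(SMILES_TOKEN.findall(frag))
    return tokens

# Hypothetical pre-fragmented training molecules.
training = [
    ["c1ccccc1", "C(=O)O"],
    ["c1ccccc1", "CCl"],
    ["c1ccccc1", "C(=O)O", "N"],
]
library = build_fragment_library(training, min_count=2)
tokens = hybrid_tokenize(["c1ccccc1", "CCl"], library)
# tokens == ["c1ccccc1", "C", "Cl"]
```

Raising `min_count` shrinks the fragment vocabulary and pushes more of each molecule into character-level tokens, which is the trade-off the frequency cut-off controls.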

The following diagram illustrates the logical workflow and decision points for addressing imbalance in ADMET datasets:

The Scientist's Toolkit: Research Reagent Solutions

| Item | Function in Experiment | Application Context |

|---|---|---|

| Caco-2 Cell Lines | In vitro model to assess intestinal permeability of drug candidates [12]. | Gold standard for predicting oral drug absorption. |

| Cryopreserved Hepatocytes | Used in metabolic stability assays, which complement liver-microsome assays (e.g., HLM, MLM), to predict drug clearance [11] [15]. | Critical for evaluating metabolic stability. |

| Williams Medium E with Supplements | Optimized culture medium for maintaining hepatocyte viability and function in vitro [15]. | Essential for plating and incubating hepatocytes. |

| RDKit | Open-source cheminformatics toolkit for molecular standardization, descriptor calculation, and fingerprint generation [12]. | Core software for data curation and feature engineering. |

| Morgan Fingerprints | A type of circular fingerprint that provides a substructure-based representation of a molecule [12]. | Common molecular representation for ML models. |

| Collagen I-Coated Plates | Provides a suitable substratum for cell attachment, crucial for assays using plateable hepatocytes [15]. | Improves cell attachment efficiency in cell-based assays. |

| MTESTQuattro / GaugeSafe | PC-based controller and software for controlling testing systems and analyzing material properties data [16]. | Used in physical properties testing (e.g., tensile testing). |

FAQs and Troubleshooting Guides

This technical support center provides targeted solutions for researchers tackling data imbalance and variability in critical ADMET endpoints, with a special focus on hERG inhibition.

FAQ 1: Why is there high variability in reported hERG IC50 values for the same compound across different studies?

High variability in hERG IC50 values often stems from differences in experimental methodologies rather than the compound's true activity. Two key sources of this variability are the temperature at which the assay is conducted and the voltage pulse protocol used to activate the hERG channel [17] [18].

- Troubleshooting Guide: To ensure highly repeatable and conservative safety evaluations, implement the following standardized protocol [18]:

- Recommended Action: Conduct patch-clamp recordings at near-physiological temperature (approximately 37°C) instead of room temperature.

- Recommended Action: Use a step-ramp voltage protocol for activating hERG K+ channels, as it provides a more accurate evaluation compared to simple step-pulse protocols.

- Example: A study found that the hERG inhibition for the antibiotic erythromycin was underestimated when using a 2-second step-pulse protocol compared to the step-ramp pattern [17]. Adopting this standardized approach yielded IC50 values for a 15-drug panel that differed by less than twofold [18].

FAQ 2: How can we improve machine learning model performance for imbalanced ADMET datasets where inactive compounds vastly outnumber actives?

Imbalanced datasets are a major challenge in ADMET modeling, leading to models that are biased toward the majority class (e.g., non-toxic compounds). Addressing this requires strategies at the data and algorithm levels [5] [19].

- Troubleshooting Guide:

- Data-Level Action: Employ data sampling techniques combined with feature selection. Research indicates that combining feature selection with data sampling can significantly improve prediction performance for imbalanced datasets [5].

- Algorithm-Level Action: Utilize tree-based ensemble models like Random Forests or gradient boosting frameworks (e.g., LightGBM, CatBoost). These have been shown to perform robustly across various ADMET prediction tasks [19].

- Validation Action: Enhance model evaluation by integrating cross-validation with statistical hypothesis testing. This provides a more robust and reliable model assessment than a single hold-out test set, which is crucial in a noisy domain like ADMET [19].
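One way to pair cross-validation with a hypothesis test is a paired sign-flip permutation test on per-fold scores, sketched below. The choice of test is an assumption, since the text above does not name one, and the fold scores are made-up values.

```python
from itertools import product
from statistics import mean

def paired_permutation_pvalue(scores_a, scores_b):
    """Two-sided exact sign-flip test on per-fold score differences."""
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    observed = abs(mean(diffs))
    extreme = 0
    total = 0
    # Under the null hypothesis each difference is equally likely to have
    # either sign, so enumerate every sign assignment.
    for signs in product((1, -1), repeat=len(diffs)):
        total += 1
        if abs(mean(s * d for s, d in zip(signs, diffs))) >= observed - 1e-12:
            extreme += 1
    return extreme / total

# Hypothetical AUPRC values from the same 5 folds for two models.
model_a = [0.71, 0.74, 0.69, 0.73, 0.72]
model_b = [0.66, 0.70, 0.67, 0.69, 0.68]
p_value = paired_permutation_pvalue(model_a, model_b)
```

With only five folds the smallest attainable two-sided p-value is 2/32 = 0.0625, so repeated cross-validation is usually needed before such a test can reach conventional significance levels. Fold scores also share training data and are therefore not fully independent, so the result is best read as indicative.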

FAQ 3: What are the best practices for feature representation when building ML models for ADMET prediction?

The choice of how to represent a molecule numerically (feature representation) is critical and can impact performance more than the choice of the ML algorithm itself [19].

- Troubleshooting Guide:

- Recommended Action: Do not default to concatenating multiple feature representations (e.g., fingerprints + descriptors) without systematic reasoning. Instead, use a structured approach to feature selection [19].

- Recommended Action: For a given dataset, iteratively test different representations and their combinations (e.g., molecular descriptors, Morgan fingerprints, and deep-learned features) to identify the best-performing set [19].

- Note: The optimal feature representation is often dataset-dependent. A one-size-fits-all approach is less effective than a targeted, dataset-specific selection [19].

Standardized Experimental Protocol for Reliable hERG Inhibition Assay

The following methodology, adapted from Kirsch et al., is designed to minimize variability and provide a conservative safety evaluation [17] [18].

- Cell Line: Use HEK293 cells stably transfected with hERG cDNA.

- Patch-Clamp Recording:

- Temperature: Maintain recordings at near-physiological temperature (37°C).

- Voltage Protocol: Apply a step-ramp pattern to activate hERG K+ channels.

- Drug Application: Evaluate a panel of drugs spanning a broad range of potency and pharmacological classes. Perform concentration-response analysis.

- Data Analysis: Calculate IC50 values using conservative acceptance criteria. Data obtained with this protocol show high repeatability with less than a twofold difference in IC50 for a diverse drug panel [18].

Summary of Quantitative Data on hERG Assay Variability

The table below consolidates key findings from the study investigating sources of variability in hERG measurements [17] [18].

| Experimental Variable | Impact on hERG Inhibition Measurement | Example Compound Affected |

|---|---|---|

| Temperature (Room Temp vs. 37°C) | Recording at 37°C rather than room temperature markedly increases measured potency for some drugs [17]. | d,l-sotalol, Erythromycin [17] |

| Stimulus Pattern (2-s step vs. step-ramp) | Step-pulse protocol can underestimate potency compared to step-ramp [17]. | Erythromycin [17] |

| Standardized Protocol (37°C + step-ramp) | Yields highly repeatable data; IC50 values differ < 2x for 15 drugs [18]. | All 15 tested drugs [18] |

Visualization of Workflows and Concepts

The following diagrams, generated with Graphviz, illustrate the core experimental and computational concepts discussed in this case study.

Standardized hERG Assay Workflow

ML Model Development for Imbalanced Data

The Scientist's Toolkit: Research Reagent Solutions

The table below details key materials and computational tools essential for experiments in hERG safety assessment and imbalanced ADMET modeling.

| Item/Tool Name | Function / Application | Relevant Context |

|---|---|---|

| HEK293 cells stably transfected with hERG cDNA | Provides a consistent cellular system for expressing the target hERG potassium channel for patch-clamp assays. [18] | hERG inhibition safety pharmacology. |

| Step-Ramp Voltage Protocol | A specific pattern of electrical stimulation used in patch-clamp to activate hERG channels more accurately for drug testing. [17] [18] | Standardized hERG patch-clamp assay. |

| RDKit Cheminformatics Toolkit | An open-source toolkit for cheminformatics used to calculate molecular descriptors and fingerprints for ML models. [19] | Feature generation for ADMET prediction models. |

| Therapeutics Data Commons (TDC) | A public resource providing curated benchmarks and datasets for ADMET-associated properties to train and validate ML models. [19] | Accessing standardized ADMET datasets. |

| CETSA (Cellular Thermal Shift Assay) | A method for validating direct drug-target engagement in intact cells and native tissues, providing system-level validation. [20] | Mechanistic confirmation of target binding in complex biological systems. |

Methodological Arsenal: Techniques and Algorithms for Robust ADMET Modeling

In machine learning for drug discovery, how you split your dataset into training, validation, and test sets is a critical determinant of your model's real-world usefulness. A poor splitting strategy can lead to data leakage, where a model performs well in testing but fails prospectively because it was evaluated on data that was not sufficiently independent from its training data. For ADMET (Absorption, Distribution, Metabolism, Excretion, and Toxicity) properties, which often feature imbalanced and heterogeneous endpoints, rigorous data splits are essential for accurate benchmarking and ensuring models can generalize to novel chemical matter. [21]

This guide addresses common implementation challenges and provides troubleshooting advice for robust data-splitting strategies.

Frequently Asked Questions & Troubleshooting

Q1: My model's performance drops drastically when I switch from a random split to a scaffold split. Is this normal, and what does it mean?

- Answer: Yes, this is an expected and well-documented behavior. A significant performance drop indicates that your model, trained on a random split, was likely overfitting to local chemical patterns within specific scaffolds. It was memorizing structural features rather than learning generalizable structure-property relationships. [21] [22] Scaffold splitting provides a more realistic and challenging assessment by ensuring your model is tested on entirely new chemical series. [23] If performance drops, it suggests the model's applicability domain is limited, and you should not trust its predictions on truly novel compounds.

Q2: I'm using Bemis-Murcko scaffolds for splitting, but my test set contains structures that are very similar to ones in the training set. Why is this happening?

- Answer: This is a key limitation of the standard Bemis-Murcko method. It can generate an overly large number of fine-grained scaffolds that don't align with a medicinal chemist's concept of a "chemical series." [23] For example, a single med-chem paper (representing one series) may contain dozens of unique Murcko scaffolds. [23] This can leave structurally related compounds across the train/test boundary.

- Troubleshooting: Consider using a more sophisticated scaffold-finding algorithm that groups related substructures into series, or switch to a cluster split based on molecular fingerprints, which can provide a more holistic measure of structural similarity. [21] [23]
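The cluster-split idea can be sketched with a simple leader-style clustering on Tanimoto similarity. This is a minimal illustration under stated assumptions: fingerprints are represented as Python sets of on-bit indices (in practice they might be derived from Morgan fingerprints), the 0.5 threshold is arbitrary, and `leader_cluster` and `hold_out_clusters` are hypothetical helper names rather than functions from any library.

```python
def tanimoto(a, b):
    """Tanimoto similarity between two fingerprints given as sets of on-bits."""
    union = len(a | b)
    return len(a & b) / union if union else 0.0


def leader_cluster(fps, threshold=0.5):
    """Assign each fingerprint to the first cluster leader it resembles.

    A molecule that matches no existing leader starts a new cluster.
    Returns one integer cluster label per fingerprint.
    """
    leaders, labels = [], []
    for fp in fps:
        for cid, leader in enumerate(leaders):
            if tanimoto(fp, leader) >= threshold:
                labels.append(cid)
                break
        else:
            leaders.append(fp)
            labels.append(len(leaders) - 1)
    return labels


def hold_out_clusters(labels, test_frac=0.2):
    """Move whole clusters (smallest first) into the test set until it is full."""
    members = {}
    for i, cid in enumerate(labels):
        members.setdefault(cid, []).append(i)
    target = test_frac * len(labels)
    test = []
    for cid in sorted(members, key=lambda c: (len(members[c]), c)):
        if len(test) >= target:
            break
        test.extend(members[cid])
    test_set = set(test)
    train = [i for i in range(len(labels)) if i not in test_set]
    return train, sorted(test)


# Toy fingerprints: two pairs of close analogues and one singleton
fps = [{1, 2, 3, 4}, {1, 2, 3, 5}, {10, 11, 12}, {10, 11, 13}, {20}]
labels = leader_cluster(fps)
train_idx, test_idx = hold_out_clusters(labels, test_frac=0.2)
```

Filling the test set with the smallest clusters first is only one possible design choice; it biases the test set toward singletons, so shuffling the cluster order may be preferable for some benchmarks.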

Q3: I want to use a temporal split to simulate real-world use, but my public dataset doesn't have reliable timestamps. What can I do?

- Answer: This is a common problem. As a robust alternative, you can use the SIMPD (Simulated Medicinal Chemistry Project Data) algorithm. SIMPD uses a genetic algorithm to split public datasets in a way that mimics the property and activity shifts observed between early and late compounds in real drug discovery projects. It is designed to be more realistic than random splits and less pessimistic than neighbor/scaffold splits. [22] [24]

Q4: When I use a multitask model for my imbalanced ADMET data, performance on my smaller tasks gets worse. How can I prevent this "negative transfer"?

- Answer: Negative transfer occurs when tasks with little relatedness or imbalanced data volumes interfere with each other during training. [21]

- Troubleshooting:

- Implement Task-Weighted Loss: Scale each task's contribution to the total loss inversely with its training set size or by its difficulty. This prevents larger tasks from dominating the learning process. [21]

- Use Adaptive Optimizers: Employ methods like AIM (Adaptive Inference Model) that learn to mediate destructive gradient interference between tasks. [21]

- Re-evaluate Task Grouping: The benefits of multitask learning are highest when endpoints are chemically or biologically related. Integrating hundreds of weakly related endpoints can saturate or degrade performance. Be selective about which tasks to model together. [21]
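The task-weighted loss from the first bullet can be sketched as follows. This is a minimal illustration, assuming inverse-size weights normalized to a mean of 1; the endpoint names, sizes, and loss values are hypothetical, and a real training loop would compute the per-task losses from model outputs.

```python
def inverse_size_weights(task_sizes):
    """Weight each task inversely to its training-set size, normalized to mean 1."""
    inverse = {task: 1.0 / n for task, n in task_sizes.items()}
    scale = len(inverse) / sum(inverse.values())
    return {task: w * scale for task, w in inverse.items()}


def weighted_multitask_loss(task_losses, weights):
    """Combine per-task losses, each already averaged over its labeled samples."""
    return sum(weights[task] * loss for task, loss in task_losses.items())


# Hypothetical endpoints and training-set sizes, for illustration only
sizes = {"solubility": 1000, "dili": 250}
weights = inverse_size_weights(sizes)

# Hypothetical per-task losses from one training step
total_loss = weighted_multitask_loss({"solubility": 0.30, "dili": 0.60}, weights)
```

With these numbers the smaller task receives four times the weight of the larger one, so its gradient contribution is not drowned out simply because it has fewer labels.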

Q5: How do I choose the right splitting strategy for my specific goal?

- Answer: The choice of split should mirror your model's intended application. The following table summarizes the core strategies and their uses.

| Splitting Strategy | Best Used For | Key Advantage | Primary Limitation |
|---|---|---|---|
| Random Split | Initial model prototyping and benchmarking against simple baselines. | Simple to implement; maximizes data usage. | Highly optimistic; grossly overestimates prospective performance. [22] |
| Scaffold Split | Evaluating model generalizability to novel chemical scaffolds/series. | Tests generalization to new chemotypes; identifies systematic model failures. [21] [23] | Can be overly pessimistic; standard Murcko scaffolds may not reflect true chemical series. [23] |
| Temporal Split | Simulating real-world prospective use and validating model utility over time. | Gold standard for realistic performance estimation; accounts for temporal distribution shifts. [22] [25] | Requires timestamped data, which is often unavailable in public databases. [22] |
| Cluster Split | Ensuring the test set is structurally distinct from the training set. | Provides a robust, structure-based split that is less granular than scaffold splits. | Performance depends on the choice of fingerprint and clustering algorithm. |
| Cold-Split | Multi-instance problems (e.g., Drug-Target Interaction), where one entity type is new. | Tests the model's ability to predict for new entities (e.g., a new drug or a new protein). [26] | Very challenging; requires the model to learn generalized patterns, not just memorize entities. |

Experimental Protocols & Methodologies

Protocol: Implementing a Rigorous Scaffold Split

Principle: Assign all molecules sharing a core Bemis-Murcko scaffold to the same partition (train, validation, or test) to evaluate performance on unseen chemotypes. [21]

Materials:

- Software: RDKit (open-source cheminformatics toolkit).

- Input: A dataset of compounds with validated chemical structures (e.g., SMILES strings).

Method:

- Generate Scaffolds: For every molecule in your dataset, generate its Bemis-Murcko scaffold. The RDKit implementation preserves degree-one atoms with double bonds, which slightly varies from the original algorithm but better captures the scaffold's electronic properties. [23]

- Group by Scaffold: Group all molecules by their identical generated scaffolds.

- Partition Scaffolds: Split the unique scaffolds into train, validation, and test sets (e.g., 80/10/10). It is critical to split on the scaffolds, not the molecules.

- Assign Molecules: Assign all molecules belonging to a scaffold group to the partition assigned to that scaffold.

Troubleshooting: If the split results in a test set that is too small or imbalanced, consider using a scaffold network analysis or a cluster-based method to group similar scaffolds before splitting. [23]
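The four method steps above can be sketched as a greedy group split. This is a minimal sketch, not a reference implementation: `scaffold_split` is a hypothetical helper, the scaffold function is passed in as a parameter (with RDKit it could be something like `MurckoScaffold.MurckoScaffoldSmiles(smiles=smi)` from `rdkit.Chem.Scaffolds`), and the largest-group-first assignment is one common heuristic among several. The toy example uses made-up identifiers instead of real structures so that it runs without RDKit.

```python
from collections import defaultdict


def scaffold_split(molecules, scaffold_fn, frac_train=0.8, frac_valid=0.1):
    """Return (train_idx, valid_idx, test_idx); no scaffold spans two partitions."""
    # Steps 1-2: compute a scaffold key per molecule and group indices by it
    groups = defaultdict(list)
    for i, mol in enumerate(molecules):
        groups[scaffold_fn(mol)].append(i)

    # Step 3: partition the scaffold groups, largest first (ties by key)
    ordered = sorted(groups.items(), key=lambda kv: (-len(kv[1]), kv[0]))
    train_cap = frac_train * len(molecules)
    valid_cap = frac_valid * len(molecules)

    # Step 4: every molecule follows its scaffold group
    train, valid, test = [], [], []
    for _, idx in ordered:
        if len(train) + len(idx) <= train_cap:
            train.extend(idx)
        elif len(valid) + len(idx) <= valid_cap:
            valid.extend(idx)
        else:
            test.extend(idx)
    return train, valid, test


# Toy data: the prefix before "-" stands in for a Bemis-Murcko scaffold
molecules = ["A-1", "A-2", "A-3", "A-4", "A-5", "A-6", "B-1", "B-2", "C-1", "D-1"]
toy_scaffold = lambda mol_id: mol_id.split("-")[0]
train_idx, valid_idx, test_idx = scaffold_split(molecules, toy_scaffold)
```

Because whole groups are assigned at once, the realized fractions only approximate 80/10/10, and large scaffolds tend to land in the training set. Checking the class balance of each partition afterwards is worthwhile on imbalanced endpoints.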

Protocol: Simulating a Temporal Split with SIMPD

Principle: When real timestamp data is unavailable, use the SIMPD algorithm to create splits that mimic the evolution of a real-world drug discovery project. [22] [24]

Materials:

- Software: SIMPD code (available from GitHub.com/rinikerlab/moleculartimeseries under an open-source license).

- Input: A dataset of compounds with associated activity/property values.

Method:

- Data Curation: Prepare your dataset, ensuring it meets basic quality controls (e.g., keeping compounds with a molecular weight between 250 and 700 g/mol and removing compounds with unreliable measurements). [22] [24]

- Define Objectives: SIMPD uses a multi-objective genetic algorithm. The objectives are pre-defined based on an analysis of real project data and typically include maximizing the difference in molecular properties (e.g., molecular weight, lipophilicity) and activity trends between the early (training) and late (test) sets. [22] [24]

- Run Algorithm: Execute the SIMPD algorithm on your curated dataset to generate the training and test splits.

- Validate Split: Check that the generated splits exhibit the expected property shifts (e.g., later compounds might be more potent or have more optimized physicochemical properties).
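The final validation step can be sketched as a simple shift check on one property. This is a minimal illustration, assuming a standardized mean difference is an acceptable summary; `property_shift` is a hypothetical helper, the pIC50 values are invented, and SIMPD itself is not invoked here.

```python
from statistics import mean, stdev


def property_shift(early, late):
    """Mean difference (late minus early) in units of the overall standard deviation."""
    overall_sd = stdev(list(early) + list(late))
    return (mean(late) - mean(early)) / overall_sd if overall_sd else 0.0


# Hypothetical pIC50 values for the simulated early (train) and late (test) sets
early_pic50 = [5.1, 5.4, 5.0, 5.6, 5.3]
late_pic50 = [6.2, 6.0, 6.5, 6.1, 6.4]
shift = property_shift(early_pic50, late_pic50)
```

A clearly positive value here would be consistent with later compounds being more potent; the same check can be repeated for molecular weight, lipophilicity, or any other tracked property.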

Research Reagent Solutions

The following table lists key computational tools and resources essential for implementing advanced data-splitting strategies.

| Resource Name | Type | Primary Function in Data Splitting |
|---|---|---|
| RDKit | Open-source Cheminformatics Library | Generates molecular structures, fingerprints, and Bemis-Murcko scaffolds; fundamental for scaffold and similarity-based splits. [23] |
| Therapeutics Data Commons (TDC) | Benchmarking Platform | Provides access to curated ADMET datasets with pre-defined, rigorous splits (scaffold, temporal, cold-start) for fair model comparison. [21] [26] |
| SIMPD | Algorithm & Datasets | Generates simulated time splits on public data to mimic real-world project evolution, a robust alternative when true temporal data is missing. [22] [24] |

Workflow Diagrams

Data Splitting Strategy Selection

Multitask Learning with Adaptive Weighting

Frequently Asked Questions (FAQs)

Q1: What is the primary advantage of using Multitask Learning (MTL) over Single-Task Learning (STL) for ADMET prediction?

MTL's primary advantage is its ability to improve prediction accuracy, especially for tasks with scarce labeled data, by leveraging shared information across related ADMET endpoints. Unlike STL, which builds one model per task, MTL solves multiple tasks simultaneously, exploiting commonalities and differences across them. This knowledge transfer compensates for data scarcity in individual tasks and leads to more robust molecular representations [27]. For example, since Cytochrome P450 (CYP) enzyme inhibition can influence both distribution and excretion endpoints, MTL can use these inherent task associations to boost performance on all related predictions [27].

Q2: How can I prevent data leakage and ensure my model generalizes to novel chemical structures?

To ensure rigorous benchmarking and realistic validation, it is crucial to use structured data splitting strategies that prevent cross-task leakage. Instead of random splitting, you should employ:

- Temporal Splits: Partition compounds based on experimental dates or the date they were added to a database. This simulates a real-world, prospective prediction scenario and often provides a less optimistic but more realistic measure of generalization [21].

- Scaffold Splits: Group compounds by their core chemical scaffolds (e.g., Bemis-Murcko scaffolds). This ensures that the training and test sets contain structurally distinct molecules, forcing the model to generalize to novel chemotypes [21]. A robust multitask split must ensure that no compound in the test set has any of its measurements (for any endpoint) present in the training or validation set [21].
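The compound-level rule for multitask splits can be sketched together with a temporal split. This is a minimal sketch under stated assumptions: records are plain dictionaries with hypothetical `compound`, `endpoint`, and ISO-format `date` fields, a compound is dated by its earliest measurement, and the helper names are illustrative only.

```python
def temporal_compound_split(records, test_frac=0.2):
    """Split measurement records so the newest compounds form the test set.

    All measurements of a compound stay together, whatever the endpoint.
    """
    first_seen = {}
    for rec in records:
        cid = rec["compound"]
        if cid not in first_seen or rec["date"] < first_seen[cid]:
            first_seen[cid] = rec["date"]
    # ISO dates (YYYY-MM-DD) sort chronologically as plain strings
    ordered = sorted(first_seen, key=lambda c: (first_seen[c], c))
    n_test = max(1, round(test_frac * len(ordered)))
    test_ids = set(ordered[-n_test:])
    train = [r for r in records if r["compound"] not in test_ids]
    test = [r for r in records if r["compound"] in test_ids]
    return train, test


def leaked_compounds(train, test):
    """Compounds with measurements on both sides of the split (should be empty)."""
    return {r["compound"] for r in train} & {r["compound"] for r in test}


# Hypothetical records: compound C3 is measured on two endpoints
records = [
    {"compound": "C1", "endpoint": "hERG", "date": "2019-03-01"},
    {"compound": "C2", "endpoint": "hERG", "date": "2020-06-15"},
    {"compound": "C3", "endpoint": "hERG", "date": "2022-01-10"},
    {"compound": "C3", "endpoint": "CYP3A4", "date": "2022-02-01"},
    {"compound": "C4", "endpoint": "CYP3A4", "date": "2021-09-30"},
    {"compound": "C5", "endpoint": "hERG", "date": "2018-11-20"},
]
train_records, test_records = temporal_compound_split(records, test_frac=0.2)
leaks = leaked_compounds(train_records, test_records)
```

Splitting on compounds rather than on individual measurements is what keeps both of C3's endpoints on the same side of the boundary.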

Q3: My GNN model is biased towards majority classes in an imbalanced ADMET dataset. What are effective mitigation strategies?

Class imbalance is a common issue where GNNs become biased toward classes with more labeled data. To address this:

- Unified Structural and Semantic Learning: Implement frameworks like Uni-GNN that extend message passing beyond immediate structural neighbors to include semantically similar nodes. This helps propagate discriminative information for minority classes throughout the entire graph, alleviating the "under-reaching problem" [28].

- Balanced Pseudo-Labeling: Employ a mechanism to generate pseudo-labels for unlabeled nodes in a class-balanced manner. This strategically augments the pool of labeled instances for minority classes, providing more training signals [28].

- Topology-Aware Re-weighting: Use methods that assign higher importance weights to labeled nodes from minority classes while also considering the graph connectivity, which can be more effective than traditional re-weighting [28].

Q4: How do I select the best molecular representation (features) for my ligand-based ADMET model?

The choice of molecular representation significantly impacts model performance. A structured approach is recommended:

- Systematic Evaluation: Do not arbitrarily concatenate multiple representations. Instead, iteratively evaluate different feature sets—such as classical RDKit descriptors, Morgan fingerprints, and deep-learned embeddings—to identify which combination works best for your specific dataset and task [19].

- Feature Selection: Use methods like filter, wrapper, or embedded techniques to identify the most relevant molecular descriptors. The quality and relevance of features are often more important than the quantity [5].

- Model-Specific Tuning: The optimal feature representation can be highly dataset- and model-dependent. For example, random forests may perform well with certain fingerprints, while graph-based models inherently learn from the molecular graph structure [19].

Troubleshooting Guides

Issue 1: Poor Multitask Model Performance Due to Task Interference

Problem: Your MTL model is performing worse than individual STL models, indicating negative transfer where unrelated tasks are interfering with each other.

Diagnosis: This occurs when the selected auxiliary tasks are not sufficiently related to the primary task, or when there is destructive gradient interference during training [21] [27].

Solution: Implement an adaptive task selection and weighting strategy.

- Quantify Task Relatedness: Build a task association network. Measure the relatedness between two tasks (α and β) using metrics like label agreement for highly similar compounds:

$$R = \frac{\max\{S(\alpha,\beta),\, D(\alpha,\beta)\}}{S(\alpha,\beta) + D(\alpha,\beta)}$$

where S and D indicate agreement and disagreement for compound pairs with high Tanimoto similarity [21].

- Select Optimal Auxiliaries: Use algorithms that combine status theory and maximum flow within the task association network to adaptively select the most beneficial auxiliary tasks for your primary task of interest [27].

- Apply Adaptive Loss Weighting: During training, use a loss function that dynamically weights each task's contribution. For example, use the QW-MTL method, which scales each task's loss via

$$w_t = r_t^{\,\mathrm{softplus}(\log \beta_t)}$$

where r_t is the task's data ratio and β_t is a learnable parameter [21]. This balances the influence of tasks with different data volumes and difficulties.
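The two formulas in this solution can be evaluated directly, which helps when sanity-checking an implementation. The sketch below is a numerical illustration only: the agreement counts are invented, β_t is passed as a fixed float rather than a trainable parameter, and the exact definition of the data ratio r_t is left to the caller because it is not spelled out here.

```python
import math


def task_relatedness(n_agree, n_disagree):
    """R = max(S, D) / (S + D) for counts of agreeing and disagreeing similar pairs."""
    total = n_agree + n_disagree
    return max(n_agree, n_disagree) / total if total else 0.0


def softplus(x):
    """softplus(x) = log(1 + exp(x))."""
    return math.log1p(math.exp(x))


def qw_weight(data_ratio, beta):
    """w_t = r_t ** softplus(log(beta_t)), with beta_t > 0."""
    return data_ratio ** softplus(math.log(beta))


# Invented counts: 8 similar compound pairs agree across the two tasks, 2 disagree
relatedness = task_relatedness(8, 2)

# With beta = e - 1 the exponent softplus(log(beta)) equals log(e) = 1
weight = qw_weight(0.5, beta=math.e - 1)
```

Two properties follow from the definitions: R always lies between 0.5 and 1, and because softplus(log β) simplifies to log(1 + β), the exponent stays positive for any β > 0.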

Issue 2: Handling Highly Imbalanced Datasets in Graph-Based Property Prediction

Problem: Your GNN for node classification (e.g., predicting toxic vs. non-toxic compounds) shows high accuracy overall but fails to correctly identify minority class instances (e.g., toxic compounds).

Diagnosis: GNNs suffer from neighborhood memorization and under-reaching for minority classes, meaning they cannot effectively propagate information from the few labeled nodes [28].

Solution: Adopt a unified GNN framework that integrates structural and semantic connectivity.

- Build Dual Connectivity Graphs:

- The Structural Graph is your original molecular graph with atoms as nodes and bonds as edges.

- Construct a Semantic Graph by connecting nodes (molecules) that have similar embeddings, calculated using a metric like cosine similarity.

- Implement Unified Message Passing: In each GNN layer, perform message passing separately on both the structural and semantic graphs. This allows a node to receive information from both its direct structural neighbors and semantically similar nodes across the graph, vastly improving the flow of information for minority classes [28].

- Augment with Balanced Pseudo-Labels: Use the model's confident predictions to generate pseudo-labels for unlabeled nodes. Sample these pseudo-labels in a class-balanced way to artificially increase the number of labeled instances for minority classes and add them back to the training set [28].
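Two of the steps above, building the semantic graph and sampling pseudo-labels in a class-balanced way, can be sketched in plain Python. This is a minimal sketch under stated assumptions and not the Uni-GNN implementation: embeddings are short lists of floats, the graph uses a k-nearest-neighbour rule with k = 1, the pseudo-labeling handles only the binary case, and all names and thresholds are hypothetical. The unified message passing itself is not shown.

```python
import math
from collections import defaultdict


def cosine(u, v):
    """Cosine similarity between two embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def semantic_edges(embeddings, k=1):
    """Connect each node to its k most cosine-similar other nodes (undirected)."""
    edges = set()
    for i, u in enumerate(embeddings):
        scored = sorted(
            ((cosine(u, v), j) for j, v in enumerate(embeddings) if j != i),
            reverse=True,
        )
        for _, j in scored[:k]:
            edges.add((min(i, j), max(i, j)))
    return edges


def balanced_pseudo_labels(probs, min_conf=0.8):
    """Pick equally many confident pseudo-labels per class (binary case).

    probs maps an unlabeled node id to its predicted probability of class 1.
    """
    by_class = defaultdict(list)
    for node, p in probs.items():
        label, conf = (1, p) if p >= 0.5 else (0, 1.0 - p)
        if conf >= min_conf:
            by_class[label].append((conf, node))
    if len(by_class) < 2:
        return {}  # no confident candidates for one class: add nothing
    per_class = min(len(items) for items in by_class.values())
    chosen = {}
    for label, items in by_class.items():
        for _, node in sorted(items, reverse=True)[:per_class]:
            chosen[node] = label
    return chosen


# Toy embeddings: nodes 0/1 point one way, nodes 2/3 another
embeddings = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
edges = semantic_edges(embeddings, k=1)

# Toy predictions: three confident negatives, one confident positive, one unsure
probs = {"m1": 0.05, "m2": 0.10, "m3": 0.15, "m4": 0.95, "m5": 0.60}
pseudo = balanced_pseudo_labels(probs)
```

Capping every class at the size of the smallest confident group is what prevents the majority class from flooding the augmented training set.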

Diagram: Unified GNN Framework for Class Imbalance

Issue 3: Suboptimal Performance with Ligand-Based Models

Problem: Your ligand-based model (using precomputed molecular features) is underperforming on a held-out test set or external dataset.

Diagnosis: The issue may stem from poor feature representation, inadequate model selection, or a failure to generalize to data from different sources.

Solution: Follow a structured model and feature optimization protocol.

- Data Cleaning and Curation:

- Standardize SMILES representations and remove inorganic salts and organometallic compounds.

- Extract the organic parent compound from salt forms.

- Adjust tautomers for consistent functional group representation and remove duplicates with inconsistent property values [19].

- Systematic Feature and Model Selection:

- Iteratively train and evaluate different models (e.g., Random Forest, LightGBM, SVM, MPNN) using various feature sets (e.g., RDKit descriptors, Morgan fingerprints) and their combinations.

- Perform hyperparameter tuning for the chosen model architecture in a dataset-specific manner [19].

- Robust Statistical Evaluation:

- Use cross-validation combined with statistical hypothesis testing (e.g., paired t-tests) to confirm that performance improvements from optimization steps are statistically significant, not just lucky splits [19].

- External Validation:

- Finally, evaluate the optimized model's performance on a test set from a completely different data source to simulate a practical application and truly assess its generalizability [19].
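The statistical evaluation step can be sketched with a paired t statistic computed on per-fold scores. This is a minimal illustration using only the standard library: the fold scores are invented, and `paired_t_statistic` is a hypothetical helper that returns the statistic and degrees of freedom but not a p-value (SciPy's `scipy.stats.ttest_rel` provides one if SciPy is available).

```python
import math
from statistics import mean, stdev


def paired_t_statistic(scores_a, scores_b):
    """t statistic and degrees of freedom for matched per-fold scores."""
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    n = len(diffs)
    standard_error = stdev(diffs) / math.sqrt(n)
    if standard_error == 0:
        raise ValueError("all fold differences are identical; t is undefined")
    return mean(diffs) / standard_error, n - 1


# Invented AUC values from the same five folds for two feature sets
auc_optimized = [0.80, 0.82, 0.78, 0.81, 0.79]
auc_baseline = [0.75, 0.78, 0.74, 0.77, 0.76]
t_stat, dof = paired_t_statistic(auc_optimized, auc_baseline)
```

For 4 degrees of freedom the two-sided 5% critical value is about 2.78, so a statistic well above that would count as significant under the usual assumptions. Cross-validation folds share training data, which tends to make this test optimistic, so the result is best read as supporting evidence rather than proof.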

Experimental Protocols & Data

Protocol 1: Implementing a Multi-Task Graph Learning Framework (MTGL-ADMET)

This protocol outlines the methodology for building a multi-task graph learning model that adaptively selects auxiliary tasks to boost performance on a primary ADMET task [27].

Data Preparation and Splitting:

- Obtain a multi-task ADMET dataset with multiple property endpoints (e.g., a public dataset from TDC).

- Apply a scaffold split to partition the data into training, validation, and test sets (e.g., 80:10:10 ratio) to ensure evaluation on novel chemical structures. Repeat this process with different random seeds for robust evaluation.

Adaptive Auxiliary Task Selection:

- Build Task Association Network: Train single-task models (STL) and pairwise multi-task models for all possible task pairs.

- Calculate Status: For each task pair (i, j), use the performance results to calculate a "status" value, which quantifies the benefit (or detriment) task j provides to task i.

- Select Optimal Auxiliaries: Model the tasks as a flow network. For a given primary task, use the maximum flow algorithm to identify the set of auxiliary tasks that provide the maximum positive transfer.

Model Training and Interpretation:

- Model Architecture: For the selected primary-auxiliary task group, build the MTGL-ADMET model which includes:

- A task-shared atom embedding module (using a GNN).

- A task-specific molecular embedding module that aggregates atom embeddings.

- A primary task-centered gating module to focus learning.

- A multi-task predictor [27].

- Training: Train the model using a task-weighted loss function. Use the validation set for early stopping.

- Interpretation: Analyze the atom aggregation weights from the task-specific molecular embedding module to identify crucial molecular substructures related to each ADMET endpoint.

Diagram: MTGL-ADMET Workflow

Protocol 2: Benchmarking Models with External Data

This protocol tests model robustness by training on one data source and evaluating on another, a key step for assessing practical utility [19].

- Source Dataset Selection: Identify two datasets for the same ADMET endpoint that come from different sources (e.g., a public dataset from TDC and a published in-house dataset such as Biogen's [19]).

- Model Training: Train your optimized model (from Troubleshooting Issue 3) on the entire training set of Data Source A.

- External Validation: Evaluate the trained model directly on the test set from Data Source B. This tests the model's ability to generalize to different experimental conditions or chemical spaces.

- Combined Data Training (Optional): Investigate the effect of combining data by training a new model on a mixture of Data Source A and an increasing amount of Data Source B's training data. Evaluate on Data Source B's test set to see if performance improves.

Performance Data

The following table summarizes quantitative results from a study comparing the MTGL-ADMET model against other single-task and multi-task graph learning baselines [27].

Table: Benchmarking Performance of MTGL-ADMET on Selected ADMET Endpoints

| Endpoint | Metric | ST-GCN | MT-GCN | MGA | MTGL-ADMET |
|---|---|---|---|---|---|
| HIA (Human Intestinal Absorption) | AUC | 0.916 ± 0.054 | 0.899 ± 0.057 | 0.911 ± 0.034 | 0.981 ± 0.011 |
| OB (Oral Bioavailability) | AUC | 0.716 ± 0.035 | 0.728 ± 0.031 | 0.745 ± 0.029 | 0.749 ± 0.022 |
| P-gp Inhibitors | AUC | 0.916 ± 0.012 | 0.895 ± 0.014 | 0.901 ± 0.010 | 0.928 ± 0.008 |

Note: HIA and OB are absorption endpoints, while P-gp inhibition is a distribution-related endpoint. MTGL-ADMET demonstrates superior or competitive performance across these key ADMET properties. The number of auxiliary tasks used for each primary task in MTGL-ADMET is indicated in the original study [27].

Table: Key Computational Tools and Algorithms for ADMET Model Development

| Tool / Algorithm | Type | Primary Function | Application in ADMET Research |
|---|---|---|---|
| Graph Neural Networks (GNNs) | Algorithm | Learns representations from graph-structured data. | Directly models molecules as graphs (atoms=nodes, bonds=edges) for highly accurate property prediction [29] [27]. |
| Therapeutics Data Commons (TDC) | Database | Provides curated, benchmarked datasets for drug discovery. | Source of standardized, multi-task ADMET datasets for fair model training and comparison [21] [19]. |
| RDKit | Software | Open-source cheminformatics toolkit. | Calculates molecular descriptors and fingerprints for feature-based models, and handles molecule standardization [19]. |
| Multitask Graph Learning (MTGL-ADMET) | Algorithm | Adaptive multi-task learning framework. | Boosts prediction on a primary ADMET task by intelligently selecting and leveraging related auxiliary tasks [27]. |
| Uni-GNN Framework | Algorithm | Unified graph learning for class imbalance. | Mitigates bias in GNNs by combining structural and semantic message passing, crucial for imbalanced toxicity datasets [28]. |
| Scaffold Split | Methodology | Data splitting based on molecular Bemis-Murcko scaffolds. | Ensures model evaluation on structurally novel compounds, providing a rigorous test of generalizability [21] [19]. |

Molecular representation learning has emerged as a transformative approach in computational drug discovery, particularly for addressing the challenges of predicting Absorption, Distribution, Metabolism, Excretion, and Toxicity (ADMET) properties. Traditional fingerprint-based methods, while computationally efficient, often struggle with the complexity and imbalanced nature of ADMET datasets. This technical guide explores the transition from fixed molecular fingerprints to adaptive, learned representations that can capture intricate structure-property relationships, ultimately improving prediction accuracy for critical ADMET endpoints [5] [30].

The limitations of traditional approaches have become increasingly apparent as drug discovery tasks grow more sophisticated. Conventional representations like molecular fingerprints and fixed descriptors often fail to capture the subtle relationships between molecular structure and complex biological properties essential for accurate ADMET prediction [30]. Learned representations, particularly those derived from deep learning models, automatically extract molecular features in a data-driven fashion, enabling more nuanced understanding of molecular behavior in biological systems [31].

Technical FAQs & Troubleshooting Guides

FAQ: Fundamental Concepts

Q1: What are the key differences between traditional fingerprints and learned molecular representations?

Traditional fingerprints are predefined, rule-based encodings that capture specific molecular substructures or physicochemical properties as fixed-length binary vectors or numerical values. In contrast, learned representations are generated by deep learning models that automatically extract relevant features from molecular data during training, creating continuous, high-dimensional embeddings that capture complex structural patterns [30] [31].

Table: Comparison of Traditional vs. Learned Molecular Representations

| Feature | Traditional Fingerprints | Learned Representations |
|---|---|---|
| Creation Method | Predefined rules and expert knowledge | Data-driven, learned from molecular structures |
| Flexibility | Fixed, limited adaptability | Adaptive to specific tasks and datasets |
| Information Capture | Explicit substructures and properties | Implicit structural patterns and relationships |
| Examples | ECFP, MACCS keys, molecular descriptors | GNN embeddings, transformer-based representations |
| Performance on Imbalanced Data | Often requires extensive feature engineering | Can learn robust patterns with appropriate techniques |

Q2: Why are learned representations particularly valuable for imbalanced ADMET datasets?

Imbalanced ADMET datasets, where certain property classes are underrepresented, present significant challenges for predictive modeling. Learned representations excel in this context because they can capture hierarchical features—from atomic-level patterns to molecular-level characteristics—that are robust across different data distributions. Advanced architectures like graph neural networks and transformers can learn invariant representations that generalize well even when training data is sparse or unevenly distributed [32] [19].

Q3: What are the main categories of modern molecular representation learning approaches?

Modern approaches primarily fall into three categories: (1) Language model-based methods that treat molecular sequences (e.g., SMILES) as a chemical language using architectures like Transformers; (2) Graph-based methods that represent molecules as graphs with atoms as nodes and bonds as edges, processed using Graph Neural Networks (GNNs); and (3) Multimodal and contrastive learning approaches that combine multiple representation types or use self-supervised learning to capture robust features [30].

Troubleshooting Guide: Common Implementation Challenges

Problem: Poor generalization performance on external validation sets despite high training accuracy.

Solution: This often indicates overfitting to the training distribution or dataset-specific biases. Implement these strategies:

Utilize Hybrid Representations: Combine traditional descriptors with learned features. Studies show that integrating multiple representation types can enhance model robustness. For example, concatenating extended-connectivity fingerprints (ECFP) with graph-based embeddings has demonstrated improved performance across diverse ADMET tasks [19].

Apply Advanced Regularization: Incorporate physical constraints and symmetry awareness. The OmniMol framework implements SE(3)-equivariance to ensure representations respect molecular geometry and chirality, significantly improving generalization [32].

Adopt Multi-Task Learning: Train on multiple related ADMET properties simultaneously. Hypergraph-based approaches that capture relationships among different properties have shown state-of-the-art performance on imperfectly annotated data, leveraging correlations between tasks to enhance generalization [32].

Table: Performance Comparison of Representation Methods on Imbalanced ADMET Data

| Representation Type | BA | F1-Score | AUC | MCC | Key Advantage |
|---|---|---|---|---|---|
| ECFP | 0.72 | 0.69 | 0.75 | 0.41 | Computational efficiency |
| Molecular Descriptors | 0.75 | 0.71 | 0.78 | 0.45 | Interpretability |
| Pre-trained SMILES Embeddings | 0.79 | 0.75 | 0.82 | 0.52 | Transfer learning capability |
| Graph Neural Networks | 0.83 | 0.80 | 0.87 | 0.61 | Structure-awareness |
| Multi-task Hypergraph (OmniMol) | 0.86 | 0.83 | 0.90 | 0.67 | Property relationship modeling |

Problem: Handling inconsistent or imperfectly annotated ADMET data across multiple sources.

Solution: Imperfect annotation is a common challenge in real-world ADMET datasets, where experimental costs mean that property labels are often sparse, partial, and imbalanced [32]. The following strategies help:

Implement Unified Multi-Task Frameworks: Adopt architectures specifically designed for imperfect annotation. The OmniMol framework formulates molecules and properties as a hypergraph, capturing three key relationships: among properties, molecule-to-property, and among molecules. This approach maintains O(1) complexity regardless of the number of tasks while effectively handling partial labeling [32].

Apply Rigorous Data Cleaning Protocols: Standardize molecular representations and remove noise. Follow these established steps:

- Remove inorganic salts and organometallic compounds

- Extract organic parent compounds from salt forms

- Adjust tautomers for consistent functional group representation

- Canonicalize SMILES strings

- De-duplicate with consistency checks (keep first entry if target values are consistent, remove entire group if inconsistent) [19]
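The de-duplication rule in the last step can be sketched as follows. This is a minimal sketch, assuming the SMILES strings have already been canonicalized upstream; `deduplicate` is a hypothetical helper, and the `tolerance` parameter for regression targets is an added assumption (with the default of 0.0, values must match exactly, which suits binary labels).

```python
def deduplicate(records, tolerance=0.0):
    """Keep the first entry of each consistent duplicate group, drop the rest.

    records is a list of (canonical_smiles, value) pairs. A group whose values
    differ by more than `tolerance` is removed entirely.
    """
    groups = {}
    for smiles, value in records:
        groups.setdefault(smiles, []).append(value)
    cleaned = []
    for smiles, values in groups.items():  # dicts preserve insertion order
        if max(values) - min(values) <= tolerance:
            cleaned.append((smiles, values[0]))
    return cleaned


# Toy binary-labeled records: ethanol is duplicated consistently,
# benzene is duplicated with conflicting labels
records = [
    ("CCO", 1),
    ("CCO", 1),
    ("c1ccccc1", 0),
    ("c1ccccc1", 1),
    ("CC(=O)O", 0),
]
cleaned = deduplicate(records)
```

Dropping the whole conflicting group, rather than picking one of its labels, avoids injecting label noise into a minority class that may have very few examples to begin with.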

Utilize Cross-Validation with Statistical Testing: Enhance evaluation reliability by combining k-fold cross-validation with statistical hypothesis testing. This approach provides more robust model comparisons than single hold-out tests, which is particularly important for noisy ADMET domains [19].

Problem: Limited interpretability of models using learned representations.

Solution: While learned representations can function as "black boxes," several strategies can enhance explainability:

Implement Attention Mechanisms: Use models with built-in interpretability features. Graph attention networks can highlight which molecular substructures contribute most to predictions, aligning with traditional structure-activity relationship (SAR) studies [32].

Analyze Representation Topology: Apply Topological Data Analysis (TDA) to understand the geometric properties of feature spaces. Research shows that topological descriptors correlate with model generalizability, providing insights into why certain representations perform better on specific ADMET tasks [31].

Correlate with Known Molecular Descriptors: Project learned embeddings onto traditional chemical descriptor spaces to identify familiar physicochemical properties that the model has learned to emphasize for specific ADMET endpoints [5].

Experimental Protocols & Methodologies

Protocol 1: Benchmarking Representation Methods for ADMET Prediction

Objective: Systematically evaluate different molecular representations on imbalanced ADMET datasets.

Materials:

- Dataset: Curated ADMET properties from public sources (TDC, ADMETlab 2.0)

- Representations: ECFP, molecular descriptors, pre-trained embeddings, GNN representations

- Models: Random Forests, Gradient Boosting, Message Passing Neural Networks

Methodology:

- Data Preparation: Apply standardized cleaning protocols including salt removal, tautomer standardization, and de-duplication [19].

- Feature Generation: Compute multiple representation types for all molecules:

- ECFP (radius=3, 2048 bits)

- RDKit molecular descriptors (standardized)

- Graph embeddings from pre-trained GNN

- SMILES embeddings from chemical language models

- Model Training: Train each model type with different representations using scaffold splitting to ensure proper generalization.

- Evaluation: Assess performance using balanced metrics (Balanced Accuracy, F1-score, AUC, MCC) with cross-validation and statistical significance testing.

Expected Outcomes: Identification of optimal representation-model combinations for specific ADMET property types, understanding of how representation choice affects performance on imbalanced data.
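Three of the balanced metrics named in the evaluation step can be computed directly from confusion-matrix counts, as sketched below. The counts are invented for illustration and `imbalance_metrics` is a hypothetical helper; AUC is omitted because it needs ranked prediction scores rather than hard counts.

```python
import math


def imbalance_metrics(tp, fp, tn, fn):
    """Accuracy, balanced accuracy, F1, and MCC from confusion-matrix counts."""
    sensitivity = tp / (tp + fn) if tp + fn else 0.0
    specificity = tn / (tn + fp) if tn + fp else 0.0
    f1_den = 2 * tp + fp + fn
    mcc_den = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "balanced_accuracy": (sensitivity + specificity) / 2,
        "f1": 2 * tp / f1_den if f1_den else 0.0,
        "mcc": (tp * tn - fp * fn) / mcc_den if mcc_den else 0.0,
    }


# A model that always predicts "non-toxic" on 10 toxic / 90 non-toxic compounds
always_negative = imbalance_metrics(tp=0, fp=0, tn=90, fn=10)

# A model that recovers 8 of the 10 toxic compounds at the cost of 10 false alarms
useful_model = imbalance_metrics(tp=8, fp=10, tn=80, fn=2)
```

The trivial model reaches 90% accuracy yet scores 0.5 balanced accuracy and zero F1 and MCC, while the second model has slightly lower accuracy but clearly better balanced metrics, which is why accuracy alone is a poor guide on imbalanced endpoints.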

Protocol 2: Implementing Multi-Task Learning for Imperfectly Annotated ADMET Data

Objective: Leverage correlations between ADMET properties to improve prediction on sparsely labeled endpoints.

Materials:

- Framework: OmniMol or similar multi-task architecture

- Data: Imperfectly annotated ADMET properties from multiple sources

- Computational Resources: GPU-enabled environment for deep learning

Methodology:

- Hypergraph Construction: Formulate molecules and properties as a hypergraph where each property is a hyperedge connecting its labeled molecules [32].

- Model Configuration: Implement task-routed mixture of experts (t-MoE) backbone with task-specific encoders.

- Physics-Informed Learning: Incorporate SE(3)-equivariance for chirality awareness and geometric consistency.

- Training Strategy: Employ multi-task optimization with adaptive weighting to handle different property scales and annotation densities.

Expected Outcomes: Improved performance on sparsely labeled properties by leveraging correlations with well-annotated tasks, more robust representations that capture underlying physical principles.

Essential Research Reagents & Computational Tools

Table: Key Resources for Molecular Representation Learning

| Resource Category | Specific Tools/Frameworks | Primary Function | Application Context |
|---|---|---|---|
| Traditional Representation | RDKit, OpenBabel | Molecular descriptor calculation and fingerprint generation | Baseline representations, interpretable features |
| Deep Learning Frameworks | PyTorch, TensorFlow, DeepChem | Implementation of neural network architectures | Building custom representation learning models |
| Specialized Molecular ML | Chemprop, DGL-LifeSci, TorchDrug | Pre-built GNN architectures for molecules | Rapid prototyping of graph-based representation learning |
| Multi-Task Learning | OmniMol Framework | Hypergraph-based multi-property prediction | Handling imperfectly annotated ADMET data |
| Benchmarking & Evaluation | TDC (Therapeutics Data Commons), MoleculeNet | Standardized datasets and evaluation metrics | Fair comparison of representation methods |
| Topological Analysis | TopoLearn, Giotto-TDA | Topological Data Analysis of feature spaces | Understanding representation characteristics and modelability |

Workflow Visualization

Molecular Representation Learning Workflow

Solutions for Data Imbalance Challenges

Federated Learning (FL) is a decentralized machine learning paradigm that enables multiple data owners to collaboratively train a model without exchanging raw data. Instead of centralizing sensitive datasets, a global model is trained by aggregating locally-computed updates from each participant. This approach is particularly transformative for drug discovery, where it addresses the critical challenge of data scarcity and diversity while preserving data privacy and intellectual property.

In the specific context of improving model accuracy for imbalanced ADMET datasets, FL offers a powerful solution. ADMET (Absorption, Distribution, Metabolism, Excretion, and Toxicity) properties are crucial for predicting a drug's efficacy and safety, yet experimental data is often heterogeneous, low-throughput, and siloed within individual organizations. FL systematically addresses this by altering the geometry of chemical space a model can learn from, improving coverage and reducing discontinuities in the learned representation. Cross-pharma collaborations have consistently demonstrated that federated models outperform local baselines, with performance improvements scaling with the number and diversity of participants. Crucially, the applicability domain of these models expands, making them more robust when predicting properties for novel molecular scaffolds and assay modalities [33].

The following diagram illustrates the foundational workflow of a federated learning system in a drug discovery setting.

Quantitative Benchmarks & Data Presentation

Empirical results from large-scale, real-world federated learning initiatives provide compelling evidence of its benefits for expanding chemical diversity and model generalizability. The following tables summarize key quantitative findings.

Table 1: Performance Gains from Federated Learning in Drug Discovery

| Project / Study | Key Finding | Quantitative Improvement / Impact |

|---|---|---|

| MELLODDY (Cross-Pharma) | Systematic outperformance of local baselines in QSAR tasks [33] [34]. | Performance improvements scaled with the number and diversity of participating organizations. |

| Polaris ADMET Challenge | Benefit of multi-task architectures & diverse data [33]. | Up to 40–60% reduction in prediction error for endpoints like solubility and permeability. |

| Federated Clustering Benchmark (Bujotzek et al.) | Effective disentanglement of distributed molecular data [35]. | Federated clustering methods (Fed-kMeans, Fed-PCA, Fed-LSH) successfully mapped diverse chemical spaces across 8 molecular datasets. |

| Federated CPI Prediction (Chen et al.) | Enhanced out-of-domain prediction [36]. | FL model showed improved generalizability for predicting novel compound-protein interactions. |

Table 2: Federated Clustering Performance on Molecular Datasets (Bujotzek et al.) [35]

| Clustering Method | Key Metric | Centralized Performance (Upper Baseline) | Federated Performance |

|---|---|---|---|

| Federated k-Means (Fed-kMeans) | Standard mathematical metrics & SF-ICF (chemistry-informed) | k-Means with PCA was most effective in centralized setting. | Successfully disentangled distributed molecular data; importance of domain-informed metrics. |

| Fed-PCA + Fed-kMeans | Dimensionality reduction & clustering quality. | PCA followed by k-Means. | Federated PCA computes the exact global covariance, matching the centralized result; effective combined workflow. |

| Federated LSH (Fed-LSH) | Grouping of structurally similar molecules. | LSH based on high-entropy ECFP bits. | Used consensus high-entropy bits from clients; effective for creating informed data splits. |

Experimental Protocols & Methodologies

A. Protocol: Implementing Federated k-Means for Chemical Data Diversity Analysis

This protocol is designed to assess the structural diversity of distributed molecular datasets, a critical step for understanding the combined chemical space and creating meaningful train/test splits to avoid over-optimistic performance estimates [35].

Data Preparation and Fingerprinting

- Input: Each client (e.g., a pharmaceutical company) uses its proprietary set of molecular structures.

- Processing: Using a toolkit like RDKit, each client computes Extended-Connectivity Fingerprints (ECFPs) for their molecules. Typical parameters are a radius of 2 and 2048 bits, resulting in a high-dimensional binary vector for each molecule [35].

- Output: Each client's dataset is represented as a local matrix of ECFP vectors.

Federated Clustering via Fed-kMeans

- Initialization: The central server initializes global cluster centroids using a method like k-means++ and broadcasts them to all clients [35].

- Local Clustering: Each client performs local k-means clustering on its ECFP data using the received global centroids.

- Client-Server Communication: Each client sends its locally updated centroids and the counts of molecules assigned to each centroid back to the server.

- Secure Aggregation: The server computes a weighted average of the local centroids (weighted by cluster counts) to update the global centroids.

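- Iteration: The server broadcasts the updated global centroids, and the local clustering, client-server communication, and aggregation steps are repeated for a predefined number of communication rounds or until the centroids converge.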
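The round structure described above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the implementation from [35]: clients run a single assignment-and-mean step per round, centroids are passed in directly instead of being initialized with k-means++, and no secure aggregation is applied. The function names are hypothetical.

```python
import numpy as np

def local_kmeans_step(X, centroids):
    """Client side: assign molecules to the nearest global centroid,
    then return the local centroids and per-cluster counts."""
    # Squared Euclidean distances, shape (n_molecules, k)
    d2 = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    assign = d2.argmin(axis=1)
    k = centroids.shape[0]
    counts = np.bincount(assign, minlength=k)
    local = centroids.copy()  # empty clusters keep the global centroid
    for j in range(k):
        if counts[j] > 0:
            local[j] = X[assign == j].mean(axis=0)
    return local, counts

def aggregate_centroids(client_results, prev_centroids):
    """Server side: average client centroids, weighted by cluster counts."""
    total = sum(c for _, c in client_results)                    # shape (k,)
    weighted = sum(loc * c[:, None] for loc, c in client_results)
    new = prev_centroids.copy()
    nonempty = total > 0
    new[nonempty] = weighted[nonempty] / total[nonempty][:, None]
    return new

def fed_kmeans(client_data, init_centroids, rounds=10, tol=1e-6):
    """Repeat broadcast -> local step -> aggregation until convergence."""
    centroids = init_centroids.astype(float)
    for _ in range(rounds):
        results = [local_kmeans_step(X, centroids) for X in client_data]
        updated = aggregate_centroids(results, centroids)
        if np.abs(updated - centroids).max() < tol:
            return updated
        centroids = updated
    return centroids

# Toy 2-D "fingerprints" held by two clients (real ECFPs would have 2048 bits)
client_a = np.array([[0.0, 0.0], [0.0, 2.0]])
client_b = np.array([[10.0, 10.0], [10.0, 12.0], [0.0, 1.0]])
centroids = fed_kmeans([client_a, client_b],
                       np.array([[0.0, 0.0], [10.0, 10.0]]))
```

With a single local step per round, the count-weighted average reproduces the centroid update that centralized k-means would compute on the pooled data, while only centroids and counts leave each client.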

Chemistry-Informed Evaluation with SF-ICF

- Scaffold Calculation: Murcko scaffolds are computed for all molecules across clients to abstract their core ring systems and linkers [35].

- Metric Calculation: The Scaffold-Frequency Inverse-Cluster-Frequency (SF-ICF) metric is computed. This chemistry-informed metric helps identify scaffolds that are frequent within a specific cluster but rare in the overall dataset, providing domain-aware validation of cluster quality [35].
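To make the idea behind SF-ICF concrete, the sketch below scores each (cluster, scaffold) pair by analogy with TF-IDF. The exact formula used in [35] is not given here, so the definition in the code is an assumption: scaffold frequency is the share of a cluster's molecules carrying the scaffold, and inverse cluster frequency is the log of the number of clusters divided by the number of clusters containing the scaffold. Scaffolds are assumed to be precomputed strings (for example Murcko scaffold SMILES); the function name `sf_icf` is hypothetical.

```python
import math
from collections import Counter, defaultdict

def sf_icf(scaffolds, cluster_ids):
    """Return {(cluster, scaffold): score} using a TF-IDF-style definition.

    Assumed definition (may differ from the published metric):
      SF  = count of scaffold in cluster / cluster size
      ICF = log(number of clusters / number of clusters containing scaffold)
    """
    per_cluster = defaultdict(Counter)
    for scaffold, cluster in zip(scaffolds, cluster_ids):
        per_cluster[cluster][scaffold] += 1

    n_clusters = len(per_cluster)
    clusters_with = Counter()  # in how many clusters does each scaffold occur?
    for counts in per_cluster.values():
        clusters_with.update(counts.keys())

    scores = {}
    for cluster, counts in per_cluster.items():
        size = sum(counts.values())
        for scaffold, n in counts.items():
            sf = n / size
            icf = math.log(n_clusters / clusters_with[scaffold])
            scores[(cluster, scaffold)] = sf * icf
    return scores

# Scaffold "A" is specific to cluster 0, "C" appears in every cluster
scaffolds = ["A", "A", "C", "B", "B", "C"]
cluster_ids = [0, 0, 0, 1, 1, 1]
scores = sf_icf(scaffolds, cluster_ids)
```

Under this definition a scaffold that occurs in every cluster scores zero, while a scaffold concentrated in one cluster scores high, which matches the intent of flagging chemically coherent clusters.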

B. Protocol: Federated Training of an ADMET Prediction Model

This protocol outlines the core steps for training a robust ADMET prediction model across multiple data silos, such as in the Apheris Federated ADMET Network or the MELLODDY project [33] [34].

Problem Formulation and Model Architecture Selection

- Define Task: Partners agree on a common prediction task, e.g., human liver microsomal clearance.

- Select Model: A unified model architecture (e.g., a Graph Neural Network or Multi-Layer Perceptron) is defined. This model will be used by all participants.

Federated Training Loop

- Step 1 - Global Model Broadcast: The server provides the latest version of the global model to all participating clients.

- Step 2 - Local Training: Each client trains the model on its private, local ADMET dataset for a number of epochs.

- Step 3 - Model Update Transmission: Clients send their model updates (e.g., updated weights or weight deltas) back to the server. Raw data never leaves the client's silo.

- Step 4 - Secure Model Aggregation: The server aggregates the local updates using an algorithm like Federated Averaging (FedAvg). Techniques like differential privacy or secure multi-party computation can be applied at this stage to enhance privacy [37] [38].

- Step 5 - Global Model Update: The server updates the global model with the aggregated parameters.

- Iteration: Steps 1-5 are repeated for multiple communication rounds.
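The loop above can be illustrated with a toy example. The sketch below assumes a linear regression model in place of a GNN or MLP, plain gradient descent for local training, and unencrypted aggregation; it shows only the FedAvg mechanics (size-weighted averaging of client parameters), and all function names are hypothetical.

```python
import numpy as np

def local_train(global_weights, X, y, epochs=5, lr=0.1):
    """Step 2: a client refines the global model on its private data."""
    w = global_weights["w"].copy()
    b = global_weights["b"].copy()
    for _ in range(epochs):
        err = X @ w + b - y                  # residuals on local data only
        w -= lr * (X.T @ err) / len(y)
        b -= lr * err.mean()
    return {"w": w, "b": b}                  # Step 3: only parameters are sent

def fedavg(client_weights, client_sizes):
    """Step 4: average parameters, weighted by local dataset size."""
    total = float(sum(client_sizes))
    return {
        name: sum(cw[name] * (n / total)
                  for cw, n in zip(client_weights, client_sizes))
        for name in client_weights[0]
    }

def federated_training(clients, n_features, rounds=300):
    global_weights = {"w": np.zeros(n_features), "b": np.zeros(1)}
    for _ in range(rounds):
        # Step 1 (broadcast) and Step 2 (local training) for every client
        updates = [local_train(global_weights, X, y) for X, y in clients]
        # Steps 4-5: aggregate and replace the global model
        global_weights = fedavg(updates, [len(y) for _, y in clients])
    return global_weights

# Two clients observe different regions of the same relation y = 2x + 1
x1 = np.array([[0.0], [0.5], [1.0]])
x2 = np.array([[1.5], [2.0]])
clients = [(x1, 2 * x1[:, 0] + 1), (x2, 2 * x2[:, 0] + 1)]
model = federated_training(clients, n_features=1)
```

Neither client covers the full input range on its own, yet the aggregated model recovers the shared relationship, which is the mechanism behind the wider applicability domain reported for federated models.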

Rigorous Model Validation

- Scaffold-Based Splits: To ensure generalizability, the model is evaluated using scaffold-based cross-validation, in which all molecules sharing a core scaffold are assigned to the same fold so that test-set scaffolds are never seen during training [33].

- Performance Benchmarking: The final federated model is benchmarked against models trained only on local data to quantify the improvement gained through collaboration.
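A scaffold-based split of the kind used in this validation step can be sketched as follows. The code assumes scaffolds have already been computed as strings (for example Murcko scaffold SMILES from RDKit) and uses a common greedy heuristic: the largest scaffold groups fill the training set first, so rarer scaffolds end up in the test set. The function name `scaffold_split` and the 80/20 ratio are illustrative choices.

```python
from collections import defaultdict

def scaffold_split(scaffolds, test_frac=0.2):
    """Split molecule indices so that no scaffold spans train and test."""
    groups = defaultdict(list)
    for idx, scaffold in enumerate(scaffolds):
        groups[scaffold].append(idx)

    # Largest groups first: common scaffolds go to training
    ordered = sorted(groups.values(), key=len, reverse=True)
    n_train_target = len(scaffolds) - round(test_frac * len(scaffolds))

    train, test = [], []
    for group in ordered:
        # A whole scaffold group is always placed on one side of the split
        if len(train) + len(group) <= n_train_target:
            train.extend(group)
        else:
            test.extend(group)
    return train, test

scaffolds = ["benzene"] * 5 + ["pyridine"] * 3 + ["indole"] * 2
train_idx, test_idx = scaffold_split(scaffolds, test_frac=0.2)
```

Because whole groups are moved together, the realized test fraction can deviate from `test_frac` when scaffold groups are large; that is usually an acceptable price for a leakage-free estimate.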

The workflow below visualizes this iterative process.

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Tools for Federated Learning in Drug Discovery

| Tool / Technology | Type | Function in Experiment |

|---|---|---|

| NVIDIA FLARE (NVFlare) | Framework | An open-source, domain-agnostic framework for orchestrating federated learning workflows. It provides built-in algorithms for federated averaging and secure aggregation [35] [39]. |

| Flower | Framework | A friendly federated learning framework designed to be compatible with multiple machine learning approaches and easy to integrate [40] [34]. |

| TensorFlow Federated | Framework | A Google-developed open-source framework for machine learning on decentralized data, integrated with the TensorFlow ecosystem [37] [34]. |

| PySyft | Library | An open-source library for privacy-preserving machine learning that supports federated learning and differential privacy [37] [34]. |

| RDKit | Cheminformatics | The open-source cheminformatics toolkit used for computing molecular descriptors, including ECFP fingerprints and Murcko scaffolds, ensuring consistent featurization across clients [35]. |

| Extended-Connectivity Fingerprints (ECFPs) | Molecular Representation | A circular fingerprint that encodes the presence of specific substructures and atomic environments in a molecule into a fixed-length bit vector, serving as a standard input feature [35]. |

| Differential Privacy | Privacy Technique | A mathematical framework that adds calibrated noise to model updates during aggregation, providing a strong privacy guarantee against data leakage [37] [38]. |

Troubleshooting Guides & FAQs

Frequently Asked Questions

Q1: How can we ensure our proprietary data isn't reverse-engineered from the shared model updates? Federated learning reduces this risk by sharing model updates rather than data, although updates on their own can still leak information about the training set. For stronger protection, differential privacy can be applied: it adds calibrated noise to the updates, which bounds how much any single record can influence what is shared and makes reconstruction of raw input data far harder. Additionally, secure multi-party computation (SMPC) can be used to perform aggregation without any single party seeing the individual updates from others [37] [38].
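As a rough illustration of the differential-privacy step, the sketch below clips a client's update to a maximum L2 norm and adds Gaussian noise scaled to that norm. It is a simplification under stated assumptions: the update is a flat list of floats, the parameter names `clip_norm` and `noise_multiplier` are hypothetical, and no privacy accounting (tracking of the cumulative privacy budget across rounds) is performed, which a real deployment would require.

```python
import math
import random

def clip_update(update, clip_norm):
    """Scale the update down so its L2 norm is at most clip_norm."""
    norm = math.sqrt(sum(u * u for u in update))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [u * scale for u in update]

def privatize_update(update, clip_norm=1.0, noise_multiplier=1.0, rng=None):
    """Clip, then add Gaussian noise with std = noise_multiplier * clip_norm."""
    rng = rng or random.Random()
    clipped = clip_update(update, clip_norm)
    sigma = noise_multiplier * clip_norm
    return [u + rng.gauss(0.0, sigma) for u in clipped]

raw_update = [3.0, 4.0]                      # L2 norm 5.0
clipped = clip_update(raw_update, clip_norm=1.0)
noisy = privatize_update(raw_update, clip_norm=1.0,
                         noise_multiplier=0.5, rng=random.Random(0))
```

Clipping bounds the influence of any single client update, and the noise scale is tied to that bound; a larger `noise_multiplier` gives stronger privacy at the cost of model accuracy.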

Q2: Our internal dataset is small and covers a narrow chemical space. Will federated learning still benefit us, or will our model be "overwhelmed" by larger partners? Yes, you can still benefit. One of the key advantages of FL is that it allows organizations with smaller, niche datasets to leverage the collective chemical diversity of the federation. This often results in a global model that is more robust and has a wider applicability domain, which you can then fine-tune on your specific, narrow dataset for optimal local performance [33] [36].

Q3: What happens if the data across different pharmaceutical companies is highly heterogeneous (e.g., different assays, formats)? Data heterogeneity is a common challenge. Strategies to address this include:

- Horizontal Federated Learning (HFL): This is the most common scenario in drug discovery, where partners share the same feature space (e.g., all use ECFP fingerprints) but have data on different chemical entities. The federation enriches the chemical space [34].

- Robust Aggregation Algorithms: Advanced aggregation methods beyond simple averaging can handle non-IID data, i.e., client datasets that are not independent and identically distributed.

- Similarity-Guided Ensembles: Recent research proposes creating an ensemble that combines the global FL model with a model fine-tuned on local data, achieving robust performance for both in-domain and out-of-domain tasks [36].

Q4: How do we create meaningful train/test splits in a federated setting to get realistic performance estimates? This is a critical step to avoid data leakage and over-optimism. Federated clustering methods like Federated Locality-Sensitive Hashing (Fed-LSH) or Federated k-Means can be used to group structurally similar molecules across clients. You can then ensure that all molecules from the same cluster end up in the same data split (e.g., all in the test set), creating a more challenging and realistic benchmark for model generalizability [35] [40].
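One way to picture the Fed-LSH idea is sketched below. The details are assumptions, since the exact procedure in [35] is not reproduced here: each client shares only per-bit counts of its ECFP fingerprints, the server pools those counts and keeps the bit positions whose frequency is closest to 0.5 (highest entropy), and every client then hashes its molecules on the agreed bits. Molecules that land in the same bucket are kept in the same data split. All function names are hypothetical.

```python
import math

def bit_entropy(p):
    """Binary entropy of a bit that is set with probability p."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log2(p) + (1 - p) * math.log2(1 - p))

def select_high_entropy_bits(client_bit_counts, client_sizes, n_keep):
    """Server side: pool per-bit counts and keep the most informative bits."""
    total = sum(client_sizes)
    n_bits = len(client_bit_counts[0])
    freq = [sum(counts[i] for counts in client_bit_counts) / total
            for i in range(n_bits)]
    ranked = sorted(range(n_bits),
                    key=lambda i: bit_entropy(freq[i]), reverse=True)
    return sorted(ranked[:n_keep])

def lsh_bucket(fingerprint, bits):
    """Client side: the bucket key is the fingerprint restricted to `bits`."""
    return tuple(fingerprint[i] for i in bits)

# Toy 4-bit fingerprints held by two clients
client_a = [[1, 0, 1, 1], [1, 1, 0, 1]]
client_b = [[1, 0, 0, 1], [1, 1, 1, 0]]
counts = [[sum(col) for col in zip(*fps)] for fps in (client_a, client_b)]
bits = select_high_entropy_bits(counts, [len(client_a), len(client_b)], n_keep=2)
buckets = [lsh_bucket(fp, bits) for fp in client_a + client_b]
```

Bits that are set in all or none of the molecules carry no information for grouping, which is why only the high-entropy positions are used as the hash key.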

Troubleshooting Common Experimental Issues

| Problem | Possible Cause | Solution & Recommendation |

|---|---|---|

| Model Divergence or Poor Performance | High data heterogeneity among clients; local models drifting apart. | Use regularization techniques during local training to prevent overfitting to local data. Experiment with control variates or adjust the learning rate and number of local epochs [34]. |

| Slow Convergence | Infrequent communication or large number of local training epochs. | Tune the number of local epochs before aggregation. Increase the frequency of communication rounds. Consider using adaptive optimizers suited for federated settings. |

| Low Cluster Quality in Diversity Analysis | Federated clustering algorithm not capturing chemical semantics. | Incorporate chemistry-informed evaluation metrics like SF-ICF to validate results from a domain perspective. Ensure consistent fingerprinting (ECFP) across all clients [35]. |