Benchmarking Open Access vs. Commercial ADMET Tools: A 2025 Guide for Drug Development

Abstract

This article provides a comprehensive, evidence-based benchmark of open-access and commercial ADMET (Absorption, Distribution, Metabolism, Excretion, and Toxicity) prediction tools for researchers and drug development professionals. With the global ADMET testing market projected to reach $17 billion by 2029 and a proliferation of new AI-driven models, selecting the right tool is critical. We explore the foundational landscape of available software, detail rigorous methodological protocols for fair comparison, address common troubleshooting and optimization challenges, and present a validation framework based on real-world performance metrics. Our analysis synthesizes findings from recent peer-reviewed studies, market reports, and emerging trends to guide strategic tool selection, ultimately aiming to enhance efficiency and reduce late-stage attrition in drug discovery pipelines.

The Evolving ADMET Tool Landscape: From Open-Source Communities to Commercial AI Platforms

In modern drug discovery, the assessment of Absorption, Distribution, Metabolism, Excretion, and Toxicity (ADMET) properties has become a pivotal step for mitigating clinical attrition rates and optimizing candidate selection. Historically, 40-60% of drug failures in clinical trials have been attributed to inadequate pharmacokinetics and toxicity profiles [1]. The evolution of computational approaches has introduced powerful in silico tools that predict these properties rapidly and cost-effectively, enabling researchers to prioritize compounds with the highest likelihood of success [2]. This guide provides an objective comparison of open-access and commercial ADMET prediction tools, examining their performance against standardized benchmarks and experimental validation protocols to inform tool selection for drug development pipelines.

Essential ADMET Endpoints and Their Biological Significance

Core Physicochemical and Toxicokinetic Properties

ADMET endpoints encompass a spectrum of physicochemical (PC) and toxicokinetic (TK) properties that collectively determine a compound's behavior in biological systems. These properties are routinely predicted in silico to filter compound libraries and guide lead optimization. The most critical endpoints, along with their abbreviations and biological impacts, are summarized in the table below.

Table 1: Key ADMET Endpoints and Their Impact on Drug Discovery

| Property Category | Endpoint | Abbreviation | Impact on Drug Discovery & Development |
|---|---|---|---|
| Physicochemical (PC) | Octanol/Water Partition Coefficient | LogP | Determines lipophilicity, influencing membrane permeability and absorption [3] |
| | Water Solubility | LogS | Affects drug dissolution and bioavailability; poor solubility is a major formulation challenge [3] |
| | Acid/Base Dissociation Constant | pKa | Influences ionization state, which impacts solubility, permeability, and protein binding across physiological pH [3] |
| Toxicokinetic (TK) | Human Intestinal Absorption | HIA | Predicts oral bioavailability; a prerequisite for orally administered drugs [3] |
| | Blood-Brain Barrier Permeability | BBB | Critical for central nervous system (CNS) drugs to reach targets, and for non-CNS drugs to avoid off-target effects [3] |
| | Fraction Unbound in Plasma | FUB | Determines the fraction of drug available for pharmacological activity and interaction with tissues [3] |
| | Caco-2 Permeability | Caco-2 | Serves as an in vitro model for predicting human intestinal absorption [3] |
| | P-glycoprotein Substrate/Inhibitor | Pgp.sub/Pgp.inh | Identifies compounds involved in transporter-mediated drug-drug interactions and multidrug resistance [3] |
| | Hepatotoxicity | DILI | Liver injury is a leading cause of drug attrition and post-market withdrawals [4] |
| | hERG Inhibition | hERG | Predicts potential for cardiotoxicity and fatal arrhythmias [4] |
| | CYP450 Inhibition | CYP | Flags compounds that may cause metabolically-based drug-drug interactions [4] |

The ADMET Pathway in Drug Discovery

The following diagram illustrates the interconnected relationship between key ADMET properties and their collective impact on the success of a drug candidate. It maps the journey of an oral drug candidate from administration to excretion, highlighting the critical endpoints assessed at each stage.

Diagram 1: The ADMET Pathway in Drug Discovery.

Benchmarking Methodologies for ADMET Prediction Tools

Standardized Workflows for Model Validation

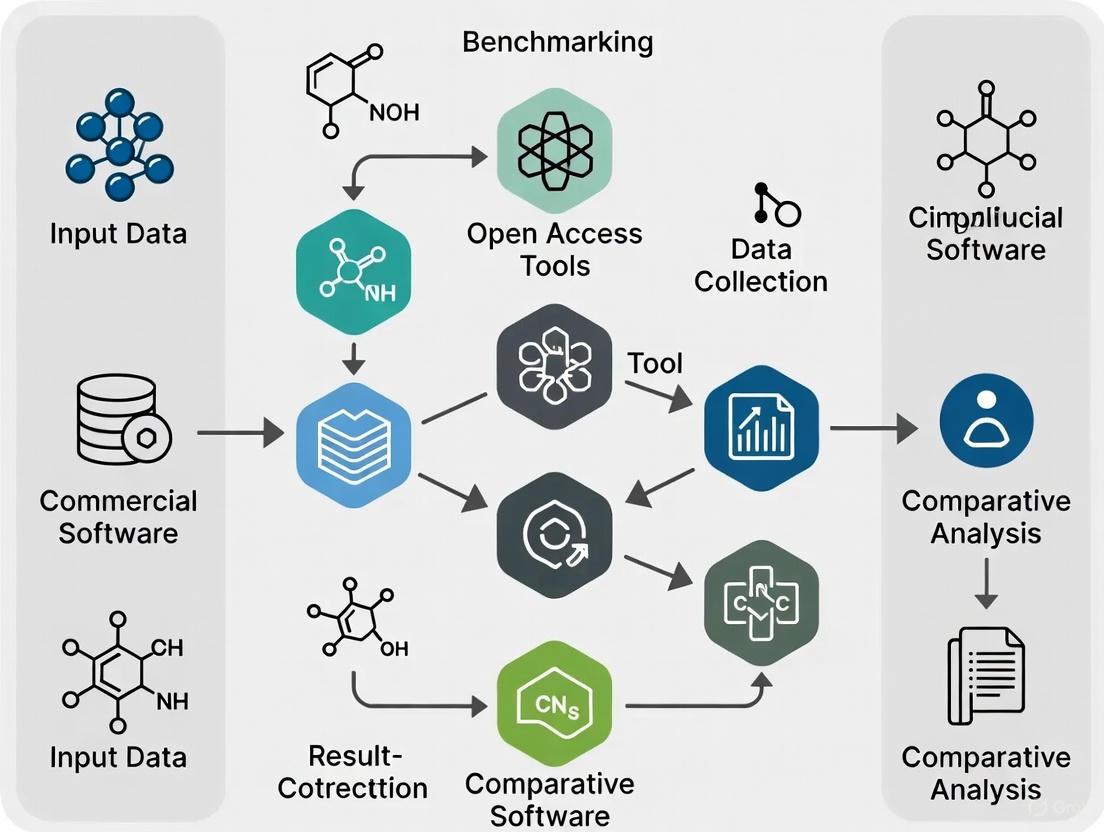

Robust benchmarking requires standardized protocols for data curation, model training, and performance evaluation. The following workflow, synthesized from recent comprehensive studies, outlines the key steps for a fair and rigorous comparison of ADMET tools.

Diagram 2: ADMET Tool Benchmarking Workflow.

Detailed Experimental Protocol:

- Data Curation: Raw data from public sources such as ChEMBL and PubChem undergo a rigorous cleaning process. This includes standardizing SMILES representations, removing inorganic and organometallic compounds, stripping salt counterions and neutralizing charges to isolate the parent organic compound, and consolidating duplicates. Conflicting experimental values for the same compound are averaged if their standardized standard deviation is below 0.2 and removed otherwise [5] [1].

- Data Splitting: To rigorously assess a model's ability to generalize to novel chemical structures, the dataset is split using scaffold splitting. This method groups compounds based on their molecular Bemis-Murcko scaffolds, ensuring that the training and test sets contain structurally distinct molecules. This is more challenging and realistic than simple random splitting [5].

- Performance Evaluation: Models are evaluated on a held-out test set. For regression tasks (e.g., predicting LogP), the coefficient of determination (R²) is a primary metric. For classification tasks (e.g., predicting hERG inhibition), balanced accuracy is preferred, especially for imbalanced datasets. Performance should be assessed specifically on compounds falling within the model's Applicability Domain (AD), which defines the chemical space where the model is expected to make reliable predictions [1]. Finally, statistical hypothesis testing (e.g., paired t-tests across multiple cross-validation folds) should be used to determine if performance differences between models are statistically significant [5].
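The curation and splitting steps above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the protocol code of the cited studies: SMILES are assumed to be already standardized, the "standardized standard deviation" is assumed to mean the standard deviation of a compound's replicates divided by the standard deviation of the whole dataset, and Bemis-Murcko scaffold strings are assumed to be precomputed (for example with RDKit's `MurckoScaffold.MurckoScaffoldSmiles`). The function names are hypothetical.

```python
from collections import defaultdict
from statistics import mean, pstdev, stdev


def consolidate_duplicates(records, threshold=0.2):
    """Average consistent replicate measurements; drop conflicting ones.

    records: iterable of (standardized_smiles, value) pairs.
    Assumption: "standardized SD" = SD of a compound's replicates divided by
    the SD of all values in the dataset (so the rule also works for LogP-like scales).
    """
    records = list(records)
    scale = pstdev([v for _, v in records]) or 1.0  # guard against a constant dataset
    groups = defaultdict(list)
    for smiles, value in records:
        groups[smiles].append(value)

    curated = {}
    for smiles, values in groups.items():
        if len(values) == 1:
            curated[smiles] = values[0]
        elif stdev(values) / scale < threshold:
            curated[smiles] = mean(values)  # replicates agree: keep the average
        # otherwise the compound is ambiguous and is removed
    return curated


def scaffold_split(scaffold_of, test_fraction=0.2):
    """Assign whole scaffold groups to train or test so no scaffold spans both sets.

    scaffold_of: dict mapping compound id -> precomputed Bemis-Murcko scaffold.
    The largest scaffold groups fill the training set first; groups that no longer
    fit go to the test set, which therefore holds the rarer chemotypes.
    """
    groups = defaultdict(list)
    for compound_id, scaffold in scaffold_of.items():
        groups[scaffold].append(compound_id)

    train_capacity = (1 - test_fraction) * len(scaffold_of)
    train, test = [], []
    for group in sorted(groups.values(), key=lambda g: (-len(g), g[0])):
        if len(train) + len(group) <= train_capacity:
            train.extend(group)
        else:
            test.extend(group)
    return train, test
```

With toy inputs, two closely agreeing replicates for one SMILES are merged into their mean, while two widely divergent values for another are discarded. Filling the training set with the largest scaffold families first is one common deterministic heuristic; randomized or balanced scaffold splits are equally valid alternatives.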

The Scientist's Toolkit: Essential Research Reagents and Software

The following table details key software and resources that are foundational for conducting ADMET benchmarking studies and building predictive models.

Table 2: Essential Research Reagents and Software for ADMET Benchmarking

| Tool/Resource Name | Type | Primary Function in ADMET Research |
|---|---|---|
| RDKit [6] | Open-Source Cheminformatics Library | Calculates molecular descriptors and fingerprints; standardizes chemical structures; integrates with machine learning workflows. |
| Therapeutics Data Commons (TDC) [5] | Curated Data Resource | Provides curated, publicly available benchmark datasets for ADMET and other molecular properties, facilitating standardized model comparison. |
| PharmaBench [7] | Benchmark Dataset | Offers a large-scale ADMET benchmark curated using a multi-agent LLM system to extract experimental conditions from public bioassays. |
| DataWarrior [5] [6] | Interactive Cheminformatics Software | Enables exploratory data analysis, visualization, and filtering of compound datasets based on chemical structures and properties. |
| Python/Pandas/Scikit-learn [7] [5] | Programming Environment | Provides the core computational environment for data processing, machine learning model development, and statistical analysis. |

Performance Comparison: Open-Access vs. Commercial Tools

Quantitative Benchmarking Across Key Endpoints

A comprehensive benchmark study evaluated multiple software tools, including both open-access and commercial options, across 17 PC and TK properties using 41 externally curated datasets [1]. The results provide a quantitative basis for comparison. The following table synthesizes the key findings, highlighting top-performing tools for critical endpoints.

Table 3: Performance Comparison of ADMET Prediction Tools on Key Endpoints

| Endpoint | Best Performing Tools (Open Access) | Best Performing Tools (Commercial) | Reported Performance (Metric) | Notes / Key Characteristics |
|---|---|---|---|---|
| LogP | OPERA [1] | ADMET Predictor [8] | R² = 0.717 (Average for PC properties) [1] | Commercial tools often use larger, proprietary training sets and advanced AI/ML. |
| Water Solubility (LogS) | OPERA [1] | ADMET Predictor [8] | R² = 0.717 (Average for PC properties) [1] | Open-access tools like OPERA show strong performance for core physicochemical properties. |
| Caco-2 Permeability | TDC Benchmarks [5] | ADMET Predictor [8] | R² = 0.639 (Average for TK regression) [1] | Predictions for complex biological endpoints are generally more challenging. |
| BBB Permeability | TDC Benchmarks [5] | ADMET Predictor [8] | Balanced Accuracy = 0.780 (Average for TK classification) [1] | Open-access models can be competitive, but may require careful feature selection [5]. |
| hERG Inhibition | Chemprop [5] [4] | Receptor.AI [4] | N/A (Varies by dataset) | Modern AI models use multi-task learning and graph-based embeddings for toxicity endpoints. |
| CYP450 Inhibition | ADMET-AI (Chemprop) [4] | Receptor.AI [4] | N/A (Varies by dataset) | A key endpoint for predicting drug-drug interactions. |

Summary of Comparative Analysis:

- Overall Performance Trends: The benchmarking study found that models predicting physicochemical properties (average R² = 0.717) generally outperform those predicting toxicokinetic properties (average R² = 0.639 for regression) [1]. This highlights the greater complexity of modeling biological interactions compared to intrinsic molecular properties.

- Open-Access Tool Suitability: Open-access tools like OPERA demonstrate robust and reliable performance for fundamental physicochemical properties like LogP and LogS, making them excellent choices for initial screening and for organizations with limited budgets [1]. Frameworks like Chemprop and benchmarks on the TDC platform provide state-of-the-art performance for various ADMET endpoints and are highly configurable for research purposes [5].

- Commercial Tool Advantages: Commercial software such as ADMET Predictor and Receptor.AI's platform leverage larger, often proprietary datasets and offer advanced features like integrated PBPK (Physiologically Based Pharmacokinetic) simulation, high-throughput AI-driven drug design, and enterprise-level support [8] [4]. They often provide a broader suite of pre-built, validated models and are designed to seamlessly fit into industrial drug discovery workflows.

Impact of Data Quality and Feature Representation

Beyond the choice of software, the quality of input data and the representation of molecules are critical factors influencing prediction accuracy.

- Data Quality and Curation: Inconsistent experimental data is a major challenge. For example, the same compound can have different solubility values depending on buffer, pH, and experimental procedure [7]. Tools like PharmaBench address this by using Large Language Models (LLMs) to systematically extract and standardize experimental conditions from public bioassays, leading to more consistent and reliable benchmark datasets [7].

- Feature Representation: The choice of how to represent a molecule numerically (e.g., molecular fingerprints, descriptors, graph representations) significantly impacts model performance. Studies show that systematically selecting and combining feature representations is more effective than arbitrarily concatenating them. For example, combining Mol2Vec embeddings with curated molecular descriptors can enhance predictive accuracy [5] [4]. Furthermore, random forest models have been found to be strong performers with fixed representations in several ADMET benchmarks [5].

The landscape of ADMET prediction is rapidly evolving, driven by better datasets, more sophisticated AI models, and collaborative efforts. The emergence of large, carefully curated benchmarks like PharmaBench is crucial for meaningful tool comparison [7]. Furthermore, paradigms like federated learning allow multiple pharmaceutical companies to collaboratively train models on their distributed proprietary data without sharing it, leading to more robust and generalizable models without compromising data privacy [9].
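To make the federated idea concrete, the sketch below uses federated averaging (FedAvg), one widely used aggregation scheme; the cited work [9] may use a different protocol. Each hypothetical "company" runs a few gradient steps of a toy linear model on its private data, and only the resulting parameters, weighted by local dataset size, are averaged by a coordinator. The model, learning rate, and round counts are illustrative assumptions.

```python
def local_update(weights, data, lr=0.1, epochs=5):
    """Gradient descent on a squared-error loss for y ~ w0 + w1 * x, using one client's private data."""
    w0, w1 = weights
    for _ in range(epochs):
        g0 = g1 = 0.0
        for x, y in data:
            err = (w0 + w1 * x) - y
            g0 += err
            g1 += err * x
        w0 -= lr * g0 / len(data)
        w1 -= lr * g1 / len(data)
    return [w0, w1]


def federated_average(client_weights, client_sizes):
    """Coordinator step: average parameters, weighting each client by its number of examples."""
    total = sum(client_sizes)
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(len(client_weights[0]))
    ]


def federated_training(client_datasets, rounds=50, lr=0.1):
    """Alternate local training and weighted averaging; only parameters are exchanged."""
    global_weights = [0.0, 0.0]
    sizes = [len(d) for d in client_datasets]
    for _ in range(rounds):
        # Each client starts from the current global model; raw (x, y) data never leave the client.
        local = [local_update(global_weights, d, lr) for d in client_datasets]
        global_weights = federated_average(local, sizes)
    return global_weights
```

If two toy clients hold different samples of the same underlying relationship (for instance y = 1 + 2x), the averaged model converges to that relationship even though neither client ever sends its data to the other.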

When selecting an ADMET tool, researchers must consider the trade-offs. Open-access tools offer transparency, cost-effectiveness, and are ideal for foundational research and proof-of-concept studies. Commercial software provides turn-key, validated solutions with advanced features and support, suitable for regulatory-facing decisions and high-throughput industrial pipelines. Ultimately, the choice depends on the specific endpoint requirements, the available budget, the need for interpretability, and the intended application within the drug discovery workflow. Rigorous, externally validated benchmarks, as discussed in this guide, provide the essential foundation for making these critical decisions.

The high attrition rate of drug candidates due to unfavorable pharmacokinetics and toxicity (ADMET) remains a significant challenge in pharmaceutical development. In silico prediction tools have become indispensable for early-stage risk assessment, offering the potential to prioritize compounds with a higher likelihood of success. While commercial software exists, the open-source ecosystem has seen rapid innovation, providing powerful, accessible, and transparent alternatives. This guide objectively maps and compares prevalent open-source ADMET tools—focusing on Chemprop, ADMETlab 3.0, and ADMET-AI—and benchmarks their capabilities against commercial-grade software, providing researchers with a clear framework for tool selection based on empirical evidence.

This section provides a detailed comparison of the core features, architectures, and access models of the leading open-source ADMET tools and a representative commercial counterpart.

Table 1: Core Feature Comparison of Prevalent ADMET Tools

| Tool Name | Primary Access Model | Core Architecture | Number of Endpoints | Key Differentiating Features |
|---|---|---|---|---|
| Chemprop | Standalone/Code Library [10] | Directed Message Passing Neural Network (DMPNN) [11] | User-definable | Highly flexible, modular framework for building custom models; command-line interface [12]. |
| ADMETlab 3.0 | Free Web Server [11] | Multi-task DMPNN + Molecular Descriptors [11] | 119 [11] | Extremely broad endpoint coverage; API for batch processing; uncertainty estimation [11]. |
| ADMET-AI | Free Web Server [12] | Chemprop-RDKit (Graph Neural Network) [12] | 41 [12] | Fast prediction speed; results benchmarked against a DrugBank reference set [12]. |
| ADMET Predictor | Commercial Software [13] | Proprietary | >70 valid models [13] | Wide applicability domain beyond drug-like molecules; high consistency in predictions [13]. |

As illustrated in Table 1, the open-source tools present a range of specializations. ADMETlab 3.0 stands out for its coverage of 119 endpoints, a significant increase over its previous version [11]. ADMET-AI, also built on a graph neural network architecture (Chemprop-RDKit), prioritizes speed and context by comparing its predictions with approved drugs from DrugBank [12]. In contrast, Chemprop itself is not a web server but a flexible code library that allows researchers to train their own models on proprietary datasets, offering maximum customization at the cost of ease of use [10]. In comparative benchmarks, commercial tools such as ADMET Predictor are noted for their broad applicability domain and consistency, particularly for non-drug-like molecules such as microcystins, where some open-source tools showed limitations due to molecular size or mass [13].

Performance Benchmarking and Experimental Data

Independent benchmarking studies provide crucial insights into the real-world predictive performance of these tools. A comprehensive 2024 study evaluated twelve software tools against 41 curated validation datasets for 17 physicochemical and toxicokinetic properties [3].

Table 2: Selected Benchmarking Results from External Validation Studies

| Property Type | Exemplary Endpoint | Reported Performance (Open-Source) | Overall Benchmark Finding |
|---|---|---|---|
| Physicochemical (PC) | LogP (Octanol/water partition coefficient) | ADMETlab and others showed adequate predictivity [3] | PC models (R² average = 0.717) generally outperformed Toxicokinetic models [3]. |
| Toxicokinetic (TK) - Classification | P-gp substrate/inhibitor | Balanced accuracy of top tools >0.85 [3] | TK classification models achieved an average balanced accuracy of 0.780 [3]. |
| Toxicokinetic (TK) - Regression | Fraction unbound (FUB) | R² performance varies by tool and endpoint [3] | TK regression models showed an average R² of 0.639 [3]. |
| Toxicity | hERG channel blockade | Multiple open-source models available (e.g., hERG-MFFGNN, BayeshERG) [10] | Several open-source tools were identified as recurring optimal choices across different properties [3]. |

The benchmarking concluded that several open-source tools demonstrated adequate predictive performance and were "recurring optimal choices" across various properties, making them suitable for high-throughput assessment [3]. The study emphasized that performance is highest for predictions within a model's applicability domain—the chemical space its training data covers [3]. This underscores the importance of selecting a tool whose training set aligns with the researcher's chemical space of interest.
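One simple way to operationalize the applicability domain is a nearest-neighbour similarity check: a query compound is treated as in-domain if its fingerprint is similar enough to at least one training compound. The sketch below assumes fingerprints are given as sets of on-bit indices (for instance from a Morgan fingerprint) and uses an illustrative Tanimoto threshold of 0.4; the tools and the study cited above may define their applicability domains differently, for example with descriptor ranges or leverage. RDKit's `DataStructs.TanimotoSimilarity` computes the same similarity directly on bit vectors.

```python
def tanimoto(a: set, b: set) -> float:
    """Tanimoto (Jaccard) similarity between two sets of fingerprint on-bits."""
    if not a and not b:
        return 0.0
    shared = len(a & b)
    return shared / (len(a) + len(b) - shared)


def in_applicability_domain(query: set, training_fps: list, threshold: float = 0.4):
    """Return (inside_domain, nearest_neighbour_similarity) for one query compound.

    The threshold of 0.4 is an illustrative assumption, not a value from the cited study.
    """
    nearest = max((tanimoto(query, fp) for fp in training_fps), default=0.0)
    return nearest >= threshold, nearest
```

Predictions for compounds that fall outside the domain can then be flagged or reported separately, which is how an evaluation restricted to in-domain compounds would be assembled.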

Experimental Protocols in Benchmarking Studies

To ensure reliability and reproducibility, independent benchmarking studies follow rigorous experimental protocols. The methodology from the comprehensive 2024 review is typical of a high-quality benchmarking workflow [3]:

- Dataset Curation: Data is collected from public sources (e.g., ChEMBL, PubChem) and literature. SMILES are standardized, salts are neutralized, and inorganic/organometallic compounds are removed.

- Data Deduplication and Outlier Removal: Duplicate compounds are identified and consolidated. Intra-dataset outliers (Z-score > 3) and inter-dataset compounds with inconsistent values are excluded.

- Model Evaluation: The curated external validation sets are used to predict properties with each tool. Performance metrics are then calculated.

- Performance Metrics:

  - For regression tasks (e.g., LogP, solubility), R-squared (R²), Root Mean Squared Error (RMSE), and Mean Absolute Error (MAE) are standard [11] [3].

  - For classification tasks (e.g., hERG inhibition, P-gp substrate), the Area Under the ROC Curve (AUC), Balanced Accuracy, and Matthews Correlation Coefficient (MCC) are commonly used [11] [3].
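The outlier filter and the metrics listed above can be written out in a few lines of standard-library Python, which makes their definitions explicit. This is an illustrative sketch: R² is computed here as the coefficient of determination (1 - SS_res / SS_tot), whereas some studies report the squared Pearson correlation instead, and the z-score uses the population standard deviation by assumption. AUC is omitted because it needs continuous scores; scikit-learn's `roc_auc_score` covers it, and its `r2_score`, `balanced_accuracy_score`, and `matthews_corrcoef` can be used to cross-check these functions.

```python
import math
from statistics import mean, pstdev


def drop_zscore_outliers(values, z_max=3.0):
    """Remove values whose |z| (computed with the population SD) exceeds z_max."""
    mu, sd = mean(values), pstdev(values)
    if sd == 0:
        return list(values)
    return [v for v in values if abs(v - mu) / sd <= z_max]


def regression_metrics(y_true, y_pred):
    residuals = [t - p for t, p in zip(y_true, y_pred)]
    ss_res = sum(r * r for r in residuals)
    y_bar = mean(y_true)
    ss_tot = sum((t - y_bar) ** 2 for t in y_true)
    n = len(y_true)
    return {
        "R2": 1 - ss_res / ss_tot,  # coefficient of determination
        "RMSE": math.sqrt(ss_res / n),
        "MAE": sum(abs(r) for r in residuals) / n,
    }


def classification_metrics(y_true, y_pred):
    """Binary labels (1 = positive); assumes both classes occur in y_true."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    sensitivity, specificity = tp / (tp + fn), tn / (tn + fp)
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return {
        "balanced_accuracy": (sensitivity + specificity) / 2,
        "MCC": (tp * tn - fp * fn) / denom if denom else 0.0,
    }
```

Balanced accuracy averages sensitivity and specificity, so a model that always predicts the majority class scores 0.5 rather than looking deceptively good on an imbalanced dataset.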

Benchmarking Workflow

Architectural Insights: The Role of Deep Learning

The performance leap in modern ADMET prediction is largely driven by deep learning architectures that directly learn relevant features from molecular structure.

- Graph Neural Networks (GNNs): Tools like ADMET-AI and ADMETlab 3.0 use GNN variants that represent molecules as graphs (atoms as nodes, bonds as edges). These models learn meaningful representations by passing messages between atoms and bonds, capturing complex sub-structural patterns linked to properties [11] [12].

- Multi-task Learning (MTL): ADMETlab 3.0 employs a multi-task DMPNN framework. This allows a single model to learn multiple related endpoints (e.g., various toxicity measures) simultaneously. MTL can improve generalizability and efficiency by leveraging shared information across tasks [11].

- Hybrid Approaches: Many top performers combine GNNs with traditional molecular descriptors. ADMETlab 3.0 concatenates its graph readout features with RDKit 2D descriptors, arguing that global molecular information from descriptors complements the local structural information from the GNN [11].
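The message-passing and hybrid-readout ideas above can be illustrated with a deliberately tiny, untrained example. This is a simplified atom-centred variant rather than Chemprop's directed-bond D-MPNN, the weight matrix `W` is a hypothetical fixed matrix instead of learned parameters, and the descriptor values appended at readout are placeholders standing in for the RDKit 2D descriptors mentioned above.

```python
def relu(vec):
    return [max(0.0, v) for v in vec]


def matvec(W, vec):
    return [sum(w * v for w, v in zip(row, vec)) for row in W]


def message_passing(atom_features, bonds, W, steps=2):
    """Simplified atom-centred message passing over a molecular graph.

    atom_features: one feature vector per atom; bonds: (i, j) atom index pairs.
    At each step every atom sums its neighbours' states (the message), adds its
    own state, and applies the shared transform W followed by ReLU.
    """
    neighbours = {i: [] for i in range(len(atom_features))}
    for i, j in bonds:
        neighbours[i].append(j)
        neighbours[j].append(i)

    h = [list(f) for f in atom_features]
    for _ in range(steps):
        # All messages are computed from the previous step's states before any update.
        messages = [
            [sum(h[u][k] for u in neighbours[v]) for k in range(len(h[v]))]
            for v in range(len(h))
        ]
        h = [relu(matvec(W, [a + m for a, m in zip(h[v], messages[v])])) for v in range(len(h))]
    return h


def hybrid_readout(atom_states, descriptors):
    """Sum-pool atom states into one molecule vector, then append global descriptors."""
    pooled = [sum(column) for column in zip(*atom_states)]
    return pooled + list(descriptors)
```

In a trained model, `W` (often separate per step) would be learned, and the concatenated vector would feed one or more task-specific output layers; in a multi-task setup those layers share everything up to this readout.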

Deep Learning Architecture

The Scientist's Toolkit: Essential Research Reagents

This section details key computational "reagents" and resources essential for conducting or interpreting ADMET tool benchmarking studies.

Table 3: Essential Resources for ADMET Tool Research

| Resource Name/Type | Function in Research | Relevance to Benchmarking |
|---|---|---|
| RDKit | Open-source cheminformatics library [6] | Foundation for structure standardization, descriptor calculation, and molecular visualization; used by many tools under the hood [11] [12]. |
| Therapeutics Data Commons (TDC) | Curated collection of datasets for AI in therapeutics [12] | Provides standardized, benchmark-ready datasets for training and evaluating ADMET models (e.g., used by ADMET-AI) [12]. |
| PubChem PUG REST API | Programmatic interface for chemical data [3] | Used during data curation to retrieve canonical structures (SMILES) from identifiers like CAS numbers [3]. |
| Curated Validation Datasets | Literature-derived, chemically diverse compound sets with experimental data [3] | Serve as the ground truth for external validation, enabling objective comparison of tool predictivity on novel chemicals [3]. |
| Docker Containers | Platform for software containerization [14] | Ensures reproducible deployment and testing of tools (e.g., local installations of webserver tools) by standardizing the computing environment [14]. |

The open-source ecosystem for ADMET prediction, led by tools like ADMETlab 3.0, ADMET-AI, and the Chemprop framework, offers robust, high-performance options that are increasingly competitive with commercial software. Independent benchmarks confirm that these tools provide adequate to excellent predictivity for a wide range of properties, particularly for drug-like molecules. The choice of tool should be guided by the specific needs of the project: ADMETlab 3.0 for maximum endpoint coverage and batch API functionality, ADMET-AI for fast results with clinical context, and Chemprop for ultimate flexibility with proprietary data. As regulatory agencies like the FDA increasingly accept New Approach Methodologies (NAMs), the role of these transparent, validated, open-source in silico tools is poised to become even more central to efficient and predictive drug discovery.

The integration of artificial intelligence into drug discovery has given rise to specialized platforms that aim to de-risk and accelerate the development of new therapeutics. The table below contrasts two such platforms, Receptor.AI and Logica, highlighting their distinct approaches and core offerings.

| Feature | Receptor.AI | Logica |
|---|---|---|
| Core Description | Multi-platform, generative AI ecosystem for end-to-end drug discovery [15] [16] | A collaborative platform combining AI with experimental expertise and a risk-sharing model [17] |
| Parent Company/Structure | Preclinical TechBio company [18] | A collaboration between Charles River and Valo Health [17] |
| Technology Core | Proprietary AI model stack (e.g., DTI, ADMET, ArtiDock) and agentic R&D strategy control [19] [16] | Integration of Valo's AI/ML with Charles River's experimental and discovery capabilities [17] |
| Supported Modalities | Small molecules, peptides, proximity inducers (e.g., degraders, molecular glues) [16] [18] | Small molecules [17] |
| Key Value Proposition | De novo design against complex and "undruggable" targets using a validated, modular AI ecosystem [15] [16] | Predictable outcomes via a fixed-budget, risk-sharing model that fuses AI design with lab validation [17] |
| Business Model | Partnerships and co-development programs with pharma and biotech [20] [15] | Risk-sharing, with a fixed budget tied to key value-inflection points [17] |

Architectural and Workflow Comparison

The fundamental difference between the platforms lies in their overarching architecture and the role of AI. Receptor.AI employs a technology-centric model built on a proprietary AI stack, while Logica champions an expertise-centric model that natively integrates AI with human insight and wet-lab validation.

Receptor.AI's Technology-Centric Architecture

Receptor.AI's platform is structured on a unified 4-level architecture [16]:

- Level 1: R&D Strategy and Control. An Agentic AI system selects validated drug discovery strategies, generates project plans, and adapts them in real-time under expert oversight.

- Level 2: Drug Discovery Workflows. The system assembles end-to-end, target-specific workflows for different modalities (small molecules, peptides) and target classes (GPCRs, kinases).

- Level 3: AI Model Stack. A suite of rigorously benchmarked predictive and generative AI models power core tasks. Key models include its drug-target interaction (DTI) predictor, ADMET engine, and high-throughput docking tool, ArtiDock [19] [16].

- Level 4: Data Engine. Manages project-specific data, enabling feature engineering and active learning for the AI models.

This architecture supports a virtual screening pipeline where primary screening uses AI models to predict drug-target activity, and secondary screening applies ADMET filters and molecular docking with AI rescoring [19].
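The two-stage funnel described above can be sketched as a filter-then-rank pipeline. Everything here is hypothetical: the `Candidate` fields stand in for outputs of a drug-target interaction model, an ADMET model, and AI-rescored docking, and the cut-offs are arbitrary. This is not Receptor.AI's implementation or API.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    compound_id: str
    predicted_activity: float  # hypothetical DTI model score; higher = more likely active
    admet_flags: int           # hypothetical count of predicted ADMET liabilities
    docking_score: float       # hypothetical AI-rescored docking score; lower = better


def two_stage_screen(candidates, top_primary=3, max_flags=0, top_final=2):
    """Primary screen on predicted activity, then ADMET filtering and docking-based ranking."""
    # Stage 1 (primary): keep only the compounds with the highest predicted activity.
    primary = sorted(candidates, key=lambda c: c.predicted_activity, reverse=True)[:top_primary]
    # Stage 2a (secondary): drop compounds with too many predicted ADMET liabilities.
    admet_clean = [c for c in primary if c.admet_flags <= max_flags]
    # Stage 2b (secondary): rank the survivors by rescored docking score.
    ranked = sorted(admet_clean, key=lambda c: c.docking_score)
    return [c.compound_id for c in ranked[:top_final]]


if __name__ == "__main__":
    library = [
        Candidate("A", 0.90, 0, -9.1),
        Candidate("B", 0.80, 2, -10.0),
        Candidate("C", 0.70, 0, -8.0),
        Candidate("D", 0.20, 0, -12.0),
    ]
    print(two_stage_screen(library))  # ['A', 'C']
```

In this toy library, B is removed by the ADMET filter and D never reaches the docking stage despite its favourable docking score, because it is eliminated in primary screening. Running the cheapest filters first is what keeps such funnels tractable on very large libraries.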

Receptor.AI's 4-Level Platform Architecture

Logica's Expertise-Centric Workflow

Logica's process is a tightly integrated cycle where AI-driven design and experimental validation inform each other continuously [17]. The workflow is designed to be a closed-loop discovery system:

- AI/Molecular Design: Scan billions of virtual molecules and rank novel chemistries.

- Experimental Data Generation: Leverage hundreds of in vitro and in vivo models from Charles River.

- Expert Analysis & Iteration: Human drug discovery experts analyze data to drive the next design cycle, amalgamating data into high-precision predictive models.

Logica's Closed-Loop Discovery System

Experimental Protocols and Performance Benchmarking

A critical differentiator for AI platforms is the rigor of their experimental validation. Receptor.AI's benchmarking data for its core AI models is publicly detailed, providing insights into its claimed performance advantages.

Receptor.AI's ADMET Model Validation

Receptor.AI's ADMET prediction model is a multi-task neural network that uses a graph-based structure for universal molecular descriptors [21].

- Training Datasets: Compiled from ChEMBL, ToxCast, and manually curated literature sources. Molecular structures were standardized, and salts/inorganic compounds were removed [21].

- Model Architecture: A Hard Parameter Sharing (HPS) Graph Neural Network as a shared encoder, with task-specific multilayer perceptrons (MLPs) for each of the 40+ ADMET endpoints [21].

- Benchmarking Results: The model was benchmarked on internal test sets and public benchmarks from the Therapeutics Data Commons (TDC). Receptor.AI reports that its model family achieved first-place rankings on 10 of 16 ADMET tasks in the TDC, outperforming other models such as Chemprop and GraphDTA on challenging endpoints like DILI (drug-induced liver injury) and hERG (cardiotoxicity) [22].
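Hard parameter sharing means every endpoint reuses one encoder, and only small output heads are task-specific. The sketch below shows the forward pass and a loss that simply skips endpoints without a measured label, a common way to train on sparse multi-endpoint ADMET data; whether Receptor.AI's model handles missing labels this way is not stated in the source. For brevity, a plain dense layer stands in for the GNN encoder, each head is a single linear layer rather than an MLP, and all weights and endpoint names are hypothetical.

```python
import math


def dense(W, b, x):
    """Affine layer W @ x + b, with W given as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) + bias for row, bias in zip(W, b)]


def relu(vec):
    return [max(0.0, v) for v in vec]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


class HardSharingModel:
    """One shared encoder (hard parameter sharing) feeding one small head per endpoint."""

    def __init__(self, encoder, heads):
        self.encoder = encoder  # (W, b), reused by every task
        self.heads = heads      # endpoint name -> (W, b) producing a single logit

    def forward(self, x):
        shared = relu(dense(*self.encoder, x))  # computed once, shared by all heads
        return {task: sigmoid(dense(W, b, shared)[0]) for task, (W, b) in self.heads.items()}


def masked_bce(preds, labels, eps=1e-12):
    """Mean binary cross-entropy over endpoints that have a label (None = not measured)."""
    losses = [
        -(y * math.log(preds[task] + eps) + (1 - y) * math.log(1 - preds[task] + eps))
        for task, y in labels.items()
        if y is not None
    ]
    return sum(losses) / len(losses) if losses else 0.0
```

Because gradients from every labelled endpoint flow into the same encoder, data-rich endpoints can help shape the shared representation used by data-poor ones, which is the usual motivation for this design.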

Receptor.AI's Drug-Target Interaction (DTI) Model Validation

The DTI model is foundational for primary virtual screening.

- Experimental Protocol: The model's performance was evaluated on two widespread public benchmark datasets, Davis (kinase inhibitors) and KIBA (kinase bioactivity scores). It was compared against eight other modern AI algorithms using metrics like Mean Squared Error (MSE) and Concordance Index (CI) [19].

- Key Findings: As shown in the table below, Receptor.AI's DTI model demonstrated superior performance on both datasets across all metrics compared to other state-of-the-art methods [19].

| Dataset | Metric | Receptor.AI DTI | Next Best Competitor |
|---|---|---|---|
| Davis | MSE | 0.219 | 0.234 (DeepCDA) |
| | CI | 0.898 | 0.886 (GraphDTA) |
| | rm² | 0.716 | 0.681 (DeepCDA) |
| KIBA | MSE | 0.136 | 0.144 (GraphDTA) |
| | CI | 0.887 | 0.863 (DeepCDA) |
| | rm² | 0.782 | 0.701 (DeepCDA) |

Real-World Performance Test

In a separate benchmark, the DTI model was tasked with prioritizing known active ligands for 8 protein targets from a large pool of decoy molecules. The model successfully placed a high number of known actives in the top ranks; for instance, for the protein BACE1, 9 out of 9 known active ligands were identified within the top 100 ranked compounds [19].
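The two evaluation styles above can be made concrete. The concordance index (CI) asks how often a model orders pairs of compounds by affinity correctly, and the real-world test counts known actives among the top-ranked compounds. The sketch below follows the CI definition commonly used for Davis and KIBA (pairs with tied true values are skipped, tied predictions score 0.5); the exact evaluation code behind the reported numbers [19] is not given in the source, and the function names are hypothetical.

```python
from itertools import combinations


def concordance_index(y_true, y_pred):
    """Fraction of comparable pairs whose predicted order matches the true order.

    Pairs with equal true values are not comparable and are skipped;
    tied predictions count as half-concordant. O(n^2), fine for a sketch.
    """
    concordant, comparable = 0.0, 0
    for i, j in combinations(range(len(y_true)), 2):
        if y_true[i] == y_true[j]:
            continue
        comparable += 1
        hi, lo = (i, j) if y_true[i] > y_true[j] else (j, i)
        if y_pred[hi] > y_pred[lo]:
            concordant += 1.0
        elif y_pred[hi] == y_pred[lo]:
            concordant += 0.5
    return concordant / comparable


def actives_in_top_k(scores, is_active, k):
    """Return (actives found among the k highest-scoring compounds, total actives)."""
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    found = sum(1 for i in ranked[:k] if is_active[i])
    return found, sum(is_active)
```

In these terms, the BACE1 result corresponds to `actives_in_top_k(scores, is_active, k=100)` returning `(9, 9)`. A CI of 0.5 is what random ranking would achieve, so the reported values near 0.89 indicate that most affinity pairs are ordered correctly.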

Both platforms provide access to extensive research resources, though their nature differs significantly due to the platforms' distinct models.

| Tool/Resource | Platform | Description | Function in Discovery |
|---|---|---|---|
| ChemoVista | Receptor.AI [18] | A curated library of over 8 million in-stock, QC-validated small molecules. | Hit discovery and lead optimization; provides readily available compounds for high-throughput screening campaigns. |
| VirtuSynthium | Receptor.AI [18] | A vast space of 10¹⁶ synthesis-ready virtual compounds built from over 1 million reagents. | Expands accessible chemical space for AI-driven de novo design, with real-time synthesis feasibility checks. |
| DNA-Encoded Libraries (DEL) | Logica [17] | A high-throughput hit-finding technology comprising vast collections of small molecules tagged with DNA barcodes. | Rapidly identifies binders for a target protein from millions to billions of compounds in a single experiment. |
| OmniPeptide Nexus | Receptor.AI [18] | A platform for designing and optimizing linear and cyclic peptides of 2-100 amino acids, including modified variants. | Targets challenging protein-protein interactions and "undruggable" targets with peptide therapeutics. |
| Integrated in vitro & in vivo Models | Logica [17] | A collection of hundreds of pharmacological and biological assay systems provided by Charles River. | Provides empirical data on compound efficacy, pharmacokinetics, and toxicity to validate AI predictions and guide optimization. |

For researchers, the choice between a platform like Receptor.AI and one like Logica hinges on strategic priorities. Receptor.AI offers a deeply integrated, generative AI engine for pioneering novel modalities against difficult targets. In contrast, Logica provides a de-risked path to a clinical candidate for small-molecule programs by guaranteeing outcomes and leveraging proven experimental infrastructure.

The pharmaceutical Absorption, Distribution, Metabolism, Excretion, and Toxicity (ADMET) testing sector represents a critical pillar in the drug development pipeline, enabling the assessment of drug safety and efficacy before clinical use [23]. This market has experienced substantial expansion, growing from $9.67 billion in 2024 to an expected $10.7 billion in 2025, reflecting a compound annual growth rate (CAGR) of 10.6% [24] [25]. Projections indicate continued robust growth, with the market expected to reach $17.03 billion by 2029, propelled by a CAGR of 12.3% [24] [23]. This growth trajectory is underpinned by several key drivers, including escalating drug development activities, increasing regulatory requirements for product approvals, and a marked shift toward innovative testing methodologies that integrate artificial intelligence and computational modeling [24] [25] [23].
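The growth rates quoted above can be checked with the standard compound annual growth rate formula, where V is the market size and n the number of years. The dollar values are themselves rounded, so agreement to roughly one decimal place is all that can be expected; the second line assumes the 12.3% figure is measured from the 2025 base.

```latex
\begin{aligned}
\mathrm{CAGR} &= \left(\frac{V_{\mathrm{end}}}{V_{\mathrm{start}}}\right)^{1/n} - 1 \\
\text{2024 to 2025:}\quad \left(\frac{10.7}{9.67}\right)^{1/1} - 1 &\approx 0.1065 \quad (\text{reported: } 10.6\%) \\
\text{2025 to 2029:}\quad \left(\frac{17.03}{10.7}\right)^{1/4} - 1 &\approx 1.5916^{0.25} - 1 \approx 0.1232 \quad (\text{reported: } 12.3\%)
\end{aligned}
```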

The rising number of product approvals directly fuels the ADMET testing market, as these assessments are mandatory for regulatory clearance. For instance, the U.S. Food and Drug Administration (FDA) approved 55 new drugs in 2023, up from 37 in 2022, increasing the demand for comprehensive safety and efficacy profiling [24] [23]. Furthermore, a significant surge in clinical trials has amplified the need for tailored ADMET evaluations; as of May 2023, 452,604 clinical studies were registered on ClinicalTrials.gov, a substantial increase from over 365,000 trials in early 2021 [25] [23]. This expanding landscape sets the stage for rigorous benchmarking of the tools and methodologies that enable these essential assessments.

Market Segmentation and Key Drivers

Market Segmentation Analysis

The pharma ADMET testing market is segmented by testing type, technology, and application area, each contributing differently to market dynamics and growth [24] [25] [23].

Table 1: Pharma ADMET Testing Market Segmentation

| Segmentation Type | Key Categories | Sub-segments and Specializations |
|---|---|---|
| By Testing Type | In Vivo ADMET Testing | Animal Studies, Pharmacokinetics Studies, Toxicology Studies, Biodistribution Studies [25] |
| | In Vitro ADMET Testing | Metabolism Studies, Drug-Drug Interaction Studies, Absorption Studies, Cytotoxicity and Safety Testing [25] |
| | In Silico ADMET Testing | Predictive Modeling and Simulation, QSAR Analysis, Machine Learning Algorithms, Software Tools [25] |
| By Technology | Cell Culture, High Throughput, Molecular Imaging, OMICS Technology [24] | |
| By Application | Systemic Toxicity, Renal Toxicity, Hepatotoxicity, Neurotoxicity, Other Applications [24] | |

Asia-Pacific emerged as the largest regional market in 2024, with North America and Europe also representing significant markets [24] [25]. The in silico segment is witnessing particularly rapid evolution, driven by technological innovations and the trend toward reducing animal testing [26].

Primary Market Growth Drivers

Several interrelated factors are propelling the growth of the ADMET testing market:

- Increasing Product Approvals: The growing number of regulatory authorizations for new drugs directly stimulates demand for ADMET testing services, as these evaluations are prerequisites for establishing safety and efficacy profiles [24] [23].

- Expansion of Clinical Trials: The substantial increase in registered clinical studies worldwide elevates the need for thorough pharmacokinetic and toxicological assessments across diverse drug candidates and patient populations [25] [23].

- Growth of Biologics and Biosimilars: The expanding presence of biopharmaceuticals and biosimilars in the therapeutic landscape requires specialized ADMET testing protocols, contributing to market diversification and growth [24] [23].

- Stringent Regulatory Mandates: Global regulatory bodies are implementing expanded testing requirements, ensuring comprehensive safety assessment and driving market standardization [24].

Benchmarking Open-Source and Commercial ADMET Tools

Experimental Protocol for Software Evaluation

Benchmarking computational ADMET tools requires a structured methodology to ensure fair and reproducible comparisons. The following protocol outlines key steps for objective evaluation:

Dataset Curation and Standardization: Collect experimental data from publicly available chemical databases (e.g., ChEMBL, PubChem) and the literature [3] [7]. Standardize molecular structures with toolkits such as RDKit, including salt stripping and charge neutralization, removal of duplicates, and curation of ambiguous values [3]. Identify and exclude response outliers through Z-score analysis, and remove compounds with inconsistent experimental values across different datasets [3].
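The outlier step can be sketched in a few lines of standard-library Python. The |z| > 3 cutoff and the use of the population standard deviation are assumptions made for illustration; the cited study's exact threshold is not given here.

```python
import statistics


def drop_zscore_outliers(values, threshold=3.0):
    """Split a response column into (kept, removed) by absolute z-score.

    z = (value - mean) / population standard deviation of the column.
    The default cutoff of 3.0 is an illustrative assumption.
    """
    mean = statistics.fmean(values)
    sd = statistics.pstdev(values)
    if sd == 0:
        # All values identical: nothing can be an outlier.
        return list(values), []
    kept, removed = [], []
    for v in values:
        if abs(v - mean) / sd > threshold:
            removed.append(v)
        else:
            kept.append(v)
    return kept, removed


# Usage: twenty ordinary measurements plus one implausible value.
data = [float(i % 5) for i in range(20)] + [100.0]
kept, removed = drop_zscore_outliers(data)
print(removed)  # [100.0]
```

One design caveat: with the population standard deviation, no value in a sample of n can have |z| above sqrt(n - 1), so with 10 or fewer values a cutoff of 3 can never be exceeded and small groups need a different check.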

Definition of Applicability Domain: Assess whether each test compound falls within the chemical space of each tool's training set. This critical step determines how reliable predictions are for specific chemical classes, such as the cyclic heptapeptide microcystins [13].
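One common and simple way to operationalize an applicability-domain check is nearest-neighbour Tanimoto similarity: a query compound counts as in-domain if at least one training compound is similar enough. The sketch assumes fingerprints are already available as sets of on-bit indices (for example from an RDKit Morgan fingerprint's `GetOnBits()`). The 0.3 threshold is a hypothetical choice, and individual tools each use their own, often different, domain definitions.

```python
def tanimoto(a, b):
    """Tanimoto (Jaccard) similarity between two sets of on-bit indices."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def in_domain(query, training_fps, threshold=0.3):
    """Return (is_inside, nearest_similarity) for one query fingerprint.

    The query is treated as inside the applicability domain when its most
    similar training compound reaches `threshold` (hypothetical default).
    """
    best = max((tanimoto(query, fp) for fp in training_fps), default=0.0)
    return best >= threshold, best


# Usage with toy bit sets standing in for real fingerprints.
training = [frozenset({1, 2, 3, 4}), frozenset({10, 11, 12})]
print(in_domain(frozenset({1, 2, 3, 5}), training))  # (True, 0.6)
print(in_domain(frozenset({20, 21}), training))      # (False, 0.0)
```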

External Validation Procedure: Use carefully curated external validation datasets that were not part of any tool's training data, and emphasize model performance inside the established applicability domain [3]. For properties with conflicting experimental values, exclude ambiguous data using a standardized deviation threshold (e.g., a standardized standard deviation greater than 0.2) [3].
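The ambiguity filter can be sketched as follows. The definition of the standardized standard deviation used here (sample standard deviation of replicate measurements divided by the absolute mean) is an assumption, as is averaging the replicates that survive; check the cited study for its exact formula.

```python
import statistics
from collections import defaultdict


def filter_ambiguous(records, max_ssd=0.2):
    """Aggregate replicate measurements per compound, dropping ambiguous ones.

    records: iterable of (compound_id, value) pairs.
    Returns {compound_id: mean_value} for compounds whose standardized
    standard deviation (assumed: stdev / |mean|) does not exceed max_ssd.
    Single measurements are kept as-is.
    """
    groups = defaultdict(list)
    for cid, value in records:
        groups[cid].append(value)
    kept = {}
    for cid, vals in groups.items():
        mean = statistics.fmean(vals)
        if len(vals) > 1:
            if mean == 0:
                continue  # ratio undefined; treat as ambiguous
            if statistics.stdev(vals) / abs(mean) > max_ssd:
                continue  # replicates disagree too much
        kept[cid] = mean
    return kept


records = [("A", 2.0), ("A", 2.1), ("B", 1.0), ("B", 3.0), ("C", 5.0)]
print(filter_ambiguous(records))  # B is dropped; A and C are kept
```

Because this ratio grows without bound as the mean approaches zero, it is poorly suited to properties such as logP that often sit near zero; scaling by the property's overall range is one possible alternative.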

Performance Metrics Calculation: For regression tasks (e.g., logP, solubility), calculate the coefficient of determination (R²) between predicted and experimental values. For classification tasks (e.g., BBB permeability, P-gp inhibition), compute balanced accuracy to account for class imbalance [3].
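Both metrics are simple to compute directly. In this sketch R² is the coefficient of determination, 1 - SS_res / SS_tot; some studies instead report the squared Pearson correlation, which ignores systematic bias in the predictions, so the definition should be checked before comparing numbers across tools. Balanced accuracy is the mean of sensitivity and specificity.

```python
def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(y_true) / len(y_true)
    ss_tot = sum((y - mean) ** 2 for y in y_true)
    ss_res = sum((y - p) ** 2 for y, p in zip(y_true, y_pred))
    return 1.0 - ss_res / ss_tot


def balanced_accuracy(y_true, y_pred):
    """Mean of sensitivity (recall on class 1) and specificity (recall on class 0)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return (sensitivity + specificity) / 2


# Imbalanced toy endpoint: 4 positives, 16 negatives, model always says 0.
y_true = [1] * 4 + [0] * 16
y_pred = [0] * 20
print(balanced_accuracy(y_true, y_pred))  # 0.5
```

This trivial model reaches 80% plain accuracy but only 0.5 balanced accuracy, which is why balanced accuracy is preferred for imbalanced classification endpoints.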

Comparative Analysis: Systematically compare predictive performance across software tools for each ADMET property, identifying optimal tools for specific endpoints and chemical spaces [3] [13].

Diagram of the experimental workflow for benchmarking ADMET software tools, from initial data collection to final analysis.

Comparative Performance Analysis of ADMET Software

Recent comprehensive studies have benchmarked multiple computational tools for predicting physicochemical (PC) and toxicokinetic (TK) properties. A 2024 evaluation of twelve software tools implementing Quantitative Structure-Activity Relationship (QSAR) models revealed that models for PC properties (average R² = 0.717) generally outperformed those for TK properties (average R² = 0.639 for regression, average balanced accuracy = 0.780 for classification) [3]. This performance differential highlights the greater complexity of predicting biological interactions compared to fundamental physicochemical characteristics.

Table 2: Comparative Analysis of ADMET Prediction Software

| Software Tool | License Type | Key Strengths | Performance Notes | Ideal Use Cases |
|---|---|---|---|---|
| ADMET Predictor | Commercial | Extensive model coverage (70+ models); Broad chemical applicability [13] | High consistency for microcystins; Valid predictions across multiple endpoints [13] | Industrial drug discovery; Environmental toxicology |
| admetSAR | Freemium | Balanced for drug-like and broader chemical compounds [13] | Similar results to ADMET Predictor despite fewer models [13] | Academic research; Preliminary screening |
| SwissADME | Free | User-friendly interface; Tailored for drug simulations [13] | Some discrepant results for specific toxin classes [13] | Early-stage drug discovery; Educational purposes |
| T.E.S.T. | Free | Focus on environmental toxicology; Acute toxicity in aquatic organisms [13] | Adequate for lipophilicity, permeability, absorption [13] | Environmental risk assessment |
| RDKit | Open-Source | Comprehensive descriptor calculation; High customizability [27] | Foundation for ADMET predictions but requires external models [27] | Building custom prediction pipelines; Research informatics |
| ADMETlab | Free | Tailored for drug simulations [13] | Molecule size/mass limitations for certain toxins [13] | Standard drug-like molecules |

Specialized studies comparing software for specific toxin classes provide further insights into performance characteristics. When evaluating microcystin toxicity, researchers found ADMET Predictor, admetSAR, SwissADME, and T.E.S.T. adequate for predicting lipophilicity, permeability, intestinal absorption, and transport proteins, while ADMETlab and ECOSAR showed limitations due to molecule size/mass constraints [13]. This demonstrates the critical importance of applicability domain assessment when selecting computational tools for specific chemical classes.

Emerging Trends and Future Outlook

Key Market Trends Shaping the ADMET Landscape

Several prominent trends are reshaping the pharma ADMET testing sector and influencing tool development:

Integration of Artificial Intelligence: Major companies are launching AI-powered solutions that significantly enhance predictive capabilities. For instance, Charles River Laboratories and Valo Health introduced Logica, a platform that leverages the Opal Computational Platform to provide AI-enhanced ADMET testing services [24] [25] [23].

Strategic Partnerships and Collaborations: Leading market players are increasingly forming strategic alliances to advance computational capabilities. Excelra's partnership with HotSpot Therapeutics integrates annotated datasets into AI/ML models to accelerate allosteric drug discovery, demonstrating how collaboration drives innovation [25] [23].

Focus on Product Innovation: Continuous innovation in testing methodologies and platforms is essential for maintaining competitive advantage. Companies are investing heavily in developing novel testing solutions that improve accuracy, reduce costs, and decrease reliance on animal testing [25] [23].

Advancements in High-Throughput and OMICS Technologies: Technological improvements in screening efficiency and comprehensive molecular profiling are enhancing the depth and speed of ADMET assessments, enabling more thorough evaluation of drug candidates [24].

Rising Importance of ESG Considerations: Environmental, Social, and Governance (ESG) factors are increasingly influencing ADMET testing practices, driving adoption of greener laboratory processes, ethical testing protocols, and reduced animal experimentation [26].

The Scientist's Toolkit: Essential Research Reagents and Solutions

Table 3: Key Research Reagent Solutions for ADMET Testing

| Reagent/Assay System | Function in ADMET Testing | Application Context |
|---|---|---|
| Caco-2 Cell Lines | Model human intestinal absorption and permeability [3] | In vitro absorption studies |
| Human Liver Microsomes | Evaluate metabolic stability and metabolite formation [25] | In vitro metabolism studies |
| Plasma Protein Binding Assays | Determine the fraction of compound unbound in plasma (FUB) [3] | Distribution studies |
| hERG Assay Kits | Assess potential for cardiotoxicity via hERG channel interaction [25] | Safety pharmacology |
| Cyanobacterial Toxins (e.g., MC-LR) | Reference compounds for environmental toxicology assessment [13] | Toxicity benchmarking |
| 3D Liver Microtissues | More physiologically relevant models for hepatotoxicity screening [23] | Advanced in vitro toxicity testing |
| DNA-Encoded Libraries | Enable high-throughput screening of compound interactions [24] [25] | Discovery optimization |

Decision tree for selecting appropriate ADMET software tools based on budget, chemical scope, and customization needs.

The pharma ADMET testing sector continues to evolve rapidly, driven by increasing regulatory requirements, technological advancements, and growing demand for efficient drug development processes. The benchmarking of open-access and commercial ADMET tools reveals a diverse landscape where optimal software selection depends on specific research needs, chemical space, and available resources. Commercial solutions like ADMET Predictor offer extensive model coverage and reliability for industrial applications, while open-access platforms provide valuable capabilities for academic research and preliminary screening, particularly for standard drug-like molecules.

The integration of artificial intelligence, strategic industry partnerships, and continuous methodological innovations are poised to further transform the ADMET testing landscape. As the market progresses toward the projected $17 billion mark by 2029, researchers and drug development professionals will benefit from increasingly sophisticated computational tools that enhance predictive accuracy while reducing costs and animal testing. These advancements will ultimately contribute to more efficient drug discovery pipelines and safer therapeutic products, underscoring the critical importance of ongoing tool development and rigorous benchmarking in this essential sector.

Designing a Rigorous Benchmarking Protocol: Best Practices for Model Evaluation

Accurate prediction of Absorption, Distribution, Metabolism, Excretion, and Toxicity (ADMET) properties sits at the heart of modern drug discovery, directly influencing a drug's efficacy, safety, and ultimate clinical success. The rise of computational approaches provides a fast and cost-effective means for early ADMET assessment, allowing researchers to focus resources on the most promising drug candidates [28]. However, the performance and reliability of these artificial intelligence models are fundamentally constrained by the quality of the data on which they are trained. Public benchmark datasets for ADMET properties face significant challenges related to data consistency, standardization, and overall cleanliness, issues that permeate many widely used resources and complicate fair comparison of computational methods [5] [29]. This guide objectively examines the data curation methodologies and cleaning protocols employed by two major public initiatives—Therapeutics Data Commons (TDC) and PharmaBench—contrasting their approaches to overcoming inherent inconsistencies in public bioassay data.

The landscape of publicly available ADMET data has evolved significantly, with newer datasets attempting to address the shortcomings of earlier efforts. The following section provides a detailed comparison of the resources in terms of scale, curation, and data quality.

Table 1: Overview and Comparison of ADMET Data Resources

| Feature | Therapeutics Data Commons (TDC) | PharmaBench | Legacy Benchmarks (e.g., MoleculeNet) |
|---|---|---|---|
| Initial Release & Scale | 2021; 22 ADMET tasks in benchmark group [30] | 2024; 11 ADMET properties [28] | 2017; 16 datasets across 4 categories [29] |
| Primary Data Sources | Integrates multiple previously curated datasets [28] | ChEMBL, AstraZeneca, B3DB, and other public datasets [28] | Combines data from sources like ChEMBL, PubChem [29] |
| Key Data Curation Strategy | Provides standardized data splits (scaffold, random); data functions and processors [31] | Multi-agent LLM system to extract experimental conditions from assay descriptions [28] | Aggregation of public data with limited re-curation [29] |
| Scale (Compounds) | Over 100,000 entries across ADMET datasets [28] | 52,482 curated entries from 156,618 raw entries [28] | Varies; e.g., ESOL has 1,128 compounds [28] |
| Handling of Experimental Conditions | Limited explicit filtering based on conditions [5] | Systematic extraction and filtering based on buffer, pH, technique, etc. [28] | Largely unaddressed; results from different conditions are often combined [29] |
| Notable Data Quality Issues | Inconsistent binary labels for the same SMILES; data cleanliness challenges [5] | Designed to mitigate these issues via structured curation | Invalid chemical structures (e.g., in BBB dataset); duplicate entries with conflicting labels [29] |

The Data Quality Challenge in Older Benchmarks

Legacy benchmarks, while foundational, exhibit numerous flaws that undermine their utility for rigorous method comparison. The widely used MoleculeNet collection, cited over 1,800 times, serves as a prime example of these challenges [29]. Technical issues abound, including the presence of invalid chemical structures that cannot be parsed by standard cheminformatics toolkits, a lack of consistent chemical representation (e.g., the same functional group represented in protonated, anionic, and salt forms), and a high prevalence of molecules with undefined stereochemistry [29]. These problems are compounded by philosophical issues, such as the aggregation of data from dozens of original sources without sufficient normalization of experimental protocols, leading to inconsistencies in measurement [29]. Perhaps most critically, datasets like the MoleculeNet Blood-Brain Barrier (BBB) penetration dataset contain fundamental curation errors, including duplicate molecular structures with conflicting activity labels [29].

TDC: A Unified Ecosystem with Persistent Data Cleanliness Hurdles

Therapeutics Data Commons (TDC) represents a significant step forward, creating a unified ecosystem of machine-learning tasks, datasets, and benchmarks for therapeutic science [31]. Its key innovation lies in providing a standardized Python library with systematic data splits, particularly scaffold splits, which keep all molecules sharing a core scaffold in the same partition so that test-set scaffolds are unseen during training, simulating prediction on novel chemical series [30] [31]. This approach offers a more meaningful evaluation of model generalizability. However, independent analyses confirm that TDC datasets, like their predecessors, face significant data cleanliness challenges. These include inconsistent binary labels for identical SMILES strings across training and test sets, the presence of fragmented SMILES representing multiple organic compounds, and duplicate measurements with varying values [5]. These inconsistencies necessitate rigorous data cleaning before reliable model training can occur.
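A minimal scaffold split can be written once each molecule's Bemis-Murcko scaffold is known. The sketch assumes scaffold SMILES were computed beforehand (for example with RDKit's `MurckoScaffold.MurckoScaffoldSmiles`) and uses one common greedy variant that assigns the largest scaffold families to training first. TDC itself exposes scaffold splitting through a dataset loader's `get_split(method='scaffold')`, and its implementation may differ in detail.

```python
from collections import defaultdict


def scaffold_split(scaffolds, frac_train=0.8, frac_valid=0.1):
    """Split molecule indices so that no scaffold spans two partitions.

    scaffolds[i] is the precomputed scaffold SMILES of molecule i.
    Scaffold groups are visited largest first; each whole group goes to
    train if it fits, else to valid if it fits, else to test.
    """
    groups = defaultdict(list)
    for idx, scaffold in enumerate(scaffolds):
        groups[scaffold].append(idx)
    # Largest families first; ties broken by first occurrence for determinism.
    ordered = sorted(groups.values(), key=lambda g: (-len(g), g[0]))
    n = len(scaffolds)
    train, valid, test = [], [], []
    for group in ordered:
        if len(train) + len(group) <= frac_train * n:
            train.extend(group)
        elif len(valid) + len(group) <= frac_valid * n:
            valid.extend(group)
        else:
            test.extend(group)
    return train, valid, test


# Usage: 10 molecules over 4 scaffolds ("" stands for an acyclic molecule).
scaffolds = ["c1ccccc1"] * 6 + ["C1CCCCC1"] * 2 + ["c1ccncc1", ""]
train, valid, test = scaffold_split(scaffolds)
print(train, valid, test)  # [0, 1, 2, 3, 4, 5, 6, 7] [8] [9]
```

Because rare scaffolds are visited last, they tend to end up in the test set, which is exactly what makes this split harder than a random one.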

PharmaBench: Leveraging LLMs for Systematic Data Curation

PharmaBench, a more recent and comprehensive benchmark, was created specifically to address the limitations of previous resources, most notably their small size and lack of representativeness toward drug discovery compounds [28]. Its core innovation is a multi-agent data mining system powered by Large Language Models (LLMs) that automatically identifies and extracts critical experimental conditions from unstructured assay descriptions in databases like ChEMBL [28]. This workflow allows for the merging of entries from different sources based on standardized experimental parameters, such as pH, analytical method, and solvent system. The result is a larger and more chemically diverse benchmark, with molecular weights more aligned with those in drug discovery pipelines (300-800 Dalton) compared to older sets like ESOL (mean 203.9 Dalton) [28]. The process of standardizing and filtering data based on these extracted conditions is a key differentiator in its curation methodology.

Table 2: Experimental Condition Filtering in PharmaBench Curation

| ADMET Property | Key Extracted Experimental Conditions | Standardized Filter Criteria |
|---|---|---|
| LogD | pH, Analytical Method, Solvent System, Incubation Time | pH = 7.4, Analytical Method = HPLC, Solvent System = octanol-water [28] |
| Water Solubility | pH Level, Solvent/System, Measurement Technique | 7 ≤ pH ≤ 7.6, Solvent = Water, Technique = HPLC [28] |
| Blood-Brain Barrier (BBB) | Cell Line Models, Permeability Assays, pH Levels | Cell Line Models = BBB, Permeability Assays ≠ effective permeability [28] |
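Once experimental conditions have been extracted into structured fields, applying criteria like those in Table 2 reduces to simple predicates. The field names (`pH`, `method`, `technique`, `solvent`) and the record layout below are hypothetical, not PharmaBench's actual schema, and only the LogD and water-solubility rules are shown.

```python
def _ph(conditions):
    """Return the extracted pH as a float, or None if it is missing."""
    value = conditions.get("pH")
    return None if value is None else float(value)


def logd_ok(conditions):
    """LogD rule: pH 7.4, HPLC, octanol-water."""
    ph = _ph(conditions)
    return (ph is not None and abs(ph - 7.4) < 1e-6
            and str(conditions.get("method", "")).upper() == "HPLC"
            and str(conditions.get("solvent", "")).lower() == "octanol-water")


def solubility_ok(conditions):
    """Water-solubility rule: 7 <= pH <= 7.6, water, HPLC."""
    ph = _ph(conditions)
    return (ph is not None and 7.0 <= ph <= 7.6
            and str(conditions.get("solvent", "")).lower() == "water"
            and str(conditions.get("technique", "")).upper() == "HPLC")


RULES = {"logd": logd_ok, "solubility": solubility_ok}


def filter_records(records, prop):
    """Keep only records whose extracted conditions satisfy the property's rule."""
    rule = RULES[prop]
    return [r for r in records if rule(r["conditions"])]


records = [
    {"id": "a", "conditions": {"pH": 7.4, "method": "HPLC", "solvent": "octanol-water"}},
    {"id": "b", "conditions": {"pH": 6.5, "method": "HPLC", "solvent": "octanol-water"}},
    {"id": "c", "conditions": {"pH": "7.2", "technique": "HPLC", "solvent": "Water"}},
]
print([r["id"] for r in filter_records(records, "logd")])        # ['a']
print([r["id"] for r in filter_records(records, "solubility")])  # ['c']
```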

Experimental Protocols for Benchmarking and Data Cleaning

A Standardized Workflow for Data Cleaning

To ensure robust model performance, a rigorous data cleaning protocol must be applied to any dataset, whether public or proprietary. The following workflow, synthesized from recent benchmarking studies, outlines a structured approach to mitigate common data issues [5].

The process begins with SMILES Standardization, which ensures consistent representation of chemical structures [5]. This is followed by the removal of inorganic salts and organometallic compounds and the extraction of the organic parent compound from any salt forms, as the property measurement is typically attributed to the parent molecule [5]. Subsequent steps include tautomer standardization to achieve consistent functional group representation and canonicalization of SMILES strings. A critical step is duplicate handling, where entries with identical SMILES are grouped; if their target values are consistent (identical for binary tasks, within a tight range for regression), the first entry is kept, but the entire group is removed if values are inconsistent [5]. Finally, given the relatively small size of many ADMET datasets, a visual inspection using tools like DataWarrior is recommended as a final quality check [5].
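The duplicate-handling rule described above can be sketched in plain Python. It assumes SMILES have already been standardized and canonicalized (for example with RDKit), so identical structures share identical strings; the regression tolerance `tol` is a hypothetical value standing in for the unspecified "tight range".

```python
def deduplicate(entries, task="regression", tol=0.1):
    """Group entries by canonical SMILES and resolve duplicates.

    entries: list of (canonical_smiles, value) pairs in original order.
    A group is kept (first entry only) when its values are consistent:
    identical for classification, max - min <= tol for regression.
    Otherwise the whole group is dropped.
    """
    groups = {}  # dicts preserve insertion order, so "first entry" is well defined
    for smiles, value in entries:
        groups.setdefault(smiles, []).append(value)
    cleaned = []
    for smiles, values in groups.items():
        if task == "classification":
            consistent = len(set(values)) == 1
        else:
            consistent = max(values) - min(values) <= tol
        if consistent:
            cleaned.append((smiles, values[0]))
    return cleaned


entries = [("CCO", -0.3), ("CCO", -0.28), ("c1ccccc1", 2.1),
           ("c1ccccc1", 1.2), ("CC(=O)O", -0.2)]
print(deduplicate(entries))  # benzene is dropped: its duplicates disagree
```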

Protocols for Benchmarking Model Performance

When benchmarking ADMET prediction tools, the methodology for model training and evaluation is as important as the data itself. The following protocols are considered best practice.

Hyperparameter Optimization and Model Training: For machine learning models such as XGBoost, a randomized search with cross-validation (CV) over a predefined parameter grid is typically used to optimize key parameters, including n_estimators (number of trees), max_depth (maximum tree depth), learning_rate (boosting learning rate), and the regularization terms (reg_alpha, reg_lambda) [30]. The configuration with the highest CV score is selected for final evaluation on a held-out test set. This process is often repeated over multiple random seeds (e.g., 5 times) to ensure the stability of results [30].
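The search loop itself is short. The sketch below uses only the standard library: `cv_score` is a hypothetical callable that would train a model (for example `xgboost.XGBRegressor`) with the given parameters and return its mean cross-validation score, and the values in the grid are illustrative assumptions. In practice, scikit-learn's `RandomizedSearchCV` implements the same idea.

```python
import random

# Hypothetical search space covering the XGBoost parameters named in the text.
PARAM_GRID = {
    "n_estimators": [100, 300, 500, 1000],
    "max_depth": [3, 4, 6, 8],
    "learning_rate": [0.01, 0.05, 0.1, 0.3],
    "reg_alpha": [0.0, 0.1, 1.0],
    "reg_lambda": [0.1, 1.0, 10.0],
}


def random_search(cv_score, grid, n_iter=20, seed=0):
    """Sample n_iter configurations from grid; return (best_score, best_params)."""
    rng = random.Random(seed)
    best_score, best_params = float("-inf"), None
    for _ in range(n_iter):
        params = {name: rng.choice(values) for name, values in grid.items()}
        score = cv_score(params)  # higher is better, e.g. mean CV score
        if score > best_score:
            best_score, best_params = score, params
    return best_score, best_params


def repeated_search(cv_score, grid, seeds=(0, 1, 2, 3, 4), n_iter=20):
    """Repeat the search once per seed to check how stable the optimum is."""
    return [random_search(cv_score, grid, n_iter, seed) for seed in seeds]


def toy_cv_score(params):
    """Stand-in for real CV: prefers max_depth=6 and learning_rate=0.1."""
    return -abs(params["max_depth"] - 6) - abs(params["learning_rate"] - 0.1)


for score, params in repeated_search(toy_cv_score, PARAM_GRID):
    print(round(score, 3), params["max_depth"], params["learning_rate"])
```

Repeating the search over seeds shows whether the selected configuration is stable or an artifact of one random draw; in a full pipeline the seed would also fix the CV fold assignment and the model's own randomness.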

Performance Evaluation Metrics: The choice of evaluation metric depends on the task type. For regression tasks (e.g., predicting solubility or clearance), common metrics are Mean Absolute Error (MAE), which measures the average deviation between predictions and true values, and Spearman's correlation coefficient, which assesses the monotonic relationship between ranked variables [30]. For binary classification tasks (e.g., toxicity or inhibition), the Area Under the Receiver Operating Characteristic Curve (AUROC) and the Area Under the Precision-Recall Curve (AUPRC) are standard, with higher values indicating better model performance [30].

Statistical Significance Testing: To move beyond simple performance comparisons on hold-out test sets, advanced benchmarking incorporates cross-validation with statistical hypothesis testing [5]. This involves running multiple cross-validation folds, generating a distribution of performance scores, and then applying appropriate statistical tests (e.g., paired t-tests) to determine if the performance differences between models are statistically significant, thereby adding a layer of reliability to model assessments [5].
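The core of this test is the paired t statistic over per-fold scores that two models obtained on identical folds. The sketch computes only the statistic and its degrees of freedom; `scipy.stats.ttest_rel` returns the same statistic together with a p-value. The fold scores in the usage lines are made up for illustration.

```python
import math
import statistics


def paired_t(scores_a, scores_b):
    """Paired t statistic and degrees of freedom for per-fold score pairs."""
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    n = len(diffs)
    sd = statistics.stdev(diffs)  # sample standard deviation of the differences
    if sd == 0:
        raise ValueError("identical differences: t statistic is undefined")
    return statistics.fmean(diffs) / (sd / math.sqrt(n)), n - 1


# Made-up AUROC values for two models on the same 5 CV folds.
model_a = [0.80, 0.82, 0.78, 0.85, 0.81]
model_b = [0.78, 0.80, 0.77, 0.82, 0.80]
t, df = paired_t(model_a, model_b)
print(round(t, 2), df)  # 4.81 4
```

Here t is about 4.81 with 4 degrees of freedom, above the two-sided 5% critical value of about 2.776. Because cross-validation folds share training data, this naive test tends to overstate significance; corrected variants such as the Nadeau-Bengio corrected resampled t-test are often preferred.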

Table 3: Essential Software and Data Resources for ADMET Research

| Tool or Resource | Type | Primary Function in ADMET Research |
|---|---|---|
| Therapeutics Data Commons (TDC) [31] | Python Library / Data Resource | Provides unified access to numerous curated datasets, benchmark tasks, and data splitting functions for systematic model evaluation. |
| RDKit [5] [28] | Cheminformatics Toolkit | The workhorse for chemical data handling; used to compute molecular descriptors, fingerprints, standardize structures, and handle tautomers. |
| DataWarrior [5] | Desktop Application | An interactive tool for visual data analysis, used for the final visual inspection of cleaned datasets to identify potential outliers or patterns. |
| Scikit-learn [28] | Python Library | Provides standard implementations for machine learning models, preprocessing, and evaluation metrics crucial for benchmarking. |
| XGBoost [30] | Machine Learning Library | A powerful tree-based boosting algorithm frequently used as a strong baseline or top-performing model for ADMET prediction tasks. |
| Chemprop [5] | Deep Learning Library | A message-passing neural network (MPNN) specifically designed for molecular property prediction, often used in state-of-the-art comparisons. |
| PharmaBench [28] | Data Resource | A more recent, large-scale benchmark dataset curated using LLMs, designed to be more representative of drug-like chemical space. |

| PharmaBench [28] | Data Resource | A more recent, large-scale benchmark dataset curated using LLMs, designed to be more representative of drug-like chemical space. |

The evolution of public ADMET datasets from simple aggregates like MoleculeNet to systematically curated resources like TDC and PharmaBench marks significant progress in the field. While challenges of data inconsistency, erroneous labels, and incompatible experimental conditions persist, newer resources are employing advanced strategies, including LLM-powered condition extraction and rigorous standardization workflows, to overcome them [28] [5]. For researchers, the choice of dataset and the application of a rigorous cleaning protocol are paramount. Benchmarking studies consistently show that data diversity and representativeness, rather than model architecture alone, are the dominant factors driving predictive accuracy and generalizability [9]. As the community moves forward, the adoption of standardized cleaning practices, robust benchmarking protocols involving statistical testing, and the utilization of larger, more carefully curated benchmarks will be essential for developing ADMET models with truly reliable predictive power in real-world drug discovery applications.

The selection of an optimal molecular representation is a foundational step in computational drug discovery, directly influencing the predictive accuracy of quantitative structure-activity relationship (QSAR) and quantitative structure-property relationship (QSPR) models. In the specific context of benchmarking open-access ADMET (Absorption, Distribution, Metabolism, Excretion, and Toxicity) tools against commercial software, this choice becomes critically important. Molecular representations translate chemical structures into a computationally tractable format, serving as the input feature space for machine learning (ML) and deep learning (DL) models. The three predominant paradigms are expert-designed molecular descriptors, expert-designed molecular fingerprints, and data-driven deep-learned embeddings.

This guide provides an objective comparison of these representation classes, synthesizing insights from recent, rigorous benchmarking studies to inform researchers and drug development professionals. The performance of these representations is evaluated based on key criteria including predictive accuracy, generalizability, computational efficiency, and interpretability, with a specific focus on ADMET property prediction tasks.

Expert-Designed Representations

Expert-designed representations rely on pre-defined rules and chemical knowledge to convert a molecular structure into a fixed-length vector.

- Molecular Descriptors: These are numerical quantities that capture physicochemical properties (e.g., molecular weight, logP, topological polar surface area) or graph-theoretical indices of the molecule. They are typically calculated using software like RDKit [32] [5] and can provide interpretable insights into the factors governing molecular activity.

- Molecular Fingerprints: These are bit-string representations that encode the presence or absence of specific structural patterns or substructures within a molecule. Common examples include the Extended Connectivity Fingerprint (ECFP) and MACCS keys [33] [34]. Their primary strength lies in molecular similarity searching and structure-activity modeling.

Deep-Learned Representations (Embeddings)

Deep-learned representations aim to automate feature extraction by using neural networks to map molecules into a continuous, high-dimensional vector space [35].

- Graph Neural Networks (GNNs): Models such as Message Passing Neural Networks (MPNNs) and Graph Isomorphism Networks (GINs) operate directly on the molecular graph structure, treating atoms as nodes and bonds as edges [5] [34]. They learn to aggregate information from a node's local neighborhood to generate an embedding for the entire molecule.

- SMILES-Based Transformers: Inspired by natural language processing, these models treat the SMILES string of a molecule as a sequence of tokens and use transformer architectures to learn contextualized embeddings [34].

- Self-Supervised Learning (SSL): Many modern embedding models are pre-trained on large, unlabeled chemical databases using SSL objectives, such as masking parts of the input or contrasting different views of the same molecule, with the goal of learning general-purpose, transferable representations [35] [34].
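To make the neighbourhood-aggregation idea concrete, the toy sketch below performs GIN-style sum aggregation on a three-atom graph (C-C-O, the heavy atoms of ethanol) with one-hot atom features. It deliberately omits the learned MLPs and weight matrices that real GINs and MPNNs apply at each layer, so it only shows how information propagates along bonds before a sum readout.

```python
def message_passing(features, edges, n_layers=2, eps=0.0):
    """GIN-style sum aggregation without learned weights.

    features: per-atom feature vectors (lists of floats).
    edges: (i, j) bonds, treated as undirected.
    Each layer sets h_v <- (1 + eps) * h_v + sum of neighbour vectors;
    the molecule embedding is the sum over atoms (sum readout).
    """
    neighbours = [[] for _ in features]
    for i, j in edges:
        neighbours[i].append(j)
        neighbours[j].append(i)
    h = [list(vec) for vec in features]
    for _ in range(n_layers):
        # Build the new layer entirely from the previous layer's vectors.
        h = [
            [(1 + eps) * h[v][k] + sum(h[u][k] for u in neighbours[v])
             for k in range(len(h[v]))]
            for v in range(len(h))
        ]
    return [sum(vec[k] for vec in h) for k in range(len(h[0]))]


# One-hot features [is_carbon, is_oxygen] for C-C-O.
atoms = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
bonds = [(0, 1), (1, 2)]
print(message_passing(atoms, bonds, n_layers=1))  # [5.0, 2.0]
print(message_passing(atoms, bonds, n_layers=2))  # [12.0, 5.0]
```

Each extra layer lets an atom's vector absorb information from one bond further away, which is why the embedding changes as layers are added even though no parameters are learned here.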

Comparative Performance Analysis: Experimental Data

Numerous independent studies have benchmarked these representation types across various molecular property prediction tasks. The following tables synthesize quantitative findings from recent, high-quality investigations.

Table 1: Performance comparison of feature representations and algorithms on an olfactory prediction dataset (n=8,681 compounds).

| Feature Representation | Algorithm | AUROC | AUPRC | Accuracy (%) | Specificity (%) | Precision (%) | Recall (%) |
|---|---|---|---|---|---|---|---|
| Morgan Fingerprints (ST) | XGBoost | 0.828 | 0.237 | 97.8 | 99.5 | 41.9 | 16.3 |
| Morgan Fingerprints (ST) | LightGBM | 0.810 | 0.228 | - | - | - | - |
| Morgan Fingerprints (ST) | Random Forest | 0.784 | 0.216 | - | - | - | - |
| Molecular Descriptors (MD) | XGBoost | 0.802 | 0.200 | - | - | - | - |
| Functional Group (FG) | XGBoost | 0.753 | 0.088 | - | - | - | - |

Source: Adapted from a study in Communications Chemistry [32]. Abbreviations: AUROC, Area Under the Receiver Operating Characteristic Curve; AUPRC, Area Under the Precision-Recall Curve. A dash marks a value not reported in this table.

Table 2: General findings from large-scale benchmarking studies across multiple ADMET and property prediction datasets.

| Representation Category | Example Models | Relative Performance | Key Strengths | Key Limitations |
|---|---|---|---|---|
| Traditional Fingerprints | ECFP, MACCS, Atom Pair | Competitive or superior on many benchmarks [33] [34] | Computational efficiency, robustness, strong baseline | Fixed representation, may not capture complex electronic properties |
| Molecular Descriptors | RDKit Descriptors, PaDEL | Excels in predicting physical properties [33] | High interpretability, grounded in physicochemical principles | Performance can be dataset-dependent; requires careful selection |
| Deep-Learned Embeddings | GNNs (GIN, MPNN), Transformers | Variable; often fails to consistently outperform fingerprints [5] [34] | Automated feature extraction, potential for transfer learning | Computational cost, data hunger, risk of overfitting on small datasets |

Source: Synthesized from [5] [33] [34].

A landmark study benchmarking 25 pretrained embedding models across 25 datasets arrived at a striking conclusion: "nearly all neural models show negligible or no improvement over the baseline ECFP molecular fingerprint" [34]. This finding underscores the necessity of establishing robust, simple baselines when evaluating new representation learning methods, especially in applied settings like ADMET prediction.

Experimental Protocols from Key Studies

To ensure reproducibility and provide context for the data, this section outlines the methodologies employed in several cited benchmark studies.

Protocol: Odor Prediction Benchmark

This study compared functional group (FG) fingerprints, classical molecular descriptors (MD), and Morgan structural fingerprints (ST) using tree-based models [32].

- Dataset: A rigorously curated set of 8,681 unique odorants from ten expert sources, standardized into 200 odor descriptors.

- Feature Extraction:

- FG: Generated using SMARTS patterns for predefined substructures.

- MD: Calculated via RDKit, including molecular weight, logP, TPSA, etc.

- ST: Morgan fingerprints with a radius of 2 (equivalent to ECFP4) computed from optimized 3D conformations.

- Modeling & Evaluation: Separate one-vs-all classifiers were trained for each odor label using Random Forest, XGBoost, and LightGBM. Models were evaluated via stratified 5-fold cross-validation, with performance reported as mean AUROC and AUPRC across folds.

Protocol: Benchmarking Pretrained Embeddings

This extensive evaluation assessed the generalizability of static molecular embeddings [34].

- Models: 25 pretrained models, including GNNs (e.g., GIN, ContextPred, GraphMVP), graph transformers (e.g., GROVER, MAT), and fingerprint baselines (ECFP, Atom Pair).

- Datasets: 25 benchmark datasets covering a wide range of molecular properties.

- Evaluation Framework: A fixed, linear logistic regression probe was trained on top of the frozen embeddings for each task. This design directly evaluates the intrinsic quality of the representation, independent of further fine-tuning.

- Statistical Analysis: A hierarchical Bayesian statistical testing model was used to rank models and determine the significance of performance differences.
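The linear-probe idea can be illustrated with a small logistic regression trained by batch gradient descent. This is a sketch, not the benchmark's implementation: the learning rate, epoch count, and L2 strength are arbitrary assumptions, and the embeddings are plain lists standing in for the output of a frozen pretrained encoder. The hierarchical Bayesian ranking step is not shown.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_linear_probe(embeddings, labels, lr=0.5, epochs=500, l2=1e-3):
    """Fit logistic regression p = sigmoid(w.x + b) on FROZEN embeddings.

    Only w and b are learned; the vectors in `embeddings` are never modified,
    which is what makes this a probe of representation quality.
    """
    dim, n = len(embeddings[0]), len(labels)
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * dim, 0.0
        for x, y in zip(embeddings, labels):
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y
            for d in range(dim):
                grad_w[d] += err * x[d]
            grad_b += err
        # Mean cross-entropy gradient, plus an L2 penalty on the weights (not the bias).
        w = [wi - lr * (g / n + l2 * wi) for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def probe_predict(w, b, x):
    """Predicted probability of the positive class for one embedding."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
```

Because the probe has so little capacity, a good probe score can only come from information that is already linearly accessible in the embedding, which is why this protocol is a fair test of the representation itself.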

Protocol: ADMET-Focused Feature Selection

This study addressed feature selection for ligand-based ADMET models, moving beyond simple concatenation of different representations [5].

- Data: Multiple public ADMET datasets (e.g., from TDC, NIH solubility) subjected to a rigorous cleaning pipeline to remove salts, standardize SMILES, and deduplicate entries.

- Features: A wide array of representations, including RDKit descriptors, Morgan fingerprints, and deep-learned features from models like Chemprop.

- Modeling & Evaluation: Models including SVM, Random Forest, LightGBM, and MPNNs were evaluated. The study emphasized combining cross-validation with statistical hypothesis testing for robust model comparison and assessed practical utility via cross-dataset evaluation (training on one data source and testing on another).
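Cross-dataset evaluation (training on one data source and testing on another) reduces to scoring every ordered pair of distinct sources. The sketch below assumes each source is a `(X, y)` pair for the same property; `ols_1d` is a hypothetical single-descriptor toy model and RMSE is an arbitrary choice of metric, both used only to keep the example self-contained.

```python
import math
from statistics import fmean


def ols_1d(train_X, train_y, test_X):
    """Hypothetical toy model: least-squares line on a single descriptor."""
    mx, my = fmean(train_X), fmean(train_y)
    sxx = sum((x - mx) ** 2 for x in train_X)
    slope = sum((x - mx) * (y - my) for x, y in zip(train_X, train_y)) / sxx
    return [my + slope * (x - mx) for x in test_X]


def rmse(y_true, y_pred):
    """Root-mean-square error between measured and predicted values."""
    return math.sqrt(fmean((t - p) ** 2 for t, p in zip(y_true, y_pred)))


def cross_source_scores(sources, fit_predict, metric):
    """Train on each data source and score on every OTHER source for the same property.

    `sources` maps a source name to (X, y); returns {(train, test): score}.
    """
    results = {}
    for train_name, (X_tr, y_tr) in sources.items():
        for test_name, (X_te, y_te) in sources.items():
            if train_name != test_name:
                results[(train_name, test_name)] = metric(y_te, fit_predict(X_tr, y_tr, X_te))
    return results
```

The resulting matrix exposes source effects that a random split hides. For example, if one source reports values shifted by a constant (as a different assay protocol might), a model that fits its own source perfectly still incurs an RMSE equal to that shift when tested on the other source.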

Workflow and Logical Relationship Diagram

The following diagram illustrates a standardized workflow for comparing molecular representations in a benchmarking study, integrating the key phases from the experimental protocols described above.

The Scientist's Toolkit: Essential Research Reagents and Computational Tools

The experimental studies referenced herein rely on a suite of software libraries and computational tools. The following table details key resources essential for reproducing such benchmarking efforts.

Table 3: Key computational tools and resources for molecular representation research.

| Tool/Resource Name | Type | Primary Function | Relevance to Benchmarking |
|---|---|---|---|
| RDKit [32] [5] | Cheminformatics Library | Calculates molecular descriptors, fingerprints, and handles molecular standardization. | Industry standard for generating expert-based feature representations. |
| PyRfume Archive [32] | Public Dataset | Provides access to a curated, unified dataset of odorant molecules and their perceptual descriptors. | Served as the primary data source for the olfactory prediction benchmark. |
| PharmaBench [7] | Benchmark Dataset | A comprehensive benchmark set for ADMET properties, designed to be more representative of drug discovery compounds. | Provides a robust dataset for evaluating representations on pharmaceutically relevant properties. |
| TDC (Therapeutics Data Commons) [5] | Benchmark Framework | Provides a collection of curated datasets and leaderboards for therapeutic ML tasks, including ADMET. | A common source for standardized datasets and benchmarking protocols. |
| XGBoost / LightGBM [32] [5] | Machine Learning Library | Gradient boosting frameworks for building predictive models. | Often the top-performing algorithms when paired with fingerprint-based representations. |
| Chemprop [5] | Deep Learning Library | A message-passing neural network (MPNN) implementation specifically designed for molecular property prediction. | A standard baseline for task-specific deep-learned representations in ADMET. |
| Apheris Federated ADMET Network [9] | Federated Learning Platform | Enables collaborative training of ADMET models across institutions without sharing raw data. | Addresses the data scarcity challenge, a key limitation for deep-learned representations. |

The collective evidence from recent benchmarks indicates that for many predictive tasks in drug discovery, including ADMET profiling, traditional molecular fingerprints like ECFP remain remarkably strong and often superior baselines. Their computational efficiency, robustness, and performance on small- to medium-sized datasets make them a default choice for initial modeling.

Deep-learned embeddings, while powerful in their ability to automatically extract features, have not yet consistently delivered on their promise to universally outperform expert-designed representations. Their success appears highly dependent on the specific task, dataset size, and the rigor of the pretraining process [34]. Future directions in molecular representation learning are focused on overcoming current limitations:

- 3D-Aware and Equivariant Models: Incorporating spatial and conformational information beyond 2D topology to better model molecular interactions [35].

- Multi-Modal Fusion: Integrating complementary information from graphs, SMILES strings, and quantum chemical descriptors to create more holistic representations [35] [36].

- Federated Learning: Addressing data scarcity and privacy concerns by enabling model training across distributed datasets, as demonstrated by initiatives like the MELLODDY project, which have shown that federation systematically expands a model's effective chemical domain [9].
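The core mechanism that lets federation train on distributed data without pooling it can be sketched with federated averaging (FedAvg) on a toy linear model. This is a generic illustration, not the MELLODDY architecture, whose production setup was more elaborate. The squared-error loss, learning rate, epoch count, and number of rounds are all assumptions chosen for the example.

```python
def local_update(weights, data, lr=0.5, epochs=20):
    """One partner's private step: gradient descent on mean squared error of a linear model.

    `data` is a list of (feature_vector, target) pairs that never leaves this function;
    only the updated weight vector is returned to the coordinator.
    """
    w = list(weights)
    n = len(data)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for x, y in data:
            err = sum(wi * xi for wi, xi in zip(w, x)) - y
            for d, xd in enumerate(x):
                grad[d] += 2.0 * err * xd / n
        w = [wi - lr * g for wi, g in zip(w, grad)]
    return w


def fed_avg(partners, dim, rounds=30):
    """Federated averaging: the global model is the sample-size-weighted mean of local models."""
    global_w = [0.0] * dim
    total = sum(len(data) for data in partners)
    for _ in range(rounds):
        local_models = [local_update(global_w, data) for data in partners]
        global_w = [sum(len(data) * w[k] for data, w in zip(partners, local_models)) / total
                    for k in range(dim)]
    return global_w
```

In this toy setting, two partners that each hold a different slice of descriptor space jointly recover a model consistent with both slices, although neither ever sees the other's records; that is the sense in which federation expands a model's effective chemical domain.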

For researchers benchmarking open-access ADMET tools, the empirical data strongly suggests that any credible evaluation must include simple fingerprint-based baselines. The representation selection should be guided by the problem's specific constraints: fingerprints for a robust, efficient starting point; descriptors for interpretability and physical property prediction; and deep-learned embeddings where large, relevant pre-training datasets exist and computational resources permit extensive validation. A rigorous, data-driven approach to feature selection is paramount for building reliable predictive models in computational pharmacology.

Accurate prediction of Absorption, Distribution, Metabolism, Excretion, and Toxicity (ADMET) properties is fundamental to modern drug discovery, with approximately 40-45% of clinical attrition attributed to ADMET liabilities [9]. While computational methods offer a cost-effective approach for early assessment, the reliability of these models depends heavily on the rigor of their validation. Conventional practices that combine molecular representations without systematic reasoning or rely solely on hold-out test sets introduce significant uncertainty in model selection and performance assessment [5]. This comparison guide examines current methodologies for establishing robust validation frameworks in ADMET prediction, focusing on the integration of cross-validation with statistical hypothesis testing to provide drug development professionals with evidence-based protocols for benchmarking both open-access and commercial software tools.

The limitations of existing ADMET benchmarks—including small dataset sizes, insufficient representation of drug-like compounds, and data quality issues—further complicate model validation [7]. Recent research addresses these challenges through structured approaches to data feature selection, enhanced model evaluation methods, and practical scenario testing [5]. This guide synthesizes these methodological advances into a comprehensive framework for objectively comparing ADMET prediction tools, with supporting experimental data presented in structured formats to facilitate informed tool selection by researchers and scientists.

Experimental Protocols: Methodologies for Rigorous ADMET Benchmarking

Data Curation and Standardization Protocols

High-quality data curation forms the foundation of reliable ADMET model validation. The following standardized protocol, synthesized from recent benchmarking studies, ensures data consistency and relevance to drug discovery applications:

- Compound Standardization: Process all chemical structures with a standardization tool to generate consistent SMILES representations. Adjust the tool's default definitions by adding boron and silicon to the list of organic elements and by using a truncated salt list that omits components containing two or more carbons [5].

- Data Cleaning Pipeline: Implement a sequential cleaning procedure that (1) removes inorganic salts and organometallic compounds; (2) extracts organic parent compounds from salt forms; (3) adjusts tautomers for consistent functional group representation; (4) canonicalizes SMILES strings; and (5) de-duplicates entries while resolving value inconsistencies [5].

- Experimental Condition Harmonization: For bioassays, employ Large Language Model (LLM)-based multi-agent systems to extract critical experimental conditions from unstructured assay descriptions. This process identifies factors such as buffer composition, pH levels, and experimental procedures that significantly influence measured values [7].

- Outlier Detection and Removal: Apply statistical methods to identify both intra-dataset and inter-dataset outliers. For continuous data, calculate Z-scores and remove data points with an absolute Z-score greater than 3. For compounds appearing in multiple datasets, remove those whose values across datasets have a standardized standard deviation greater than 0.2 [3].
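The two outlier rules above can be sketched with the standard library. Two assumptions are flagged here and in the code: the Z-score uses the population standard deviation, and the "standardized standard deviation" is taken to be the sample standard deviation divided by the absolute mean, which is one plausible reading rather than a confirmed definition from [3]. SMILES are assumed to be canonicalized already, so identical strings mean identical compounds.

```python
import math
from statistics import fmean, pstdev, stdev


def zscore_filter(values, threshold=3.0):
    """Drop intra-dataset outliers whose |Z| exceeds `threshold` (population SD assumed)."""
    mu, sd = fmean(values), pstdev(values)
    if sd == 0:
        return list(values)
    return [v for v in values if abs(v - mu) / sd <= threshold]


def cross_dataset_filter(values_by_compound, max_ssd=0.2):
    """Keep compounds whose repeated measurements across datasets agree.

    ASSUMPTION: 'standardized standard deviation' is taken as sample SD / |mean|.
    Compounds seen in only one dataset pass through unchanged.
    Returns {compound: mean value} for the compounds that are kept.
    """
    kept = {}
    for compound, vals in values_by_compound.items():
        m = fmean(vals)
        if len(vals) < 2:
            kept[compound] = m
            continue
        sd = stdev(vals)
        # Guard against division by zero when the mean is exactly 0.
        ssd = sd / abs(m) if m != 0 else (0.0 if sd == 0 else math.inf)
        if ssd <= max_ssd:
            kept[compound] = m
    return kept
```

Two practical caveats follow from the arithmetic. With the population standard deviation, no point can have |Z| larger than sqrt(n - 1), so a |Z| > 3 rule cannot flag anything in a dataset of 10 or fewer values. A ratio to the mean is also unstable for endpoints whose values cluster near zero (for example, logP close to 0).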

Model Training and Hyperparameter Optimization

Consistent model training protocols enable fair comparison across different ADMET prediction tools:

- Baseline Architecture Selection: Initiate experiments with a carefully selected model architecture serving as a baseline for subsequent optimization. Common choices include Random Forests, Support Vector Machines, gradient boosting frameworks (LightGBM, CatBoost), and Message Passing Neural Networks as implemented in Chemprop [5].

- Feature Representation Strategy: Investigate molecular representations both individually and in combination, including RDKit descriptors, Morgan fingerprints, and deep neural network embeddings. Employ iterative feature combination until the best-performing combination is identified [5].

- Hyperparameter Tuning: Implement dataset-specific hyperparameter optimization using appropriate search strategies (grid search, random search, or Bayesian optimization) with validation metrics aligned to the specific ADMET endpoint [5].

- Federated Learning Implementation: For cross-pharma collaborations, utilize federated learning protocols that train models across distributed proprietary datasets without centralizing sensitive data. This approach systematically expands chemical space coverage and improves model robustness [9].
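Iterative feature combination can be read as greedy forward selection over whole representation blocks. The sketch below is an interpretation under assumptions, not the procedure from [5]: block names are hypothetical labels, `score` is any callable returning a validation metric for a set of blocks (for instance, mean cross-validated R² of a model trained on the concatenated features), and `min_gain` is an assumed stopping threshold.

```python
def greedy_feature_combination(blocks, score, min_gain=0.0):
    """Forward selection over whole representation blocks.

    blocks: names such as "rdkit_desc", "morgan", "chemprop_emb" (hypothetical labels).
    score:  callable(frozenset of block names) -> validation metric, higher is better.
    Returns (selected blocks, best score, history of accepted steps).
    """
    selected, best_score, history = frozenset(), float("-inf"), []
    remaining = set(blocks)
    while remaining:
        # Evaluate every one-block extension of the current combination.
        trials = {b: score(selected | {b}) for b in sorted(remaining)}
        block, trial_score = max(trials.items(), key=lambda kv: kv[1])
        # Stop once the best extension fails to beat the current score by more than min_gain.
        if trial_score <= best_score + min_gain:
            break
        selected |= {block}
        best_score = trial_score
        remaining.discard(block)
        history.append((sorted(selected), trial_score))
    return selected, best_score, history
```

The stopping rule is what guards against the diminishing returns of over-complex representations: a block is added only if it improves the current best. In a rigorous setup, each accepted improvement would also be checked with a statistical test on per-fold scores rather than a raw comparison of means.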

Statistical Evaluation Framework

The central element of robust ADMET validation is the integration of cross-validation with statistical hypothesis testing:

- Hypothesis Testing Integration: Employ cross-validation with statistical hypothesis testing to assess the significance of optimization steps. This approach adds a layer of reliability to model assessments beyond conventional performance metrics [5] [37].

- Practical Scenario Evaluation: Test optimized models in practical scenarios where models trained on one data source are evaluated on test sets from different sources for the same property. This assesses real-world applicability and cross-dataset generalizability [5].

- External Data Impact Assessment: Train models on combinations of data from different sources to mimic scenarios in which external data are combined with increasing amounts of internal data, quantifying the performance impact of integrating data sources [5].

- Applicability Domain Assessment: Evaluate model performance specifically within the applicability domain of each tool, providing realistic expectations for practical use cases [3].
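The specific hypothesis test used in [5] is not detailed here, so this sketch substitutes an exact paired sign-flip permutation test on per-fold scores. The key assumption is that both models are evaluated on the same folds, so that their scores can be paired fold by fold.

```python
from itertools import product
from statistics import fmean


def paired_sign_flip_test(scores_a, scores_b, max_folds=16):
    """Exact two-sided paired permutation (sign-flip) test on per-fold metric differences.

    scores_a[i] and scores_b[i] must come from the SAME fold i for both models.
    Returns (mean difference, p-value). Enumerates all 2**n sign patterns, so n is capped.
    """
    if len(scores_a) != len(scores_b):
        raise ValueError("both models need one score per shared fold")
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    if len(diffs) > max_folds:
        raise ValueError("too many folds for exact enumeration; sample random sign flips")
    observed = abs(fmean(diffs))
    patterns = list(product((1, -1), repeat=len(diffs)))
    hits = 0
    for signs in patterns:
        # Under H0 (no real difference) each fold's difference is equally likely to flip sign.
        if abs(fmean(s * d for s, d in zip(signs, diffs))) >= observed - 1e-12:
            hits += 1
    return fmean(diffs), hits / len(patterns)
```

One consequence is worth knowing before relying on plain 5-fold CV: with five paired scores there are only 32 sign patterns, so the smallest attainable two-sided p-value is 2/32 = 0.0625, and a p < 0.05 threshold can never be reached. Repeating CV yields more pairs, but fold scores that share training data are correlated, which makes naive tests optimistic; corrected resampled t-tests (Nadeau and Bengio) are one commonly used adjustment.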

Comparative Analysis: ADMET Tool Performance Benchmarking

Performance Metrics Across ADMET Endpoints

Comprehensive benchmarking requires evaluation across multiple ADMET properties. The table below summarizes the performance of computational tools in predicting key physicochemical (PC) and toxicokinetic (TK) properties based on recent large-scale validation studies:

Table 1: Performance Metrics of ADMET Prediction Tools Across Key Properties

| Property Category | Specific Endpoint | Best Performing Algorithm | Performance Metric | Key Findings |
|---|---|---|---|---|
| Physicochemical (PC) | Water Solubility (LogS) | Random Forest with Combined Features | R² = 0.717 (average) | Classical descriptors outperformed deep learned representations in curated datasets [5] |
| Physicochemical (PC) | Octanol/Water Partition (LogP) | LightGBM with RDKit Descriptors | R² = 0.694 | Feature combination strategies showed diminishing returns with over-complex representations [5] |
| Toxicokinetic (TK) | Bioavailability (F30%) | Federated Multi-task Learning | Balanced Accuracy = 0.780 | Federation across multiple datasets significantly expanded applicability domains [9] |
| Toxicokinetic (TK) | Caco-2 Permeability | Message Passing Neural Networks | R² = 0.639 (average) | Model performance highly dataset-dependent despite architecture optimization [5] [3] |
| Toxicokinetic (TK) | Blood-Brain Barrier Penetration | Gaussian Process Models | AUC = 0.821 | Uncertainty estimation crucial for reliable predictions in early screening [5] |
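Comparing the R² and balanced-accuracy figures in Table 1 requires knowing how each metric is defined. The sketch below uses the standard definitions; note that some studies report the squared Pearson correlation under the name R², which is not the same quantity, and the convention used by each cited study is not stated here.

```python
from statistics import fmean


def r_squared(y_true, y_pred):
    """Coefficient of determination, 1 - SS_res / SS_tot; negative if worse than the mean."""
    mean_y = fmean(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


def balanced_accuracy(y_true, y_pred):
    """Mean of sensitivity (true-positive rate) and specificity (true-negative rate)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    n_pos = sum(1 for t in y_true if t == 1)
    n_neg = len(y_true) - n_pos
    return 0.5 * (tp / n_pos + tn / n_neg)
```

Balanced accuracy is the appropriate choice for imbalanced classification endpoints: on a set with one positive and nine negatives, a model that always predicts "negative" has a plain accuracy of 0.9 but a balanced accuracy of only 0.5.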

Impact of Validation Methodology on Performance Assessment

The choice of validation methodology significantly influences performance outcomes and model selection:

Table 2: Impact of Validation Strategy on Model Performance Rankings

| Validation Method | Key Characteristics | Model Ranking Consistency | Limitations | Recommended Use Cases |
|---|---|---|---|---|
| Single Hold-Out Test Set | Conventional approach with fixed split | Low (Highly variable across random seeds) | Overestimates performance on structurally similar compounds | Preliminary screening of multiple algorithms |