Validating Observational Studies with Randomized Trials: A Modern Framework for Biomedical Research

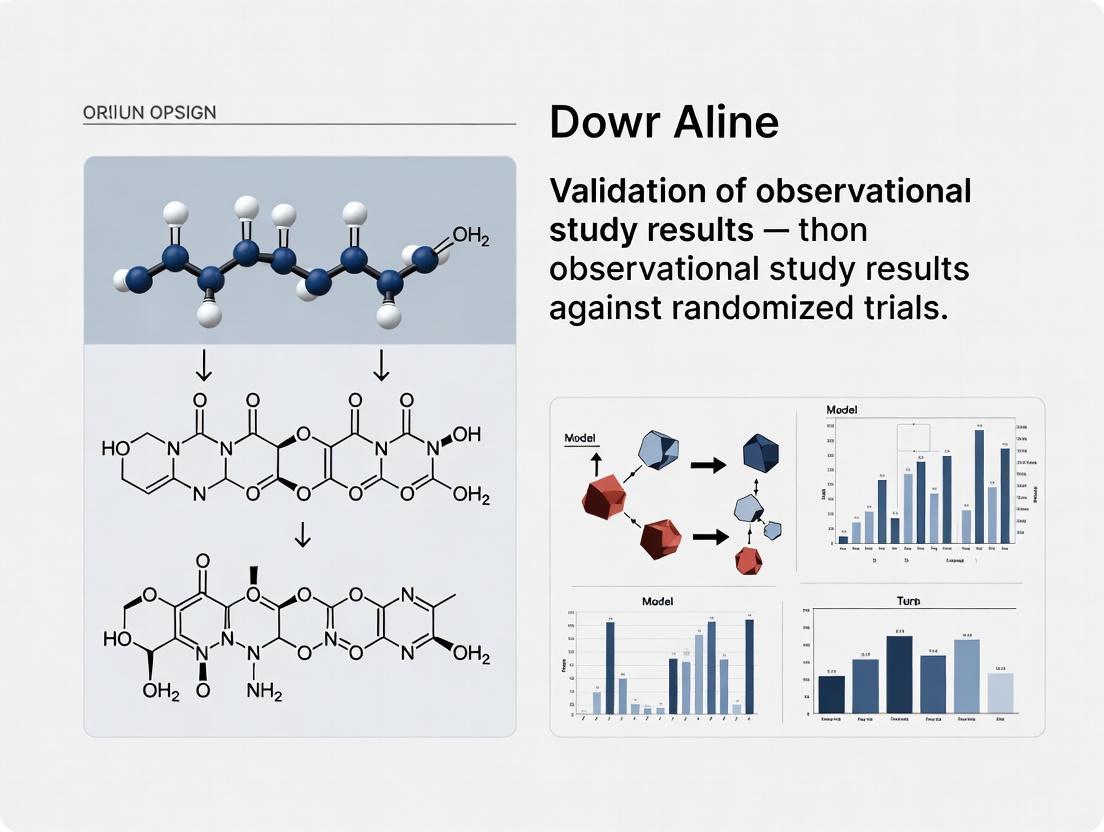

Abstract

This article provides a comprehensive framework for researchers and drug development professionals seeking to validate observational study results against randomized controlled trial (RCT) data. It explores the foundational strengths and limitations of both methodologies in the era of big data, detailing advanced causal inference techniques and novel diagnostic frameworks for bias assessment. The content addresses core challenges in reconciling conflicting evidence and presents practical validation strategies, including sensitivity analyses and emerging statistical approaches. By synthesizing current methodological innovations, this guide aims to enhance the reliability of real-world evidence and inform robust clinical and regulatory decision-making.

Observational Studies vs RCTs: Understanding the Modern Evidence Landscape

Randomized Controlled Trials (RCTs) occupy a preeminent position in medical research as the traditional gold standard for establishing the efficacy of new interventions. This guide provides an objective comparison between RCTs and observational studies, detailing their methodologies, strengths, and limitations, with a specific focus on how observational study results are validated against randomized trial research.

Why RCTs Are the Efficacy Gold Standard

RCTs are prospective studies in which participants are randomly allocated to receive either a new intervention (experimental group) or a control/alternative intervention (control group). This design is specifically intended to measure efficacy—the extent to which an intervention can bring about its intended effect under ideal and controlled circumstances [1].

The designation of RCTs as the "gold standard" is rooted in their unique ability to establish cause-effect relationships between an intervention and outcome [2]. This capability stems from key methodological features:

- Random Allocation: Researchers use computer-generated random number sequences or similar methods to assign participants to study groups [2] [3]. Because chance alone determines assignment, both known and unknown participant characteristics are balanced across groups on average, producing groups that are comparable at baseline [2] [1].

- Bias Control: Methodological safeguards including blinding (where participants and/or researchers are unaware of treatment assignments) and allocation concealment prevent the deliberate or unconscious manipulation of results [4] [2].

- Confounding Elimination: By balancing both measured and unmeasured factors across groups in expectation, randomization removes systematic confounding, which remains a fundamental limitation of observational designs [5] [2] [6]. Chance imbalances can still occur, particularly in small trials.
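As an illustration of the random allocation step, the sketch below generates a permuted-block allocation sequence. Block randomization is only one of several possible schemes and is an assumption here, not a method prescribed by the cited sources; the function name `permuted_block_sequence`, the arm labels, and the block size are hypothetical.

```python
import random

def permuted_block_sequence(n_participants, block_size=4,
                            arms=("experimental", "control"), seed=None):
    """Return an allocation list built from randomly permuted, balanced blocks."""
    if block_size % len(arms) != 0:
        raise ValueError("block_size must be a multiple of the number of arms")
    rng = random.Random(seed)  # dedicated generator so the sequence is reproducible
    per_arm = block_size // len(arms)
    sequence = []
    while len(sequence) < n_participants:
        # Each block contains every arm equally often, then is shuffled.
        block = [arm for arm in arms for _ in range(per_arm)]
        rng.shuffle(block)
        sequence.extend(block)
    return sequence[:n_participants]

allocation = permuted_block_sequence(12, block_size=4, seed=2024)
print(allocation.count("experimental"), allocation.count("control"))  # 6 6
```

Because every complete block is balanced, group sizes stay equal after each block regardless of the seed. In practice the sequence would be generated and held by someone independent of recruitment so that allocation remains concealed.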

For drug development professionals, RCTs provide the rigorous evidence required by regulatory agencies like the FDA to demonstrate that a drug's benefits outweigh its risks [6] [7].

Direct Comparison: RCTs Versus Observational Studies

The table below summarizes the key methodological differences and applications of RCTs versus observational studies:

| Feature | Randomized Controlled Trials (RCTs) | Observational Studies |
|---|---|---|
| Core Definition | Experiment where investigator actively assigns interventions via randomization [5] | Study where investigator observes effects of exposures without assigning them [5] |
| Primary Strength | High internal validity; establishes efficacy and causal inference [5] [2] | High external validity; assesses real-world effectiveness [5] [1] |
| Key Limitation | Limited generalizability (highly selective populations) [5] [6] | Susceptible to confounding and bias [5] |
| Ideal Application | Regulatory approval of new drugs/devices [1] [7] | Examining long-term outcomes, natural experiments, or when RCTs are unethical [5] |
| Cost & Feasibility | High cost, time-intensive, complex logistics [4] [6] [3] | Generally more feasible and less costly [5] |
| Bias Control | Randomization addresses known and unknown confounding [2] | Requires statistical methods (e.g., propensity scoring) to control for measured confounders only [5] |

Key Methodological Concepts and Reagents

The table below details essential components and methodologies central to conducting rigorous RCTs:

| Component/Method | Function & Purpose |
|---|---|
| Randomization Sequence | Computer-generated random numbers ensuring unbiased group assignment [2] [3] |
| Blinding (Single/Double) | Prevents assessment bias; double-blind protects against patient and investigator bias [8] |
| Placebo Control | Inert substance mimicking the active treatment, used to separate the specific treatment effect from placebo (psychological) effects [9] [8] |
| Intention-to-Treat (ITT) Analysis | Analyzes participants in original randomized groups, preserving bias control of randomization [2] |
| Consolidated Standards of Reporting Trials (CONSORT) | Guidelines ensuring comprehensive and transparent reporting of RCT methodology and findings [2] |

Experimental Protocols in RCTs

Standard Parallel-Group RCT Workflow

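In a standard parallel-group RCT, the most common design for evaluating intervention efficacy, participants are screened for eligibility and enrolled, randomly allocated to the intervention or control arm, followed over the same period, and analyzed in the groups to which they were assigned.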

Advanced RCT Designs

Beyond standard parallel trials, several innovative RCT designs have emerged to address specific research challenges:

- Adaptive Trials: Incorporate scheduled interim analyses that allow for predetermined modifications to the trial design (e.g., dropping ineffective treatment arms) based on accumulating data, while maintaining trial validity [5] [6].

- Platform Trials: Evaluate multiple interventions for a single disease condition simultaneously, allowing interventions to be added or dropped over time based on performance [5].

- Sequential Trials: Continuously recruit participants and analyze results, stopping the trial once predetermined evidence thresholds for treatment effectiveness are reached [5].

- Pragmatic Clinical Trials (PrCTs): Bridge the gap between explanatory RCTs and observational studies by retaining randomization while implementing interventions in real-world clinical practice settings to enhance generalizability [1].

Validating Observational Studies Against RCTs

Triangulating evidence from both observational and experimental approaches provides a stronger basis for causal inference [5]. Several methodological frameworks enable more direct comparison between these research paradigms:

Causal Inference Methods

Observational studies increasingly employ causal inference methods that mimic RCT design principles [5]. These approaches require researchers to explicitly define:

- The Design Intervention: Precisely specifying the exposure and comparison conditions.

- Key Confounders: Using Directed Acyclic Graphs (DAGs) to map hypothesized causal relationships and identify variables that must be controlled for to avoid biased effect estimates [5].

- Target Trial: Framing the observational analysis as an emulation of a hypothetical RCT that would answer the same research question.
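To make the DAG step concrete, the following sketch encodes a small causal graph as a dictionary and lists the common causes of treatment and outcome. It is a deliberate simplification: it finds variables with a directed path into the treatment and a directed path into the outcome that does not pass through the treatment, and it does not implement the full back-door criterion (for example, it does not reason about colliders). The graph, its node names, and the helper functions are hypothetical.

```python
def ancestors(graph, node, blocked=frozenset()):
    """Nodes with a directed path into `node`, ignoring paths through `blocked`."""
    parents = {}
    for cause, effects in graph.items():
        for effect in effects:
            parents.setdefault(effect, set()).add(cause)
    found, stack = set(), [node]
    while stack:
        current = stack.pop()
        for parent in parents.get(current, ()):
            if parent not in found and parent not in blocked:
                found.add(parent)
                stack.append(parent)
    return found

def common_causes(graph, treatment, outcome):
    """Variables that cause the treatment and also cause the outcome by a
    route that does not run through the treatment itself."""
    causes_of_treatment = ancestors(graph, treatment)
    causes_of_outcome = ancestors(graph, outcome, blocked={treatment})
    return causes_of_treatment & causes_of_outcome

# Hypothetical DAG: each key points to the variables it directly causes.
dag = {
    "age": ["statin", "mi"],
    "prescriber_preference": ["statin"],
    "statin": ["ldl", "mi"],
    "ldl": ["mi"],
}
print(sorted(common_causes(dag, "statin", "mi")))  # ['age']
```

In this toy graph, `ldl` lies on the causal pathway from treatment to outcome and `prescriber_preference` affects only the treatment, so neither is flagged as a confounder.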

Sensitivity Analysis for Unmeasured Confounding

The E-value metric has emerged as a crucial tool for assessing the robustness of observational study results [5]. It quantifies the minimum strength of association an unmeasured confounder would need to have with both the treatment and outcome to fully explain away the observed treatment-outcome association.
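For an estimate expressed as a risk ratio, the E-value has a closed form: E = RR + sqrt(RR × (RR − 1)), with the reciprocal taken first when RR is below 1. The sketch below implements that formula; the function names are hypothetical, and other effect measures generally need to be converted to an approximate risk ratio before the formula applies.

```python
import math

def e_value(rr):
    """E-value for a point estimate on the risk-ratio scale."""
    if rr <= 0:
        raise ValueError("risk ratio must be positive")
    if rr < 1:
        rr = 1 / rr  # protective effects are handled via the reciprocal
    return rr + math.sqrt(rr * (rr - 1))

def e_value_for_ci(lower, upper):
    """E-value for the confidence limit closest to the null (1.0)."""
    if lower <= 1 <= upper:
        return 1.0  # the interval already includes the null
    limit = lower if lower > 1 else upper
    return e_value(limit)

print(round(e_value(2.0), 2))              # 3.41
print(round(e_value_for_ci(1.5, 2.7), 2))  # 2.37
```

An E-value of 3.41 means that an unmeasured confounder would need to be associated with both treatment and outcome by a risk ratio of at least 3.41 each, beyond the measured covariates, to move an observed risk ratio of 2.0 to the null.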

RCTs maintain their status as the gold standard for efficacy research primarily through their unparalleled ability to minimize bias and establish causality via randomization. However, observational studies provide complementary evidence on real-world effectiveness and are essential when RCTs are unethical, impractical, or financially prohibitive. The evolving research landscape recognizes the unique value of both methodologies, with advances in causal inference strengthening the validity of observational research and pragmatic trial designs enhancing the real-world relevance of RCTs. For researchers and drug development professionals, understanding both the theoretical foundations and practical applications of these designs is crucial for building a comprehensive evidence base for medical interventions.

For decades, randomized controlled trials (RCTs) have been considered the gold standard for medical evidence, prized for their ability to establish causal relationships through controlled conditions and random assignment [5]. However, the primacy of RCTs is increasingly being questioned in an era of big data and advanced methodologies [5]. Real-world evidence (RWE) derived from observational studies has emerged as a powerful complement to traditional RCTs, offering unique insights into how medical interventions perform in actual clinical practice [10]. This article examines the key advantages of observational studies in generating real-world evidence and how such findings can be validated against randomized trial research.

Understanding the Evidence Spectrum

Defining Real-World Evidence and Observational Studies

Real-world data (RWD) refers to routinely collected data associated with patient health status or healthcare delivery from sources including electronic health records (EHRs), medical claims data, patient registries, and digital health technologies [10]. When analyzed, this data generates real-world evidence (RWE) regarding the usage, benefits, and risks of medical products [10]. RWE is increasingly valuable throughout the product lifecycle, from informing trial design to supporting regulatory decisions and monitoring long-term safety [11].

In contrast to RCTs where investigators assign interventions, observational studies involve investigators observing the effects of exposures on outcomes using existing or collected data without playing a role in treatment assignment [5]. This fundamental difference in design creates complementary strengths and limitations that researchers must understand when evaluating evidence.

Key Methodological Differences

Table 1: Fundamental Design Characteristics of RCTs vs. Observational Studies

| Characteristic | Randomized Controlled Trials | Observational Studies |
|---|---|---|
| Intervention Assignment | Investigator-controlled | Naturally occurring in clinical practice |
| Setting | Controlled research environment | Real-world clinical practice [10] |
| Patient Selection | Strict inclusion/exclusion criteria | No strict criteria for patient inclusion [10] |
| Primary Aim | Establish efficacy under ideal conditions | Determine effectiveness in routine practice [10] |
| Data Drivers | Investigator-centered | Patient-centered [10] |
| Comparator | Placebo or standard care | Variable treatments determined by market and physician [10] |

Key Advantages of Real-World Evidence from Observational Studies

Enhanced Generalizability and Real-World Relevance

Observational studies generate evidence with superior external validity by including patient populations that reflect actual clinical practice [5]. While RCTs employ strict eligibility criteria that create homogeneous study groups, observational studies encompass the full spectrum of patients, including those with comorbidities, concomitant medications, and diverse demographic characteristics typically excluded from trials [10] [11]. This diversity provides crucial insights into how interventions perform across heterogeneous real-world populations [11].

The real-world setting also captures practical clinical factors absent from controlled trials, including variations in treatment adherence, healthcare delivery dynamics, resource availability, and physician expertise [11]. This contextual information helps bridge the gap between theoretical efficacy and practical effectiveness, offering stakeholders a more complete picture of how interventions function in routine care environments.

Accessibility to Underserved Populations

RWE provides critical insights into populations traditionally excluded from RCTs due to ethical concerns or methodological constraints [11]. Observational studies can include children, pregnant women, older adults, and individuals with multiple comorbidities who are often underrepresented in clinical trials [10] [11]. This inclusive approach generates evidence for vulnerable groups who nevertheless require medical treatment in actual practice, addressing significant ethical and practical gaps left by restrictive trial protocols.

Economic and Temporal Efficiency

Observational studies typically offer substantial cost and time advantages over traditional RCTs [10]. By leveraging existing data sources such as EHRs, claims databases, and patient registries, researchers can bypass the resource-intensive processes of patient recruitment, dedicated study sites, and prolonged follow-up periods required for RCTs [10]. The ability to conduct retrospective analyses using previously collected data enables rapid generation of insights that can respond to emerging clinical questions or public health needs [11].

Longitudinal Insights and Post-Marketing Surveillance

While RCTs are necessarily limited in duration, observational studies can provide extended follow-up to understand long-term treatment effects, safety profiles, and rare adverse events [11]. This longitudinal perspective is particularly valuable for chronic conditions requiring sustained management and for detecting late-emerging safety signals that may not manifest within typical trial timelines [11]. Regulatory agencies increasingly utilize RWE for post-marketing safety monitoring through initiatives like the FDA Sentinel System [10] [11].

Ethical and Practical Solutions for Unresearchable Questions

Observational studies provide methodological approaches for scenarios where RCTs would be unethical, impractical, or impossible to conduct [5]. When investigating harmful exposures, studying rare outcomes, or evaluating interventions in emergency situations, random assignment may be ethically prohibited or logistically unfeasible. In these contexts, well-designed observational studies offer the only viable means to generate clinical evidence to guide decision-making [5].

Validating Observational Studies Against Randomized Trials

Methodological Advances Enhancing Validity

Concerns about confounding and bias have historically limited confidence in observational studies [5]. However, methodological innovations have significantly improved the robustness of RWE. Causal inference methods now enable researchers to analyze observational data as hypothetical RCTs through well-defined frameworks requiring explicit definition of design interventions, exposures, and confounders [5]. The use of Directed Acyclic Graphs (DAGs) helps identify and address potential sources of bias [5].

The development of metrics like the E-value provides intuitive measurement of how robust results are to unmeasured confounding, quantifying the minimum strength of association an unmeasured confounder would need to fully explain away a treatment-outcome association [5]. These advances, combined with greater data availability and analytical sophistication, have enhanced the reliability of observational study findings.

Comparative Performance and Convergence

Evidence suggests that high-quality observational studies often produce results similar to those of RCTs, particularly when real-world data are analyzed using advanced causal inference methods [5]. This convergence supports the value of RWE as complementary evidence rather than as an inferior substitute.
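One way to quantify such convergence is to compare the observational and randomized estimates on the log scale. The sketch below is an illustration under stated assumptions rather than a method taken from the cited sources: it assumes both estimates are ratios (for example, hazard ratios) with 95% confidence intervals, that the two studies are independent, and that standard errors can be recovered from the interval width. The function names and the example numbers are hypothetical.

```python
import math

def log_se_from_ci(lower, upper, z_crit=1.96):
    """Standard error of a log ratio, recovered from its 95% confidence interval."""
    return (math.log(upper) - math.log(lower)) / (2 * z_crit)

def compare_estimates(obs, rct):
    """obs and rct are (point, lower, upper) tuples on the ratio scale."""
    obs_point, obs_lo, obs_hi = obs
    rct_point, rct_lo, rct_hi = rct
    diff = math.log(obs_point) - math.log(rct_point)
    # Combined standard error, assuming the two estimates are independent.
    se = math.hypot(log_se_from_ci(obs_lo, obs_hi), log_se_from_ci(rct_lo, rct_hi))
    z = diff / se
    return {
        "ratio_of_ratios": math.exp(diff),
        "z": z,
        "estimates_differ": abs(z) > 1.96,
        "obs_within_rct_ci": rct_lo <= obs_point <= rct_hi,
    }

# Hypothetical hazard ratios: observational study vs. randomized trial.
result = compare_estimates(obs=(0.80, 0.70, 0.91), rct=(0.85, 0.72, 1.00))
print(result["estimates_differ"], result["obs_within_rct_ci"])  # False True
```

A non-significant difference does not by itself demonstrate agreement, since wide intervals in either study make disagreement hard to detect. The ratio of ratios and its uncertainty are usually more informative than the binary flags.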

Table 2: Comparative Advantages and Limitations of Evidence Sources

| Consideration | Randomized Controlled Trials | Observational Studies |
|---|---|---|
| Internal Validity | High (controls confounding through randomization) | Variable (requires sophisticated methods to control confounding) [5] |
| External Validity | Limited (selected populations, controlled settings) | High (diverse populations, real-world settings) [5] [10] |
| Implementation Timeline | Lengthy (protocol development, recruitment, follow-up) | Relatively rapid (especially retrospective designs) [10] |
| Cost Considerations | High (site monitoring, data collection, participant compensation) | Lower (leveraging existing data sources) [10] |
| Ethical Constraints | May be prohibitive for certain questions | Enables investigation where RCTs are unethical [5] |
| Regulatory Acceptance | Well-established for product approval | Growing for complementary decisions [12] [11] |

Experimental Protocols for Real-World Evidence Generation

Protocol 1: Retrospective Cohort Study Using EHR Data

Objective: Compare effectiveness of two therapeutic strategies for chronic disease management.

Data Source: Electronic health records from integrated healthcare system, including demographics, diagnoses, medications, laboratory results, and clinical outcomes.

Inclusion Criteria:

- Adults with confirmed diagnosis

- Minimum 12 months continuous enrollment prior to index date

- At least one prescription for studied medications during identification period

Exclusion Criteria:

- Contraindications to studied treatments

- Pregnancy during study period

- Life expectancy less than 12 months

Statistical Analysis:

- Propensity Score Matching: Estimate probability of treatment assignment based on observed baseline characteristics

- Cox Proportional Hazards Regression: Compare time-to-event outcomes between matched cohorts

- Sensitivity Analyses: Assess impact of unmeasured confounding using E-values

- Subgroup Analyses: Examine consistency of treatment effects across clinically relevant patient subsets
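The matching step of the analysis plan above can be sketched as follows. The example assumes propensity scores have already been estimated (for instance, by logistic regression on baseline covariates) and applies greedy 1:1 nearest-neighbor matching without replacement within a caliper. Greedy matching, the caliper of 0.05, and the names used are illustrative assumptions, not choices specified by the protocol.

```python
def greedy_match(treated, controls, caliper=0.05):
    """Greedy 1:1 nearest-neighbor matching on the propensity score.

    treated, controls: dicts mapping patient id -> estimated propensity score.
    Returns a list of (treated_id, control_id) pairs; each control is used once.
    """
    available = dict(controls)
    pairs = []
    for t_id, t_score in sorted(treated.items(), key=lambda item: item[1]):
        if not available:
            break
        # Closest remaining control on the propensity score.
        c_id = min(available, key=lambda cid: abs(available[cid] - t_score))
        if abs(available[c_id] - t_score) <= caliper:
            pairs.append((t_id, c_id))
            del available[c_id]  # matching without replacement
    return pairs

# Hypothetical propensity scores.
treated = {"T1": 0.30, "T2": 0.62, "T3": 0.90}
controls = {"C1": 0.28, "C2": 0.33, "C3": 0.60, "C4": 0.10}
print(greedy_match(treated, controls))  # [('T1', 'C1'), ('T2', 'C3')]
```

Patient `T3` remains unmatched because no control falls within the caliper. Dropping such patients narrows the population to which the estimate applies, so the number and characteristics of unmatched patients are worth reporting alongside covariate balance.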

Protocol 2: Prospective Registry Study

Objective: Document long-term safety and effectiveness of newly approved medical device.

Data Source: Disease-specific clinical registry with mandated participation for all patients receiving the device.

Data Collection Points: Baseline, implant procedure, 30 days, 6 months, 1 year, and annually thereafter.

Key Variables:

- Patient-reported outcomes (pain, function, quality of life)

- Device performance metrics

- Adverse event documentation

- Revision/explantation information

Analysis Plan:

- Kaplan-Meier Survival Analysis: Estimate time to device failure or key adverse events

- Mixed Effects Models: Analyze longitudinal patient-reported outcomes

- Benchmarking: Compare registry outcomes to performance goals established from historical controls and clinical trial data
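As a sketch of the Kaplan-Meier step in the analysis plan above, the function below computes the product-limit survival estimate from follow-up times and event indicators using only the standard library. The data are hypothetical, and a real analysis would normally rely on an established survival analysis package that also provides confidence intervals.

```python
def kaplan_meier(times, events):
    """Product-limit estimate of the survival function.

    times:  follow-up durations (e.g., months since implant).
    events: 1 if the failure was observed at that time, 0 if censored.
    Returns a list of (time, survival_probability) at each distinct event time.
    """
    data = sorted(zip(times, events))
    at_risk = len(data)
    survival = 1.0
    curve = []
    i = 0
    while i < len(data):
        t = data[i][0]
        failures = 0
        leaving = 0
        # Group everyone whose follow-up ends at time t.
        while i < len(data) and data[i][0] == t:
            failures += data[i][1]
            leaving += 1
            i += 1
        if failures:
            survival *= 1 - failures / at_risk
            curve.append((t, survival))
        at_risk -= leaving  # failures and censored patients leave the risk set
    return curve

# Hypothetical registry data: months to device revision (1) or censoring (0).
months = [6, 12, 12, 24, 36, 48]
revised = [1, 0, 1, 1, 0, 0]
for t, s in kaplan_meier(months, revised):
    print(t, round(s, 3))
# 6 0.833
# 12 0.667
# 24 0.444
```

Patients censored at the same time as an observed failure are counted as still at risk at that time, which is the usual convention.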

Visualization of Real-World Evidence Generation Workflow

Table 3: Key Research Reagent Solutions for Real-World Evidence Generation

| Tool Category | Representative Examples | Primary Function |
|---|---|---|
| Data Platforms | OMOP Common Data Model, Sentinel Initiative | Standardize data structure and facilitate multi-source analytics |
| Statistical Software | R, Python, SAS, Stata | Implement advanced statistical methods and causal inference approaches |
| Terminology Standards | SNOMED CT, LOINC, ICD-10 | Ensure consistent coding of clinical concepts across data sources |
| Analytical Packages | R: propensity, MatchIt; Python: CausalML | Facilitate implementation of specialized methods for observational data |
| Data Quality Tools | Achilles Heel, Data Quality Dashboard | Assess fitness-for-use of real-world data sources |

The rise of real-world evidence represents a fundamental shift in evidence generation that complements rather than replaces traditional randomized trials. Observational studies provide unique advantages in generalizability, inclusivity, efficiency, and practical relevance that address critical limitations of RCTs [5] [10] [11]. While methodological rigor remains essential, advanced analytical approaches have significantly enhanced the validity and reliability of RWE.

The evolving regulatory landscape reflects growing acceptance of well-generated RWE, with agencies increasingly incorporating real-world studies into decision-making processes [12] [11]. For researchers and drug development professionals, the strategic integration of both randomized and observational evidence offers the most comprehensive approach to understanding medical interventions throughout their lifecycle. Rather than debating the supremacy of either method, the future of clinical evidence lies in triangulation—leveraging the complementary strengths of diverse study designs to build a more complete and clinically relevant evidence base [5].

In clinical and scientific research, the validity of a study determines its credibility and usefulness. Validity is the degree to which a study's findings accurately reflect the true effect of an intervention or exposure, free from bias and error [13]. For researchers, scientists, and drug development professionals, understanding validity is paramount when translating findings from controlled settings to real-world applications, particularly when validating observational study results against randomized trials research.

The two fundamental pillars of research validity are internal and external validity, which often exist in a delicate balance. Internal validity examines whether the study design, conduct, and analysis answer the research questions without bias, focusing on establishing a trustworthy cause-and-effect relationship [14]. External validity refers to the extent to which the research findings can be generalized to other contexts, including different populations, settings, and times [15] [14]. This guide explores the core trade-off between these competing forms of validity, providing a structured framework for evaluating research quality across study designs.

Defining Internal Validity

Core Concept and Importance

Internal validity is defined as the extent to which the observed results represent the truth in the population being studied and are not due to methodological errors [15]. It addresses a fundamental question: can the changes in the dependent variable be confidently attributed to the manipulation of the independent variable, rather than to other confounding factors? [16]. When a study has high internal validity, researchers can conclude that their intervention or treatment genuinely causes the observed effect.

Establishing strong internal validity is particularly crucial for randomized controlled trials (RCTs) and preclinical studies where proving causal relationships is essential before progressing to broader applications. Without high internal validity, any conclusions about cause and effect are questionable, and the foundation for generalizing findings becomes compromised [15] [17].

Threats to Internal Validity and Control Methodologies

Multiple factors can threaten internal validity, potentially undermining the causal inferences drawn from research. The table below summarizes key threats and corresponding methodological controls.

Table 1: Threats to Internal Validity and Control Methodologies

| Threat | Description | Control Methodologies |
|---|---|---|
| Selection Bias | Pre-existing differences between groups before the intervention [18] | Random assignment to ensure groups are comparable at baseline [16] [19] |
| Attrition | Loss of participants over time, potentially creating biased groups [18] [16] | Intent-to-treat analysis; examining characteristics of dropouts [16] |
| Confounding Variables | Third variables, measured or unmeasured, that influence both independent and dependent variables [16] [19] | Randomization; blinding; standardized procedures; statistical control [16] [19] |
| Historical Events | External events occurring during the study that influence outcomes [18] [16] | Use of control groups; careful study timing [16] |
| Testing Effects | Participants changing their behavior due to familiarity with testing procedures [18] [16] | Counterbalancing; using alternative forms of tests [18] |
| Instrumentation | Changes in measurement tools or procedures during the study [18] [16] | Consistent use of calibrated instruments; training raters [18] |

Defining External Validity

Core Concept and Importance

External validity concerns the generalizability of study findings—whether the results observed in a specific research context would apply to other populations, settings, treatment variables, and measurement variables [20]. This form of validity asks: would patients in our daily practice, especially those representing the broader target population, experience similar outcomes? [15]. For drug development professionals, external validity determines whether promising preclinical or clinical results will translate to diverse patient populations and real-world clinical settings.

Two key subtypes of external validity include population validity (whether findings generalize to other groups of people) and ecological validity (whether findings generalize to other situations and settings) [18] [16]. Ecological validity is a particularly important consideration for animal models in preclinical research, as it examines whether laboratory findings can be generalized to naturalistic situations, including clinical practice in everyday life [14].

Threats to External Validity and Enhancement Strategies

Threats to external validity often arise from the artificiality of research conditions or narrow participant selection. The table below outlines common threats and strategies to mitigate them.

Table 2: Threats to External Validity and Enhancement Strategies

| Threat | Description | Enhancement Strategies |
|---|---|---|
| Sampling Bias | Study participants differ substantially from the target population [18] | Use of heterogeneous, representative samples; broad inclusion criteria [15] [16] |
| Hawthorne Effect | Participants change their behavior because they know they are being studied [18] | Naturalistic observation; concealed assessment when ethical and feasible [18] |
| Aptitude-Treatment Interaction | Some treatments are more or less effective for particular individuals based on specific characteristics [16] | Subgroup analysis; examining moderating variables [16] |
| Artificial Research Settings | Laboratory conditions differ substantially from real-world application contexts [14] [17] | Field experiments; pragmatic trial designs [20] [19] |
| Historical Context | Specific temporal or cultural factors limit applicability to other time periods [16] | Replication across different time periods and locations [16] |

The Fundamental Trade-Off and Experimental Design

The Core Trade-Off Explained

The central tension between internal and external validity represents one of the most significant challenges in research design. Studies with high internal validity typically employ strict controls, standardized procedures, and homogeneous samples to isolate causal effects—but these very features can limit how applicable the findings are to real-world conditions [18] [19]. Conversely, studies designed with high external validity often embrace real-world complexity, which can introduce confounding variables that threaten causal inference [13].

This trade-off is particularly evident in the distinction between efficacy trials (explanatory trials) and effectiveness trials (pragmatic trials). Efficacy trials determine whether an intervention produces expected results under ideal, controlled circumstances, thus prioritizing internal validity. Effectiveness trials measure the degree of beneficial effect under "real-world" clinical settings, thus emphasizing external validity [20]. Both approaches provide valuable, but different, evidence for drug development.

Visualizing the Research Pathway and Validity Trade-Off

The following diagram illustrates the sequential relationship between internal and external validity in the research continuum, highlighting how establishing causality precedes testing generalizability:

Experimental Protocols for Validity Assessment

Protocol for Evaluating Internal Validity in Randomized Trials

Assessing internal validity requires systematic examination of a study's design and implementation. The following protocol provides a structured approach:

- Randomization Verification: Examine methods used for random allocation sequence generation and allocation concealment. Proper randomization prevents selection bias by ensuring all participants have an equal chance of receiving any treatment [20] [13].

- Blinding Assessment: Determine whether patients, investigators, and outcome assessors were blinded to treatment assignments. Blinding prevents performance and detection bias that can exaggerate treatment effects [13] [17].

- Attrition Analysis: Calculate attrition rates across all study groups and examine reasons for dropout. Differential attrition between groups can introduce bias, particularly if related to the treatment or outcome [18] [16].

- Confounding Evaluation: Identify potential confounding variables measured in the study and assess how they were controlled statistically or through design features such as matching or restriction [19].

- Instrumentation Consistency: Verify that outcome measures remained consistent throughout the study and that any changes in measurement tools were accounted for in the analysis [18].
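The attrition analysis step can be sketched with a few lines of arithmetic. The example below computes per-arm and overall attrition and the gap between arms from counts of randomized and completing participants. The function name and the counts are hypothetical, and no particular threshold for acceptable attrition is implied.

```python
def attrition_summary(randomized, completed):
    """Per-arm attrition rates, overall attrition, and the largest between-arm gap.

    randomized, completed: dicts mapping arm name -> participant count.
    """
    by_arm = {arm: 1 - completed[arm] / randomized[arm] for arm in randomized}
    overall = 1 - sum(completed.values()) / sum(randomized.values())
    differential = max(by_arm.values()) - min(by_arm.values())
    return {"by_arm": by_arm, "overall": overall, "differential": differential}

# Hypothetical trial counts.
summary = attrition_summary(
    randomized={"experimental": 200, "control": 200},
    completed={"experimental": 170, "control": 188},
)
print({arm: round(rate, 3) for arm, rate in summary["by_arm"].items()})
print(round(summary["overall"], 3), round(summary["differential"], 3))
```

Here 15% of the experimental arm and 6% of the control arm were lost, a nine-point differential that would prompt a closer look at the reasons for dropout in each arm.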

Protocol for Assessing External Validity in Clinical Studies

Evaluating external validity involves examining the representativeness of the study and its relevance to target populations:

- Population Representativeness Analysis: Compare the demographic and clinical characteristics of the study sample to the target population for generalization. Key factors include age, gender, disease severity, comorbidities, and racial/ethnic diversity [20].

- Intervention Applicability Assessment: Evaluate whether the intervention, as implemented in the study, is feasible in routine clinical practice. Consider dosage, administration complexity, required monitoring, and resource requirements [15] [20].

- Setting Comparison: Examine similarities and differences between the research settings (e.g., academic medical centers, specialized clinics) and typical care settings where the intervention might be applied [20].

- Outcome Relevance Determination: Assess whether the measured outcomes align with outcomes important to patients, clinicians, and policymakers in real-world decision-making [20].
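For the population representativeness step, one common summary is the standardized mean difference (SMD) between the study sample and the target population for each characteristic. The sketch below shows the usual formulas for continuous and binary variables. Using the SMD for this purpose is an illustrative assumption rather than something the cited sources prescribe, and the function names and numbers are hypothetical.

```python
import math

def smd_continuous(mean_a, sd_a, mean_b, sd_b):
    """Standardized mean difference for a continuous characteristic."""
    pooled_sd = math.sqrt((sd_a ** 2 + sd_b ** 2) / 2)
    return (mean_a - mean_b) / pooled_sd

def smd_binary(p_a, p_b):
    """Standardized difference for a binary characteristic given two proportions."""
    pooled_sd = math.sqrt((p_a * (1 - p_a) + p_b * (1 - p_b)) / 2)
    return (p_a - p_b) / pooled_sd

# Hypothetical comparison: trial sample (a) vs. target population (b).
age_smd = smd_continuous(mean_a=58, sd_a=9, mean_b=67, sd_b=12)
diabetes_smd = smd_binary(p_a=0.18, p_b=0.35)
print(round(age_smd, 2), round(diabetes_smd, 2))  # -0.85 -0.39
```

An absolute SMD of about 0.1 is a commonly used rule of thumb for a meaningful difference, so both characteristics in this example would indicate that the trial sample is younger and less comorbid than the population of interest.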

Validity in Animal Models for Drug Development

Validation Framework for Preclinical Research

In drug development, animal models serve as crucial bridges between basic research and clinical applications. The validation of these models extends beyond internal and external validity to include three specialized criteria that determine their predictive value for human conditions:

Table 3: Validation Criteria for Animal Models in Drug Development

| Validity Type | Definition | Research Example |
|---|---|---|
| Face Validity | How well a model replicates the disease phenotype in humans [21] | MPTP non-human primate model for Parkinson's Disease displays similar motor symptoms [21] |
| Construct Validity | How well the mechanism used to induce the disease reflects the understood human disease etiology [21] | Transgenic mice carrying the human SMN gene for Spinal Muscular Atrophy [21] |
| Predictive Validity | How well a model predicts therapeutic outcomes in humans [21] | 6-OHDA rodent model for Parkinson's Disease used to screen potential therapeutics [21] |

The Translational Challenge Diagram

The following diagram illustrates the significant validity challenges in translating findings from animal models to human applications, highlighting specific gaps at each stage:

Essential Research Reagent Solutions for Validity Assessment

The following reagents and methodologies represent critical tools for maintaining validity across research designs:

Table 4: Essential Research Reagents and Methodologies for Validity

| Reagent/Methodology | Function in Validity Assessment | Application Context |

|---|---|---|

| Randomization Software | Generates unpredictable allocation sequences to prevent selection bias [13] | RCTs; animal model assignment |

| Validated Measurement Scales | Ensures construct validity through proven reliability and accuracy [13] | Clinical outcomes assessment; psychological constructs |

| Blinding Protocols | Prevents performance and detection bias through concealed treatment allocation [13] [17] | Drug trials; outcome assessment |

| Standardized Operating Procedures | Maintains consistency in interventions and measurements across settings [19] | Multicenter trials; longitudinal studies |

| Statistical Analysis Packages | Provides appropriate methods for handling missing data and confounding [13] | Data analysis across all study designs |
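As an illustration of what randomization software does, the following is a minimal sketch of permuted-block randomization. This is one common way to generate an unpredictable but balanced allocation sequence. The function name, arm labels, block size, and seed are assumptions chosen for the example and do not come from any specific package.

```python
import random

def permuted_block_sequence(n_blocks, block_size=4, arms=("A", "B"), seed=None):
    """Return an allocation list built from shuffled, balanced blocks."""
    if block_size % len(arms) != 0:
        raise ValueError("block_size must be a multiple of the number of arms")
    rng = random.Random(seed)  # a fixed seed makes the sequence reproducible for audit
    per_arm = block_size // len(arms)
    sequence = []
    for _ in range(n_blocks):
        block = list(arms) * per_arm  # equal numbers of each arm within the block
        rng.shuffle(block)            # order inside the block is random
        sequence.extend(block)
    return sequence

# Hypothetical usage: 5 blocks of 4 give 20 assignments, 10 per arm
allocation = permuted_block_sequence(n_blocks=5, block_size=4, seed=2024)
print(allocation)
```

Balance is guaranteed at the end of every block. In practice the sequence would also be concealed from the staff who enroll participants, which code alone cannot enforce.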

The trade-off between internal and external validity represents a fundamental consideration in research design, particularly when validating observational studies against randomized trials. While internal validity is an essential prerequisite for establishing causal relationships, external validity determines the practical impact and generalizability of research findings. The most robust research programs strategically balance these competing forms of validity, often through sequential studies that first establish causality under controlled conditions before testing generalizability in real-world settings. For drug development professionals, understanding this balance is crucial for interpreting evidence across the research continuum and making informed decisions about therapeutic potential.

Randomized Controlled Trials (RCTs) are universally regarded as the gold standard for clinical evidence due to their design, which minimizes bias and confounding through random assignment, thereby ensuring high internal validity [22] [23]. They are the cornerstone for establishing the efficacy of pharmacological interventions and have transformed medicine into an empirical science [24]. However, the rigorous conditions that make RCTs so definitive also render them unsuitable for many critical research questions. In numerous scenarios, ethical constraints, profound practical challenges, or extended time horizons make the execution of a traditional RCT impossible [25] [26]. This guide examines the inherent limitations of RCTs and objectively compares them with observational studies, framing this comparison within the broader thesis of how observational study results can be validated against randomized trial research.

Limitations of Randomized Controlled Trials

Ethical Limitations

The fundamental ethical requirement of clinical equipoise—the genuine uncertainty within the expert medical community about the preferred treatment—is a prerequisite for any RCT [22]. When this condition is absent, proceeding with an RCT becomes unethical.

- Established Standard of Care: It is considered unethical to withhold an established treatment from individuals in a placebo-controlled trial [25]. For instance, it would be highly unethical to assess the influence of intraoperative opioids on surgical pain compared to a placebo, as the benefit of pain control is already unequivocally established [25].

- Life-Saving Interventions: In contexts such as life-threatening hemorrhage, where blood transfusion is known to be life-saving, it is "absolutely impossible" to create a control group that does not receive transfusion [25]. Research in such extreme clinical scenarios (Zone 3, as illustrated in the diagram below) cannot be conducted via RCTs.

Practical and Feasibility Limitations

RCTs are often prohibitively costly and resource-intensive, requiring significant funding, infrastructure, and personnel over many years [23] [4]. This is compounded by other practical hurdles:

- Rare Diseases: For rare conditions, the population of eligible patients is so small that enrolling a sufficiently powered sample size becomes nearly impossible [26].

- Surgical and Complex Interventions: Nearly 60% of surgical research questions cannot be answered by RCTs, as it is often infeasible or unrealistic to standardize complex surgical procedures and recruit the required large sample sizes [4].

- Recruitment and Generalizability: The strict inclusion and exclusion criteria of RCTs often lead to the enrollment of a very small, homogeneous proportion of the patient population. This results in a studied population that does not reflect the "real world," threatening the generalizability (external validity) of the findings [25] [27].

Temporal Limitations

The timeline of an RCT is frequently misaligned with the clinical need for evidence and the natural history of diseases.

- Long-Term Outcomes: RCTs may be of "too short duration" to detect longer-term harms or benefits, which are often of greater importance to patients [26]. Studying outcomes like the development of melanoma following an intervention would require a follow-up period so long that an RCT would be unfeasible [23] [5].

- Speed of Evidence Generation: The process of designing, funding, recruiting for, conducting, and analyzing an RCT can take many years [23]. In rapidly evolving fields or during public health emergencies (e.g., assessing COVID-19 public health measures), this slow pace is a critical limitation [23] [5].

Methodological and Inferential Limitations

Even well-executed RCTs have underappreciated methodological constraints.

- Post-Randomization Biases: Randomization only protects against confounding at baseline. Biases can be introduced later through loss to follow-up, non-compliance, and missing data [23] [5].

- Assessment of Harms: RCTs are frequently "underpowered to detect differences between comparators in harms" because they are often designed and sized to detect efficacy benefits, not rare adverse events [26].

- The Individual Patient Dilemma: While RCTs are effective at determining the average treatment effect for a group, it is surprisingly difficult to apply this result to an individual patient. A 50% response rate in a trial does not mean half the patients are responders and half are not; it could mean all patients have a 50% probability of responding, with vast individual variability [24].
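The individual patient dilemma can be made concrete with a small numerical sketch. The two hypothetical populations below have very different individual-level response probabilities, yet a trial would report the same 50% average response rate for both. All numbers are invented for illustration.

```python
def expected_response_rate(probabilities):
    """Average of each patient's individual probability of responding."""
    return sum(probabilities) / len(probabilities)

# Scenario 1 (hypothetical): half the patients always respond, half never do
fixed_responders = [1.0] * 50 + [0.0] * 50

# Scenario 2 (hypothetical): every patient has the same 50% chance of responding
uniform_chance = [0.5] * 100

rate_fixed = expected_response_rate(fixed_responders)
rate_uniform = expected_response_rate(uniform_chance)

print(rate_fixed, rate_uniform)  # both scenarios give the same group-level rate
```

A standard parallel-group trial observes only the group-level rate, so it cannot distinguish between these scenarios without additional design features or assumptions.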

The diagram below synthesizes the ethical and practical boundaries of clinical research, illustrating the spectrum from ideal RCT candidates to scenarios where only observational studies are possible.

Methodological Approaches When RCTs Are Not Possible

When RCTs are not feasible, observational studies and advanced methodological frameworks provide powerful alternatives for generating real-world evidence. The key is to apply rigorous design and analytical techniques to mitigate confounding and bias.

Core Observational Study Methodologies

| Method | Core Protocol Description | Key Function to Mitigate Bias |

|---|---|---|

| Propensity Score Matching | A two-stage process: 1) The probability (propensity) of receiving the treatment is calculated for each patient using a model with all known pre-treatment confounders. 2) Treated patients are matched to untreated patients (controls) with identical or very similar propensity scores. | Creates a synthetic cohort where the treatment and control groups are balanced on all measured baseline characteristics, mimicking random assignment [25] [23]. |

| Multivariable Regression | A statistical model is built where the outcome is a function of the treatment exposure and a set of potential confounding variables. This statistically adjusts for the impact of these confounders on the relationship between exposure and outcome. | Directly controls for the influence of measured confounders, providing an estimate of the treatment effect that is independent of those factors [25]. |

| Target Trial Emulation | A formal framework where researchers first precisely specify the protocol of an RCT they would ideally run (the "target trial"). They then design and analyze observational data to emulate this hypothetical trial as closely as possible. | Forces explicit declaration of key study design elements (eligibility, treatment strategies, outcomes, etc.) to prevent common biases like immortal time bias and to align observational analysis with causal inference principles [22]. |
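The second stage of propensity score matching can be sketched as greedy 1:1 nearest-neighbor matching without replacement. The sketch assumes the propensity scores have already been estimated in the first stage. The patient identifiers, the scores, and the caliper of 0.05 are hypothetical choices for illustration, not recommendations.

```python
def greedy_match(treated, controls, caliper=0.05):
    """1:1 nearest-neighbor matching without replacement.

    treated, controls: dicts mapping patient id -> estimated propensity score.
    Returns a list of (treated_id, control_id) pairs.
    """
    available = dict(controls)  # controls not yet used in a pair
    pairs = []
    for t_id, t_score in sorted(treated.items(), key=lambda item: item[1]):
        if not available:
            break
        # closest remaining control on the propensity score
        c_id = min(available, key=lambda c: abs(available[c] - t_score))
        if abs(available[c_id] - t_score) <= caliper:
            pairs.append((t_id, c_id))
            del available[c_id]  # each control is matched at most once
        # treated patients with no control inside the caliper stay unmatched
    return pairs

# Hypothetical scores from a previously fitted propensity model
treated = {"T1": 0.30, "T2": 0.62, "T3": 0.91}
controls = {"C1": 0.28, "C2": 0.60, "C3": 0.33, "C4": 0.10}

pairs = greedy_match(treated, controls)
print(pairs)  # [('T1', 'C1'), ('T2', 'C2')]
```

Patient T3 is dropped because no control has a comparable score. This shows how matching can restrict the analysis to the region where treated and untreated patients overlap. Covariate balance in the matched cohort should still be checked afterwards.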

Advanced Causal Inference Frameworks

Modern causal inference provides a structured intellectual discipline for drawing conclusions from non-randomized data.

- Directed Acyclic Graphs (DAGs): DAGs are visual tools used to map out assumed causal relationships between the treatment, outcome, confounders, and other variables based on subject-matter knowledge. This process makes researchers explicitly state their assumptions about the sources of bias and guides the appropriate analytical strategy to adjust for them [23] [5].

- E-Value: The E-value is a quantitative metric that assesses how robust a study's findings are to potential unmeasured confounding. It answers the question: "How strong would an unmeasured confounder need to be, in its association with both the treatment and the outcome, to fully explain away the observed association?" A large E-value indicates greater robustness of the result [23] [5].
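For a risk ratio, the E-value has a commonly used closed form, RR + sqrt(RR × (RR − 1)), where protective estimates (RR < 1) are inverted first. The short sketch below applies that formula to a point estimate. The function name is an assumption for this example, and applying the same formula to a confidence limit is left out for brevity.

```python
import math

def e_value(risk_ratio):
    """E-value for a point estimate on the risk-ratio scale."""
    if risk_ratio <= 0:
        raise ValueError("risk ratio must be positive")
    rr = risk_ratio if risk_ratio >= 1 else 1 / risk_ratio  # invert protective effects
    return rr + math.sqrt(rr * (rr - 1))

print(round(e_value(2.0), 2))  # 3.41
print(e_value(1.0))            # 1.0: a null result needs no confounding to explain it
```

Read this way, an observed risk ratio of 2.0 could be fully explained away only by an unmeasured confounder associated with both treatment and outcome by a risk ratio of about 3.4 each, conditional on the measured covariates.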

The workflow for applying these advanced methods is systematized in the following diagram.

Comparative Data: RCTs vs. Observational Studies

The following tables provide a structured comparison of the core characteristics, strengths, and weaknesses of RCTs and observational studies, offering a clear guide for researchers and decision-makers.

Core Characteristics and Applicability

| Feature | Randomized Controlled Trial (RCT) | Observational Study |

|---|---|---|

| Primary Objective | Establish efficacy under ideal, controlled conditions ("Can it work?") [25] [23]. | Establish effectiveness in real-world clinical practice ("Does it work for us?") [25] [23] [5]. |

| Defining Feature | Random assignment of participants to intervention groups. | Investigator observes effects without assigning exposure. |

| Ideal Application | Pharmacologic interventions where tight control is possible and equipoise exists. | Natural experiments, long-term outcomes, rare diseases, and situations where RCTs are unethical [25] [23] [26]. |

| Internal Validity | High, when well-conducted, due to control of known and unknown confounders at baseline [22] [26]. | Lower, requires sophisticated methods to control for measured confounders; vulnerable to unmeasured confounding [23]. |

| External Validity | Often limited due to strict eligibility criteria and artificial settings [27] [26]. | Typically higher, as it reflects outcomes in diverse, real-world patient populations and settings [23] [5]. |

Quantitative Comparison of Strengths and Limitations

| Aspect | Randomized Controlled Trial (RCT) | Observational Study |

|---|---|---|

| Control of Confounding | Balances, in expectation, both measured and unmeasured confounders at baseline [22] [23]. | Can only control for measured confounders; residual confounding is a major threat [23]. |

| Cost & Duration | Very high cost and long duration (often many years) [23] [4]. | Relatively fast and inexpensive when utilizing existing data (e.g., EHRs, registries) [23] [5]. |

| Ethical Feasibility | Requires clinical equipoise; not possible for established care or clearly harmful exposures [25] [22]. | Often the only ethical option for evaluating interventions in the above scenarios [25] [26]. |

| Data on Harms | Often underpowered for detecting rare or long-term adverse events [27] [26]. | Can provide robust data on real-world harms and safety signals from large, diverse populations over time. |

| Risk of Bias | Vulnerable to post-randomization biases (non-compliance, drop-outs) and selective reporting [27] [23]. | Vulnerable to selection bias, information bias, and confounding by indication if not carefully designed [25]. |

The Scientist's Toolkit: Essential Reagents for Modern Clinical Research

This table details key methodological "reagents" and resources essential for designing and interpreting both experimental and observational studies.

| Item | Category | Function / Explanation |

|---|---|---|

| ClinicalTrials.gov | Registry | A publicly accessible database for trial registration, mandated for most clinical trials as a condition of publication. It increases transparency and reduces selective reporting [22] [27]. |

| CONSORT Guidelines | Reporting Guideline | An evidence-based minimum set of recommendations for reporting RCTs. Includes a 25-item checklist and flow diagram to improve the quality and completeness of trial reporting [22] [27]. |

| PRECIS-2 Tool | Design Tool | A tool to help trialists design trials that are more pragmatic (conducted under usual clinical conditions) rather than explanatory (conducted under ideal conditions), helping match the design to the stated aim [28]. |

| Propensity Score | Statistical Method | A patient's probability of receiving the treatment given their observed baseline covariates. Used for matching or weighting to create balanced comparison groups in observational studies [25] [23]. |

| Directed Acyclic Graph (DAG) | Causal Framework | A visual tool used to represent prior knowledge about causal assumptions and sources of bias, guiding the selection of variables for adjustment in observational analyses [23] [5]. |

| E-Value | Sensitivity Metric | A quantitative measure of the robustness of a study result to potential unmeasured confounding. A higher E-value indicates greater confidence that the result is not explained by an unmeasured confounder [23] [5]. |


The debate is not about whether RCTs or observational studies are universally superior. The central thesis is that the research question and context must drive the choice of method [23] [5]. RCTs remain the gold standard for establishing efficacy under controlled conditions where they are feasible and ethical. However, a significant proportion of medicine must be practiced in the grey zones where RCTs cannot tread—due to ethical imperatives, practical realities, or the long arc of disease. In these areas, observational studies are not a weak substitute but a necessary and powerful source of evidence. The credibility of this evidence hinges on the rigorous application of advanced methodologies like target trial emulation, causal inference frameworks, and sensitivity analyses. For researchers, clinicians, and regulators, the path forward lies in moving beyond a rigid hierarchy of evidence. Instead, they must embrace triangulation—the practice of seeking consistency from multiple, independent study types with different underlying biases—to build a robust, clinically relevant, and ethically sound foundation for medical science [25] [23] [5].

The paradigm for establishing medical evidence is undergoing a fundamental transformation. For decades, the randomized controlled trial (RCT) has been considered the undisputed gold standard for clinical research [25]. This hierarchy positioned observational studies as inferior due to their perceived susceptibility to confounding and bias. However, the era of big data, characterized by massive datasets from electronic health records (EHRs), genomic databases, and real-world monitoring, is challenging this long-standing convention [5]. Emerging data sources and advanced analytical methods are enabling observational studies to complement and, in some contexts, even compete with RCTs in generating reliable evidence. This shift is particularly consequential for drug development and biomedical research, where the limitations of RCTs—including high costs, limited generalizability, and ethical constraints—are increasingly apparent [25] [5]. This guide objectively compares the performance of these evolving research paradigms, examining how big data is catalyzing a fundamental reassessment of what constitutes valid scientific evidence.

The Traditional Hierarchy: RCTs as the Gold Standard

Core Principles and Strengths of RCTs

Randomized Controlled Trials are designed to establish the efficacy of an intervention under ideal conditions [5]. Their primary strength lies in internal validity: the random assignment of subjects to intervention or control groups minimizes selection bias and, in large samples, balances both known and unknown confounding variables at baseline [25] [5]. This design provides a robust foundation for causal inference about the effect of the intervention itself.

Inherent Limitations and Practical Challenges

Despite their strengths, RCTs face significant constraints that impact their utility in the big data era:

- Limited Generalizability (External Validity): Strict inclusion and exclusion criteria often result in homogeneous study populations that do not reflect the "real-world" patients treated in clinical practice [25] [5].

- Ethical and Practical Infeasibility: For many critical clinical questions, such as the long-term effects of lifestyle factors or the harms of established treatments, RCTs are considered unethical or impractical to conduct [25].

- High Cost and Time Intensity: RCTs are exceptionally resource-intensive, requiring substantial financial investment and many years to complete, which can slow the pace of medical innovation [5].

- Vulnerability to Post-Randomization Biases: Randomization only protects against confounding at baseline. Issues such as loss to follow-up, non-adherence, and missing data can introduce bias during the trial's course [29] [5].

Table 1: Traditional Strengths and Limitations of Randomized Controlled Trials

| Aspect | Strengths | Limitations |

|---|---|---|

| Internal Validity | High; balances known and unknown confounders at baseline [5] | Vulnerable to post-randomization biases (non-adherence, loss to follow-up) [29] |

| Generalizability | Controlled conditions ensure precise efficacy measurement | Often low; homogeneous populations under artificial conditions [5] |

| Feasibility | Considered gold standard for regulatory approval | Costly, time-intensive, and sometimes unethical [25] [5] |

| Causal Inference | Strong, intuitive causal interpretation for assigned treatment | Intention-to-treat analysis may not reflect effects of actual treatment received [29] |

The Big Data Revolution: New Capabilities for Observational Research

The volume, variety, and velocity of data available for medical research have exploded. Key sources powering this revolution include:

- Electronic Health Records (EHRs) and Health Administrative Data: Provide longitudinal, real-world patient data on a massive scale, enabling research on diverse populations and clinical scenarios [5].

- Biobanks and Genomic Databases: Resources like the Catalogue Of Somatic Mutations In Cancer (COSMIC) and the Human Somatic Mutation Database (HSMD) aggregate expert-curated genetic and clinical data from hundreds of thousands of patients, accelerating target identification and drug discovery [30].

- Real-World Data from Digital Health Tools: Data from wearables, sensors, and mobile health applications offer continuous, real-time monitoring of patient physiology and behavior outside clinical settings [5].

Advanced Methodologies Strengthening Causal Inference

Critically, the analysis of these massive datasets has been revolutionized by sophisticated statistical methods that directly address traditional weaknesses of observational studies.

- Causal Inference Frameworks: Methods such as Directed Acyclic Graphs (DAGs) force researchers to explicitly define and visualize assumed causal relationships, potential confounders, and sources of bias before analysis [5].

- G-Methods: Advanced techniques like inverse probability weighting, g-estimation, and the parametric g-formula can adjust for both measured confounding and selection bias, including in re-analyses of RCTs with non-adherence [29].

- Propensity Score Matching: This technique attempts to mimic randomization by creating matched cohorts of treated and untreated subjects who have similar probabilities of receiving the treatment based on their observed characteristics [25].

- Sensitivity Analyses: Tools like the E-value quantify how strong an unmeasured confounder would need to be to explain away an observed association, providing a metric for assessing the robustness of study findings [5].
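To show how weighting can remove measured confounding, here is a minimal sketch of inverse probability weighting on a fully hypothetical dataset with one binary confounder (disease severity). The propensity score is estimated as the proportion treated within each severity stratum. The counts are constructed so that the true stratum-specific effect is +0.20 in both strata.

```python
from collections import defaultdict

# Hypothetical counts: (severity stratum, treated flag, n recovered, n not recovered)
rows = []
for stratum, treated, n_recovered, n_not in [
    (0, 1, 16, 4), (0, 0, 48, 32),   # mild disease: few treated, good prognosis
    (1, 1, 32, 48), (1, 0, 4, 16),   # severe disease: mostly treated, poor prognosis
]:
    rows += [(stratum, treated, 1)] * n_recovered + [(stratum, treated, 0)] * n_not

def weighted_risk_difference(rows, weights):
    """Weighted recovery rate in the treated minus the untreated."""
    totals = {1: [0.0, 0.0], 0: [0.0, 0.0]}  # arm -> [sum of w*y, sum of w]
    for (_, arm, outcome), w in zip(rows, weights):
        totals[arm][0] += w * outcome
        totals[arm][1] += w
    return totals[1][0] / totals[1][1] - totals[0][0] / totals[0][1]

# Propensity score: share of patients treated within each stratum
counts = defaultdict(lambda: [0, 0])  # stratum -> [n treated, n total]
for stratum, arm, _ in rows:
    counts[stratum][0] += arm
    counts[stratum][1] += 1
propensity = {s: n_treated / n for s, (n_treated, n) in counts.items()}

# Inverse probability of treatment weights
weights = [
    1 / propensity[s] if arm == 1 else 1 / (1 - propensity[s])
    for s, arm, _ in rows
]

naive = weighted_risk_difference(rows, [1.0] * len(rows))
iptw = weighted_risk_difference(rows, weights)
print(round(naive, 2), round(iptw, 2))  # -0.04 0.2
```

The unweighted comparison suggests that treatment is slightly harmful because sicker patients are treated more often. The weighted comparison recovers the +0.20 benefit built into the data. This works only because severity is measured. An unmeasured confounder would leave the weighted estimate biased.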

Direct Comparison: RCTs vs. Modern Observational Studies

Empirical evidence increasingly demonstrates that well-designed observational studies can produce results remarkably similar to RCTs. A landmark meta-analysis compared results from RCTs and observational studies across five clinical topics and found that the summary estimates were strikingly consistent [31].

Table 2: Comparative Analysis of RCTs and Modern Observational Studies

| Characteristic | Randomized Controlled Trial (RCT) | Modern Observational Study |

|---|---|---|

| Primary Objective | Establish efficacy under ideal conditions [5] | Examine effectiveness and safety in real-world settings [5] |

| Confounding Control | Randomization balances confounders at baseline [5] | Advanced statistical methods (e.g., propensity scores, g-methods) adjust for measured confounders [29] [5] |

| Data Source | Prospectively collected research data | EHRs, registries, claims data, genomic databases [30] [5] |

| Patient Population | Homogeneous, highly selected | Heterogeneous, reflects clinical practice [5] |

| Typical Scale | Hundreds to thousands of patients | Tens of thousands to millions of patients [30] |

| Key Strength | High internal validity for assigned treatment [5] | High external validity and efficiency for long-term/harm outcomes [5] |

| Key Limitation | Limited generalizability, high cost, ethical constraints [25] [5] | Residual confounding by unmeasured factors remains a threat [5] |

| Role in Drug Development | Pivotal evidence for regulatory approval of efficacy [32] | Target validation, trial design, safety monitoring, label expansion [30] [32] |

The convergence of results is further illustrated in specific clinical examples. For instance, the summary relative risk for Bacille Calmette-Guérin vaccine effectiveness from 13 RCTs was 0.49, while the odds ratio from 10 case-control studies was an almost identical 0.50 [31]. Similarly, for hypertension treatment and stroke, RCTs yielded a relative risk of 0.58, closely matching the 0.62 estimate from cohort studies [31]. This level of agreement, observed across multiple clinical domains, challenges the historical notion that observational studies systematically overestimate treatment effects.
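One simple way to express this agreement is the ratio of the observational estimate to the RCT estimate, where 1.0 means identical results. The sketch below applies it to the figures quoted above. It is only descriptive arithmetic: the BCG comparison mixes a relative risk with an odds ratio, and no confidence intervals are available here, so the ratios cannot serve as a formal test of agreement.

```python
import math

# Summary estimates quoted in the text: (RCT estimate, observational estimate)
comparisons = {
    "BCG vaccine": (0.49, 0.50),
    "Hypertension treatment and stroke": (0.58, 0.62),
}

def agreement(rct, observational):
    """Ratio of estimates and its natural log (0 means perfect agreement)."""
    ratio = observational / rct
    return ratio, math.log(ratio)

results = {topic: agreement(rct, obs) for topic, (rct, obs) in comparisons.items()}
for topic, (ratio, log_diff) in results.items():
    print(f"{topic}: ratio = {ratio:.2f}, log difference = {log_diff:+.3f}")
```

The ratios are roughly 1.02 and 1.07. In both examples the observational estimate is marginally closer to the null than the RCT estimate.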

Experimental Protocols and Data-Generation Methodologies

Protocol 1: Leveraging Curated Somatic Mutation Databases for Target Discovery

Objective: To identify and prioritize novel oncology drug targets by analyzing somatic mutation patterns across cancer types and patient populations using expert-curated databases.

Methodology:

- Data Acquisition: Source large-scale, structured somatic mutation data from expert-curated knowledgebases like COSMIC, which integrates data from over 30,000 scientific publications and large-scale cancer genomics studies [30].

- Curation and Standardization: Implement a multi-stage curation workflow [30]:

- Quality and Relevance Check: The information source (publication or bioresource) is checked for quality and relevance.

- Controlled Vocabulary Mapping: All curated features and terms are converted to standardized ontologies (e.g., NCI thesaurus for disease classification) to ensure interoperability.

- Data Extraction: The minimum unit of curation (a genetic variant, tumor type, and study scope) is extracted. Associated clinical features (age, gender, therapy history, etc.) are also captured when available.

- Variant Annotation and Prioritization: Utilize accompanying database modules like the Cancer Gene Census (CGC) and Cancer Mutation Census (CMC) to annotate the oncogenic role of genes and mutations based on defined biological evidence [30].

- Analysis: Calculate mutation prevalence, conduct pathway enrichment analyses, and correlate mutational status with clinical outcomes to identify high-value targets for therapeutic intervention.
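The prevalence calculation in the analysis step can be sketched as follows. The records, sample identifiers, and field layout are hypothetical and do not reflect the actual COSMIC export format. The denominator is assumed to be the number of samples profiled for each tumor type.

```python
from collections import defaultdict

# Hypothetical curated records: (sample_id, tumor_type, mutated_gene)
records = [
    ("S1", "colorectal", "KRAS"), ("S1", "colorectal", "TP53"),
    ("S2", "colorectal", "KRAS"), ("S2", "colorectal", "TP53"),
    ("S3", "colorectal", "TP53"),
    ("S4", "lung", "KRAS"), ("S5", "lung", "EGFR"),
]

# Samples profiled per tumor type, including those with no reported mutation (S6)
profiled = {"colorectal": {"S1", "S2", "S3", "S6"}, "lung": {"S4", "S5"}}

def mutation_prevalence(records, profiled):
    """Fraction of profiled samples carrying a mutation, per (tumor type, gene)."""
    mutated = defaultdict(set)  # (tumor_type, gene) -> ids of mutated samples
    for sample_id, tumor_type, gene in records:
        mutated[(tumor_type, gene)].add(sample_id)  # a set avoids double counting
    return {
        key: len(samples) / len(profiled[key[0]])
        for key, samples in mutated.items()
    }

prevalence = mutation_prevalence(records, profiled)
print(prevalence[("colorectal", "TP53")])  # 0.75
```

Counting unique samples rather than rows matters when a sample carries several variants in the same gene.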

Protocol 2: Model-Informed Drug Development (MIDD) for Trial Optimization

Objective: To use quantitative models to simulate clinical trials, optimize study design, and support regulatory decision-making, thereby increasing the probability of success and efficiency of drug development.

Methodology:

- Define Question of Interest (QOI) and Context of Use (COU): Precisely specify the clinical or pharmacological question the model will address and the context in which the model's predictions will be applied [32].

- Model Selection: Choose a "fit-for-purpose" modeling methodology aligned with the development stage [32]:

- Early Discovery: Quantitative Structure-Activity Relationship (QSAR) models to predict compound activity.

- Preclinical to First-in-Human (FIH): Physiologically Based Pharmacokinetic (PBPK) models for FIH dose prediction.

- Clinical Development: Population PK/Exposure-Response (ER) models to characterize variability and inform dosing.

- Clinical Trial Simulation: Use the developed model to run virtual trials. Explore different scenarios by varying trial parameters (e.g., dosing regimens, enrollment criteria, sample sizes) to optimize the actual trial design [32].

- Model Evaluation and Regulatory Submission: Validate the model against existing data and quantify uncertainty. Integrate the model-based evidence into the overall development strategy and regulatory submissions [32].
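The clinical trial simulation step can be illustrated with a deliberately simple Monte Carlo sketch. It simulates two-arm trials with a binary endpoint and estimates power at several sample sizes. The response rates (30% versus 50%), the sample sizes, the two-proportion z-test, and the number of simulated trials are all assumptions for illustration. A real MIDD simulation would draw outcomes from a fitted pharmacometric model instead.

```python
import math
import random

def two_proportion_p_value(x1, n1, x2, n2):
    """Two-sided p-value from a pooled two-proportion z-test."""
    pooled = (x1 + x2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    if se == 0:
        return 1.0  # no variation observed, so no evidence of a difference
    z = (x1 / n1 - x2 / n2) / se
    return math.erfc(abs(z) / math.sqrt(2))

def simulated_power(n_per_arm, p_control, p_treatment,
                    n_trials=500, alpha=0.05, seed=0):
    """Share of simulated trials that reach statistical significance."""
    rng = random.Random(seed)
    significant = 0
    for _ in range(n_trials):
        responders_c = sum(rng.random() < p_control for _ in range(n_per_arm))
        responders_t = sum(rng.random() < p_treatment for _ in range(n_per_arm))
        p = two_proportion_p_value(responders_t, n_per_arm, responders_c, n_per_arm)
        if p < alpha:
            significant += 1
    return significant / n_trials

# Explore the design space: vary the sample size per arm
power_by_n = {n: simulated_power(n, 0.30, 0.50) for n in (25, 50, 100, 200)}
for n, power in power_by_n.items():
    print(f"n per arm = {n:3d}: estimated power = {power:.2f}")
```

The same loop can be extended to vary dosing regimens or enrollment criteria by changing how the response probabilities are generated.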

Visualizing Workflows and Methodologies

Evidence Integration and Validation Workflow

Diagram 1: Evidence integration from multiple data sources

Somatic Data Curation for Target Discovery

Diagram 2: Expert curation workflow for cancer genomic data

Table 3: Essential Resources for Modern Data-Intensive Clinical Research

| Resource/Solution | Type | Primary Function in Research | Example/Provider |

|---|---|---|---|

| Expert-Curated Somatic Databases | Data Resource | Provides high-quality, structured data on cancer mutations for target identification and validation [30] | COSMIC, HSMD [30] |

| Causal Inference Frameworks | Methodological Framework | Provides a structured approach for designing observational studies and drawing causal conclusions from non-experimental data [5] | Directed Acyclic Graphs (DAGs), G-Methods [29] [5] |

| Model-Informed Drug Development (MIDD) | Quantitative Framework | Uses pharmacokinetic/pharmacodynamic models to simulate drug behavior, optimize trials, and support regulatory decisions [32] | PBPK, QSP, ER Modeling [32] |

| Electronic Health Record (EHR) Systems | Data Resource | Provides large-scale, real-world clinical data on patient populations, treatments, and outcomes for hypothesis generation and testing [5] | Epic, Cerner, Allscripts |

| Data Quality & Observability Platforms | Software Tool | Monitors data pipelines for freshness, volume, schema changes, and lineage to ensure analytics are based on reliable data [33] | Monte Carlo, Acceldata [34] [33] |

The conversation around medical evidence is fundamentally shifting from a rigid hierarchy to a pragmatic, integrated paradigm. Big data and advanced analytics have not rendered RCTs obsolete but have instead revealed that no single study design can answer all research questions [5]. The future of clinical research and drug development lies in the triangulation of evidence—thoughtfully combining the high internal validity of RCTs with the scalability, generalizability, and real-world relevance of modern observational studies [5]. Researchers and drug developers must become fluent in both paradigms, understanding the specific questions each is best suited to answer and leveraging emerging data sources and methodologies to build a more complete, rapid, and patient-centric evidence base for modern medicine.

Advanced Methods for Cross-Validation: Causal Inference and Diagnostic Frameworks

In the evolving landscape of clinical and biological research, the integration of observational studies and randomized controlled trials (RCTs) represents a paradigm shift in causal evidence generation. While RCTs have traditionally been regarded as the gold standard for establishing causal effects due to their ability to eliminate confounding through randomization, they often suffer from significant limitations including limited generalizability, high costs, and ethical constraints [35] [5]. Conversely, observational studies, which include data from electronic health records, disease registries, and large cohort studies, offer greater real-world relevance and larger sample sizes but are potentially compromised by unmeasured confounding and other biases [36] [37]. The emerging discipline of causal inference provides a methodological framework for analyzing observational data as hypothetical RCTs, thereby creating a bridge between these complementary approaches to evidence generation.

This methodology is particularly relevant for researchers and drug development professionals seeking to validate observational study results against randomized trials research. By applying formal causal frameworks to observational data, investigators can approximate the conditions of randomized experiments, test causal hypotheses, and generate evidence that complements findings from RCTs [5] [38]. The growing recognition that "no study is designed to answer all questions" has accelerated the adoption of these methods across therapeutic areas, including cardiology, mental health, and oncology, where traditional RCTs often exclude significant portions of real-world patient populations [35].

Table 1: Fundamental Characteristics of RCTs and Observational Studies

| Characteristic | Randomized Controlled Trials (RCTs) | Observational Studies |

|---|---|---|

| Primary Strength | High internal validity through confounding control | High external validity through real-world relevance |

| Key Limitation | Limited generalizability due to selective participation | Potential for unmeasured confounding |

| Implementation | Controlled experimental conditions | Real-world settings with existing data |

| Cost & Feasibility | Often expensive, time-consuming, and sometimes unethical | Generally more feasible for large-scale, long-term questions |

| Patient Population | Often highly selected with restrictive criteria | Typically more representative of target population |

Foundational Concepts and Frameworks

The Potential Outcomes Framework

The potential outcomes framework, also known as the Rubin Causal Model, provides a formal mathematical structure for defining causal effects. In this framework, each individual has two potential outcomes: Y(1) under treatment and Y(0) under control. The fundamental problem of causal inference is that we can only observe one of these outcomes for each individual [36]. The average treatment effect (ATE) is defined as τ = E[Y(1) - Y(0)], representing the difference in expected outcomes between treatment and control conditions across the population. When analyzing observational data as hypothetical RCTs, researchers aim to estimate this quantity while accounting for systematic differences between treated and untreated groups.

The conditional average treatment effect (CATE), denoted as τ(x) = E[Y(1) - Y(0) | X=x], extends this concept by examining how treatment effects vary across subpopulations defined by covariates X [36]. This is particularly valuable for understanding heterogeneous treatment effects and identifying which patient subgroups benefit most from interventions. The potential outcomes framework forces researchers to explicitly state the counterfactual comparison of interest—what would have happened to the same individuals under a different treatment condition—which is the fundamental thought experiment underlying both RCTs and causal inference from observational data.
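These definitions can be illustrated with a toy table in which both potential outcomes are listed for every patient. This is possible only because the data are invented. The sketch computes the ATE, the CATE for two hypothetical subgroups, and the single outcome that would actually be observed under a given treatment assignment.

```python
# Hypothetical patients: (subgroup x, Y(1) under treatment, Y(0) under control)
patients = [
    ("older", 1, 0), ("older", 1, 0), ("older", 1, 1), ("older", 0, 0),
    ("younger", 1, 1), ("younger", 0, 0), ("younger", 1, 0), ("younger", 1, 1),
]

def ate(rows):
    """Average treatment effect: mean of Y(1) - Y(0)."""
    return sum(y1 - y0 for _, y1, y0 in rows) / len(rows)

def cate(rows, x):
    """Conditional average treatment effect within subgroup x."""
    return ate([row for row in rows if row[0] == x])

def observed_outcome(row, treated):
    """Only the potential outcome matching the received treatment is seen."""
    _, y1, y0 = row
    return y1 if treated else y0

print(ate(patients))              # 0.375
print(cate(patients, "older"))    # 0.5
print(cate(patients, "younger"))  # 0.25
print(observed_outcome(patients[0], treated=True))  # 1; Y(0) stays unobserved
```

In real data, each row would contain only one of the two outcome columns, which is why the ATE and CATE must be estimated under assumptions rather than computed directly.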

Structural Causal Models and Directed Acyclic Graphs (DAGs)

Structural causal models (SCMs) use mathematical relationships to represent data-generating processes, while directed acyclic graphs (DAGs) provide visual representations of the assumed causal relationships among variables [36] [38]. These tools are essential for articulating and testing causal assumptions before conducting analyses. A DAG consists of nodes (variables) and directed edges (causal pathways), with specific configurations representing different sources of bias:

- Confounders are common causes of both treatment and outcome variables

- Mediators lie on the causal pathway between treatment and outcome

- Colliders are common effects of treatment and outcome that can introduce bias if conditioned upon

- Effect modifiers are variables that influence the magnitude of treatment effects

The explicit mapping of these relationships helps researchers select appropriate adjustment strategies and avoid biases such as conditioning on colliders or failing to adjust for important confounders [38]. This process represents a significant advancement over traditional statistical approaches that often rely on associational patterns without explicit causal justification.
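
Collider bias is often easier to appreciate numerically. The following sketch assumes a hypothetical data-generating process in which treatment and outcome are causally unrelated but both raise a third variable (the collider). The variable names, coefficients, and selection threshold are illustrative assumptions, not taken from the cited sources.

```python
import math
import random


def pearson(xs, ys):
    """Pearson correlation computed from first principles."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def simulate(n=20000, seed=1):
    rng = random.Random(seed)
    a = [rng.gauss(0, 1) for _ in range(n)]   # treatment intensity
    y = [rng.gauss(0, 1) for _ in range(n)]   # outcome, independent of a by construction
    # Collider: a common effect of treatment and outcome (e.g. hospital admission)
    c = [ai + yi + rng.gauss(0, 1) for ai, yi in zip(a, y)]
    return a, y, c


def correlation_given_collider(a, y, c, threshold=1.0):
    """Correlation of a and y after restricting to units with a high collider value."""
    selected = [(ai, yi) for ai, yi, ci in zip(a, y, c) if ci > threshold]
    return pearson([s[0] for s in selected], [s[1] for s in selected])


a, y, c = simulate()
print("correlation in full sample:", round(pearson(a, y), 3))
print("correlation after selecting on collider:", round(correlation_given_collider(a, y, c), 3))
```

Restricting the sample on the collider induces a negative association between two variables that are independent in the full population, which is why a DAG should be consulted before choosing adjustment or selection variables.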

Causal Inference Workflow for Analyzing Observational Data as Hypothetical RCTs

Key Methodological Approaches

Propensity Score Methods

Propensity score methods aim to balance the distribution of covariates between treated and untreated groups in observational data, mimicking the covariate balance achieved through randomization in RCTs. The propensity score, defined as e(X) = P(A=1|X), represents the probability of treatment assignment conditional on observed covariates [36]. Several approaches leverage propensity scores:

- Propensity Score Matching: Creates matched sets of treated and untreated individuals with similar propensity scores, allowing for direct comparison of outcomes between matched groups

- Inverse Probability of Treatment Weighting (IPTW): Uses weights based on the inverse of the propensity score to create a pseudo-population where treatment assignment is independent of measured covariates

- Propensity Score Stratification: Groups individuals into strata based on propensity score quantiles and estimates treatment effects within each stratum

- Covariate Adjustment: Includes the propensity score as a covariate in outcome regression models

These methods rely on the assumption of strongly ignorable treatment assignment, which requires that all common causes of treatment and outcome are measured and included in the propensity score model, and that every individual has a nonzero probability of receiving each treatment (positivity) [36]. When this assumption holds and the propensity score model is correctly specified, propensity score methods can reduce confounding bias and provide estimates that approximate those from RCTs.
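
As a minimal sketch of IPTW, the example below simulates a single measured confounder and estimates e(X) = P(A=1|X) by the treated fraction within each covariate level. This is an assumption made to keep the example dependency-free; in practice the propensity score is usually estimated with logistic regression or a similar model over many covariates. All data-generating values are hypothetical.

```python
import random
from collections import defaultdict


def simulate_observational(n=20000, seed=2):
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x = rng.choice([0, 1, 2])               # measured confounder (e.g. severity level)
        p_treat = [0.2, 0.5, 0.8][x]            # sicker patients are treated more often
        a = 1 if rng.random() < p_treat else 0
        y = 1.0 * x + 2.0 * a + rng.gauss(0, 1)  # true ATE is 2.0 by construction
        data.append((x, a, y))
    return data


def estimate_propensity(data):
    """e(x) = P(A=1 | X=x), estimated as the treated fraction in each level of x."""
    n = defaultdict(int)
    treated = defaultdict(int)
    for x, a, _ in data:
        n[x] += 1
        treated[x] += a
    return {x: treated[x] / n[x] for x in n}


def iptw_ate(data):
    """Normalized (Hajek) inverse probability of treatment weighted ATE."""
    e = estimate_propensity(data)
    num1 = den1 = num0 = den0 = 0.0
    for x, a, y in data:
        if a == 1:
            w = 1.0 / e[x]
            num1 += w * y
            den1 += w
        else:
            w = 1.0 / (1.0 - e[x])
            num0 += w * y
            den0 += w
    return num1 / den1 - num0 / den0


def naive_ate(data):
    treated = [y for _, a, y in data if a == 1]
    control = [y for _, a, y in data if a == 0]
    return sum(treated) / len(treated) - sum(control) / len(control)


data = simulate_observational()
print("naive difference:", round(naive_ate(data), 2))
print("IPTW estimate:", round(iptw_ate(data), 2))
```

The weighting recovers the simulated effect only because the single confounder is measured and every level of x contains both treated and untreated individuals.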

G-Methods

G-methods, including g-formula, inverse probability weighting, and g-estimation, extend traditional approaches to handle time-varying treatments and confounders more effectively [38]. These methods are particularly valuable when dealing with complex longitudinal data where time-dependent confounding may be present:

- G-formula (or the parametric g-formula) uses regression models to estimate the outcome under different treatment protocols, then standardizes these estimates to the population distribution of covariates

- Inverse Probability of Treatment Weighting for time-varying treatments extends IPTW to longitudinal settings by creating weights for each treatment period

- G-estimation of structural nested models directly estimates the causal effect parameter by finding the value at which the outcome, after removing the hypothesized treatment effect, is independent of treatment conditional on measured covariates

These methods enable researchers to estimate the effects of sustained treatment strategies while appropriately accounting for time-varying confounders that are affected by prior treatment—a scenario where conventional methods often produce biased results.
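
To illustrate the g-formula in the simplest time-varying setting, the sketch below assumes two treatment times (A0, A1) and one binary time-varying confounder L1 that is affected by A0 and influences A1. It uses the nonparametric form of the g-formula with stratum means rather than fitted regression models; the data-generating values are hypothetical.

```python
import random
from collections import defaultdict


def simulate_longitudinal(n=40000, seed=3):
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        a0 = int(rng.random() < 0.5)                # first treatment
        l1 = int(rng.random() < 0.3 + 0.4 * a0)     # confounder affected by a0
        a1 = int(rng.random() < 0.2 + 0.6 * l1)     # second treatment depends on l1
        y = 1.0 * a0 + 1.0 * a1 + 2.0 * l1 + rng.gauss(0, 1)
        data.append((a0, l1, a1, y))
    return data


def g_formula_mean(data, a0, a1):
    """E[Y^(a0,a1)] = sum over l of E[Y | a0, l, a1] * P(L1 = l | a0)."""
    n_a0 = 0
    n_l = defaultdict(int)
    y_sum = defaultdict(float)
    y_n = defaultdict(int)
    for r_a0, l1, r_a1, y in data:
        if r_a0 != a0:
            continue
        n_a0 += 1
        n_l[l1] += 1
        if r_a1 == a1:
            y_sum[l1] += y
            y_n[l1] += 1
    return sum((y_sum[l] / y_n[l]) * (n_l[l] / n_a0) for l in n_l)


def crude_contrast(data):
    """Unadjusted mean difference: always treated vs never treated."""
    always = [y for a0, _, a1, y in data if a0 == 1 and a1 == 1]
    never = [y for a0, _, a1, y in data if a0 == 0 and a1 == 0]
    return sum(always) / len(always) - sum(never) / len(never)


data = simulate_longitudinal()
effect = g_formula_mean(data, 1, 1) - g_formula_mean(data, 0, 0)
print("g-formula, always vs never treated:", round(effect, 2))
print("crude contrast:", round(crude_contrast(data), 2))
```

By construction the effect of always versus never treating is 2.8 (direct effects of 1.0 each, plus 2.0 times the 0.4 increase in L1 caused by A0). The crude contrast is biased because L1 drives A1, while simply adjusting for L1 in a regression would remove the part of the A0 effect that operates through L1.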

Instrumental Variable Approaches

Instrumental variable (IV) methods address unmeasured confounding by leveraging natural experiments—variables that influence treatment assignment but do not directly affect the outcome except through treatment [36]. A valid instrument must satisfy three key conditions: (1) be associated with the treatment variable, (2) not be associated with unmeasured confounders, and (3) affect the outcome only through its effect on treatment [37]. Common instruments in clinical research include:

- Geographic variation in treatment preferences or availability

- Physician preference for specific treatments

- Genetic markers in Mendelian randomization studies

- Institutional policy changes that affect treatment algorithms

IV methods are particularly valuable when significant unmeasured confounding is suspected, as they can provide consistent effect estimates even when unmeasured confounders are present. However, the validity of IV analyses depends critically on the plausibility of the instrumental assumptions, which often cannot be fully tested with the available data.
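
The simplest IV estimator, the Wald ratio for a binary instrument, can be sketched as follows. The simulation assumes a valid instrument Z, an unmeasured confounder U, and a constant treatment effect of 1.5; all of these are hypothetical choices for illustration. With heterogeneous effects, the same ratio is usually interpreted as a local effect among compliers under an additional monotonicity assumption.

```python
import random


def simulate_iv(n=100000, seed=4):
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        z = int(rng.random() < 0.5)                      # instrument (e.g. physician preference)
        u = rng.gauss(0, 1)                              # unmeasured confounder
        a = int(1.0 * z + u + rng.gauss(0, 1) > 0.5)     # treatment depends on z and u
        y = 1.5 * a + 1.0 * u + rng.gauss(0, 0.5)        # z affects y only through a
        data.append((z, a, y))
    return data


def group_mean(data, index, z_value):
    values = [row[index] for row in data if row[0] == z_value]
    return sum(values) / len(values)


def wald_estimate(data):
    """(E[Y|Z=1] - E[Y|Z=0]) / (E[A|Z=1] - E[A|Z=0])."""
    reduced_form = group_mean(data, 2, 1) - group_mean(data, 2, 0)
    first_stage = group_mean(data, 1, 1) - group_mean(data, 1, 0)
    return reduced_form / first_stage


def naive_estimate(data):
    treated = [y for _, a, y in data if a == 1]
    control = [y for _, a, y in data if a == 0]
    return sum(treated) / len(treated) - sum(control) / len(control)


data = simulate_iv()
print("naive difference:", round(naive_estimate(data), 2))
print("Wald IV estimate:", round(wald_estimate(data), 2))
```

The naive comparison is inflated by U, which cannot be adjusted for because it is unmeasured, whereas the Wald ratio uses only variation in treatment induced by Z.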

Table 2: Comparison of Primary Causal Inference Methods

| Method | Key Mechanism | Primary Assumptions | Best Use Cases |
|---|---|---|---|
| Propensity Score Methods | Balance measured covariates between treatment groups | All confounders measured; positivity | Cross-sectional studies with rich covariate data |
| G-Methods | Account for time-varying confounding | Sequential exchangeability; no model misspecification | Longitudinal studies with time-varying treatments |
| Instrumental Variables | Leverage natural experiments | Valid instrument available; exclusion restriction | Significant unmeasured confounding suspected |
| Difference-in-Differences | Compare trends over time | Parallel trends assumption | Policy changes or natural experiments |
| Regression Discontinuity | Exploit arbitrary thresholds | Continuous relationship except at cutoff | Eligibility thresholds or scoring systems |

Experimental Protocols and Implementation

Protocol for Transporting RCT Results to Target Populations

Generalizability and transportability methods enable researchers to extend causal inferences from RCT participants to specific target populations represented by observational data [36]. The standard protocol involves:

- Define the target population using observational data that represents the clinical population of interest

- Identify common covariates measured consistently across both RCT and observational datasets

- Estimate the probability of trial participation using a model that predicts S=1 (trial participation) versus S=0 (target population) based on covariates X

- Compute transportability weights for RCT participants as ω = P(S=0|X)/P(S=1|X)

- Apply these weights when estimating the treatment effect from the RCT data

- Validate transported estimates using sensitivity analyses and model checks

This approach allows drug development professionals to assess how well RCT results might apply to broader clinical populations, addressing common concerns about the selective nature of trial participation [36] [35]. When applying this protocol, it is essential to measure and adjust for all covariates that both predict trial participation and modify the treatment effect.
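
The weighting steps of the protocol can be sketched with a single binary effect modifier. In this hypothetical setup the trial under-represents patients with x = 1, who benefit more from treatment, and P(S=1|X) is estimated from stratum counts in the stacked trial and target data. A real analysis would typically fit a participation model over many covariates.

```python
import random
from collections import Counter


def simulate_trial(n=10000, seed=5):
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        x = int(rng.random() < 0.2)                 # effect modifier, rare in the trial
        a = int(rng.random() < 0.5)                 # randomized treatment
        y = 1.0 + a * (1.0 + 2.0 * x) + rng.gauss(0, 1)
        rows.append((x, a, y))
    return rows


def simulate_target_covariates(n=10000, seed=6):
    rng = random.Random(seed)
    return [int(rng.random() < 0.7) for _ in range(n)]  # x is common in the target


def transport_weights(trial, target_x):
    """omega(x) = P(S=0 | X=x) / P(S=1 | X=x), from counts in the stacked data."""
    n_trial = Counter(x for x, _, _ in trial)
    n_target = Counter(target_x)
    weights = {}
    for x in n_trial:
        p_s1 = n_trial[x] / (n_trial[x] + n_target[x])
        weights[x] = (1.0 - p_s1) / p_s1
    return weights


def weighted_ate(trial, weights):
    """Weighted difference in arm means among trial participants."""
    num = {0: 0.0, 1: 0.0}
    den = {0: 0.0, 1: 0.0}
    for x, a, y in trial:
        num[a] += weights[x] * y
        den[a] += weights[x]
    return num[1] / den[1] - num[0] / den[0]


trial = simulate_trial()
target_x = simulate_target_covariates()
unit_weights = {0: 1.0, 1: 1.0}
print("trial ATE (unweighted):", round(weighted_ate(trial, unit_weights), 2))
print("transported ATE:", round(weighted_ate(trial, transport_weights(trial, target_x)), 2))
```

By construction the effect is 1.4 in the trial population and 2.4 in the target population; the weighted estimate moves toward the latter because the weights reshape the trial's covariate distribution to match the target.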

Data fusion methods combine information from both RCTs and observational studies to improve statistical efficiency and enhance causal conclusions [36] [39]. The standard implementation protocol includes:

- Harmonize variables across data sources to ensure consistent definitions and measurements

- Assess compatibility of study populations through covariate balance checks and overlap assessments

- Specify the integration approach based on the scientific question:

  - For unconfoundedness assessment: Test whether observational and experimental estimates differ after rigorous confounding adjustment

  - For efficiency improvement: Use RCT data to anchor the causal effect while borrowing information from observational data to improve precision

  - For heterogeneous treatment effect estimation: Leverage the larger sample size of observational data to examine effect modification

- Implement estimation procedures using methods like doubly robust estimators, Bayesian hierarchical models, or power priors

- Conduct sensitivity analyses to evaluate robustness to violations of key assumptions

This protocol is particularly valuable when RCTs are underpowered for subgroup analyses or when assessing the consistency of treatment effects across different study designs and populations.
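
As a deliberately simple illustration of the unconfoundedness check and of borrowing precision, the sketch below compares two effect estimates with a z-test and, if they are judged compatible, combines them by inverse-variance weighting. This is a crude stand-in for the doubly robust, hierarchical, or power-prior approaches named above, and it assumes the two estimates are independent, target the same estimand, and have approximately normal sampling distributions. The numeric inputs are hypothetical.

```python
import math


def compare_estimates(est_rct, se_rct, est_obs, se_obs):
    """Two-sided z-test for a difference between independent estimates."""
    diff = est_obs - est_rct
    se_diff = math.sqrt(se_rct ** 2 + se_obs ** 2)
    z = diff / se_diff
    p_value = math.erfc(abs(z) / math.sqrt(2))   # 2 * (1 - Phi(|z|))
    return z, p_value


def inverse_variance_pool(est_rct, se_rct, est_obs, se_obs):
    """Fixed-effect pooled estimate and its standard error."""
    w_rct = 1.0 / se_rct ** 2
    w_obs = 1.0 / se_obs ** 2
    pooled = (w_rct * est_rct + w_obs * est_obs) / (w_rct + w_obs)
    pooled_se = math.sqrt(1.0 / (w_rct + w_obs))
    return pooled, pooled_se


# Hypothetical log hazard ratios and standard errors
z, p = compare_estimates(est_rct=-0.25, se_rct=0.10, est_obs=-0.20, se_obs=0.05)
print("z =", round(z, 2), "p =", round(p, 3))
if p > 0.05:
    pooled, pooled_se = inverse_variance_pool(-0.25, 0.10, -0.20, 0.05)
    print("pooled estimate:", round(pooled, 3), "SE:", round(pooled_se, 3))
```

A non-significant difference does not establish that the observational estimate is unconfounded, so pooling of this kind is best reported alongside the separate estimates and sensitivity analyses.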

Key Causal Relationships in Observational Data Analysis

Comparative Performance and Validation

Empirical Comparisons with RCT Gold Standards

Multiple studies have compared causal inference methods applied to observational data with results from RCTs addressing similar clinical questions. The findings suggest that carefully conducted observational analyses can produce estimates similar to those from RCTs when appropriate methods are applied:

- Colnet et al. (2023) reviewed numerous case studies and found that methods combining RCTs and observational data, such as doubly robust estimators and transportability approaches, successfully improved the generalizability of RCT findings while maintaining internal validity [36] [39]

- A literature review across cardiology, mental health, and oncology demonstrated that high-quality observational studies with appropriate causal inference methods produced similar effect estimates to RCTs in many clinical scenarios, though with variable performance across different methodological approaches [35]

- Comparative analyses have shown that discrepancies between observational studies and RCTs often stem from inadequate adjustment for confounding rather than inherent flaws in observational data itself [37]

These validation studies highlight that methodological rigor, comprehensive confounding adjustment, and careful sensitivity analyses are more important than study design per se in generating reliable causal evidence.

Performance Metrics for Causal Methods

When evaluating the performance of causal inference methods, researchers should examine multiple metrics:

- Bias: The difference between the estimated effect and the true causal effect

- Variance: The precision of the effect estimate

- Coverage: The proportion of confidence intervals that contain the true effect

- Mean squared error: A composite measure of bias and variance

- Sensitivity to unmeasured confounding: How robust estimates are to potential violations of the unconfoundedness assumption
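
The first four metrics can be computed directly from repeated simulation runs in which the true effect is known. The helper below is a minimal sketch; the function name and the use of a normal-approximation 95% interval (estimate ± 1.96 × SE) are assumptions made for the example.

```python
def evaluate_estimator(estimates, std_errors, truth):
    """Summarize simulation replicates of an estimator against a known true effect."""
    n = len(estimates)
    mean_est = sum(estimates) / n
    bias = mean_est - truth
    variance = sum((e - mean_est) ** 2 for e in estimates) / (n - 1)
    mse = sum((e - truth) ** 2 for e in estimates) / n
    covered = sum(1 for e, se in zip(estimates, std_errors)
                  if abs(e - truth) <= 1.96 * se)
    return {"bias": bias, "variance": variance, "mse": mse, "coverage": covered / n}


# Toy replicates from a hypothetical simulation with true effect 2.0
summary = evaluate_estimator(
    estimates=[1.9, 2.1, 2.3, 1.8, 2.0],
    std_errors=[0.2, 0.2, 0.2, 0.2, 0.2],
    truth=2.0,
)
print(summary)
```

In a real simulation study the number of replicates would be in the hundreds or thousands, so that the coverage estimate itself has acceptable Monte Carlo error.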

Simulation studies consistently show that doubly robust methods, which combine outcome regression with propensity score weighting, generally outperform approaches that rely exclusively on one component, particularly when model specifications may be incorrect [36]. These methods provide consistent effect estimates if either the propensity score model or the outcome regression model is correctly specified.
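
The double-robustness property can be demonstrated with an augmented inverse probability weighted (AIPW) estimator, one common doubly robust method. In the sketch below the "models" are simple stratum means so the example has no dependencies, and a model is deliberately misspecified by ignoring the confounder. The data-generating values and the `use_x` switch are hypothetical devices for illustration.

```python
import random
from collections import defaultdict


def simulate(n=20000, seed=7):
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x = rng.choice([0, 1, 2])                          # measured confounder
        a = int(rng.random() < [0.2, 0.5, 0.8][x])
        y = 1.0 * x + 2.0 * a + rng.gauss(0, 1)            # true ATE is 2.0
        data.append((x, a, y))
    return data


def fit_propensity(data, use_x=True):
    """e(x) as treated fraction per level of x; use_x=False ignores x (misspecified)."""
    n, treated = defaultdict(int), defaultdict(int)
    for x, a, _ in data:
        key = x if use_x else None
        n[key] += 1
        treated[key] += a
    return lambda x: treated[x if use_x else None] / n[x if use_x else None]


def fit_outcome(data, use_x=True):
    """m(a, x) as mean outcome per arm and level of x; use_x=False ignores x."""
    total, n = defaultdict(float), defaultdict(int)
    for x, a, y in data:
        key = (a, x if use_x else None)
        total[key] += y
        n[key] += 1
    return lambda a, x: total[(a, x if use_x else None)] / n[(a, x if use_x else None)]


def aipw_ate(data, e, m):
    """Mean of m1 - m0 + A(Y - m1)/e - (1 - A)(Y - m0)/(1 - e)."""
    total = 0.0
    for x, a, y in data:
        m1, m0, ex = m(1, x), m(0, x), e(x)
        total += m1 - m0 + a * (y - m1) / ex - (1 - a) * (y - m0) / (1 - ex)
    return total / len(data)


data = simulate()
for ps_ok, om_ok in [(True, False), (False, True), (False, False)]:
    est = aipw_ate(data, fit_propensity(data, ps_ok), fit_outcome(data, om_ok))
    print("propensity correct:", ps_ok, "| outcome correct:", om_ok, "| ATE:", round(est, 2))
```

The estimate stays close to the simulated effect when either model uses the confounder and reverts to the confounded difference when both ignore it.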

Table 3: Performance Comparison of Causal Methods Against RCT Benchmarks

| Method Category | Bias Reduction | Variance Impact | Handling of Unmeasured Confounding | Ease of Implementation |
|---|---|---|---|---|
| Propensity Score Matching | Moderate to High | Increases variance | Limited | Moderate |
| Inverse Probability Weighting | High | Can substantially increase variance | Limited | Moderate |
| Doubly Robust Methods | High | Moderate variance increase | Limited | More complex |
| Instrumental Variables | Potentially addresses unmeasured confounding | Often increases variance substantially | Addresses unmeasured confounding if valid | Difficult to find valid instruments |
| G-Methods | High for time-varying confounding | Varies by implementation | Limited to measured confounders | Complex implementation |

Successful implementation of causal inference methods for analyzing observational data as hypothetical RCTs requires both conceptual understanding and practical tools. The following toolkit outlines essential components for researchers embarking on such analyses:

Table 4: Essential Toolkit for Causal Inference Analysis

| Tool Category | Specific Methods/Approaches | Function/Purpose | Implementation Considerations |
|---|---|---|---|
| Causal Assumption Mapping | Directed Acyclic Graphs (DAGs) | Visualize assumed causal relationships and identify sources of bias | Use software like Dagitty; requires substantive domain knowledge |
| Study Design Approaches | Target Trial Emulation | Design observational analysis to emulate hypothetical RCT | Specify eligibility, treatment strategies, outcomes, follow-up before analysis |
| Confounding Control | Propensity Scores, G-Methods, Instrumental Variables | Address measured and unmeasured confounding | Selection depends on confounder types and data availability |
| Sensitivity Analysis | E-values, Rosenbaum bounds | Quantify robustness to unmeasured confounding | E-values provide intuitive metric for unmeasured confounding strength |
| Software Implementation | R packages (tmle, WeightIt, ivpack), Python (causalml) | Implement complex causal methods | Consider computational requirements and learning curve |
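
For the sensitivity-analysis row of the toolkit, the E-value for a risk ratio has a closed form, E = RR + sqrt(RR × (RR − 1)), with estimates below 1 inverted first. The helper functions below are a minimal sketch with hypothetical names; they apply to risk ratios only, and other effect measures require conversion before the formula is used.

```python
import math


def e_value(rr):
    """E-value for a point estimate expressed as a risk ratio."""
    if rr <= 0:
        raise ValueError("risk ratio must be positive")
    if rr < 1:
        rr = 1.0 / rr          # protective effects are inverted first
    return rr + math.sqrt(rr * (rr - 1.0))


def e_value_for_ci(lower, upper):
    """E-value for the confidence limit closest to the null (1 if the CI includes 1)."""
    if lower <= 1.0 <= upper:
        return 1.0
    limit = lower if lower > 1.0 else upper
    return e_value(limit)


# Hypothetical observed risk ratio of 2.0 with 95% CI 1.2 to 3.0
print("E-value (estimate):", round(e_value(2.0), 2))
print("E-value (CI limit):", round(e_value_for_ci(1.2, 3.0), 2))
```

The E-value gives the minimum strength of association, on the risk ratio scale, that an unmeasured confounder would need with both treatment and outcome to fully explain away the observed estimate.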