Network Meta-Analysis in Drug Development: A Comprehensive Guide to Methodology, Applications, and Best Practices

Abstract

Network meta-analysis (NMA) has emerged as a powerful statistical methodology that enables simultaneous comparison of multiple interventions by combining direct and indirect evidence across a network of studies. This comprehensive guide explores NMA's foundational concepts, methodological frameworks, and practical applications in drug development and clinical research. It addresses how NMA overcomes limitations of traditional pairwise meta-analysis by providing relative effectiveness rankings for all available treatments, even those never directly compared in head-to-head trials. The content covers critical assumptions of transitivity and consistency, advanced applications including model-based meta-analysis for dose-response modeling, and contemporary approaches for assessing evidence certainty using GRADE frameworks. Through real-world examples from recent therapeutic areas including ulcerative colitis, obesity, and migraine, this article provides researchers and drug development professionals with essential knowledge for designing, conducting, and interpreting NMAs to inform evidence-based decision-making in biomedical research.

Network Meta-Analysis Fundamentals: From Basic Concepts to Clinical Applications

Network meta-analysis (NMA), also known as mixed treatment comparison or multiple treatments meta-analysis, is a sophisticated statistical technique that enables the simultaneous comparison of multiple interventions in a single, integrated analysis [1] [2]. This methodology extends beyond conventional pairwise meta-analysis, which is limited to pooling direct evidence from studies comparing two interventions head-to-head [3]. NMA achieves this comprehensive synthesis by combining both direct evidence (from studies directly comparing interventions) and indirect evidence (estimated through a common comparator) within a connected network of randomized controlled trials (RCTs) [4] [1]. This approach has revolutionized evidence-based medicine by allowing researchers and healthcare decision-makers to compare the relative efficacy and safety of all available treatments for a condition, even when direct head-to-head trials are absent [3].

The fundamental principle underlying NMA is the ability to estimate the relative effect between two interventions that have never been directly compared in a trial by using common comparator interventions [4]. For instance, if intervention A has been compared to B in some trials, and A has also been compared to C in other trials, then the relative effect between B and C can be estimated indirectly through their common comparator A [4] [1]. This capacity to fill evidence gaps through indirect comparisons makes NMA particularly valuable for comparative effectiveness research, where multiple competing interventions exist but comprehensive direct evidence is lacking [1].

Fundamental Concepts and Advantages

Core Components of Network Meta-Analysis

Network meta-analysis relies on several key components that form its structural and analytical foundation. The network geometry refers to the arrangement of interventions and their connections through direct comparisons [1]. This geometry is typically visualized using a network diagram, where nodes represent interventions and lines (edges) represent direct comparisons between them [4] [1]. The size of nodes and thickness of edges can be proportional to the number of participants or studies, providing immediate visual cues about the amount of evidence available for each intervention and comparison [1] [5].
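As a minimal sketch, the Python below computes the proportions such a diagram might use. It tallies participants per intervention (node size) and studies per direct comparison (edge thickness) from a study list. The study records and the `geometry` helper are hypothetical and serve only as illustration.

```python
from collections import Counter, defaultdict
from itertools import combinations

# Hypothetical study records: (study id, treatments compared, participants per arm)
studies = [
    ("S1", ("Placebo", "DrugA"), (120, 118)),
    ("S2", ("Placebo", "DrugA"), (200, 205)),
    ("S3", ("Placebo", "DrugB"), (150, 149)),
    ("S4", ("DrugA", "DrugB"), (90, 92)),
]


def geometry(studies):
    """Return participants per treatment (node size) and studies per comparison (edge width)."""
    node_size = defaultdict(int)
    edge_width = Counter()
    for _, arms, sizes in studies:
        for treatment, n in zip(arms, sizes):
            node_size[treatment] += n
        # Every pair of arms in a study is a direct comparison (this also covers multi-arm trials)
        for a, b in combinations(sorted(arms), 2):
            edge_width[(a, b)] += 1
    return dict(node_size), dict(edge_width)


nodes, edges = geometry(studies)
print(nodes)
print(edges)
```

Sorting the arms before pairing means "Placebo vs DrugA" and "DrugA vs Placebo" count as the same edge.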

Another essential concept is the distinction between different types of evidence. Direct evidence comes from studies that physically randomize patients between two or more interventions [1]. Indirect evidence is derived mathematically by combining studies that share common comparators [4]. When both direct and indirect evidence exist for a particular comparison, NMA combines them to produce mixed evidence, which typically yields more precise effect estimates than either source alone [4] [3].

Key Advantages Over Pairwise Meta-Analysis

NMA offers several significant advantages over traditional pairwise meta-analysis. First, it provides a comprehensive framework for comparing all relevant interventions for a condition, thereby offering a complete picture of the therapeutic landscape [4] [1]. This is particularly valuable for clinical guideline development and health technology assessments, where decisions must consider the relative merits of all available options.

Second, NMA typically yields more precise estimates of treatment effects by incorporating both direct and indirect evidence [4]. Empirical studies have demonstrated that NMA often produces estimates with narrower confidence intervals compared to those derived solely from direct evidence [4]. This enhanced precision stems from the ability to borrow strength across the entire network of evidence.

Third, NMA enables the estimation of relative ranking among all interventions [4] [1]. From the network estimates, NMA can derive rank probabilities: the probability that each intervention is the most effective, second most effective, and so on. Summary metrics such as the surface under the cumulative ranking curve (SUCRA, Bayesian framework) or P-scores (its frequentist analogue) condense these probabilities into a single value between 0 and 1 for each intervention [6] [5] [3]. This ranking information, when interpreted cautiously, provides valuable guidance for clinical decision-making.
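SUCRA can be computed from a matrix of rank probabilities by averaging the cumulative probabilities over the first a − 1 ranks, where a is the number of treatments. Below is a minimal Python sketch. The rank probabilities for the three treatments are made up.

```python
def sucra(rank_probs):
    """SUCRA per treatment.

    rank_probs[t][r] is the probability that treatment t has rank r + 1
    (rank 1 = best); each row should sum to 1.
    """
    scores = {}
    for treatment, probs in rank_probs.items():
        a = len(probs)
        cumulative, total = 0.0, 0.0
        # Accumulate the cumulative ranking curve over ranks 1..a-1
        for p in probs[:-1]:
            cumulative += p
            total += cumulative
        scores[treatment] = total / (a - 1)
    return scores


# Hypothetical rank probabilities for three treatments
probs = {
    "A": [0.7, 0.2, 0.1],
    "B": [0.2, 0.5, 0.3],
    "C": [0.1, 0.3, 0.6],
}
scores = sucra(probs)
print(scores)
```

A SUCRA of 1 would mean a treatment is certain to rank first, and 0 would mean it is certain to rank last. Equivalently, SUCRA = (a − mean rank) / (a − 1).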

Table 1: Key Advantages of Network Meta-Analysis Over Pairwise Meta-Analysis

| Advantage | Description | Implication for Research |
|---|---|---|
| Comprehensive Comparison | Simultaneously compares all available interventions | Provides complete therapeutic landscape for clinical decision-making |
| Enhanced Precision | Combines direct and indirect evidence | Yields more precise effect estimates with narrower confidence intervals |
| Ranking Capability | Estimates hierarchy of interventions | Informs treatment guidelines and policy decisions |
| Evidence Gap Bridging | Provides estimates for comparisons without direct evidence | Guides future research priorities by identifying unmet evidence needs |

Methodological Framework and Assumptions

The Transitivity Assumption

The validity of NMA depends critically on the transitivity assumption, the foundational principle that enables valid indirect comparisons [4] [1]. Transitivity requires that the different sets of studies included in the analysis are similar, on average, in all important factors that may affect the relative effects, other than the interventions being compared [4] [1]. In practical terms, any participant in the network could, in principle, have been randomized to any of the interventions. It is as if all studies were parts of one hypothetical multi-arm trial that includes every intervention [1].

Transitivity can be violated when studies comparing different interventions systematically differ in their effect modifiers—clinical or methodological characteristics that influence treatment effects [4] [1]. For example, if all trials comparing interventions A and B enrolled patients with mild disease, while all trials comparing A and C enrolled patients with severe disease, and disease severity modifies treatment effects, then the indirect comparison between B and C would violate the transitivity assumption [1]. Common effect modifiers include patient characteristics (e.g., disease severity, age, comorbidities), intervention characteristics (e.g., dose, administration route), and study methodology (e.g., risk of bias, outcome definitions) [1].
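One practical starting point is to tabulate how a candidate effect modifier is distributed within each direct comparison. The Python sketch below assumes a single numeric modifier has been extracted per study: a hypothetical baseline severity score. The code only surfaces differences. Judging whether they are large enough to threaten transitivity remains a clinical decision.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical extraction: (direct comparison, mean baseline severity score in the study)
extracted = [
    ("A vs B", 12.0), ("A vs B", 13.5), ("A vs B", 11.8),
    ("A vs C", 24.1), ("A vs C", 22.7),
]


def modifier_by_comparison(rows):
    """Mean, minimum, and maximum of one candidate effect modifier per comparison."""
    groups = defaultdict(list)
    for comparison, value in rows:
        groups[comparison].append(value)
    return {c: (mean(v), min(v), max(v)) for c, v in groups.items()}


summary = modifier_by_comparison(extracted)
for comparison, (avg, low, high) in summary.items():
    print(f"{comparison}: mean {avg:.1f} (range {low}-{high})")
```

Here the severity ranges for A vs B and A vs C do not overlap, which mirrors the mild-versus-severe scenario described above.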

The Consistency Assumption

Consistency (sometimes called coherence) is the statistical manifestation of transitivity and refers to the agreement between direct and indirect evidence for the same comparison [4] [2]. When both direct and indirect evidence exist for a particular comparison, consistency means that these two sources of evidence provide similar estimates of the treatment effect [4]. Inconsistency occurs when different sources of information about a particular intervention comparison disagree [4].

Several statistical methods exist to evaluate consistency, including node-splitting (which separates direct and indirect evidence for each comparison and assesses their disagreement) and global tests (which assess inconsistency across the entire network) [7]. When significant inconsistency is detected, researchers should investigate potential causes, which may include clinical or methodological differences between studies, and consider using network meta-regression or other methods to account for these differences [3].
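For a single closed loop, a simple z-test can check whether a direct estimate disagrees with an independent indirect estimate of the same comparison. Node-splitting generalizes this idea to full networks. Below is a minimal Python sketch with hypothetical log odds ratios. It assumes the two sources are independent and on an additive scale. Such tests often have low power, so a non-significant result does not establish consistency.

```python
import math


def direct_vs_indirect(d_dir, se_dir, d_ind, se_ind):
    """Z-test for disagreement between a direct and an indirect estimate.

    Both estimates must refer to the same comparison, in the same direction,
    on an additive scale (e.g., log odds ratios).
    """
    diff = d_dir - d_ind
    se_diff = math.sqrt(se_dir**2 + se_ind**2)  # independent sources: variances add
    z = diff / se_diff
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p-value from the standard normal
    return diff, se_diff, z, p


diff, se_diff, z, p = direct_vs_indirect(-0.30, 0.10, 0.10, 0.20)
print(f"difference {diff:.2f} (SE {se_diff:.3f}), z = {z:.2f}, p = {p:.3f}")
```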

Network Connectivity

A fundamental requirement for NMA is that the network of interventions must be connected, meaning that there must be a path of direct comparisons linking all interventions in the network [4] [2]. If the network is disconnected (consisting of separate components), interventions in different components cannot be compared, either directly or indirectly. The presence of common comparators like placebo or standard care often facilitates connectivity by serving as hubs that link multiple interventions [1].
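Connectivity can be checked before any modelling with a breadth-first search over the direct comparisons. The Python sketch below returns the connected components; the treatment labels are hypothetical. More than one component means some interventions cannot be compared.

```python
from collections import defaultdict, deque


def components(treatments, comparisons):
    """Group treatments into connected components of the evidence network."""
    graph = defaultdict(set)
    for a, b in comparisons:
        graph[a].add(b)
        graph[b].add(a)
    seen, groups = set(), []
    for start in treatments:
        if start in seen:
            continue
        # Breadth-first search from a not-yet-visited treatment
        group, queue = {start}, deque([start])
        seen.add(start)
        while queue:
            node = queue.popleft()
            for nxt in graph[node]:
                if nxt not in seen:
                    seen.add(nxt)
                    group.add(nxt)
                    queue.append(nxt)
        groups.append(group)
    return groups


treatments = ["Placebo", "A", "B", "C", "D"]
comparisons = [("Placebo", "A"), ("Placebo", "B"), ("A", "B"), ("C", "D")]
groups = components(treatments, comparisons)
print("connected" if len(groups) == 1 else f"disconnected: {groups}")
```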

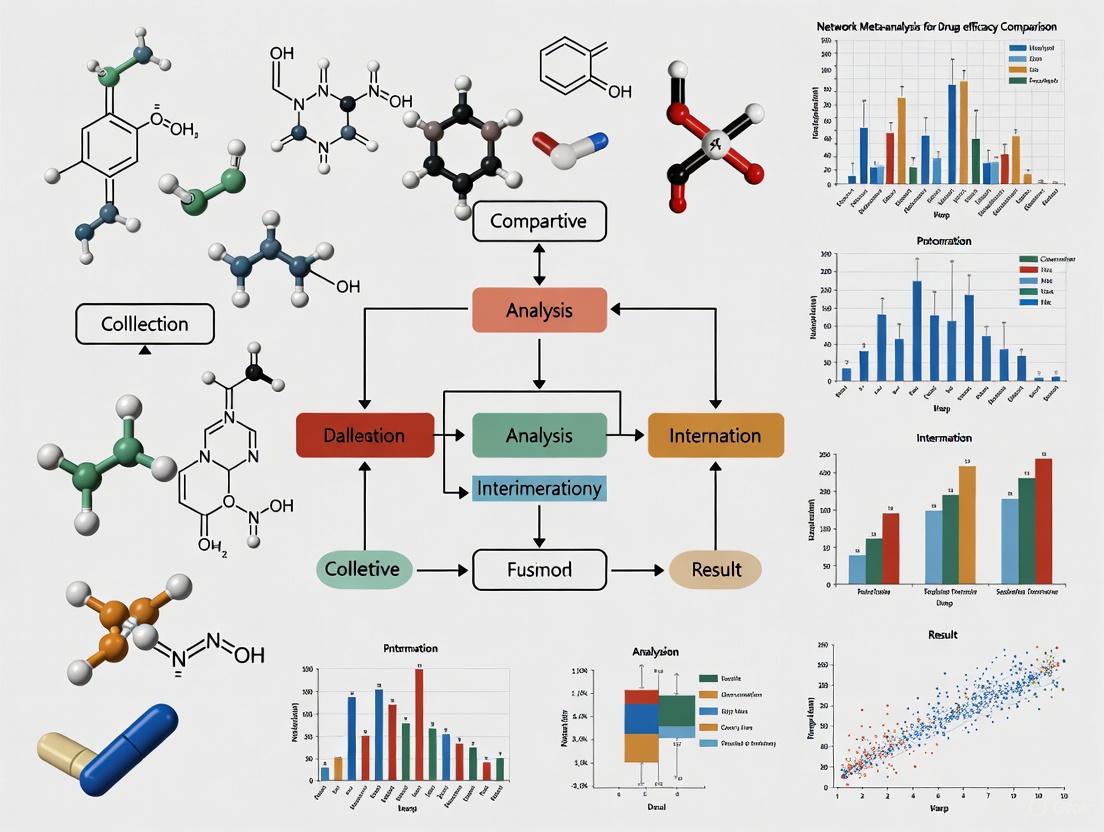

Diagram 1: NMA Evidence Synthesis Architecture. This diagram illustrates how NMA integrates different types of evidence to enable comprehensive treatment comparisons.

Designing and Conducting a Network Meta-Analysis

Developing the Research Question and Protocol

The first step in conducting an NMA is to define a clear research question using the PICO framework (Participants, Interventions, Comparators, Outcomes) [1]. The research question should be broad enough to benefit from the multiple comparisons offered by NMA but focused enough to ensure clinical relevance and feasibility [1]. A crucial decision at this stage is defining the treatment network. This means specifying which interventions to include and whether to examine them at the drug class, individual drug, or specific dose level [1]. These decisions should be guided by clinical relevance rather than statistical convenience.

As with any systematic review, developing and publishing a detailed protocol a priori is essential to minimize bias and data-driven decisions [3]. The protocol should specify the search strategy, study selection criteria, data extraction methods, risk of bias assessment tools, and planned statistical analyses, including approaches for assessing assumptions and exploring potential effect modifiers [1] [3].

Literature Search and Study Selection

The literature search for an NMA must be comprehensive to capture all relevant evidence for each intervention of interest [1]. This typically requires broader search strategies than conventional pairwise meta-analyses [1]. Multiple electronic databases should be searched, including MEDLINE, EMBASE, Cochrane Central Register of Controlled Trials, and trial registries [6] [3]. Because NMA methodology continues to evolve, current methodological guidance should also be consulted when planning the analysis.

During study selection, standard systematic review procedures apply, but with additional consideration for ensuring network connectivity [2]. Researchers must verify that all included interventions can be linked through direct or indirect comparisons. The selection process should be documented using a PRISMA flow diagram, adapted if necessary to illustrate the network structure [5].

Data Collection and Risk of Bias Assessment

Data extraction for NMA requires special attention to potential effect modifiers that might violate the transitivity assumption [1]. In addition to standard study characteristics and outcome data, researchers should extract detailed information about patient characteristics, intervention details, and study methodology that might modify treatment effects [1]. This information is crucial for assessing transitivity and, if necessary, conducting meta-regression or subgroup analyses to account for important differences.

Risk of bias assessment should be performed using validated tools such as the Cochrane Risk of Bias tool [6] [5]. In NMA, the distribution of risk of bias across treatment comparisons should be examined, as imbalances might affect transitivity [1]. Recent methodological developments also allow risk of bias assessments to be incorporated into judgments about confidence in NMA results through frameworks like CINeMA (Confidence in Network Meta-Analysis) [7].

Table 2: Essential Methodological Steps in Network Meta-Analysis

| Step | Key Considerations | Tools and Methods |
|---|---|---|
| Protocol Development | Define treatment network, analysis plan | PICO framework, PRISMA-P guidelines |
| Literature Search | Ensure comprehensive coverage of all interventions | Multiple databases, clinical trial registries, no language restrictions |
| Study Selection | Ensure network connectivity | PRISMA flow diagram, explicit inclusion/exclusion criteria |
| Data Extraction | Capture potential effect modifiers | Standardized forms, pilot testing, duplicate extraction |
| Risk of Bias Assessment | Evaluate distribution of bias across comparisons | Cochrane RoB tool, ROB-2, CINeMA framework |
| Transitivity Assessment | Evaluate similarity across comparisons | Comparison of study characteristics, clinical reasoning |

Statistical Analysis and Interpretation

Analytical Approaches

NMA can be conducted within either frequentist or Bayesian statistical frameworks [3]. The Bayesian framework has been historically dominant due to its flexibility in modeling complex evidence structures and natural approach to probability statements about treatment rankings [3]. However, recent advances in frequentist methods have largely bridged this gap, and both frameworks now produce similar results when implemented with state-of-the-art techniques [3].

A key decision in NMA modeling is whether to use common effect (also called fixed effect) or random effects models [3]. Common effect models assume that all studies are estimating the same underlying treatment effect, while random effects models allow for heterogeneity in treatment effects across studies [7] [3]. Random effects models are generally preferred as they account for between-study heterogeneity, but they require estimation of the between-study variance (τ²) and may be less stable when studies are few [3].
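The Python sketch below makes the distinction concrete for a single pairwise comparison. It pools hypothetical log odds ratios twice: under a common-effect model, and under a random-effects model that uses the DerSimonian-Laird moment estimator of τ². DerSimonian-Laird is only one of several τ² estimators; restricted maximum likelihood is a common alternative. NMA software usually estimates τ² jointly across the network, often assuming a single value shared by all comparisons.

```python
import math


def pool(estimates, variances):
    """Common-effect and DerSimonian-Laird random-effects estimates for one comparison."""
    w = [1 / v for v in variances]
    common = sum(wi * yi for wi, yi in zip(w, estimates)) / sum(w)
    # Cochran's Q: weighted dispersion of study estimates around the common-effect estimate
    q = sum(wi * (yi - common) ** 2 for wi, yi in zip(w, estimates))
    c = sum(w) - sum(wi**2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - (len(estimates) - 1)) / c)  # moment estimator, truncated at zero
    # Random-effects weights add tau^2 to each study's within-study variance
    w_re = [1 / (v + tau2) for v in variances]
    rand = sum(wi * yi for wi, yi in zip(w_re, estimates)) / sum(w_re)
    return {
        "common": (common, math.sqrt(1 / sum(w))),
        "random": (rand, math.sqrt(1 / sum(w_re))),
        "tau2": tau2,
    }


# Hypothetical log odds ratios and within-study variances from three trials
res = pool([-0.6, 0.0, -0.3], [0.04, 0.04, 0.04])
print(res)
```

With these inputs, both models give the same point estimate. The random-effects standard error is larger, because τ² is added to every study's variance.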

Evaluating Heterogeneity and Inconsistency

Assessment of heterogeneity (variability in treatment effects within direct comparisons) and inconsistency (disagreement between direct and indirect evidence) is crucial for interpreting NMA results [3]. Heterogeneity can be evaluated using Cochran's Q and I² statistics for each direct comparison, similar to pairwise meta-analysis [3]. Global heterogeneity across the network can be assessed by estimating a common or comparison-specific between-study variance [3].
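For one direct comparison, Cochran's Q and I² can be computed from the study estimates and their variances. The Python sketch below uses hypothetical log odds ratios and takes I² as max(0, (Q − df) / Q).

```python
def heterogeneity(estimates, variances):
    """Cochran's Q, its degrees of freedom, and I^2 for one direct comparison."""
    w = [1 / v for v in variances]  # inverse-variance weights
    pooled = sum(wi * yi for wi, yi in zip(w, estimates)) / sum(w)
    q = sum(wi * (yi - pooled) ** 2 for wi, yi in zip(w, estimates))
    df = len(estimates) - 1
    i2 = max(0.0, (q - df) / q) if q > 0 else 0.0
    return q, df, i2


q, df, i2 = heterogeneity([0.1, 0.4, 0.7], [0.02, 0.02, 0.02])
print(f"Q = {q:.2f} on {df} df, I^2 = {100 * i2:.0f}%")
```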

Several approaches are available for evaluating inconsistency [7] [3]. The design-by-treatment interaction model provides a global test for inconsistency across the entire network [3]. Node-splitting methods evaluate inconsistency for each specific comparison by separately estimating direct, indirect, and combined evidence [7]. When significant inconsistency is detected, researchers should investigate potential causes through subgroup analysis or meta-regression [3].

Treatment Ranking and Interpretation

Treatment ranking is a distinctive feature of NMA that provides estimates of the relative performance of all interventions [4] [3]. However, ranking should be interpreted with caution, as it is sensitive to small differences in effect estimates and uncertainty is often substantial [3]. Common ranking metrics include P-scores (frequentist framework) and SUCRA values (Bayesian framework). Both summarize each treatment's rank distribution as a single value between 0 and 1, where values closer to 1 indicate a treatment more likely to rank among the best options [6] [5] [3].
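P-scores can be computed without resampling. For each pair of treatments, a normal approximation gives a one-sided, probability-like measure of certainty that one treatment is better than the other. A treatment's P-score is the average of these measures over all competitors. The Python sketch below assumes hypothetical log odds ratios versus placebo for an adverse outcome, where lower is better. It also assumes a hypothetical matrix of standard errors for each pairwise difference; in practice these come from the fitted NMA.

```python
import math


def norm_cdf(x):
    """Standard normal cumulative distribution function."""
    return 0.5 * math.erfc(-x / math.sqrt(2))


def p_scores(names, effects, se_diff, lower_is_better=True):
    """P-score per treatment from network estimates.

    effects[i]: estimate for treatment i versus a common reference.
    se_diff[i][j]: standard error of effects[i] - effects[j].
    """
    sign = -1 if lower_is_better else 1
    n = len(names)
    scores = {}
    for i in range(n):
        # Certainty that treatment i beats each competitor j
        certainty = [
            norm_cdf(sign * (effects[i] - effects[j]) / se_diff[i][j])
            for j in range(n)
            if j != i
        ]
        scores[names[i]] = sum(certainty) / (n - 1)
    return scores


names = ["Placebo", "A", "B"]
effects = [0.0, -0.5, -0.2]
se_diff = [[0.0, 0.15, 0.15], [0.15, 0.0, 0.20], [0.15, 0.20, 0.0]]
scores = p_scores(names, effects, se_diff)
print({t: round(s, 3) for t, s in scores.items()})
```

Because each pairwise comparison contributes complementary probabilities to its two treatments, the P-scores always sum to n/2.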

Diagram 2: NMA Methodology Workflow. This flowchart outlines the key stages in conducting a network meta-analysis, from question formulation to reporting.

Applications in Drug Efficacy Research

Case Study: Biological Therapies for Ulcerative Colitis

A recent NMA examining biological therapies and small molecules for ulcerative colitis maintenance therapy demonstrates the practical application of this methodology [6]. This analysis included 28 RCTs with 10,339 patients and compared multiple interventions including upadacitinib, vedolizumab, guselkumab, etrasimod, and infliximab [6]. The researchers employed sophisticated methods to account for differences in trial design, specifically distinguishing between studies that re-randomized responders after an open-label induction phase and those that treated patients through without re-randomization [6].

The analysis revealed that upadacitinib 30 mg once daily ranked first for clinical remission in re-randomized studies (relative risk of failure to achieve clinical remission = 0.52; 95% CI 0.44–0.61, p-score 0.99), while etrasimod 2 mg once daily ranked first for clinical remission in treat-through studies (RR = 0.73; 95% CI 0.64–0.83, p-score 0.88) [6]. These findings illustrate how NMA can provide nuanced insights into relative treatment efficacy across different clinical trial designs and patient populations.

Case Study: Medication-Overuse Headache Management

Another contemporary NMA evaluated different strategies for managing medication-overuse headache, comparing withdrawal strategies, preventive medications, educational interventions, and combination approaches [5]. This analysis of 16 RCTs with 3,000 participants found that combination therapies were most effective, with abrupt withdrawal combined with oral prevention and greater occipital nerve block showing the greatest reduction in monthly headache days (mean difference -10.6 days; 95% CI: -15.03 to -6.16) [5]. The study employed P-scores to rank treatments and provided both absolute effect estimates and relative rankings to inform clinical decision-making [5].

Table 3: Essential Resources for Network Meta-Analysis

| Resource Category | Specific Tools/Software | Primary Function |
|---|---|---|
| Statistical Software | R (netmeta, gemtc packages) [5] [3] | Frequentist and Bayesian NMA implementation |
| Bayesian Modeling | WinBUGS/OpenBUGS [3] | Flexible Bayesian modeling for complex networks |
| Risk of Bias Assessment | Cochrane RoB tool, ROB-2 [6] [5] | Methodological quality assessment of included studies |
| Evidence Grading | CINeMA framework [7] | Evaluating confidence in NMA results |
| Network Visualization | Network graphs [4] [1] | Visual representation of evidence structure |
| Inconsistency Detection | Node-splitting methods [7] | Evaluating disagreement between direct and indirect evidence |

Advanced Methodological Considerations

Study Importance and Contribution

In pairwise meta-analysis, each study's contribution to the pooled estimate is determined by its weight, typically based on the inverse variance of its estimate [7]. In NMA, defining study contributions is more complex because studies contribute not only to their direct comparisons but also to indirect estimates throughout the network [7]. Recent methodological work has developed approaches to quantify study importance, defined as the reduction in variance of an NMA estimate when a particular study is added to the network [7]. Unlike pairwise weights, these importance measures do not necessarily sum to 100% across studies, but they provide valuable insights about the influence of individual studies on NMA estimates [7].
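The non-additivity shows up even in the simplest possible network. The Python sketch below assumes a triangle A-B-C with exactly one hypothetical study per comparison and a common-effect model. It computes the variance of the B versus C network estimate with and without each study. Importance is expressed as the relative variance reduction, that is, as a proportion of the variance without the study; this is one possible scaling. In this example the three importances sum to more than 1.

```python
def triangle_variance(v_ab, v_ac, v_bc):
    """Variance of the common-effect B vs C estimate in a triangle A-B-C.

    Each argument is the variance of that comparison's single study,
    or None if the study is left out.
    """
    precision = 0.0
    if v_bc is not None:
        precision += 1 / v_bc  # direct B vs C evidence
    if v_ab is not None and v_ac is not None:
        precision += 1 / (v_ab + v_ac)  # indirect evidence through A
    return 1 / precision


def importance(v_ab, v_ac, v_bc):
    """Relative variance reduction in the B vs C estimate contributed by each study."""
    full = triangle_variance(v_ab, v_ac, v_bc)
    without = {
        "AB study": triangle_variance(None, v_ac, v_bc),
        "AC study": triangle_variance(v_ab, None, v_bc),
        "BC study": triangle_variance(v_ab, v_ac, None),
    }
    return {study: (v - full) / v for study, v in without.items()}


imp = importance(0.04, 0.04, 0.08)
print(imp, sum(imp.values()))
```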

Network Meta-Regression and Subgroup Analysis

When transitivity concerns exist due to systematic differences across comparisons, network meta-regression can be used to adjust for potential effect modifiers [3]. This advanced technique incorporates study-level covariates into the NMA model to examine whether treatment effects vary according to specific study or patient characteristics [3]. Network meta-regression requires careful implementation and interpretation, as ecological bias may occur when within-study and across-study relationships differ.

Individual Participant Data NMA

While most NMAs use aggregate study-level data, individual participant data (IPD) NMA offers significant advantages, including improved ability to investigate transitivity, examine subgroup effects, and handle missing data [3]. IPD NMA can be conducted using one-stage or two-stage approaches, with the one-stage approach generally preferred for its ability to properly account for all sources of correlation and uncertainty [3]. Although logistically challenging, IPD NMA represents the gold standard for evidence synthesis when feasible.

Network meta-analysis represents a powerful extension of conventional pairwise meta-analysis that enables comprehensive comparison of multiple interventions by combining direct and indirect evidence. Its application to drug efficacy research has transformed our ability to assess comparative effectiveness and make informed treatment decisions when direct evidence is limited or absent. The validity of NMA depends on satisfaction of the transitivity and consistency assumptions, which require careful evaluation through both clinical reasoning and statistical testing. As methodological advancements continue to enhance the sophistication and reliability of NMA, its role in evidence-based medicine and health technology assessment will likely expand, providing increasingly robust guidance for clinical practice and policy decisions.

Network meta-analysis (NMA) is an advanced statistical technique that has matured as a key methodology for evidence-based practice, positioned at the top of the evidence hierarchy alongside systematic reviews and pairwise meta-analyses [8]. As an extension of traditional pairwise meta-analysis, NMA enables the simultaneous comparison of multiple interventions for a specific condition, even when some have not been directly compared in head-to-head studies [8] [9]. This approach is particularly valuable in drug efficacy research, where clinicians, patients, and policy-makers often need to choose among numerous available treatments, few of which have been directly compared in clinical trials [8] [10]. The core components that enable these sophisticated comparisons are direct evidence, indirect evidence, and the network geometry that connects them, all of which must be understood to conduct, interpret, and appraise NMAs validly and reliably.

Core Component 1: Direct Evidence

Definition and Role

Direct evidence refers to evidence obtained from studies that directly compare the interventions of interest within randomized controlled trials (RCTs) [8] [4]. In traditional pairwise meta-analysis, this direct evidence is statistically pooled to provide a summary effect estimate for one intervention versus another [8]. These head-to-head comparisons form the foundational building blocks of any evidence synthesis, preserving the benefits of within-trial randomization and providing the most straightforward assessment of relative treatment effects [4].

Methodological Considerations

The process for synthesizing direct evidence in NMA follows the same rigorous methodology required for conventional pairwise meta-analysis [9]. This includes conducting a systematic literature search, assessing the risk of bias in eligible trials, and statistically pooling reported pairwise comparisons for all outcomes of interest [9]. The strength of this direct evidence depends on factors such as the number of available studies, sample sizes, risk of bias, and consistency of effects across studies [4].
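The Python sketch below shows the pooling step for one direct comparison with a binary outcome. It computes each trial's log odds ratio and Woolf variance from hypothetical 2×2 counts, then combines them with inverse-variance (common-effect) weights. The 0.5 continuity correction for empty cells is one common convention, not the only one.

```python
import math


def log_odds_ratio(events_t, n_t, events_c, n_c):
    """Log odds ratio (treatment vs control) and its Woolf variance from 2x2 counts."""
    a, b = events_t, n_t - events_t
    c, d = events_c, n_c - events_c
    if 0 in (a, b, c, d):
        # Add 0.5 to every cell when any cell is empty
        a, b, c, d = a + 0.5, b + 0.5, c + 0.5, d + 0.5
    return math.log((a * d) / (b * c)), 1 / a + 1 / b + 1 / c + 1 / d


def fixed_effect(trials):
    """Inverse-variance pooled log odds ratio and its standard error."""
    ests = [log_odds_ratio(*t) for t in trials]
    weights = [1 / v for _, v in ests]
    pooled = sum(w * y for w, (y, _) in zip(weights, ests)) / sum(weights)
    return pooled, math.sqrt(1 / sum(weights))


# Hypothetical trials: (events_treatment, n_treatment, events_control, n_control)
trials = [(20, 100, 30, 100), (15, 80, 25, 85)]
est, se = fixed_effect(trials)
print(f"OR {math.exp(est):.2f} (95% CI {math.exp(est - 1.96 * se):.2f} to {math.exp(est + 1.96 * se):.2f})")
```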

Core Component 2: Indirect Evidence

Definition and Conceptual Basis

Indirect evidence allows for the estimation of relative effects between two interventions that have not been compared directly in primary studies [4]. This is achieved by leveraging a common comparator through which the interventions can be connected mathematically [8] [4]. For example, if intervention A has been compared to B in trials, and A has also been compared to C in other trials, then an indirect estimate for B versus C can be derived through their common comparator A [4].

The mathematical basis for simple indirect comparisons was first formalized by Bucher et al. (1997), using the odds ratio as the treatment effect measure [8]. Let Effect_XY denote the summary effect of Y relative to X, on the log scale for ratio measures such as the odds ratio. The indirect estimate for the comparison between B and C is then calculated as the difference between the summary statistics of the direct A versus C and A versus B meta-analyses [4]:

Effect_BC = Effect_AC - Effect_AB

The variance of this indirect estimate accounts for the uncertainty in both direct estimates:

Variance_BC = Variance_AB + Variance_AC

This approach preserves within-trial randomization and avoids the methodological pitfalls of pooling single arms across studies, which would discard the benefits of randomization [4].
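The two formulas translate directly into code. The Python sketch below uses hypothetical summary odds ratios: 0.60 for B versus A and 0.75 for C versus A. It assumes their standard errors are given on the log scale and uses a normal-approximation 95% interval.

```python
import math


def bucher_indirect(d_ab, se_ab, d_ac, se_ac):
    """Adjusted indirect comparison of C versus B through common comparator A.

    d_ab: summary effect of B vs A; d_ac: summary effect of C vs A,
    both on an additive scale (e.g., log odds ratios).
    """
    d_bc = d_ac - d_ab  # Effect_BC = Effect_AC - Effect_AB
    se_bc = math.sqrt(se_ab**2 + se_ac**2)  # Variance_BC = Variance_AB + Variance_AC
    return d_bc, se_bc, (d_bc - 1.96 * se_bc, d_bc + 1.96 * se_bc)


d_bc, se_bc, (lo, hi) = bucher_indirect(math.log(0.60), 0.12, math.log(0.75), 0.15)
print(f"Indirect OR (C vs B): {math.exp(d_bc):.2f} [{math.exp(lo):.2f}, {math.exp(hi):.2f}]")
```

The indirect interval is wider than either direct interval, because both sources of uncertainty add up.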

Evolution of Indirect Methods

The initial adjusted indirect treatment comparison method proposed by Bucher et al. was limited to simple three-treatment scenarios [8]. Subsequent developments by Lumley introduced network meta-analysis proper, allowing indirect comparisons through multiple common comparators and providing mechanisms to measure incoherence (inconsistency) between different sources of evidence [8]. The most sophisticated current methods, developed by Lu and Ades, enable mixed treatment comparisons that simultaneously incorporate both direct and indirect evidence for all competing interventions in a single analysis, typically implemented in either frequentist or Bayesian frameworks [8].

Table 1: Evolution of Indirect Meta-Analytical Methods

| Method | Key Developer(s) | Capabilities | Limitations |
|---|---|---|---|
| Adjusted Indirect Treatment Comparison | Bucher et al. (1997) [8] | Indirect comparison of three treatments via common comparator | Limited to simple trio of interventions (two-arm trials) |
| Network Meta-Analysis | Lumley [8] | Multiple common comparators; Incoherence measurement | Handling of complex networks limited |
| Mixed Treatment Comparisons | Lu and Ades [8] | Simultaneous analysis of all direct and indirect evidence; Treatment ranking | Increased model complexity; Computational intensity |

Core Component 3: Network Geometry

Network Structure and Visualization

Network geometry refers to the arrangement and connections between interventions within a network, typically visualized through network diagrams (also called network graphs) [8] [4]. These diagrams consist of nodes (usually represented as circles) representing the interventions under evaluation, and lines connecting these nodes represent the available direct comparisons between pairs of interventions [8] [4]. The structure of this geometry fundamentally determines which indirect comparisons are possible and how much uncertainty exists in the resulting estimates.

Diagram 1: Example drug comparison network geometry

Interpreting Network Geometry

The geometry of a network provides important visual cues about the evidence base. Network diagrams can be enhanced to show the volume of evidence by varying the size of nodes according to the number of patients or studies for each intervention and thickening the lines between nodes according to the number of studies making that direct comparison [9]. This visual representation helps identify interventions with substantial direct evidence versus those that are primarily evaluated through indirect comparisons.

Networks can contain different structural elements:

- Closed loops: Occur when the direct comparisons among a subset of interventions form a closed path (for example, trials of A vs B, B vs C, and A vs C exist), so that both direct and indirect evidence inform those comparisons [8]

- Open/unclosed loops: Represent incomplete connections in the network (loose ends) where only indirect evidence is available for some comparisons [8]

- Star-shaped networks: Feature a single common comparator connected to multiple interventions but limited direct connections between the active interventions [4]
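Whether a direct comparison sits in a closed loop, and so also receives indirect evidence, can be checked mechanically. An edge lies in a loop exactly when its two interventions remain connected after that edge is removed. The Python sketch below applies this check to a hypothetical list of distinct comparisons.

```python
from collections import defaultdict, deque


def connected_without(edges, skip, start, goal):
    """True if start can reach goal using every edge except `skip`."""
    graph = defaultdict(set)
    for edge in edges:
        if edge != skip:
            a, b = edge
            graph[a].add(b)
            graph[b].add(a)
    seen, queue = {start}, deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            return True
        for nxt in graph[node] - seen:
            seen.add(nxt)
            queue.append(nxt)
    return False


def edges_in_closed_loops(edges):
    """Direct comparisons that are also informed by indirect evidence."""
    return [edge for edge in edges if connected_without(edges, edge, *edge)]


edges = [("P", "A"), ("P", "B"), ("A", "B"), ("P", "C")]
print(edges_in_closed_loops(edges))  # the P-C edge is a loose end
```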

Table 2: Network Geometry Characteristics and Interpretations

| Geometric Feature | Description | Implications for NMA |
|---|---|---|
| Node Size | Usually proportional to number of patients or studies for each intervention [9] | Larger nodes indicate more evidence involving that intervention |
| Line Width/Thickness | Proportional to number of studies available for each direct comparison [9] | Thicker lines indicate more direct evidence for that comparison |
| Closed Loops | Direct comparisons forming a closed path (triangle, square, etc.) [8] | Enables assessment of coherence (consistency) between direct and indirect evidence |
| Common Comparator | Intervention serving as connection point for multiple other interventions [8] | Critical for forming indirect comparisons; Placebo/standard care often serves this role |

Integrating Components: The Network Meta-Analysis Process

From Direct and Indirect Evidence to Network Estimates

The network estimate in NMA represents the pooled result of both direct and indirect evidence for a given comparison when both are available, or only the indirect evidence if no direct evidence exists [9]. When both direct and indirect evidence are available for a comparison, they are statistically combined to produce a more precise estimate than either source alone could provide [4]. Empirical studies have suggested that NMA typically yields more precise estimates of intervention effects compared with single direct or indirect estimates [4].
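For a single comparison with independent direct and indirect estimates, the combination can be sketched as an inverse-variance weighted average. The Python below uses hypothetical log odds ratios. This simplification is only appropriate when the two sources are coherent; a full NMA estimates all comparisons jointly.

```python
import math


def network_estimate(d_dir, se_dir, d_ind, se_ind):
    """Inverse-variance combination of direct and indirect estimates of one comparison."""
    w_dir, w_ind = 1 / se_dir**2, 1 / se_ind**2
    d_net = (w_dir * d_dir + w_ind * d_ind) / (w_dir + w_ind)
    se_net = math.sqrt(1 / (w_dir + w_ind))  # smaller than either input standard error
    return d_net, se_net


d_net, se_net = network_estimate(-0.40, 0.20, -0.20, 0.30)
print(f"network estimate {d_net:.3f} (SE {se_net:.3f})")
```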

The underlying assumption enabling this integration is that the different sets of randomized trials are similar, on average, in all important factors other than the intervention comparison being made – an assumption termed transitivity [4]. The statistical manifestation of this assumption is coherence (sometimes called consistency), which occurs when the direct and indirect estimates for a particular intervention comparison agree with one another [9] [4].

Diagram 2: NMA evidence integration process

Critical Assumptions: Transitivity and Coherence

The validity of any NMA depends on two fundamental assumptions: transitivity and coherence. Transitivity refers to similarity in study characteristics across comparisons, which gives reasonable assurance that factors other than the interventions being compared are unlikely to modify treatment effects. This is what allows indirect comparisons to be made [9] [4]. In practical terms, this means that the different sets of studies included in the network should fundamentally address the same research question in the same population [9]. Potential violations of transitivity occur when studies comparing different interventions systematically differ in effect modifiers, that is, characteristics associated with the effect of an intervention, such as patient demographics, disease severity, or outcome definitions [4].

Coherence (or consistency) represents the statistical manifestation of transitivity and exists when the direct and indirect estimates for a comparison are consistent with one another [9] [4]. Incoherence occurs when discrepancies between direct and indirect estimates are present, and transitivity violations are a common cause of such incoherence [9]. A meta-epidemiological study of 112 published NMAs found inconsistent direct and indirect treatment effects in 14% of comparisons made [9].

Experimental Design and Methodological Protocols

Systematic Review Foundation

The conduct of a valid NMA requires the same rigorous systematic review processes as conventional pairwise meta-analysis [9]. This includes clearly defined, explicit eligibility criteria; a reproducible, systematic search that confidently retrieves all relevant literature; systematic screening processes to identify and select all relevant studies; and assessment of risk of bias for each included study [9]. These processes minimize the risk of selection bias by considering all evidence relevant to a clinical question [9].

Table 3: Essential Methodological Components for Valid NMA

| Component | Purpose | Implementation Considerations |
|---|---|---|
| Systematic Search | Identify all relevant evidence | Multiple databases; No language restrictions; Grey literature search [9] |
| Risk of Bias Assessment | Evaluate internal validity of included studies | Cochrane Risk of Bias tool; Consider impact on transitivity [9] |
| Transitivity Assessment | Evaluate similarity of studies across comparisons | Compare distribution of effect modifiers (population, intervention, outcome characteristics) [4] |
| Coherence Evaluation | Check statistical consistency between direct and indirect evidence | Node-splitting; Design-by-treatment interaction model [4] |
| Certainty of Evidence Assessment | Evaluate confidence in network estimates | GRADE approach for NMA, evaluating risk of bias, inconsistency, indirectness, imprecision, publication bias, and incoherence [9] |

Statistical Analysis Frameworks

NMA can be implemented using either frequentist or Bayesian statistical frameworks, with models available for the common outcome data types (for example binary, continuous, and count data) and for a range of pooled effect measures [8]. Bayesian approaches have been particularly popular for NMA as they naturally accommodate complex random-effects models, provide probabilistic interpretation of results, and facilitate treatment ranking [8]. The statistical analysis typically involves:

- Model Specification: Choosing fixed-effect or random-effects models based on heterogeneity considerations

- Effect Estimation: Calculating relative effects for all possible comparisons in the network

- Ranking Procedures: Estimating probability of each treatment being best, second best, etc. for a given outcome

- Incoherence Assessment: Using statistical tests to evaluate disagreement between direct and indirect evidence
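The model-specification step can be illustrated for a single pairwise comparison. The Python sketch below contrasts inverse-variance fixed-effect pooling with a random-effects model whose between-study variance τ² is estimated by the DerSimonian-Laird method of moments, which is one common estimator among several. It is an illustration only: NMA software fits the whole network jointly rather than one comparison at a time, and the inputs are hypothetical log relative risks.

```python
import math


def pairwise_meta(effects, ses):
    """Fixed-effect and DerSimonian-Laird random-effects pooling.

    effects: study-level estimates on an additive scale (e.g. log RR)
    ses: their standard errors
    """
    w = [1.0 / s ** 2 for s in ses]
    sum_w = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w
    # Cochran's Q: weighted dispersion around the fixed-effect estimate
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    df = len(effects) - 1
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0
    i2 = max(0.0, (q - df) / q) if q > 0 else 0.0
    # Random-effects weights add the between-study variance tau^2
    w_re = [1.0 / (s ** 2 + tau2) for s in ses]
    random_est = sum(wi * yi for wi, yi in zip(w_re, effects)) / sum(w_re)
    return {
        "fixed": fixed, "se_fixed": math.sqrt(1.0 / sum_w),
        "random": random_est, "se_random": math.sqrt(1.0 / sum(w_re)),
        "tau2": tau2, "Q": q, "I2": i2,
    }


# Hypothetical log relative risks from three trials of the same comparison
res = pairwise_meta([-0.5, -0.2, -0.8], [0.15, 0.20, 0.25])
print({k: round(v, 3) for k, v in res.items()})
```

When τ² is estimated above zero, the random-effects standard error is wider than the fixed-effect one, which is how heterogeneity is carried into the uncertainty of the pooled estimate.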

Methodological Tools for NMA

Table 4: Essential Methodological Tools for Network Meta-Analysis

| Tool Category | Specific Solutions | Function and Application |
|---|---|---|
| Statistical Software | R (netmeta, gemtc packages) [8] | Frequentist and Bayesian NMA implementation; Network geometry visualization |
| Bayesian Analysis Platforms | WinBUGS/OpenBUGS, JAGS [8] | Markov chain Monte Carlo (MCMC) simulation for complex Bayesian NMA models |
| Quality Assessment Tools | Cochrane Risk of Bias tool [9] | Assess internal validity of randomized trials included in the network |
| Certainty Assessment Framework | GRADE for NMA [9] [11] | Systematically rate confidence in each network comparison estimate |
| Protocol Registration | PROSPERO registry [9] | Pre-specify NMA methods, outcomes, and analysis plans to minimize bias |

Application in Drug Efficacy Research

In drug efficacy research, NMA provides invaluable methodology for comparing multiple competing interventions simultaneously, addressing the common scenario where many treatments are available but few have been studied in head-to-head trials [8] [10]. This methodology allows for the estimation of efficacy and safety metrics for all possible comparisons in the same model, providing a comprehensive overview of the entire therapeutic landscape for a clinical condition [8]. National agencies for health technology assessment and drug regulators increasingly use NMA methods to inform drug approval and reimbursement decisions [8].

The ability to rank order interventions through metrics such as SUCRA (Surface Under the Cumulative Ranking Curve) provides additional decision support, though these rankings must be interpreted cautiously considering both the magnitude of effect and the certainty of evidence [9]. More modern minimally or partially contextualized approaches developed by the GRADE working group provide improved methods for categorizing interventions from best to worst while considering both effect estimates and evidence certainty [11].

The core components of direct evidence, indirect evidence, and network geometry form the foundation of valid and informative network meta-analysis in drug efficacy research. Direct evidence from head-to-head trials provides the building blocks, while indirect evidence extends these comparisons through connected networks. The geometry of these networks determines which comparisons are possible and with what precision. The integration of these components through NMA methodology allows clinicians, researchers, and policy-makers to make informed decisions between multiple competing interventions, even when direct comparative evidence is limited or absent. As these methods continue to evolve and mature, they represent an increasingly essential tool for evidence-based drug development and evaluation.

Network meta-analysis (NMA) is an advanced statistical methodology that enables the simultaneous comparison of multiple interventions for the same condition by synthesizing both direct and indirect evidence. Unlike traditional pairwise meta-analyses that can only compare two treatments at a time, NMA incorporates evidence from a network of randomized controlled trials (RCTs), even when some treatments have never been directly compared in head-to-head studies [10]. This approach is particularly valuable in drug efficacy research where numerous treatment options may exist without comprehensive direct comparative evidence.

The methodology has undergone substantial development over recent decades, with implementations available in both frequentist and Bayesian statistical frameworks [12]. NMA provides three distinct advantages that make it indispensable for comparative effectiveness research: the ability to rank treatments, enhanced precision of effect estimates, and the capacity to compare interventions that lack direct head-to-head evidence. These advantages position NMA as a powerful tool for clinicians, researchers, and healthcare decision-makers who must determine optimal treatment strategies from complex bodies of evidence [10].

Treatment Ranking in Network Meta-Analysis

Ranking Methodologies and Metrics

Treatment ranking is a distinctive feature of NMA that allows researchers to generate hierarchies of interventions based on their relative efficacy or safety. Within a Bayesian framework, the probability that a treatment has a specific rank can be derived directly from the posterior distributions of all treatments in the network [12]. Several statistical metrics have been developed to summarize these rank distributions:

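SUCRA (Surface Under the Cumulative Ranking Curve) values summarize a treatment's rank distribution on a scale from 0% (the treatment is certain to be the worst) to 100% (the treatment is certain to be the best), and can be interpreted as the average proportion of competing treatments that a given treatment outperforms [12] [13]. For a treatment \( k \), the cumulative probability that it ranks among the \( j \) best is given by: \[ CP(k,j) = \sum_{i=1}^{j} P(R(k) = i) \] where \( R(k) \) represents the rank of treatment \( k \) [13]. SUCRA averages these cumulative probabilities over the first \( T - 1 \) ranks, where \( T \) is the number of treatments: \[ SUCRA(k) = \frac{1}{T-1} \sum_{j=1}^{T-1} CP(k,j) \]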

P-scores serve as a frequentist analogue to SUCRA and can be calculated without resampling methods. These scores are based solely on point estimates and standard errors from frequentist NMA under normality assumptions and can be calculated as means of one-sided p-values [12]. They measure the mean extent of certainty that a treatment is better than competing treatments.

Probability of Being Best represents the probability that a treatment is superior to all others in the network, calculated as \( P_{best}(k) = P(R(k) = 1) \) [13].
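The Bayesian ranking metrics above can be sketched in Python from posterior draws. In each MCMC iteration the treatments are ordered by their sampled effects; counting how often each treatment lands in each rank gives the rank-probability matrix, whose first column is \( P_{best}(k) \) and whose cumulative sums give SUCRA. The independent normal draws below are a hypothetical stand-in for real MCMC output (genuine posteriors are usually correlated), and the sketch assumes larger effects are better.

```python
import random


def rank_probabilities(draws, larger_is_better=True):
    """Estimate P(R(k) = r) for each treatment from posterior draws.

    draws: one list per MCMC iteration holding a sampled effect for each
    treatment on a common scale. Returns probs[k][r] = P(R(k) = r + 1).
    """
    t = len(draws[0])
    counts = [[0] * t for _ in range(t)]
    for sample in draws:
        # Rank 1 goes to the best sampled effect in this iteration
        order = sorted(range(t), key=lambda k: sample[k], reverse=larger_is_better)
        for rank, k in enumerate(order):
            counts[k][rank] += 1
    return [[c / len(draws) for c in row] for row in counts]


def sucra(probs):
    """SUCRA(k) = (1 / (T - 1)) * sum_{j=1}^{T-1} CP(k, j)."""
    t = len(probs)
    scores = []
    for row in probs:
        cumulative, total = 0.0, 0.0
        for j in range(t - 1):  # CP(k, T) = 1 is excluded
            cumulative += row[j]
            total += cumulative
        scores.append(total / (t - 1))
    return scores


# Hypothetical posterior means 0.0, 0.3, 0.5 with posterior SD 0.2
rng = random.Random(2024)
draws = [[rng.gauss(m, 0.2) for m in (0.0, 0.3, 0.5)] for _ in range(10000)]
probs = rank_probabilities(draws)
p_best = [row[0] for row in probs]  # P_best(k) = P(R(k) = 1)
print("P(best):", [round(p, 3) for p in p_best])
print("SUCRA:", [round(s, 3) for s in sucra(probs)])
```

Because every rank is occupied by exactly one treatment in each iteration, SUCRA values always sum to \( T/2 \); equivalently, \( SUCRA(k) = (T - E[R(k)])/(T - 1) \).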

Table 1: Comparison of Primary Ranking Metrics in Network Meta-Analysis

| Metric | Framework | Calculation Basis | Interpretation | Limitations |
|---|---|---|---|---|
| SUCRA | Bayesian | Posterior distributions | Proportion of treatments outperformed (0-100%) | Requires resampling in frequentist approach |
| P-score | Frequentist | Point estimates & standard errors | Mean certainty of being better than competitors (0-1) | Assumes normality of treatment effects |
| Probability Best | Both | Direct ranking at each iteration | Probability of being superior to all alternatives | Does not account for full rank uncertainty |

MID-Adjusted Ranking Metrics

Standard ranking metrics ignore the magnitude of difference between treatment effects, potentially leading to rankings that lack clinical meaning. Minimally important differences (MIDs) represent the smallest difference in a given outcome that patients or clinicians consider meaningful [13]. Incorporating MIDs into ranking metrics addresses this limitation:

MID-Adjusted Ranking Function modifies the standard approach by introducing a threshold for meaningful differences: \[ R_{MID}(k) = T - \sum_{j=1, j \neq k}^{T} \mathbf{1}\{d_k - d_j \geq MID\} \] where \( T \) is the total number of treatments, \( d_k \) and \( d_j \) are treatment effects (oriented so that larger values are better), \( \mathbf{1}\{\cdot\} \) is the indicator function, and \( MID \) is the minimally important difference [13].

MID-Adjusted SUCRA values account for clinically meaningful differences between treatments, preventing trivial differences from influencing rankings. The use of the midpoint method for handling ties ensures comparability between standard and MID-adjusted ranking metrics [13].
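The MID-adjusted rank formula can be sketched in Python for a single set of effects (for example, one posterior draw). This is an illustrative reading of the midpoint method, not the implementation in [13]: we assume a tie means \( |d_k - d_j| < MID \) and that each tie contributes half a point, which reproduces the averaged rank that tied treatments would share. Larger effects are assumed better, and the example effects are hypothetical.

```python
def mid_adjusted_ranks(effects, mid):
    """MID-adjusted rank for each treatment (1 = best, larger effects better).

    Treatment k earns one point for each competitor it beats by at least
    `mid` and half a point for each competitor within `mid` of it (an
    assumed midpoint treatment of ties); rank = T - points.
    """
    t = len(effects)
    ranks = []
    for k in range(t):
        points = 0.0
        for j in range(t):
            if j == k:
                continue
            diff = effects[k] - effects[j]
            if diff >= mid:
                points += 1.0
            elif abs(diff) < mid:
                points += 0.5
        ranks.append(t - points)
    return ranks


# Hypothetical effects: the first two treatments differ by less than the MID
print(mid_adjusted_ranks([0.50, 0.45, 0.10], mid=0.2))
print(mid_adjusted_ranks([0.50, 0.45, 0.10], mid=0.0))
```

With `mid=0` and no exact ties the function falls back to ordinary ranks. Averaging \( (T - R_{MID}(k))/(T - 1) \) over posterior draws would then give an MID-adjusted SUCRA under the same assumptions.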

Applications and Limitations of Treatment Ranking

Treatment ranking has been successfully applied across numerous therapeutic areas. In a network of 26 studies comparing 10 diabetes treatments (including placebo), NMA ranking provided clear hierarchies for HbA1c reduction [12]. Similarly, in an NMA of biological therapies and small molecules for ulcerative colitis maintenance therapy, upadacitinib 30 mg once daily ranked first for clinical remission (p-score 0.99) and endoscopic improvement (p-score 0.99) in re-randomized studies [6].

However, several limitations must be considered. Neither SUCRA nor P-scores offer major advantages compared to examining credible or confidence intervals directly [12]. Additionally, rankings can be misleading when precision is not adequately considered, as treatments with more favorable point estimates but broader confidence intervals may be ranked higher despite greater uncertainty [12].

Precision Enhancement in Network Meta-Analysis

Mechanisms of Precision Improvement

NMA enhances the precision of treatment effect estimates through several interconnected mechanisms. By synthesizing both direct and indirect evidence, NMA increases the effective sample size for comparisons, leading to narrower confidence/credible intervals around point estimates [10]. This precision enhancement is particularly valuable when direct evidence is sparse or imprecise.

The incorporation of indirect evidence works as follows: when treatment A has been compared to B, and B to C, an indirect estimate for A versus C is obtained from both direct comparisons (on an additive scale such as the log odds ratio, \( d_{AC} = d_{AB} + d_{BC} \)). Because the variances of estimates from independent sets of trials add, an indirect estimate on its own is less precise than either of its components; the gain arises when it is combined with any direct A versus C evidence, so that the network estimate borrows strength from both sources. This borrowing of strength across the network results in more precise effect estimates than would be possible through pairwise meta-analysis alone [10].
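The precision argument can be checked with a small Python calculation. The sketch forms an indirect A-versus-C estimate through B and then pools it with a direct A-versus-C estimate by inverse-variance weighting, the simplest form of mixing direct and indirect evidence. It assumes independent evidence sources, a common additive scale, and hypothetical numbers; a full NMA performs this combination jointly across the whole network.

```python
import math


def combine_direct_indirect(d_dir, se_dir, d_ind, se_ind):
    """Inverse-variance weighted average of independent direct and indirect estimates."""
    w_dir, w_ind = 1.0 / se_dir ** 2, 1.0 / se_ind ** 2
    d_mixed = (w_dir * d_dir + w_ind * d_ind) / (w_dir + w_ind)
    se_mixed = math.sqrt(1.0 / (w_dir + w_ind))
    return d_mixed, se_mixed


# Hypothetical log odds ratios for A vs B and B vs C
d_ab, se_ab = -0.30, 0.10
d_bc, se_bc = -0.20, 0.15
d_ind = d_ab + d_bc                          # d_AC = d_AB + d_BC
se_ind = math.sqrt(se_ab ** 2 + se_bc ** 2)  # variances add
d_mix, se_mix = combine_direct_indirect(-0.45, 0.20, d_ind, se_ind)
print(f"direct SE 0.200, indirect SE {se_ind:.3f}, combined SE {se_mix:.3f}")
```

The indirect standard error exceeds both of its components, yet the combined estimate is more precise than either the direct or the indirect estimate alone.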

Bayesian hierarchical models further enhance precision when integrating different study designs. For instance, when synthesizing evidence from both RCTs and single-arm studies, hierarchical models can increase precision compared to analyses of RCT data alone, while appropriately accounting for between-study heterogeneity and potential biases [14] [15].

Hierarchical Models for Evidence Synthesis

Bayesian hierarchical frameworks provide sophisticated approaches for precision enhancement while managing potential biases. These models are particularly valuable when incorporating real-world evidence or single-arm studies alongside RCT data:

Bivariate Generalized Linear Mixed Effects Models (BGLMMs) allow for simultaneous modeling of multiple outcomes while accounting for correlation structures [14].

Hierarchical Commensurate Prior (HCP) Models adaptively downweight single-arm studies based on their consistency with RCT evidence, providing a balance between bias reduction and precision gain [14].

Hierarchical Power Prior (HPP) Models use power parameters to control the influence of non-randomized evidence, with parameters approaching 0 substantially discounting such evidence [14].
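The discounting idea behind power priors can be sketched with normal approximations. Raising a normal likelihood to a power \( a_0 \) between 0 and 1 multiplies its precision by \( a_0 \), so the non-randomized estimate enters the pooled result with a down-weighted inverse-variance weight. The hierarchical models cited above embed this in a full Bayesian model and may estimate the power parameter; the Python sketch below instead fixes \( a_0 \) and uses hypothetical inputs, so it only illustrates the mechanism.

```python
import math


def power_prior_pool(d_rct, se_rct, d_nrs, se_nrs, a0):
    """Combine an RCT estimate with a non-randomized estimate discounted by a0.

    With normal likelihoods, raising the non-randomized likelihood to the
    power a0 multiplies its precision by a0 (its variance by 1 / a0).
    a0 = 0 ignores the non-randomized evidence; a0 = 1 pools it fully.
    """
    if not 0.0 <= a0 <= 1.0:
        raise ValueError("a0 must lie in [0, 1]")
    w_rct = 1.0 / se_rct ** 2
    w_nrs = a0 / se_nrs ** 2
    est = (w_rct * d_rct + w_nrs * d_nrs) / (w_rct + w_nrs)
    return est, math.sqrt(1.0 / (w_rct + w_nrs))


# Hypothetical log hazard ratios: RCT evidence vs a more optimistic observational study
for a0 in (0.0, 0.5, 1.0):
    est, se = power_prior_pool(-0.40, 0.15, -0.70, 0.10, a0)
    print(f"a0={a0}: estimate {est:.3f}, SE {se:.3f}")
```

As \( a_0 \) grows, the pooled estimate moves toward the observational result and its standard error shrinks, which is exactly the precision-versus-bias trade-off these models are designed to control.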

Table 2: Hierarchical Models for Precision Enhancement in NMA

| Model Type | Key Mechanism | Advantages | Application Context |
|---|---|---|---|
| Bivariate GLMM | Models multiple correlated outcomes | Accounts for outcome correlations | Multiple efficacy/safety endpoints |
| Hierarchical Commensurate Prior | Adaptively downweights inconsistent evidence | Balance between bias reduction and precision | Incorporating real-world evidence |
| Hierarchical Power Prior | Power parameters control influence | Explicit control over evidence contribution | Synthesizing RCTs and non-randomized studies |
| Bias Adjustment Model | Additive random bias terms | Adjusts for design-related bias | Combining different study designs |

Evidence Integration Across Study Designs

The integration of evidence from different study designs represents a significant opportunity for precision enhancement in drug efficacy research. When conducted appropriately, this integration can improve generalizability while maintaining rigorous standards for evidence synthesis [15].

In an illustrative example examining type 2 diabetes medications, hierarchical NMA models that accounted for differences between RCTs and non-randomized studies provided better model fit compared to naïve pooling approaches [15]. While some hierarchical models increased uncertainty around effect estimates, this appropriately reflected the additional heterogeneity introduced by combining different study designs and prevented overly optimistic conclusions [15].

Evaluating Uncompared Interventions

Foundations of Indirect Treatment Comparisons

The ability to compare interventions that have never been directly evaluated in head-to-head trials represents a cornerstone advantage of NMA. This capability is mathematically grounded in the consistency assumption, which enables the estimation of relative effects between treatments A and C through the common comparator B [15].

The statistical foundation rests on the concept that if treatment A has been compared to B, and B to C, then the relative effect between A and C can be estimated indirectly. In a random-effects NMA model, this is implemented as: \[ \delta_{i,jk} \sim N(d_{jk}, \sigma^2) \] where \( \delta_{i,jk} \) represents the treatment effect between interventions \( j \) and \( k \) in study \( i \), \( d_{jk} \) is the mean treatment difference, and \( \sigma^2 \) represents the between-study variance [15]. The consistency assumption is then expressed through the relation \( d_{jk} = d_{1k} - d_{1j} \), where treatment 1 is the network reference treatment.
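Under the consistency relation \( d_{jk} = d_{1k} - d_{1j} \), every pairwise comparison follows from the basic parameters \( d_{1k} \) relative to the reference treatment. The Python sketch below builds the resulting league table; the basic parameters are hypothetical, and treatments are indexed from 0 (index 0 plays the role of the reference treatment 1).

```python
def league_table(basic):
    """All pairwise effects d_jk = d_1k - d_1j from basic parameters.

    basic[k] is the effect of treatment k relative to the network reference
    (index 0), so basic[0] must be 0.0. Entry [j][k] holds d_jk.
    """
    if basic[0] != 0.0:
        raise ValueError("the reference treatment's basic parameter must be 0")
    t = len(basic)
    return [[basic[k] - basic[j] for k in range(t)] for j in range(t)]


# Hypothetical basic parameters (e.g. log odds ratios vs the reference)
table = league_table([0.0, -0.30, -0.50])
for row in table:
    print([round(x, 2) for x in row])
```

Because the table is generated from only \( T - 1 \) basic parameters, it is antisymmetric (\( d_{jk} = -d_{kj} \)) and internally consistent by construction; incoherence can only appear when direct data on some \( d_{jk} \) disagree with this structure.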

Applications in Drug Efficacy Research

The capacity to evaluate uncompared interventions has proven particularly valuable in areas with numerous treatment options but limited direct comparative evidence. In ulcerative colitis maintenance therapy, NMA enabled comparisons of 13 biological therapies and small molecules across different trial designs, identifying upadacitinib and etrasimod as consistently efficacious despite limited direct comparisons [6].

Similarly, in medication-overuse headache management, NMA compared 16 different treatment strategies, including withdrawal approaches, preventive medications, and educational interventions. The analysis identified combination therapies as most effective, providing crucial evidence for clinical decision-making where direct comparisons were unavailable [5].

Methodological Considerations and Assumptions

The validity of indirect comparisons rests on several key assumptions that must be carefully evaluated:

Transitivity requires that studies forming the indirect comparison are similar in effect modifiers - clinical or methodological characteristics that influence treatment effects [10].

Consistency refers to the statistical agreement between direct and indirect evidence when both are available within a network. This can be evaluated statistically through node-splitting approaches or design-by-treatment interaction tests [15].

Homogeneity assumes that studies comparing the same treatment pair estimate the same underlying treatment effect, after accounting for sampling error [15].

Violations of these assumptions can lead to biased estimates, making careful assessment of network geometry and potential effect modifiers an essential component of NMA practice.

Experimental Protocols and Implementation

Standard NMA Workflow

Implementing a network meta-analysis follows a structured workflow encompassing several distinct phases:

Systematic Review Conduct involves comprehensive literature searching across multiple databases (e.g., MEDLINE, EMBASE, Cochrane Central), study selection based on PICOS criteria, data extraction using standardized forms, and risk of bias assessment using tools like Cochrane Risk of Bias 2.0 [6] [5].

Network Geometry Evaluation includes creating network diagrams where nodes represent treatments and edges represent direct comparisons, with node size proportional to sample size and edge thickness proportional to number of studies [5].

Statistical Analysis comprises both pairwise meta-analyses for direct comparisons and network meta-analysis integrating direct and indirect evidence, typically using random-effects models to account for between-study heterogeneity [5].

Ranking and Assessment involves calculating ranking metrics (SUCRA, P-scores), evaluating consistency between direct and indirect evidence, and assessing quality of evidence using approaches like GRADE for NMA [10].
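The network geometry evaluation step can be sketched in Python: the summary below computes the quantities a network diagram encodes (total sample size per node and number of studies per edge) and checks that the network is connected, since treatments in a disconnected sub-network cannot be compared with the rest. The input structure and example trials are hypothetical.

```python
from collections import defaultdict, deque


def network_geometry(studies):
    """Summarize network geometry from two-arm or multi-arm studies.

    studies: list of dicts whose 'arms' map treatment name -> sample size.
    Returns node sample sizes, studies per edge, and whether the network
    is connected.
    """
    node_n = defaultdict(int)
    edge_studies = defaultdict(int)
    neighbours = defaultdict(set)
    for study in studies:
        arms = sorted(study["arms"])
        for trt in arms:
            node_n[trt] += study["arms"][trt]
        # Every pair of arms within a study is a direct comparison
        for i, a in enumerate(arms):
            for b in arms[i + 1:]:
                edge_studies[(a, b)] += 1
                neighbours[a].add(b)
                neighbours[b].add(a)
    # Breadth-first search from an arbitrary node to test connectivity
    nodes = list(node_n)
    seen = {nodes[0]}
    queue = deque([nodes[0]])
    while queue:
        for nxt in neighbours[queue.popleft()]:
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return dict(node_n), dict(edge_studies), len(seen) == len(nodes)


# Hypothetical network: placebo-controlled trials of A and B, one A-vs-C trial
studies = [
    {"arms": {"Placebo": 100, "A": 100}},
    {"arms": {"Placebo": 150, "B": 150}},
    {"arms": {"A": 80, "C": 80}},
]
nodes, edges, connected = network_geometry(studies)
print(nodes, edges, connected)
```

Node sizes and edge counts map directly onto the plotting conventions described above (node size proportional to sample size, edge thickness proportional to number of studies).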

Software and Computational Tools

Several software options are available for implementing NMA, ranging from specialized packages to point-and-click applications:

R packages including netmeta for frequentist NMA and gemtc for Bayesian NMA provide comprehensive functionality for analysis and visualization [16].

MetaInsight is an interactive web application that provides a user-friendly interface for conducting NMA without requiring programming expertise, leveraging established R packages for computation [16].

Cytoscape offers advanced network visualization capabilities, particularly valuable for complex networks with multiple treatments and comparisons [17].

Stata and WinBUGS/OpenBUGS provide additional implementations, with WinBUGS particularly established for Bayesian NMA implementation [12].

Diagram 1: Network Meta-Analysis Standard Workflow

The Scientist's Toolkit: Essential Methodological Components

Table 3: Essential Methodological Components for Network Meta-Analysis

| Component | Function | Implementation Examples |
|---|---|---|
| Statistical Software | Computational implementation of NMA models | R (netmeta, gemtc), Stata, Python |
| Risk of Bias Tools | Methodological quality assessment of included studies | Cochrane RoB 2.0, ROBINS-I |
| Visualization Packages | Network diagrams and results presentation | ggplot2, Cytoscape, netmeta plotting functions |
| Consistency Check Methods | Evaluation of transitivity and coherence | Node-splitting, design-by-treatment interaction test |
| Ranking Metric Calculators | Generation of treatment hierarchies | SUCRA, P-scores, MID-adjusted metrics |


Advanced Methodological Extensions

Incorporating Non-Randomized Evidence

Recent methodological advances have enabled the incorporation of non-randomized evidence into NMA, expanding the evidence base while addressing potential biases. Several sophisticated approaches have been developed:

Hierarchical models differentiate between study designs (RCTs vs. observational studies) by incorporating design-specific parameters, allowing for varying degrees of evidence contribution based on design [15].

Bias adjustment models introduce additive random bias terms for non-randomized studies, adjusting for systematic overestimation or underestimation of treatment effects [15].

Power priors in Bayesian frameworks systematically downweight non-randomized evidence based on its perceived reliability or consistency with RCT evidence [14] [15].

These approaches prevent overly optimistic conclusions while leveraging the complementary strengths of different study designs, such as the generalizability of real-world evidence and the internal validity of RCTs.
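The additive bias term can be illustrated with a simplified normal approximation, treating a non-randomized estimate as the true effect plus a random bias \( \beta \sim N(\mu_\beta, \sigma_\beta^2) \). Subtracting the expected bias and adding its variance yields a bias-adjusted estimate with appropriately wider uncertainty. In the models cited above the bias distribution is typically estimated or given a prior inside a Bayesian NMA; here \( \mu_\beta \) and \( \sigma_\beta \) are assumed known, and all values are hypothetical.

```python
import math


def bias_adjust(d_nrs, se_nrs, bias_mean, bias_sd):
    """Adjust a non-randomized estimate for an additive random bias term.

    Model: observed = true effect + beta, beta ~ N(bias_mean, bias_sd^2),
    independent of sampling error. Returns the adjusted estimate and its SE.
    """
    return d_nrs - bias_mean, math.sqrt(se_nrs ** 2 + bias_sd ** 2)


# Hypothetical observational log hazard ratio of -0.50 (SE 0.08), assumed to
# exaggerate benefit by 0.10 on average with a bias SD of 0.15
est, se = bias_adjust(-0.50, 0.08, -0.10, 0.15)
print(f"adjusted estimate {est:.2f}, SE {se:.3f}")
```

The adjusted estimate can then enter a synthesis alongside RCT evidence; its inflated standard error automatically reduces its weight relative to the randomized data.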

MID-Adjusted Ranking Implementation

The implementation of minimally important difference adjustments to ranking metrics requires specific methodological approaches:

MID-Adjusted Rank Calculation modifies standard ranking functions to incorporate clinical significance thresholds: \[ R_{MID}(k) = T - \sum_{j=1, j \neq k}^{T} \mathbf{1}\{d_k - d_j \geq MID\} \] where ties (treatment pairs whose absolute difference \( |d_k - d_j| \) is smaller than the MID) are handled using the midpoint method to maintain comparability with standard SUCRA values [13].

Software Implementation is available through specialized packages such as the R package mid.nma.rank for Bayesian implementation of MID-adjusted ranking metrics, providing calculation of MID-adjusted probabilities of being best, cumulative probabilities, and SUCRA values [13].

Diagram 2: MID-Adjusted Ranking Implementation Process

Network meta-analysis represents a significant methodological advancement in evidence synthesis, providing three key advantages for drug efficacy research: sophisticated treatment ranking capabilities, enhanced precision of effect estimates through evidence integration, and the unique capacity to compare interventions that lack direct head-to-head evidence. These advantages make NMA an indispensable tool for comparative effectiveness research and healthcare decision-making.

As methodological development continues, emerging approaches including MID-adjusted ranking metrics, hierarchical models for combining different study designs, and bias-adjustment methods further enhance the utility and validity of NMA. When implemented following rigorous methodological standards and appropriate interpretation of results, NMA provides powerful insights for determining optimal treatment strategies across therapeutic areas, ultimately supporting evidence-based clinical practice and healthcare policy.

Network meta-analysis (NMA) represents a significant methodological advancement in evidence-based medicine, enabling the simultaneous comparison of multiple interventions through the synthesis of both direct and indirect evidence [18]. Unlike traditional pairwise meta-analyses that are limited to direct comparisons between two interventions, NMA incorporates a network of comparisons, allowing researchers to estimate the relative effectiveness of treatments that have never been directly compared in primary studies [18]. This approach is particularly valuable in drug efficacy research where numerous treatment options exist, but head-to-head clinical trials are scarce. The foundational principle of NMA lies in its ability to leverage indirect evidence—for instance, if treatment A has been compared to B, and B to C, then the A versus C comparison can be mathematically derived, creating a connected network of treatment effects [18].

The application of NMA has grown substantially across medical fields, particularly in areas with complex treatment landscapes such as ulcerative colitis (UC) and obesity [19]. For UC, the proliferation of biological therapies and small molecules has created an urgent need for comparative effectiveness research to guide clinical decision-making [6]. Similarly, in obesity research, the emergence of multiple pharmacotherapies with different mechanisms of action necessitates sophisticated evidence synthesis approaches. The core assumption underlying NMA is transitivity, which implies that studies comparing different sets of interventions are sufficiently similar in their clinical and methodological characteristics to allow valid indirect comparisons [18]. When transitivity holds, NMA provides more precise effect estimates than pairwise meta-analyses alone and enables treatment ranking to identify optimal therapeutic strategies.

Fundamental Principles and Methodological Framework of Network Meta-Analysis

Core Concepts and Evidence Structures

Network meta-analysis integrates two distinct types of evidence: direct evidence from head-to-head comparisons and indirect evidence derived through a common comparator [18]. This integration occurs within a network structure where nodes represent interventions and edges represent direct comparisons between them. The strength of evidence in NMA can be visualized and quantified in several ways. The effective number of studies, effective sample size, and effective precision are three proposed measures that quantify overall evidence in NMAs, reflecting the additional evidence gained from incorporating indirect comparisons [20]. These measures allow researchers to preliminarily evaluate how much NMA improves treatment effect estimates compared to simpler pairwise meta-analyses.

The graphical representation of evidence networks is a crucial component of NMA reporting. Traditional network diagrams display treatments as nodes and comparisons as connecting lines, with line thickness often proportional to the number of studies or patients contributing to each direct comparison [20]. However, as networks grow more complex, particularly with multicomponent interventions, standard visualizations may become insufficient. Newer visualization approaches like CNMA-UpSet plots, CNMA heat maps, and CNMA-circle plots have been developed to better represent complex data structures in component network meta-analysis (CNMA), which decomposes interventions into their constituent components [21].

Statistical Models and Key Assumptions

NMA models can be implemented within both frequentist and Bayesian frameworks, with each offering distinct advantages. The frequentist approach typically uses multivariate meta-analysis models that account for the correlation structure introduced by multiple treatments, while Bayesian approaches employ hierarchical models with Markov chain Monte Carlo (MCMC) simulation [18]. Both frameworks aim to estimate the relative treatment effects between all interventions in the network while preserving the randomized structure of the original trials.

The validity of NMA depends on two critical assumptions: transitivity and consistency. Transitivity requires that the distribution of effect modifiers (patient or study characteristics that influence treatment effects) is similar across different direct comparisons in the network [18]. Consistency refers to the statistical agreement between direct and indirect evidence for the same comparison [18]. Violations of these assumptions can lead to biased estimates, and therefore, methodological guidance strongly recommends evaluating potential effect modifiers and conducting inconsistency diagnostics when both direct and indirect evidence are available.

Table 1: Key Methodological Concepts in Network Meta-Analysis

| Concept | Definition | Importance in NMA |
|---|---|---|
| Direct Evidence | Evidence from studies directly comparing two interventions | Provides the foundation for treatment effect estimates |
| Indirect Evidence | Evidence derived through a common comparator | Enables comparisons between interventions never directly studied |
| Transitivity | Similarity of studies across different comparisons | Ensures validity of indirect comparisons |
| Consistency | Agreement between direct and indirect evidence | Validates the network model assumptions |
| Network Geometry | Structure and connectivity of the treatment network | Influences precision and reliability of estimates |

Case Study: Network Meta-Analysis in Ulcerative Colitis

Study Design and Methodological Protocol

A recent high-quality NMA examining biological therapies and small molecules for ulcerative colitis maintenance therapy exemplifies rigorous application of NMA methodology [6]. This analysis addressed a critical clinical question: what is the relative efficacy of available advanced therapies for maintaining remission in UC, and how does trial design influence estimated treatment effects? The researchers implemented a comprehensive search strategy across multiple databases (MEDLINE, EMBASE, Cochrane Central) through February 27, 2025, identifying 28 randomized controlled trials meeting inclusion criteria [6]. Notably, the protocol distinguished between two trial designs: re-randomization studies (where induction responders are re-randomized to maintenance therapy) and treat-through studies (where patients continue initial assignment throughout the study period) [6].

The analytical approach employed random-effects models within the frequentist framework, reporting results as pooled relative risks (RR) with 95% confidence intervals [6]. Treatments were ranked using p-scores, a frequentist analog to the Surface Under the Cumulative Ranking Curve (SUCRA) used in Bayesian NMAs [22]. The primary outcome was failure to achieve clinical remission, with secondary endpoints including endoscopic improvement, endoscopic remission, corticosteroid-free remission, and histological outcomes [6]. This methodological protocol illustrates several best practices in NMA: pre-specification of outcomes, assessment of trial design heterogeneity, use of appropriate statistical models, and comprehensive sensitivity analyses.
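The p-scores used for ranking can be sketched in Python from a frequentist NMA's point estimates and the standard errors of their pairwise differences, reading P-scores as means of one-sided p-values under normality. The inputs are hypothetical, and the sketch assumes estimates are oriented so that larger values are better; for an outcome such as the RR of failure, where lower is better, pass the negated log RRs.

```python
import math


def normal_cdf(z: float) -> float:
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def p_scores(theta, se_diff):
    """P-scores from point estimates and SEs of pairwise differences.

    theta: estimates on a common additive scale, larger = better.
    se_diff[i][j]: standard error of theta_i - theta_j (diagonal unused).
    """
    n = len(theta)
    scores = []
    for i in range(n):
        # One-sided certainty that treatment i is better than each competitor
        certainty = [
            normal_cdf((theta[i] - theta[j]) / se_diff[i][j])
            for j in range(n) if j != i
        ]
        scores.append(sum(certainty) / (n - 1))
    return scores


# Hypothetical network of three treatments with equal SEs for all differences
theta = [0.0, 0.3, 0.5]
se = [[0.0, 0.2, 0.2], [0.2, 0.0, 0.2], [0.2, 0.2, 0.0]]
scores = p_scores(theta, se)
print([round(s, 3) for s in scores])
```

Because \( \Phi(x) + \Phi(-x) = 1 \), the P-scores of a network always sum to \( n/2 \), mirroring the behavior of SUCRA.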

Diagram 1: NMA Workflow for UC Maintenance Therapies. This diagram illustrates the analytical workflow from study identification through outcome assessment and treatment ranking.

Results and Clinical Implications

The UC NMA yielded clinically important findings with direct implications for treatment selection. All relative risks reported here are risks of failing to achieve the stated endpoint, so lower values indicate greater efficacy. In re-randomized studies, upadacitinib 30 mg once daily ranked first for both clinical remission (RR of failure = 0.52; 95% CI 0.44–0.61; p-score 0.99) and endoscopic improvement (RR = 0.43; 95% CI 0.35–0.52; p-score 0.99) [6]. For other key endpoints in the re-randomized design, vedolizumab ranked first for endoscopic remission (RR = 0.73; 95% CI 0.64–0.84; p-score 0.92), while guselkumab ranked first for corticosteroid-free remission (RR = 0.40; 95% CI 0.28–0.55; p-score 0.95) [6]. In treat-through studies, etrasimod 2 mg once daily ranked first for clinical remission (RR = 0.73; 95% CI 0.64–0.83; p-score 0.88), while infliximab 10 mg/kg ranked first for endoscopic improvement (RR = 0.64; 95% CI 0.56–0.74; p-score 0.94) [6].
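Secondary analyses of published NMA results (for example recomputing rankings or comparing with new trials) usually work on the log scale, which requires recovering the standard error from a reported ratio and its 95% CI. The Python sketch below assumes the interval was constructed symmetrically on the log scale, as is standard for ratio measures, and applies this to the upadacitinib clinical remission result reported above; small discrepancies can arise because published values are rounded.

```python
import math


def log_rr_and_se(rr, lower, upper, z=1.959964):
    """Log relative risk and its SE back-calculated from a reported 95% CI.

    Assumes the CI was computed as exp(log RR +/- z * SE).
    """
    se = (math.log(upper) - math.log(lower)) / (2.0 * z)
    return math.log(rr), se


# Upadacitinib 30 mg o.d., clinical remission: RR of failure 0.52 (95% CI 0.44-0.61)
log_rr, se = log_rr_and_se(0.52, 0.44, 0.61)
print(f"log RR = {log_rr:.3f}, SE = {se:.3f}")
print(f"relative reduction in risk of failure: {1 - 0.52:.0%}")
```

An RR of failure of 0.52 corresponds to a 48% relative reduction in the risk of not achieving clinical remission.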

These findings demonstrate the value of NMA for comparative effectiveness research in UC. The results not only identify upadacitinib and etrasimod as consistently efficacious maintenance therapies but also highlight how treatment rankings can vary according to different endpoints and trial designs [6]. This nuanced understanding enables more personalized treatment selection based on individual patient characteristics and treatment goals. Furthermore, the analysis provides crucial evidence for clinical guideline development, such as the updated 2025 ACG guidelines for managing adult UC patients [23].

Table 2: Ranking of Ulcerative Colitis Maintenance Therapies Based on Network Meta-Analysis

| Therapy | Dosing | Clinical Remission (RR of failure) | Endoscopic Improvement (RR of failure) | Endoscopic Remission (RR of failure) | Corticosteroid-Free Remission (RR of failure) |
|---|---|---|---|---|---|
| Upadacitinib | 30 mg o.d. | 0.52 (0.44–0.61) [6] | 0.43 (0.35–0.52) [6] | - | - |
| Vedolizumab | 300 mg 4-weekly | - | - | 0.73 (0.64–0.84) [6] | - |
| Guselkumab | 200 mg 4-weekly | - | - | - | 0.40 (0.28–0.55) [6] |
| Etrasimod | 2 mg o.d. | 0.73 (0.64–0.83) [6] | - | - | - |
| Infliximab | 10 mg/kg 8-weekly | - | 0.64 (0.56–0.74) [6] | - | - |

Advanced Visualization of NMA Results

Innovative visualization approaches have been developed to enhance the interpretation and communication of complex NMA results. The beading plot represents one such advancement, specifically designed to present treatment rankings across multiple outcomes in a single, intuitive graphic [22]. This visualization displays global ranking metrics (P-best, SUCRA, or P-score) for each treatment across various outcomes, using continuous lines spanning 0 to 1 to represent different outcomes and color-coded beads to signify treatments [22]. Such visualizations are particularly valuable for clinical decision-making where multiple efficacy and safety outcomes must be considered simultaneously.

Another visualization challenge in NMA involves representing component network meta-analyses (CNMA), where interventions are decomposed into their constituent components. Traditional network diagrams become inadequate when dealing with numerous component combinations. The CNMA-UpSet plot, CNMA heat map, and CNMA-circle plot offer standardized approaches to visualize these complex data structures, revealing which component combinations have been studied and where evidence gaps exist [21]. These advanced visualization techniques facilitate more transparent reporting and interpretation of complex NMA results, making them accessible to diverse stakeholders including clinicians, patients, and policymakers.
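A CNMA-UpSet plot is built from a simple tabulation: which component combinations appear as trial arms, and how often. The sketch below computes those counts without drawing anything. As a crude indicator of evidence gaps, it also lists the pairs of components that never appear together in any arm. The arms and component labels are hypothetical, and checking pairwise co-occurrence is a simplification of what the published plots show.

```python
from collections import Counter
from itertools import combinations

# Hypothetical trial arms, each described by the set of components it delivers
arms = [
    frozenset({"diet"}),
    frozenset({"diet", "physical_activity"}),
    frozenset({"diet", "physical_activity", "behavioral"}),
    frozenset({"diet", "physical_activity"}),
    frozenset({"pharmacotherapy"}),
]

combo_counts = Counter(arms)                                   # arms per exact combination
component_counts = Counter(c for arm in arms for c in arm)     # arms containing each component
components = sorted(component_counts)

# Pairs of components that co-occur in at least one arm
studied_pairs = {pair for arm in arms for pair in combinations(sorted(arm), 2)}
# Pairs never studied together: candidate evidence gaps
unstudied_pairs = [p for p in combinations(components, 2) if p not in studied_pairs]

for combo, n in combo_counts.most_common():
    print(" + ".join(sorted(combo)), n)
```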

Case Study: Network Meta-Analysis in Obesity Research

Methodological Considerations for Complex Interventions

Obesity interventions present unique methodological challenges for network meta-analysis due to their inherent complexity. Unlike pharmacological treatments for UC, obesity interventions often comprise multiple components including dietary modifications, physical activity programs, behavioral therapies, pharmacological agents, and surgical procedures [19]. This complexity necessitates careful consideration of the node-making process—how interventions are grouped or split for analysis. A recent methodological review of NMAs applied to complex public health interventions identified seven key considerations in node-making: Approach, Ask, Aim, Appraise, Apply, Adapt, and Assess [19].

The typology of node-making elements provides a framework for planning and reporting NMAs of complex obesity interventions. The "Approach" considers whether to take a lumping (broader categories) or splitting (finer distinctions) perspective [19]. "Ask" refers to the research questions driving node specification, while "Aim" considers the purpose of the analysis [19]. "Appraise" involves evaluating the available evidence, "Apply" entails implementing the node-making strategy, "Adapt" addresses how to handle heterogeneity, and "Assess" involves evaluating the impact of node-making decisions [19]. This structured approach enhances transparency and methodological rigor in obesity NMAs.

Diagram 2: Component-Based Approach to Obesity Interventions in NMA. This diagram illustrates how complex obesity interventions can be decomposed into constituent components for analysis.

Analytical Framework and Outcome Measures

NMAs in obesity research typically employ either standard NMA models that treat each multicomponent intervention as a distinct node or component network meta-analysis (CNMA) models that estimate the effects of individual intervention components [21]. The CNMA approach is particularly valuable for obesity research as it allows identification of active components within complex interventions and prediction of effects for novel combinations not yet evaluated in trials [21]. The simplest CNMA model assumes additive effects of components, but can be extended to include interaction terms between components [21].
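The additive CNMA model can be sketched as a weighted regression. Each observed contrast is modeled as the sum of the effects of the components in the experimental arm minus those in the comparator arm, so a component present in both arms cancels. The sketch below makes several assumptions: it fits the fixed-effect version by inverse-variance weighted least squares, it treats contrasts as independent (no multi-arm trials), and it uses hypothetical weight-loss data. It is not the implementation of any particular package.

```python
import numpy as np

def fit_additive_cnma(X, y, se):
    """Fixed-effect additive CNMA fitted by inverse-variance weighted least squares.

    X[i, c] = indicator(component c in experimental arm of contrast i)
              minus indicator(component c in comparator arm).
    y[i]    = observed relative effect for contrast i (e.g. mean difference).
    se[i]   = standard error of y[i]; contrasts are assumed independent.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    w = 1.0 / np.asarray(se, dtype=float) ** 2   # inverse-variance weights
    XtW = X.T * w                                # X'W without forming diag(w)
    cov = np.linalg.inv(XtW @ X)                 # covariance of component effects
    beta = cov @ (XtW @ y)                       # additive component effects
    return beta, cov

# Hypothetical contrasts (mean difference in body weight, kg); usual care = no components
components = ["diet", "physical_activity", "behavioral"]
X = np.array([
    [1, 0, 0],   # diet vs usual care
    [1, 1, 0],   # diet + physical activity vs usual care
    [0, 1, 1],   # diet + physical activity + behavioral vs diet (diet cancels)
    [0, 1, 0],   # physical activity vs usual care
])
y = np.array([-1.0, -1.8, -1.1, -0.6])
se = np.array([0.30, 0.40, 0.35, 0.30])

beta, cov = fit_additive_cnma(X, y, se)

# Predict an untested combination (diet + behavioral vs usual care) under additivity
x_new = np.array([1.0, 0.0, 1.0])
pred = x_new @ beta
pred_se = np.sqrt(x_new @ cov @ x_new)
```

An interaction term can be added as an extra column of `X` that is 1 when both components are present in the experimental arm. This relaxes the additivity assumption, but the model then needs trials that actually contain that combination.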

Key outcome measures in obesity NMAs typically include weight-related metrics (body weight, body mass index, waist circumference), cardiometabolic parameters (blood pressure, lipid profile, glycemic indices), and safety outcomes [19]. Because obesity is a chronic condition, long-term outcomes such as weight maintenance, cardiovascular events, and diabetes incidence are particularly important. Evidence on these outcomes is often limited, however, because most trials have short follow-up. The increasing application of NMA in obesity research reflects the growing number of available interventions and the need for comparative effectiveness evidence to guide clinical and public health decision-making.

Comparative Analysis Across Disease States

Methodological Commonalities and Distinctions

The application of NMA in ulcerative colitis and obesity research reveals both important methodological commonalities and disease-specific considerations. Both fields benefit from the capacity of NMA to integrate diverse evidence sources and provide comparative effectiveness rankings for multiple interventions. In both contexts, treatment effect measures typically involve relative risks or odds ratios for dichotomous outcomes and mean differences for continuous outcomes [24]. The fundamental assumptions of transitivity and consistency apply equally across domains, as do approaches for evaluating heterogeneity and inconsistency.
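NMA models usually pool relative risks and odds ratios on the log scale. The sketch below shows how a single trial's risk ratio and its standard error are derived from two-arm event counts, using the usual large-sample variance of log RR. The counts are hypothetical, and the sketch assumes no zero cells, so no continuity correction is applied. An "RR of failure", as reported in Table 2, uses the number of patients who did not reach the endpoint as the event count.

```python
import math

def risk_ratio(events_t, n_t, events_c, n_c, z=1.96):
    """Risk ratio, SE of log RR, and 95% CI from two-arm event counts (no zero cells)."""
    rr = (events_t / n_t) / (events_c / n_c)
    se_log = math.sqrt(1 / events_t - 1 / n_t + 1 / events_c - 1 / n_c)
    lo = math.exp(math.log(rr) - z * se_log)
    hi = math.exp(math.log(rr) + z * se_log)
    return rr, se_log, (lo, hi)

# Hypothetical trial: 60/200 treatment failures vs 120/200 control failures
rr, se_log, (lo, hi) = risk_ratio(60, 200, 120, 200)
```

Here `math.log(rr)` and `se_log` are the quantities that would enter the NMA. The confidence interval is symmetric on the log scale, so `lo * hi` equals `rr ** 2`.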

However, key distinctions emerge in how interventions are conceptualized and analyzed. UC NMAs primarily focus on pharmacological agents with specific molecular targets, allowing relatively straightforward node definitions based on drug class and dosage [6] [24]. In contrast, obesity NMAs must contend with diverse intervention types (behavioral, pharmacological, surgical) and frequent multi-component approaches [19]. This complexity often necessitates component-based analytic approaches rather than intervention-based analyses. Additionally, obesity trials frequently employ stepped-care or adaptive designs that present challenges for standard NMA models.

Visualization and Reporting Standards

Effective visualization and reporting practices vary between UC and obesity NMAs based on the complexity of interventions and outcomes. UC NMAs typically employ standard network diagrams supplemented with ranking plots (such as beading plots) and league tables of comparative efficacy [22]. These visualizations effectively communicate complex ranking information across multiple endpoints to clinical audiences. Obesity NMAs, particularly those addressing complex multi-component interventions, often require more specialized visualizations like CNMA-UpSet plots or component combination maps to represent the evidence network adequately [21].

Reporting standards for both fields have been enhanced by the PRISMA-NMA statement, which provides a comprehensive checklist for transparent reporting of network meta-analyses [20]. However, additional specialized reporting elements may be needed for obesity NMAs addressing complex interventions, particularly regarding the node-making process and handling of multi-component strategies. The development of domain-specific extensions to PRISMA-NMA could further improve reporting quality and consistency in both fields.

Table 3: Essential Methodological Tools for Network Meta-Analysis

| Tool Category | Specific Tool/Resource | Function/Purpose |
|---|---|---|
| Statistical Software | R with netmeta package [22] | Frequentist NMA implementation |
| | WinBUGS/OpenBUGS [21] | Bayesian NMA implementation |
| Visualization Tools | Beading plot [22] | Display treatment rankings across outcomes |
| | CNMA-UpSet plot [21] | Visualize component combinations in evidence network |
| | Rank-heat plot [22] | Illustrate treatment hierarchies |
| Methodological Frameworks | PRISMA-NMA Statement [20] | Reporting guidelines for NMAs |
| | Cochrane Risk of Bias Tool [6] | Quality assessment of included studies |
| | GRADE for NMA [23] | Assessing certainty of evidence |

Advanced Methodological Considerations and Future Directions

Handling Complex Evidence Structures

As NMA applications evolve, methodological advancements continue to address increasingly complex evidence structures. Component network meta-analysis (CNMA) represents one significant advancement, particularly relevant for obesity research and other fields with multi-component interventions [21]. CNMA models decompose interventions into their constituent components, allowing estimation of component-specific effects and interactions between components [21]. This approach offers several advantages: increased statistical power through sharing information across related interventions, ability to predict effects of novel component combinations, and identification of active intervention ingredients [21].

Another methodological innovation addresses multi-arm trials. Their treatment contrasts share a common arm and are therefore correlated, and NMA models must account for this correlation [18]. Appropriate methods model these within-trial covariances, which preserves the randomized structure of multi-arm trials while integrating them into the broader evidence network. Methods for handling mixtures of different outcome types (e.g., binary, continuous, time-to-event) also continue to develop, broadening the applicability of NMA across clinical contexts.
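The source of the correlation can be shown directly. In a trial with a shared comparator (arm 0), every contrast "arm j minus arm 0" contains the same arm-0 estimate. Each contrast therefore has variance v0 + vj, and any two contrasts have covariance v0. The sketch below builds that covariance matrix, assuming independent arm-level estimates with known sampling variances. The variances used are hypothetical.

```python
import numpy as np

def multiarm_contrast_cov(arm_vars):
    """Covariance matrix of the contrasts (arm j minus arm 0), j = 1..k-1, in one trial.

    arm_vars[j] is the sampling variance of the arm-level estimate for arm j
    (e.g. squared SE of the arm mean); arm 0 is the shared comparator.
    """
    v = np.asarray(arm_vars, dtype=float)
    v0, others = v[0], v[1:]
    cov = np.full((others.size, others.size), v0)   # off-diagonal: shared arm variance
    cov[np.diag_indices_from(cov)] += others        # diagonal: v0 + v_j
    return cov

# Hypothetical three-arm trial: placebo (arm 0) and two active doses
cov = multiarm_contrast_cov([0.04, 0.05, 0.06])
corr = cov[0, 1] / np.sqrt(cov[0, 0] * cov[1, 1])   # about 0.42
```

Treating the two contrasts as independent two-arm trials would ignore this correlation and count the placebo arm twice, overstating the precision of the pooled estimates.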

Enhancing Robustness and Validity

Future methodological developments in NMA will likely focus on enhancing the robustness and validity of conclusions through more sophisticated approaches to handling heterogeneity, inconsistency, and bias. Multivariate network meta-analysis methods that simultaneously synthesize multiple correlated outcomes offer promise for capturing the multidimensional nature of treatment effects in conditions like UC and obesity [22]. Similarly, network meta-regression approaches allow investigation of potential treatment effect modifiers, helping to assess the transitivity assumption and explore sources of heterogeneity [18].

The integration of individual participant data (IPD) into NMA represents another important direction, potentially overcoming limitations of aggregate data NMA by enabling more thorough investigation of treatment-covariate interactions and enhanced assessment of transitivity [18]. While IPD-NMA requires greater resources and collaboration, it offers substantial benefits for understanding how treatment effects vary across patient subgroups in both UC and obesity contexts.

Network meta-analysis has emerged as an indispensable methodological tool for comparative effectiveness research in complex disease areas like ulcerative colitis and obesity. The case studies presented demonstrate how NMA provides valuable evidence for clinical decision-making by simultaneously comparing multiple interventions and ranking them according to efficacy and safety outcomes. In ulcerative colitis, NMA has identified upadacitinib, vedolizumab, and etrasimod as highly effective maintenance therapies while highlighting how treatment rankings may vary according to different endpoints and trial designs [6]. In obesity research, NMA faces additional methodological challenges due to complex, multi-component interventions but offers unique insights through component-based approaches.

The continued evolution of NMA methodology—including advanced visualization techniques, component network meta-analysis, and individual participant data approaches—will further enhance its application across diverse therapeutic areas. As these methods mature, they will provide increasingly robust and nuanced evidence to guide treatment selection, clinical guideline development, and future research priorities. For researchers and drug development professionals, mastery of NMA principles and applications is becoming essential for generating and interpreting comparative effectiveness evidence in an era of multiple treatment options.

Network Meta-Analysis (NMA), sometimes called multiple treatments meta-analysis, is a powerful statistical methodology that enables the simultaneous comparison of multiple interventions, even when direct head-to-head evidence is absent. Within the context of drug efficacy research, it provides a formal framework for integrating evidence from a set of randomized controlled trials (RCTs) to obtain a complete ranking of available treatment options. The core of an NMA is its representation as a network, a graphical structure where nodes represent the different interventions or treatments being compared (e.g., Drug A, Drug B, placebo), and edges represent the direct comparisons between them that have been studied in existing trials. This network of comparisons allows for the estimation of relative treatment effects between any two interventions in the network, based on both direct and indirect evidence. The use of NMA has become increasingly central to evidence-based medicine, informing clinical guidelines and health technology assessments by providing a comprehensive summary of the relative efficacy and safety of all available therapies for a given condition.
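The simplest case of combining direct and indirect evidence is the anchored (Bucher) indirect comparison. If d_AB and d_AC are the effects of B and C relative to a common comparator A (for example placebo) on the log scale, the indirect estimate of C relative to B is d_AC − d_AB, and its variance is the sum of the two variances. The sketch below computes this estimate. It then pools the result with a direct B-versus-C estimate by inverse-variance weighting, which is only appropriate if the consistency assumption holds. All numbers are hypothetical log odds ratios.

```python
import math

def indirect(d_ab, se_ab, d_ac, se_ac):
    """Anchored indirect estimate of C vs B through common comparator A."""
    d_bc = d_ac - d_ab
    se_bc = math.sqrt(se_ab ** 2 + se_ac ** 2)
    return d_bc, se_bc

def pool(d1, se1, d2, se2):
    """Inverse-variance (fixed-effect) combination of two independent estimates."""
    w1, w2 = 1 / se1 ** 2, 1 / se2 ** 2
    return (w1 * d1 + w2 * d2) / (w1 + w2), math.sqrt(1 / (w1 + w2))

# Hypothetical log odds ratios vs placebo (A) for drugs B and C
d_bc_ind, se_bc_ind = indirect(-0.4, 0.15, -0.7, 0.20)     # -0.30, SE 0.25
# Hypothetical direct head-to-head estimate of C vs B
d_bc_mix, se_bc_mix = pool(d_bc_ind, se_bc_ind, -0.2, 0.25)
```

The indirect estimate is less precise than either of its inputs. This is why a well-connected network that also contains direct evidence yields more precise estimates.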

Core Architectural Components of a Network

The architecture of a network in an NMA is built upon two fundamental components: nodes and edges. A precise understanding of these elements is critical for both constructing and interpreting a network.

Nodes: In a drug efficacy NMA, each node corresponds to a specific pharmacological intervention, a non-pharmacological therapy, or a control condition (such as a placebo or standard of care). For example, in an NMA for obesity management, nodes could represent various obesity management medications (OMMs) like orlistat, semaglutide, liraglutide, and tirzepatide, alongside a placebo node [25]. The selection of nodes defines the scope of the clinical question being addressed.

Edges: An edge, or a line connecting two nodes, signifies that a direct comparison between those two interventions has been made in at least one included RCT. The presence of an edge indicates the availability of direct evidence. The pattern of edges determines the network's connectivity. A well-connected network, where many paths of comparison exist, generally leads to more robust and reliable estimates of relative effects.
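Before any model is fitted, one basic check is whether every node can be reached from every other node through edges. Relative effects can only be estimated within a connected network. The sketch below finds the connected subnetworks with a breadth-first search over a list of direct comparisons. The node names follow the obesity example above, but the edges are hypothetical and are not the evidence base of [25].

```python
from collections import defaultdict, deque

def connected_subnetworks(edges):
    """Group treatment nodes into connected subnetworks from a list of direct comparisons."""
    graph = defaultdict(set)
    for a, b in edges:            # each edge = at least one RCT comparing a and b
        graph[a].add(b)
        graph[b].add(a)
    seen, groups = set(), []
    for start in graph:
        if start in seen:
            continue
        queue, group = deque([start]), set()
        seen.add(start)
        while queue:              # breadth-first search from this start node
            node = queue.popleft()
            group.add(node)
            for nxt in graph[node] - seen:
                seen.add(nxt)
                queue.append(nxt)
        groups.append(group)
    return groups

# Hypothetical direct comparisons
edges = [
    ("placebo", "orlistat"),
    ("placebo", "semaglutide"),
    ("placebo", "liraglutide"),
    ("semaglutide", "tirzepatide"),
]
groups = connected_subnetworks(edges)
```

A single group means that every pair of treatments can be compared. With more than one group, the comparisons between groups cannot be estimated without additional evidence or assumptions.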